Difference between Object Detection vs. Instance Segmentation

Difference between Faster RCNN vs. MASK RCNN

Define Mask RCNN

Model Architecture of Mask RCNN

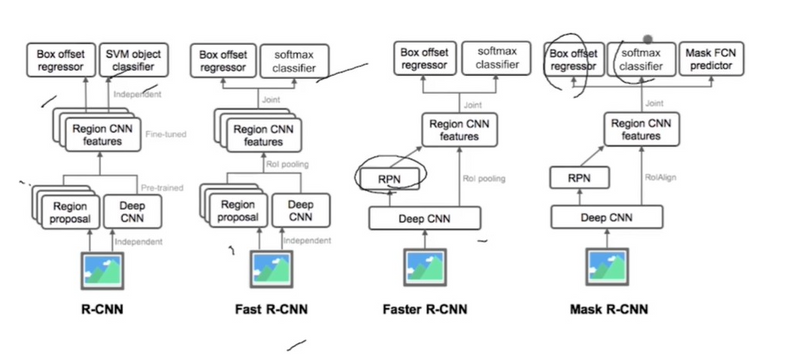

Faster R-CNN and Mask R-CNN are both widely used architectures for object detection, but they serve different purposes and have distinct features. Here's a comparison of the two:

Object Detection vs. Instance Segmentation

Image Classification helps us to classify what is contained in an image. Image Localization will specify the location of single object in an image whereas Object Detection specifies the location of multiple objects in the image. Finally, Image Segmentation will create a pixel wise mask of each object in the images. We will be able to identify the shapes of different objects in the image using Image Segmentation.

Difference between Faster RCNN vs. MASK RCNN

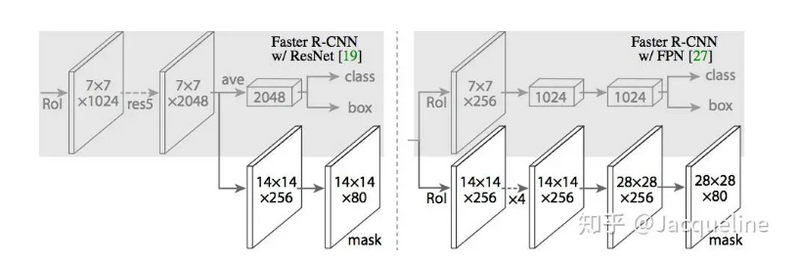

Faster R-CNN is primarily designed for object detection, where the goal is to localize objects within an image and classify them into predefined categories.

Mask R-CNN extends Faster R-CNN to also perform instance segmentation, which involves not only detecting objects but also segmenting each instance of the object with pixel-level accuracy, providing a precise mask for each object in the image.

Architecture:

Faster R-CNN consists of two main components:

a Region Proposal Network (RPN) for generating region proposals and a Fast R-CNN network for object detection.

Mask R-CNN builds upon Faster R-CNN by adding an additional branch to predict segmentation masks for each region proposal generated by the RPN. This branch operates in parallel with the existing classification and bounding box regression branches.

Output:

Faster R-CNN outputs bounding boxes and class labels for detected objects within an image.

Mask R-CNN extends Faster R-CNN by also providing pixel-level segmentation masks for each detected object instance, allowing for more detailed understanding of object shapes and boundaries.

Use Cases:

Faster R-CNN is suitable for tasks where object detection and classification are sufficient, such as object localization, counting, and tracking.

Mask R-CNN is preferred for tasks requiring precise instance-level segmentation, such as image segmentation, object counting, and instance-aware image editing.

Performance:

Mask R-CNN typically achieves slightly lower detection performance compared to Faster R-CNN due to the additional computational overhead of generating segmentation masks.

However, Mask R-CNN provides more detailed and fine-grained information about object shapes and boundaries, making it more suitable for certain applications requiring pixel-level accuracy.

Define Mask RCNN

Mask rcnn means segment rcnn or segment image combines object detection and instance segmentation

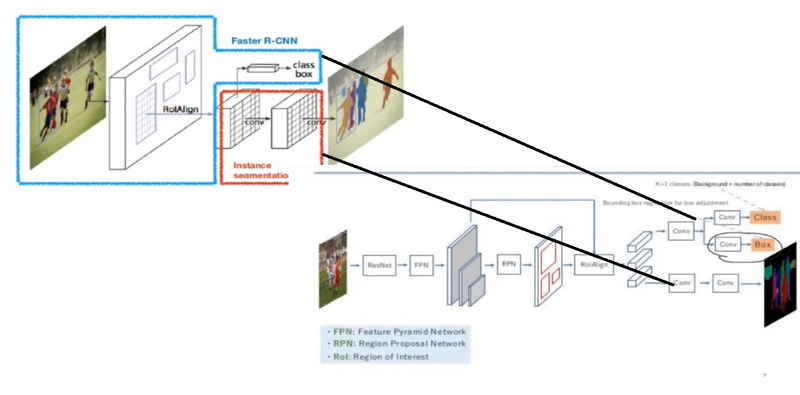

Model Architecture of Mask RCNN

The key innovation of Mask R-CNN lies in its ability to perform pixel-wise instance segmentation alongside object detection. This is achieved through the addition of an extra "mask head" branch, which generates precise segmentation masks for each detected object. This enables fine-grained pixel-level boundaries for accurate and detailed instance segmentation.

Two critical enhancements integrated into Mask R-CNN are ROIAlign and Feature Pyramid Network (FPN). ROIAlign addresses the limitations of the traditional ROI pooling method by using bilinear interpolation during the pooling process. This mitigates misalignment issues and ensures accurate spatial information capture from the input feature map, leading to improved segmentation accuracy, particularly for small objects.

FPN plays a pivotal role in feature extraction by constructing a multi-scale feature pyramid. This pyramid incorporates features from different scales, allowing the model to gain a more comprehensive understanding of object context and facilitating better object detection and segmentation across a wide range of object sizes

Mask R-CNN Architecture

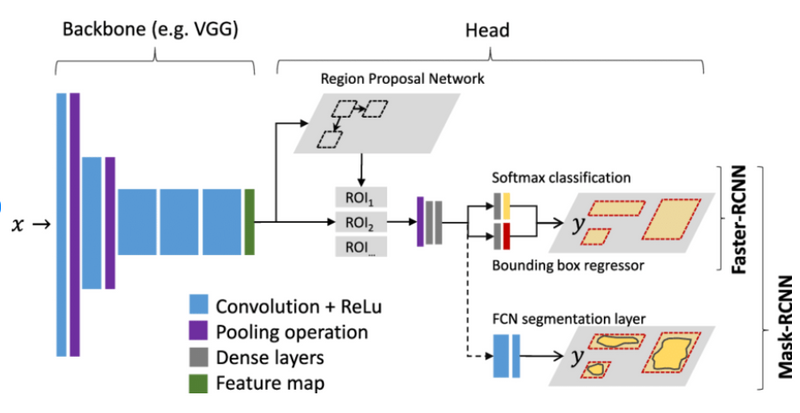

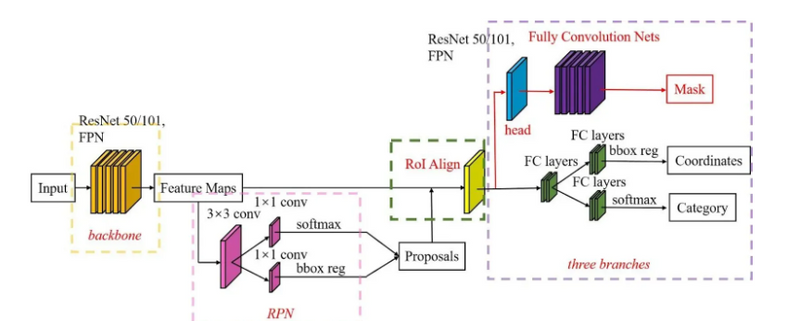

The architecture of Mask R-CNN is built upon the Faster R-CNN architecture, with the addition of an extra "mask head" branch for pixel-wise segmentation. The overall architecture can be divided into several key components:

Backbone Network

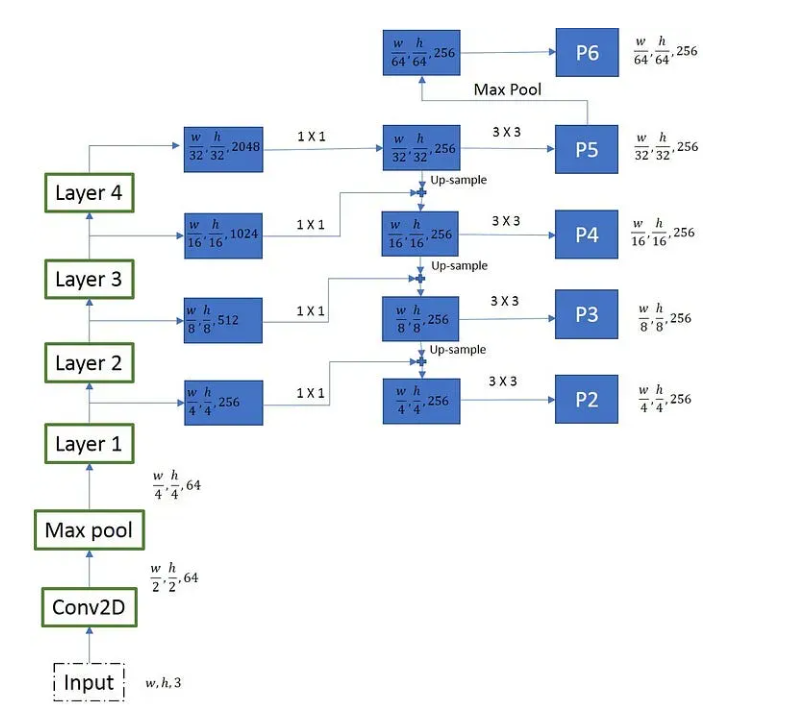

The backbone network in Mask R-CNN is typically a pre-trained convolutional neural network, such as ResNet or ResNeXt. This backbone processes the input image and extracts high-level features. An FPN is then added on top of this backbone network to create a feature pyramid.

FPNs are designed to address the challenge of handling objects of varying sizes and scales in an image. The FPN architecture creates a multi-scale feature pyramid by combining features from different levels of the backbone network. This pyramid includes features with varying spatial resolutions, from high-resolution features with rich semantic information to low-resolution features with more precise spatial details.

The FPN in Mask R-CNN consists of the following steps:

Feature Extraction: The backbone network extracts high-level features from the input image.

Feature Fusion: FPN creates connections between different levels of the backbone network to create a top-down pathway. This top-down pathway combines high-level semantic information with lower-level feature maps, allowing the model to reuse features at different scales.

Feature Pyramid: The fusion process generates a multi-scale feature pyramid, where each level of the pyramid corresponds to different resolutions of features. The top level of the pyramid contains the highest-resolution features, while the bottom level contains the lowest-resolution features.

The feature pyramid generated by FPN enables Mask R-CNN to handle objects of various sizes effectively. This multi-scale representation allows the model to capture contextual information and accurately detect objects at different scales within the image.

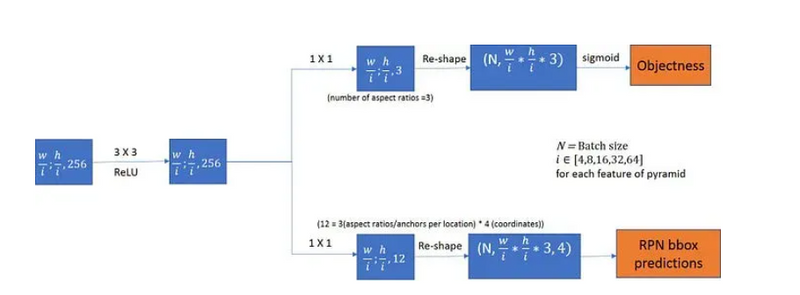

Region Proposal Network (RPN)

The RPN is responsible for generating region proposals or candidate bounding boxes that might contain objects within the image. It operates on the feature map produced by the backbone network and proposes potential regions of interest.

ROIAlign

After the RPN generates region proposals, the ROIAlign (Region of Interest Align) layer is introduced. This step helps to overcome the misalignment issue in ROI pooling.

ROIAlign plays a crucial role in accurately extracting features from the input feature map for each region proposal, ensuring precise pixel-wise segmentation in instance segmentation tasks.

The primary purpose of ROIAlign is to align the features within a region of interest (ROI) with the spatial grid of the output feature map. This alignment is crucial to prevent information loss that can occur when quantizing the ROI's spatial coordinates to the nearest integer (as done in ROI pooling).

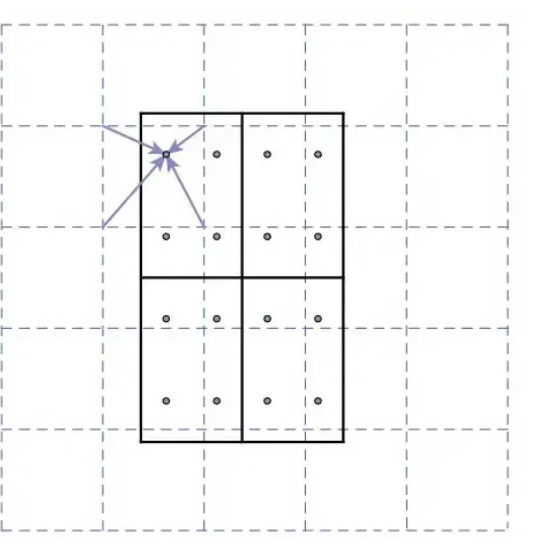

The ROIAlign process involves the following steps:

Input Feature Map: The process begins with the input feature map, which is typically obtained from the backbone network. This feature map contains high-level semantic information about the entire image.

Region Proposals: The Region Proposal Network (RPN) generates region proposals (candidate bounding boxes) that might contain objects of interest within the image.

Dividing into Grids: Each region proposal is divided into a fixed number of equal-sized spatial bins or grids. These grids are used to extract features from the input feature map corresponding to the region of interest.

Bilinear Interpolation: Unlike ROI pooling, which quantizes the spatial coordinates of the grids to the nearest integer, ROIAlign uses bilinear interpolation to calculate the pooling contributions for each grid. This interpolation ensures a more precise alignment of the features within the ROI.

Output Features: The features obtained from the input feature map, aligned with each grid in the output feature map, are used as the representative features for each region proposal. These aligned features capture fine-grained spatial information, which is crucial for accurate segmentation.

By using bilinear interpolation during the pooling process, ROIAlign significantly improves the accuracy of feature extraction for each region proposal, mitigating misalignment issues.

This precise alignment enables Mask R-CNN to generate more accurate segmentation masks, especially for small objects or regions that require fine details to be preserved. As a result, ROIAlign contributes to the strong performance of Mask R-CNN in instance segmentation tasks.

Mask Head

The Mask Head is an additional branch in Mask R-CNN, responsible for generating segmentation masks for each region proposal. The head uses the aligned features obtained through ROIAlign to predict a binary mask for each object, delineating the pixel-wise boundaries of the instances. The Mask Head is typically composed of several convolutional layers followed by upsample layers (deconvolution or transposed convolution layers)

During training, the model is jointly optimized using a combination of classification loss, bounding box regression loss, and mask segmentation loss. This allows the model to learn to simultaneously detect objects, refine their bounding boxes, and produce precise segmentation masks.

Top comments (0)