Introduction

The DataOps Certified Professional (DOCP) is a structured credential designed for engineers who want to build, automate, and scale modern data platforms using production-grade practices. If you work with pipelines, analytics systems, ML workflows, or cloud data infrastructure, this guide is for you.

The certification program is delivered through the official DataOps Certified Professional (DOCP) course page, hosted on DevOpsSchool. It focuses on real operational data engineering, not academic theory.

Data systems today are complex, distributed, and deeply integrated with business decision-making. As organizations move toward analytics-driven operations, DataOps becomes a strategic engineering discipline. This guide helps you understand whether DOCP aligns with your career goals, experience level, and long-term growth strategy.

If you are planning your next step in DevOps, SRE, Data Engineering, or platform roles, this document will help you make a practical and informed decision.

What is the DataOps Certified Professional (DOCP)?

DataOps Certified Professional (DOCP) represents structured validation of modern data engineering and operational excellence practices. It is built around automation, pipeline reliability, governance, observability, and scalable architectures.

Unlike traditional data engineering programs that focus primarily on SQL or analytics tooling, DOCP emphasizes real-world pipeline deployment, CI/CD for data workflows, monitoring of data systems, and maintaining data quality in production.

The certification aligns closely with cloud-native architecture patterns. It reflects how modern organizations build streaming data platforms, batch ETL systems, lakehouse architectures, and ML-ready infrastructure.

Its core philosophy is simple: treat data systems like software systems—with version control, testing, automation, rollback mechanisms, and monitoring.
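As a minimal sketch of what "testing" means for a data system, the example below pairs a small transformation with a unit test that could run in CI before a pipeline change is deployed. The function name `dedupe_latest`, the field names `id`, `updated_at`, and `status`, and the sample rows are all hypothetical and are not taken from the DOCP curriculum.

```python
def dedupe_latest(rows):
    """Keep only the most recent row per id, judged by the 'updated_at' field."""
    latest = {}
    for row in rows:
        current = latest.get(row["id"])
        # ISO-8601 date strings compare correctly as plain strings.
        if current is None or row["updated_at"] > current["updated_at"]:
            latest[row["id"]] = row
    # Sort by id so the output order is deterministic and easy to assert on.
    return sorted(latest.values(), key=lambda r: r["id"])


def test_dedupe_latest_keeps_newest_row():
    rows = [
        {"id": 1, "updated_at": "2024-01-01", "status": "new"},
        {"id": 1, "updated_at": "2024-02-01", "status": "paid"},
        {"id": 2, "updated_at": "2024-01-15", "status": "new"},
    ]
    result = dedupe_latest(rows)
    assert [r["status"] for r in result] == ["paid", "new"]


test_dedupe_latest_keeps_newest_row()
```

Because the transformation is a pure function, it can be versioned, reviewed, and tested like any other piece of application code.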

Who Should Pursue DataOps Certified Professional (DOCP)?

DataOps Certified Professional (DOCP) is ideal for working data engineers who want to formalize and operationalize their knowledge. If you are already building pipelines but want to apply DevOps-style rigor, this certification fits well.

DevOps engineers transitioning into data platforms will find it extremely useful. SREs responsible for data platform uptime and reliability also benefit significantly.

Cloud professionals managing analytics workloads on AWS, Azure, or GCP gain structured exposure to scaling and cost management practices around data workloads.

Engineering managers overseeing analytics teams can use DOCP to better evaluate design decisions and implement governance standards across teams. It is equally relevant in India’s growing data ecosystem and global markets where platform reliability is critical.

Why DataOps Certified Professional (DOCP) is Valuable in 2026 and Beyond

Data volumes continue to explode across industries. Enterprises rely on real-time dashboards, predictive models, and AI-driven recommendations. Data systems are no longer optional.

DataOps Certified Professional (DOCP) equips engineers to manage this scale effectively. It prepares professionals to build systems that are automated, monitored, version-controlled, and resilient.

Technology tools will change—Spark today, something else tomorrow. But principles like automation, observability, testing, and governance remain constant. DOCP focuses on those fundamentals.

From a career perspective, it offers strong return on investment. Engineers with operational data skills are in short supply, and organizations increasingly prefer candidates who understand both DevOps and data engineering.

DataOps Certified Professional (DOCP) Certification Overview

The program is delivered via the official DataOps Certified Professional (DOCP) course, hosted on DevOpsSchool. It follows a structured assessment model combining theoretical understanding with hands-on implementation expectations.

The certification framework is owned and curated by senior practitioners who emphasize real deployment scenarios rather than classroom-only knowledge.

Assessment typically validates your ability to design, build, automate, monitor, and troubleshoot pipeline architectures.

The structure is progressive. Candidates can begin at foundational levels and advance toward professional and architect-level capabilities.

DataOps Certified Professional (DOCP) Certification Tracks & Levels

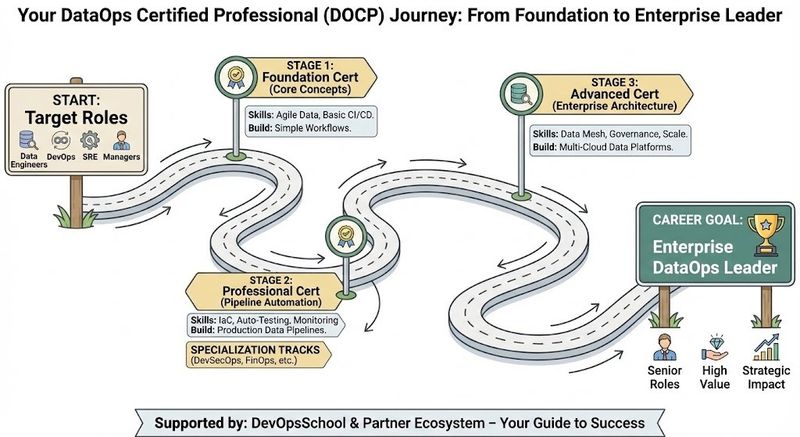

The certification is structured across three levels:

Foundation Level – Covers fundamentals of DataOps principles, CI/CD for data, pipeline architecture basics, and core observability concepts.

Professional Level – Focuses on enterprise-grade implementations, complex orchestration workflows, governance frameworks, and scaling strategies.

Advanced/Architect Level – Emphasizes system design, multi-cloud data strategy, cost optimization, and large-scale reliability engineering.

Specialization tracks may align with DevOps-integrated DataOps, SRE-driven DataOps reliability, AI/ML data lifecycle automation, and FinOps optimization for data workloads.

These levels mirror career growth—from engineer to senior engineer to architect or lead.

Complete DataOps Certified Professional (DOCP) Certification Table

| Track | Level | Who it’s for | Prerequisites | Skills Covered | Recommended Order |
| --- | --- | --- | --- | --- | --- |
| Core DataOps | Foundation | Beginners, junior data engineers | Basic SQL, cloud familiarity | CI/CD for data, pipeline basics, monitoring fundamentals | 1 |
| Enterprise DataOps | Professional | Mid-level engineers | Pipeline experience, scripting | Orchestration, testing frameworks, governance, scaling | 2 |
| DataOps Architect | Advanced | Senior engineers, architects | 4–6 years of experience | Architecture design, reliability, cost optimization | 3 |
| DevOps-Integrated DataOps | Professional | DevOps engineers | CI/CD knowledge | Automation pipelines, infra-as-code for data | After Foundation |
| FinOps-Aligned DataOps | Professional | Cost-conscious data teams | Cloud billing knowledge | Workload optimization, storage lifecycle planning | After Foundation |

Detailed Guide for Each DataOps Certified Professional (DOCP) Certification

DataOps Certified Professional (DOCP) – Foundation Level

What it is

This certification validates core DataOps knowledge. It ensures you understand pipeline fundamentals, automation basics, and operational monitoring.

It proves you can move from manual data workflows to automated systems.

Who should take it

Junior data engineers.

DevOps engineers entering data.

Cloud engineers managing analytics workloads.

Skills you’ll gain

Designing basic ETL/ELT pipelines

Implementing CI/CD for data workflows

Setting up monitoring and alerting

Version control for pipeline configurations

Real-world projects you should be able to do

Deploy automated batch pipelines

Integrate Git-based version control with workflows

Configure alert systems for failed jobs

Implement basic data quality checks
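To make the last project concrete, here is a minimal sketch of a basic data quality check, assuming a batch arrives as a list of dictionaries. The names `QualityReport` and `check_quality`, the column names, and the sample batch are hypothetical illustrations, not part of any official DOCP material.

```python
from dataclasses import dataclass


@dataclass
class QualityReport:
    total_rows: int
    null_violations: int
    duplicate_keys: int

    @property
    def passed(self) -> bool:
        # A batch passes only when no rule was violated.
        return self.null_violations == 0 and self.duplicate_keys == 0


def check_quality(rows, key, required):
    """Count rows with missing required fields and rows that repeat a key."""
    seen = set()
    null_violations = 0
    duplicate_keys = 0
    for row in rows:
        if any(row.get(col) is None for col in required):
            null_violations += 1
        value = row.get(key)
        if value in seen:
            duplicate_keys += 1
        seen.add(value)
    return QualityReport(len(rows), null_violations, duplicate_keys)


# Hypothetical sample batch: one missing amount, one repeated order_id.
batch = [
    {"order_id": 1, "amount": 10.0},
    {"order_id": 2, "amount": None},
    {"order_id": 2, "amount": 5.0},
]
report = check_quality(batch, key="order_id", required=["order_id", "amount"])
if not report.passed:
    print(f"Quality gate failed: {report}")
```

In a real pipeline, a failed report would typically stop the load step and trigger an alert rather than just print a message.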

Preparation plan

7–14 days: Review fundamentals and lab practice.

30 days: Build sample pipelines and automate deployments.

60 days: Practice troubleshooting and real deployment simulations.

Common mistakes

Ignoring automation principles.

Memorizing tools instead of understanding workflows.

Skipping monitoring design practices.

Best next certification after this

Same-track option: Professional Level

Cross-track option: DevOps-related certification

Leadership option: Engineering management fundamentals

DataOps Certified Professional (DOCP) – Professional Level

What it is

This level validates enterprise DataOps competence. It focuses on scalable orchestration, security integration, and governance.

It confirms readiness for mid-to-senior roles.

Who should take it

Data engineers with real pipeline experience.

SREs managing data platform uptime.

Platform engineers building internal analytics systems.

Skills you’ll gain

Advanced orchestration using workflow tools

Data contract and validation design

Governance automation

Incident handling and RCA processes

Real-world projects you should be able to do

Deploy distributed data pipelines

Implement automated rollback mechanisms

Enforce schema validation rules

Perform root cause analysis for pipeline failures
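Schema enforcement, one of the projects listed above, can be sketched as follows. This is a simplified illustration that assumes records are plain dictionaries and the contract is a mapping of field names to Python types. `EXPECTED_SCHEMA`, `validate_record`, and `split_batch` are hypothetical names chosen for this example.

```python
# Hypothetical data contract: field name -> required Python type.
EXPECTED_SCHEMA = {"user_id": int, "email": str, "signup_ts": str}


def validate_record(record, schema):
    """Return a list of violations; an empty list means the record conforms."""
    errors = []
    for field, expected_type in schema.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            actual = type(record[field]).__name__
            errors.append(f"{field}: expected {expected_type.__name__}, got {actual}")
    for field in record:
        if field not in schema:
            errors.append(f"unexpected field: {field}")
    return errors


def split_batch(records, schema):
    """Separate conforming records from rejected ones, keeping the reasons."""
    valid, rejected = [], []
    for record in records:
        errors = validate_record(record, schema)
        if errors:
            rejected.append((record, errors))
        else:
            valid.append(record)
    return valid, rejected


records = [
    {"user_id": 1, "email": "a@example.com", "signup_ts": "2024-01-01"},
    {"user_id": "2", "email": "b@example.com"},
]
valid, rejected = split_batch(records, EXPECTED_SCHEMA)
```

Keeping the rejection reasons alongside each rejected record also gives root cause analysis a starting point when a pipeline run fails.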

Preparation plan

7–14 days: Audit current knowledge gaps.

30 days: Build complex multi-stage pipelines.

60 days: Simulate enterprise-scale deployment scenarios.

Common mistakes

Underestimating governance complexity.

Ignoring cost factors.

Overlooking observability integration.

Best next certification after this

Same-track option: Architect Level

Cross-track option: FinOps-focused certification

Leadership option: Technical leadership certification

DataOps Certified Professional (DOCP) – Architect Level

What it is

This certification validates large-scale data system architecture capabilities.

It focuses on multi-cloud data design and strategic oversight.

Who should take it

Senior engineers.

Enterprise architects.

Data platform leads.

Skills you’ll gain

Designing distributed data architectures

Multi-cloud pipeline optimization

Advanced cost governance strategies

High-availability architecture planning

Real-world projects you should be able to do

Architect data lakehouse infrastructure

Design cross-region failover systems

Implement end-to-end observability frameworks

Optimize petabyte-scale workloads

Preparation plan

7–14 days: Review architecture patterns.

30 days: Evaluate case studies.

60 days: Design full enterprise reference architecture.

Common mistakes

Focusing only on tools.

Ignoring long-term scalability.

Skipping disaster recovery planning.

Best next certification after this

Same-track option: Specialized domain certifications

Cross-track option: AIOps or MLOps paths

Leadership option: Senior engineering management programs

Choose Your Learning Path

DevOps Path

Start with Foundation. Move toward Professional focusing on automation integration. Combine CI/CD pipelines for both application and data workloads. Advance toward Architect for strategic pipeline infrastructure.

DevSecOps Path

Begin with Foundation. Add governance and compliance automation. Strengthen security controls around data pipelines. Integrate policy enforcement and secrets management.

SRE Path

Focus on monitoring, reliability engineering, and incident management. Professional level suits SREs managing data services uptime. Architect level prepares you for reliability leadership roles.

AIOps / MLOps Path

Use Foundation to understand data pipelines. Build toward ML data lifecycle management. Combine with model versioning and monitoring systems for full-stack intelligence.

DataOps Path

Progress sequentially through Foundation, Professional, and Architect. Focus on scaling complexity and organizational influence.

FinOps Path

Combine Professional level with cloud cost optimization knowledge. Focus heavily on storage lifecycle and processing efficiency.

Role → Recommended DataOps Certified Professional (DOCP) Certifications

| Role | Recommended Certifications |
| --- | --- |
| DevOps Engineer | Foundation + Professional |
| SRE | Professional |
| Platform Engineer | Professional + Architect |
| Cloud Engineer | Foundation |
| Security Engineer | Foundation + Governance-focused Professional |
| Data Engineer | All Levels |
| FinOps Practitioner | Professional |
| Engineering Manager | Professional or Architect |

Next Certifications to Take After DataOps Certified Professional (DOCP)

Same Track Progression

Move deeper into architectural specialization. Focus on governance frameworks or large-scale distributed systems.

Cross-Track Expansion

Add certifications in DevSecOps, MLOps, or FinOps. This broadens impact across teams.

Leadership & Management Track

Transition toward engineering leadership programs. Focus on decision-making, budgeting, and strategic planning skills.

Training & Certification Support Providers for DataOps Certified Professional (DOCP)

DevOpsSchool

DevOpsSchool offers structured instructor-led programs with industry practitioners. Their approach emphasizes real labs, production-grade examples, and mentoring sessions. Learners benefit from scenario-based learning instead of tool demonstrations alone. The training includes architecture discussions, troubleshooting sessions, and structured preparation guidance tailored for working professionals.

Cotocus

Cotocus supports enterprise adoption services around DevOps and data platforms. They provide consulting-oriented guidance that aligns certification preparation with organizational implementation. This makes it suitable for teams wanting real transformation alongside certification.

Scmgalaxy

Scmgalaxy focuses on practical skill-building and tool exposure. It is suitable for professionals who want structured foundational understanding before moving into enterprise complexity.

BestDevOps

BestDevOps emphasizes exam-focused preparation combined with applied labs. It caters to individuals who prefer structured curriculum flow and milestone-based progress tracking.

devsecopsschool.com

devsecopsschool.com integrates security thinking into engineering certifications. It benefits professionals who want secure DataOps integration.

sreschool.com

sreschool.com aligns DataOps practices with reliability engineering. It helps SREs understand uptime and monitoring integration for data services.

aiopsschool.com

aiopsschool.com focuses on intelligent automation and observability enhancements. It supports professionals exploring AI-driven operations.

dataopsschool.com

dataopsschool.com offers focused DataOps-centered programs for candidates wanting domain specialization and deeper engagement.

finopsschool.com

finopsschool.com helps align cloud cost optimization with operational execution. It benefits professionals integrating DataOps with financial governance.

Frequently Asked Questions (General – 12 Questions)

Is this certification difficult?

Moderate difficulty, depending on experience.

How long does preparation take?

30–60 days for most professionals.

Is prior DevOps knowledge required?

Basic understanding helps significantly.

Does it require coding?

Yes, scripting familiarity is beneficial.

Is it globally recognized?

It is relevant globally where data engineering maturity exists.

Is it suitable for beginners?

Foundation level is accessible.

Is it theoretical?

Strongly practical and production-focused.

Does it include cloud concepts?

Yes, cloud-native data systems are covered.

Is it suitable for managers?

Professional and Architect levels are helpful.

What is the ROI?

Strong due to growing demand for operational data skills.

Does it help in salary growth?

Yes, especially in data-driven enterprises.

Can it complement MLOps?

Yes, significantly.

FAQs on DataOps Certified Professional (DOCP)

How is it different from data engineering certifications?

It integrates DevOps-style automation and governance into data systems.

Does it cover streaming systems?

Yes, pipeline reliability includes streaming considerations.

Is Kubernetes knowledge required?

Helpful but not mandatory at foundation stage.

Does it help in AI projects?

Strong pipeline discipline benefits AI workflows.

Is it tool-specific?

It emphasizes principles over specific tools.

How relevant is it in India?

Highly relevant due to rapid data platform adoption.

Can SREs pursue it?

Yes, especially Professional and Architect levels.

Does it improve system design capability?

Architect level significantly enhances system thinking.

Final Thoughts: Is DataOps Certified Professional (DOCP) Worth It?

If your career touches data systems in any capacity, the DataOps Certified Professional (DOCP) is a practical investment. It builds durable engineering habits instead of teaching temporary tooling trends.

It is especially valuable for professionals who want to bridge DevOps discipline with data platform execution.

Do not pursue it only for a title. Pursue it if you are ready to build reliable, automated, scalable data systems. If that aligns with your direction, this certification is worth your time and effort.
