what is loss function and gradient calculation

Explain Gradient Descent is an optimization algorithm

How to decide learning rate in optimizer gradient descent

Explain different type of optimizer gradient descent

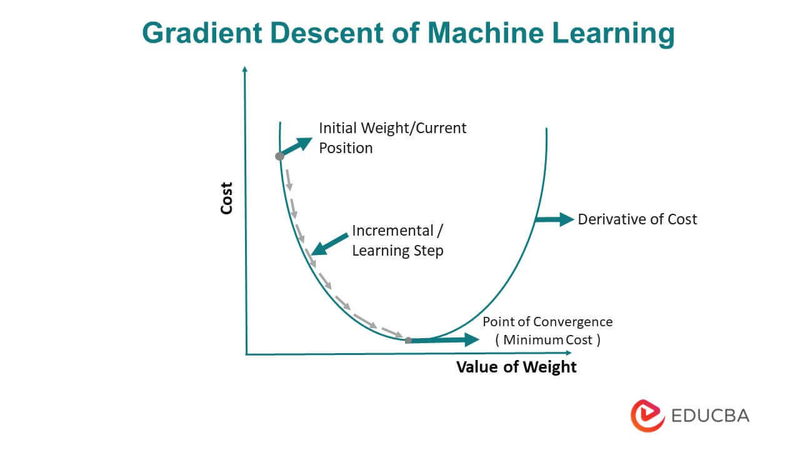

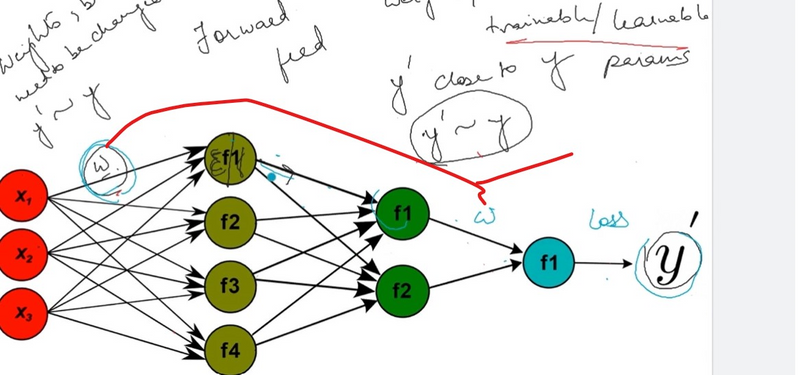

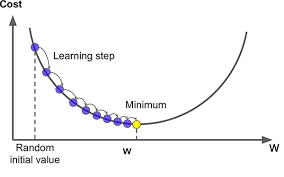

Gradient Descent is an optimization algorithm commonly used in training neural networks. Its primary purpose is to minimize a loss function by adjusting the parameters (weights and biases) of the network. Here's an explanation of how Gradient Descent works in the context of a neural network:

Loss Function:

In a neural network, you have a loss function (often referred to as the cost or objective function), which measures how well the network's predictions match the actual target values (ground truth).

The goal of training is to minimize this loss function.

Parameters (Weights and Biases):

Neural networks consist of layers of neurons, and each connection between neurons has an associated weight, and each neuron has a bias term.

These weights and biases are the parameters that the Gradient Descent algorithm adjusts during training to minimize the loss.

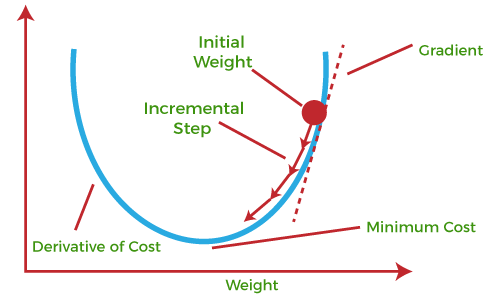

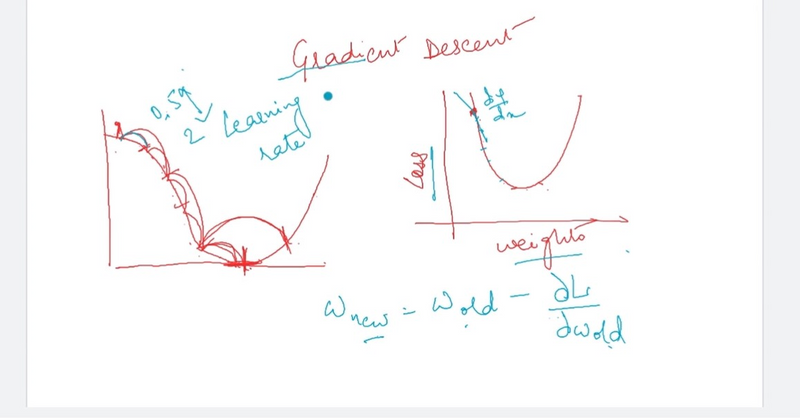

Gradient Calculation:

Gradient Descent calculates the gradient of the loss function with respect to the network's parameters. The gradient points in the direction of the steepest increase in the loss.

The gradient is calculated using the chain rule and backpropagation, which allows the network to efficiently compute gradients layer by layer.

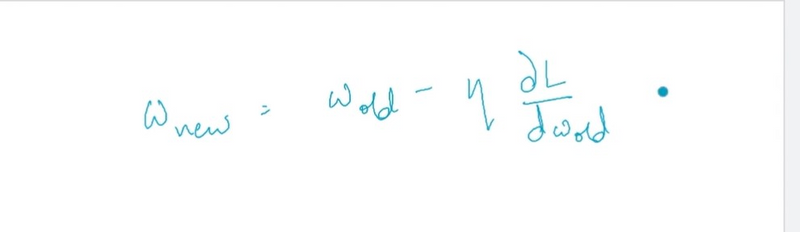

Parameter Update:

Gradient Descent updates the parameters (weights and biases) by moving in the opposite direction of the gradient.

The update rule typically follows this form: parameter_new = parameter_old - learning_rate * gradient, where the learning rate is a hyperparameter that controls the size of the steps.

Iteration:

This process of calculating gradients, updating parameters, and repeating the steps is iteratively performed for a specified number of iterations (epochs) or until convergence.

The network keeps learning and adjusting its parameters to minimize the loss.

Example:

Let's consider a simple example where you have a neural network for binary classification. You want to distinguish between cats and dogs based on image features.

The loss function measures the difference between the network's predictions and the true labels (e.g., 0 for cats and 1 for dogs).

During training, Gradient Descent calculates the gradients of the loss with respect to the weights and biases.

It then updates the weights and biases in the opposite direction of the gradients, gradually reducing the loss.

The process is repeated over multiple epochs until the network learns to make accurate predictions.

Learning Rate:

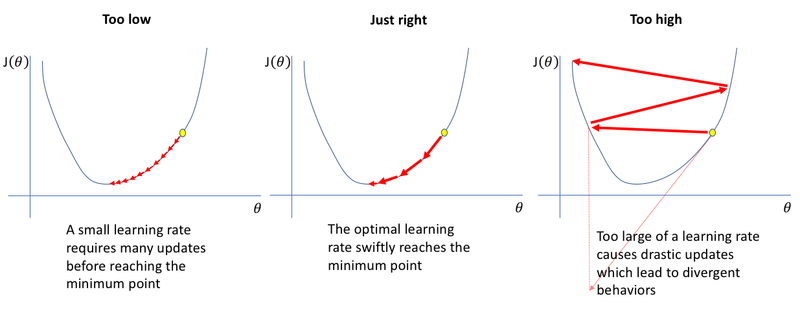

The learning rate is a critical hyperparameter in Gradient Descent. It determines the step size in parameter space. If the learning rate is too large, the optimization may overshoot the minimum and lead to divergence. If it's too small, the optimization may converge very slowly.

There are variations of Gradient Descent, such as Stochastic Gradient Descent (SGD), Mini-batch Gradient Descent, and adaptive optimizers like Adam and RMSprop, which enhance the convergence and learning speed, making them suitable for training deep neural networks. However, the core idea of iteratively updating parameters based on gradients remains the same.

How to decide learning rate in optimizer gradient descent

Choosing an appropriate learning rate for the Gradient Descent optimization algorithm in a neural network is a crucial hyperparameter tuning task. An optimal learning rate can lead to faster convergence and more accurate models, while a poorly chosen learning rate can result in slow training, divergence, or getting stuck in local minima. Here are some methods and guidelines for deciding the learning rate:

Grid Search:

One straightforward approach is to perform a grid search over a range of learning rates. You can define a range of learning rates to explore, such as [0.1, 0.01, 0.001, 0.0001], and train your model with each learning rate.

Evaluate the performance of the model on a validation dataset and choose the learning rate that results in the best performance.

Learning Rate Schedules:

Learning rate schedules decrease the learning rate over time. Common schedules include step decay, exponential decay, or 1/t decay (inverse time decay).

You can start with a relatively high learning rate and gradually reduce it during training. For example, you may start with a learning rate of 0.1 and reduce it by a factor (e.g., 0.1) every few epochs.

Cyclical Learning Rates:

Cyclical learning rates involve periodically cycling the learning rate between a lower and an upper bound. This approach can help the model escape local minima and explore the loss landscape more effectively.

Learning Rate Finder:

A learning rate finder is a technique that automatically suggests a good learning rate by monitoring the loss as the learning rate changes. You can find libraries and code implementations for this approach.

Adaptive Learning Rate Algorithms:

Some advanced optimizers, such as Adam, RMSprop, and Adagrad, adaptively adjust the learning rate during training based on the historical gradient information. These algorithms often eliminate the need for manually tuning the learning rate.

Cross-Validation:

Use k-fold cross-validation to assess the model's performance across various learning rates. This can provide insights into how sensitive your model is to different learning rates and help you make an informed choice.

Visual Inspection:

Plot the training loss or validation loss as a function of the learning rate. Look for the point where the loss starts to decrease quickly, and then levels off or increases. This is often an indicator of an appropriate learning rate.

Experience and Heuristics:

Over time, machine learning practitioners develop a sense of reasonable learning rate values for different types of networks and problems. These heuristics can serve as initial starting points.

Remember that the optimal learning rate can vary depending on the network architecture, dataset, and problem type. It may take some experimentation to find the best learning rate for your specific scenario. It's also essential to monitor the training process and make adjustments as needed, such as applying early stopping to prevent overfitting if the learning rate is too high.

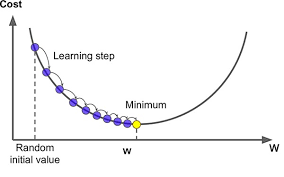

The learning rate in the Gradient Descent optimization algorithm determines the size of the incremental steps taken when updating the model's parameters (weights and biases). The relationship between the learning rate and the step size is as follows:

High Learning Rate: When the learning rate is high, the step size during parameter updates is also large. This can lead to the following effects:

- Rapid convergence: The model may reach a minimum of the loss function faster.

- Risk of divergence: If the learning rate is too high, the optimization process might overshoot the minimum and diverge, causing the loss to increase.

Oscillations: In some cases, a high learning rate can lead to oscillations around the minimum

.

Low Learning Rate: When the learning rate is low, the step size is small during parameter updates. This results in:Slower convergence: The model may take longer to reach the minimum of the loss function.

Reduced risk of divergence: A low learning rate is less likely to cause divergence because the updates are more conservative.

Stable behavior: The optimization process is less likely to exhibit erratic behavior or oscillations

.

Choosing the right learning rate is a trade-off. If the learning rate is too high, the optimization process might be unstable, but if it's too low, convergence can be very slow. The ideal learning rate strikes a balance between rapid convergence and stable optimization.

Here's how to approach the choice of learning rate:

- Start with a moderate learning rate that is typically in the range of 0.1 to 0.01. This serves as a good initial guess.

- Monitor the training process and observe the behavior of the loss function. If the loss is not decreasing or if it is increasing, consider reducing the learning rate.

- Experiment with different learning rates by performing a learning rate search, which can involve decreasing the learning rate if the loss is not decreasing or increasing it if convergence is too slow . Use learning rate schedules or adaptive optimizers, such as Adam or RMSprop, which can automatically adjust the learning rate during training based on the historical gradient information.

Explain different type of optimizer gradient descent

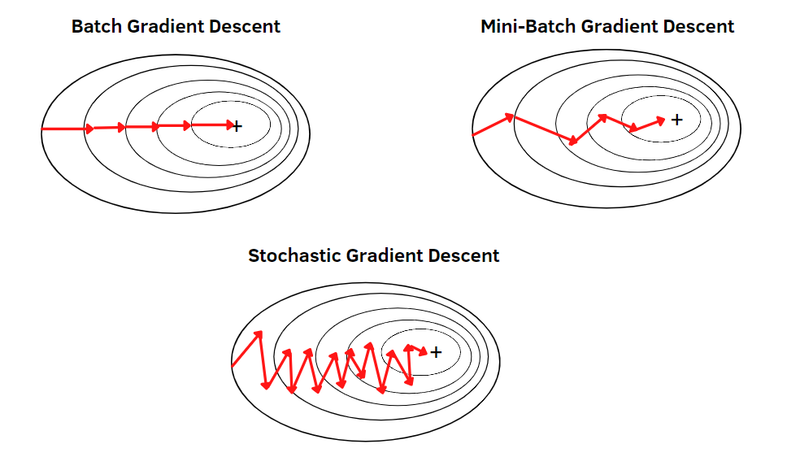

Gradient Descent is a fundamental optimization algorithm used in training neural networks. It's used to minimize the loss function by iteratively adjusting the model's parameters in the direction of the steepest descent of the loss. There are several variants of Gradient Descent, each with its unique characteristics. Here are some common types of optimizers in the context of neural networks:

Gradient descent

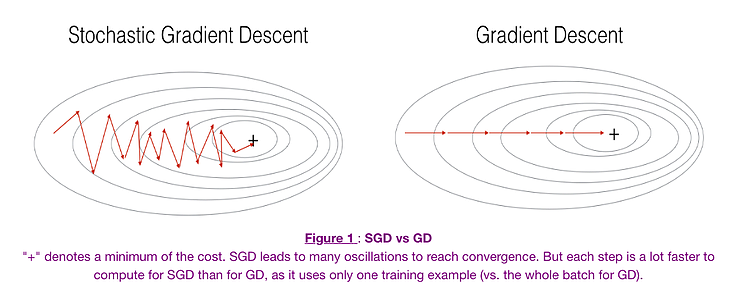

Gradient Descent computes the gradient (derivative) of the loss function with respect to the entire training dataset and updates the model's parameters in the direction that minimizes the loss over the entire dataset. It's also known as Batch Gradient Descent.

Pros:

- It usually converges to a more accurate minimum of the loss function since it considers the complete dataset.

It can take advantage of vectorized operations for efficiency on modern hardwar

e.

Cons:Computationally expensive for large datasets.

Prone to slow convergence in high-dimensional spaces or saddle points

.

Example of when to use GD: GD is suitable for small to medium-sized datasets where the entire dataset can comfortably fit in memory. It's commonly used when training linear regression models, logistic regression, and other models where the training data is not too large.

# Example using scikit-learn with Batch Gradient Descent

from sklearn.linear_model import LinearRegression

model = LinearRegression()

model.fit(X, y)

Stochastic Gradient Descent (SGD):

SGD updates the model's parameters using the gradient of the loss with respect to a single training example at a time.

It is computationally efficient and can escape local minima because the updates are noisy.

Example usage in Python with a simple linear regression model:

from tensorflow.keras.optimizers import SGD

optimizer = SGD(learning_rate=0.01)

model.compile(optimizer=optimizer, loss='mean_squared_error')

SGD, on the other hand, updates the model's parameters using the gradient of the loss with respect to a single randomly chosen training example at each iteration. It introduces randomness, which can help escape local minima but can also lead to noisy updates.

Pros:

Faster convergence due to frequent parameter updates.

Suitable for large datasets because it only requires a single example in memory at a time.

Cons:

Noisy updates can lead to oscillations in the loss function.

It may converge to a suboptimal minimum due to the noise.

Example of when to use SGD: SGD is commonly used in deep learning and neural networks where the dataset is large and training with Batch GD is computationally expensive. It can also be used when the dataset is continuously updated, such as in online learning scenarios.

python

Copy code

Example using TensorFlow/Keras with Stochastic Gradient Descent

from tensorflow.keras.optimizers import SGD

optimizer = SGD(learning_rate=0.01)

model.compile(optimizer=optimizer, loss='categorical_crossentropy')

model.fit(X_train, y_train, epochs=10, batch_size=32)

In practice, a compromise called Mini-Batch Gradient Descent is often used. It divides the dataset into small batches, and the model's parameters are updated using the gradients from each batch. Mini-batch SGD combines some advantages of both GD and SGD and is the most commonly used optimization method for training deep neural networks. The choice between GD, SGD, or Mini-Batch SGD depends on the specific problem, available computational resources, and dataset size.

User

explain when and where to use different type of optimizer gradient descent in neural network with examples

ChatGPT

The choice of optimizer in training a neural network depends on various factors, including the problem you are solving, the architecture of your neural network, and the characteristics of your dataset. Here are some different types of optimizers and when and where to use them:

Stochastic Gradient Descent (SGD):

When to use: SGD is a good choice when you have a large dataset. It works well in cases where the dataset is noisy, and you want to escape local minima.

Example: Use SGD with a deep convolutional neural network (CNN) for image classification on a large dataset.

from tensorflow.keras.optimizers import SGD

optimizer = SGD(learning_rate=0.01)

model.compile(optimizer=optimizer, loss='categorical_crossentropy')

model.fit(X_train, y_train, epochs=10, batch_size=32)

Momentum:

When to use: Momentum helps accelerate convergence in the presence of noisy gradients. It's beneficial for shallow networks and deep networks with smooth cost functions.

Example: Use Momentum with a feedforward neural network for binary classification.

from tensorflow.keras.optimizers import SGD

optimizer = SGD(learning_rate=0.01, momentum=0.9)

model.compile(optimizer=optimizer, loss='binary_crossentropy')

model.fit(X_train, y_train, epochs=10, batch_size=32)

Adagrad (Adaptive Gradient Algorithm):

When to use: Adagrad is suitable for problems with sparse features. It adapts learning rates for each parameter based on historical gradient information.

Example: Use Adagrad with a text classification model where the input data is sparse.

from tensorflow.keras.optimizers import Adagrad

optimizer = Adagrad(learning_rate=0.01)

model.compile(optimizer=optimizer, loss='categorical_crossentropy')

model.fit(X_train, y_train, epochs=10, batch_size=32)

RMSprop (Root Mean Square Propagation):

When to use: RMSprop is a good choice for deep networks. It addresses the diminishing learning rate problem, making it suitable for problems with a high-dimensional parameter space.

Example: Use RMSprop with a recurrent neural network (RNN) for sequence prediction tasks.

from tensorflow.keras.optimizers import RMSprop

optimizer = RMSprop(learning_rate=0.001)

model.compile(optimizer=optimizer, loss='mean_squared_error')

model.fit(X_train, y_train, epochs=10, batch_size=32)

Adam (Adaptive Moment Estimation):

When to use: Adam is one of the most popular optimizers. It combines the benefits of both momentum and RMSprop and is generally a safe choice for many scenarios.

Example: Use Adam with a deep neural network for a wide range of tasks, such as image classification, natural language processing, or reinforcement learning.

from tensorflow.keras.optimizers import Adam

optimizer = Adam(learning_rate=0.001)

model.compile(optimizer=optimizer, loss='categorical_crossentropy')

model.fit(X_train, y_train, epochs=10, batch_size=32)

In practice, the choice of optimizer can also depend on hyperparameters like learning rate, which you may need to tune to find the best combination for your specific problem. Additionally, you can employ learning rate schedules to adjust the learning rate during training, which can further improve convergence and performance.

Mini-Batch Gradient Descent:

Mini-batch GD is a compromise between SGD and Batch GD. It updates the parameters using a small random subset (mini-batch) of the training data.

This approach combines the efficiency of batch processing with the noise of SGD.

It's the most commonly used optimizer in practice.

from tensorflow.keras.optimizers import SGD

optimizer = SGD(learning_rate=0.01)

model.compile(optimizer=optimizer, loss='mean_squared_error')

Momentum:

Momentum is an extension of SGD that introduces an additional velocity term to smooth out the updates.

It helps the optimizer to converge faster and navigate through flat regions.

Example usage in Python with TensorFlow/Keras:

from tensorflow.keras.optimizers import SGD

optimizer = SGD(learning_rate=0.01, momentum=0.9)

model.compile(optimizer=optimizer, loss='mean_squared_error')

Adagrad (Adaptive Gradient Algorithm):

Adagrad adapts the learning rate for each parameter individually, based on the historical gradient information.

It's suitable for sparse data because it gives more weight to parameters that have received rare updates.

Example usage:

from tensorflow.keras.optimizers import Adagrad

optimizer = Adagrad(learning_rate=0.01)

model.compile(optimizer=optimizer, loss='mean_squared_error')

RMSprop (Root Mean Square Propagation):

RMSprop is an improvement over Adagrad. It also adapts the learning rate but uses a moving average of the squared gradient values.

It mitigates the problem of diminishing learning rates in deep networks.

Example usage:

from tensorflow.keras.optimizers import RMSprop

optimizer = RMSprop(learning_rate=0.001)

model.compile(optimizer=optimizer, loss='mean_squared_error')

Adam (Adaptive Moment Estimation):

Adam combines the concepts of momentum and RMSprop. It maintains moving averages of both gradients and squared gradients.

It's one of the most popular and widely used optimizers in deep learning.

Example usage:

from tensorflow.keras.optimizers import Adam

optimizer = Adam(learning_rate=0.001)

model.compile(optimizer=optimizer, loss='mean_squared_error')

Each of these optimizers has its advantages and disadvantages, and their performance can vary depending on the specific problem and dataset. Experimenting with different optimizers and their hyperparameters is often necessary to find the best optimization strategy for a given neural network.

Top comments (0)