What are the minimum requirements of a Keras layer?

A Keras layer needs the following details, at a minimum, to be completely specified.

Shape of the input data

Number of neurons / units in the layer

Initializers

Regularizers

Constraints

Activations

Each of these concepts is explained in the sections that follow. Before that, let us create a simple Keras layer using the Sequential model API to get an idea of how a Keras model and its layers work.

from keras.models import Sequential

from keras.layers import Activation, Dense

from keras import initializers

from keras import regularizers

from keras import constraints

model = Sequential()

model.add(Dense(32, input_shape=(16,), kernel_initializer = 'he_uniform',

kernel_regularizer = None, kernel_constraint = 'MaxNorm', activation = 'relu'))

model.add(Dense(16, activation = 'relu'))

model.add(Dense(8))

where,

The five import statements bring in the necessary modules.

model = Sequential() creates a new model using the Sequential API.

The first model.add() call creates a new Dense layer and adds it to the model. Dense is the basic, fully connected layer provided by Keras, and its only required parameter is the number of neurons or units (32). Because this is the first layer of the model, we need to provide the input shape, (16,), as well. For every later layer, the output of the previous layer is used as the input. All other parameters are optional.

The first parameter is the number of units (neurons).

input_shape is the shape of the input data.

kernel_initializer is the initializer to be used. Here it is set to he_uniform.

kernel_regularizer is the regularizer to be used. Here it is set to None, so no regularization is applied.

kernel_constraint is the constraint to be used. Here it is set to MaxNorm.

activation is the activation function to be used. Here it is set to relu.

The second model.add() call creates a second Dense layer with 16 units and sets relu as its activation function.

The third model.add() call creates the final Dense layer with 8 units.
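To get a feel for the size of this model, the number of trainable parameters can be worked out by hand. The sketch below assumes that every Dense layer keeps its bias (the Keras default), so each layer has input_dim * units weights plus units biases. The helper name dense_param_count is made up for this illustration and is not part of Keras.

```python
# Hypothetical helper (not a Keras function): trainable parameters of one
# Dense layer, assuming one weight per input/unit pair and one bias per unit.
def dense_param_count(input_dim, units):
    return input_dim * units + units

# Layer sizes of the model above: 16 inputs -> 32 -> 16 -> 8 units.
layer_sizes = [16, 32, 16, 8]

counts = [dense_param_count(n_in, n_out)
          for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]
total = sum(counts)

print(counts)  # [544, 528, 136]
print(total)   # 1208
```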

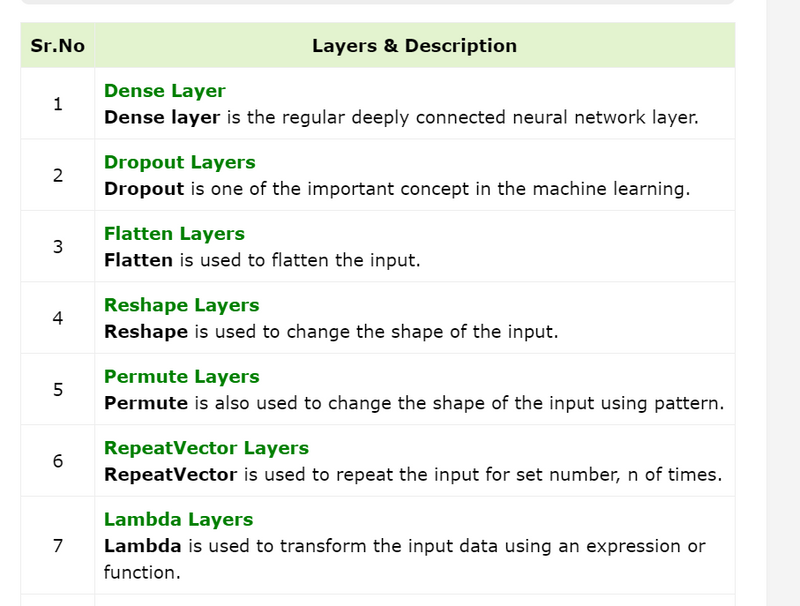

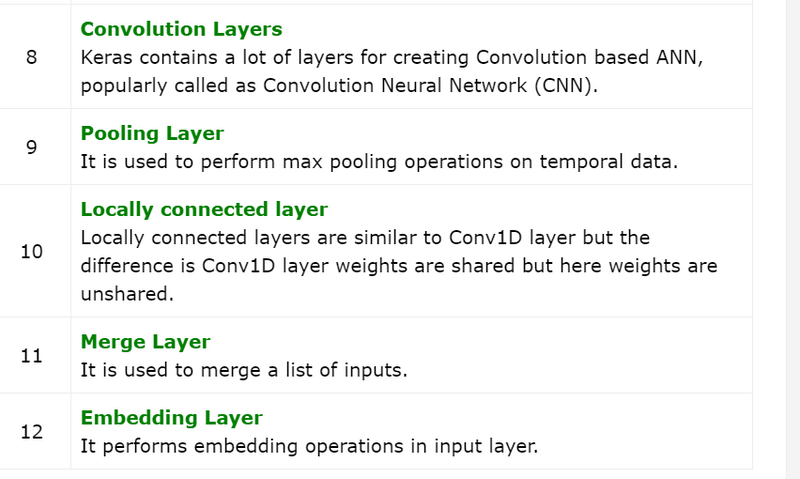

Basic Concept of Layers

Input shape

In machine learning, all types of input data, such as text, images or videos, are first converted into arrays of numbers and then fed into the algorithm. The input may be a one-dimensional array, a two-dimensional array (matrix) or a multi-dimensional array. We can specify the dimensions using shape, a tuple of integers. For example, (4,2) represents a matrix with four rows and two columns.

>>> import numpy as np

>>> shape = (4, 2)

>>> data = np.zeros(shape)

>>> print(data)

[[0. 0.]

 [0. 0.]

 [0. 0.]

 [0. 0.]]

Similarly, (3,4,2) represents a three-dimensional array made up of three 4x2 matrices (each with four rows and two columns).

>>> import numpy as np

>>> shape = (3, 4, 2)

>>> data = np.zeros(shape)

>>> print(data)

[[[0. 0.]

  [0. 0.]

  [0. 0.]

  [0. 0.]]

 [[0. 0.]

  [0. 0.]

  [0. 0.]

  [0. 0.]]

 [[0. 0.]

  [0. 0.]

  [0. 0.]

  [0. 0.]]]

To create the first layer of the model (the input layer), the shape of the input data must be specified.
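The idea of a shape does not depend on NumPy. As a rough sketch, the shape of a regular nested Python list can be recovered by walking down its first elements. The function name shape_of is an assumption made for this example, and it expects every sub-list at the same depth to have the same length.

```python
def shape_of(nested):
    """Return the shape of a regular (non-ragged) nested list as a tuple."""
    shape = []
    while isinstance(nested, list):
        shape.append(len(nested))
        # Step one level down; stop if the list is empty.
        nested = nested[0] if nested else None
    return tuple(shape)

vector = [0.0] * 16                                         # one sample with 16 features
matrix = [[0.0, 0.0] for _ in range(4)]                     # four rows, two columns
cube = [[[0.0, 0.0] for _ in range(4)] for _ in range(3)]   # three 4x2 matrices

print(shape_of(vector))  # (16,)
print(shape_of(matrix))  # (4, 2)
print(shape_of(cube))    # (3, 4, 2)
```

The shape (16,) is the same form that was passed as input_shape to the first Dense layer earlier.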

Initializers

In machine learning, a weight is assigned to each input of a neuron. The initializers module provides different functions to set the initial values of these weights. Some of the Keras initializers are as follows:

Zeros

Sets all the weights to 0.

from keras.models import Sequential

from keras.layers import Activation, Dense

from keras import initializers

my_init = initializers.Zeros()

model = Sequential()

model.add(Dense(512, activation = 'relu', input_shape = (784,),

kernel_initializer = my_init))

where kernel_initializer is the initializer for the kernel (the weight matrix) of the layer.

Ones

Sets all the weights to 1.

from keras.models import Sequential

from keras.layers import Activation, Dense

from keras import initializers

my_init = initializers.Ones()

model = Sequential()

model.add(Dense(512, activation = 'relu', input_shape = (784,),

kernel_initializer = my_init))

Constant

Sets all the weights to a constant value (say, 5) specified by the user.

from keras.models import Sequential

from keras.layers import Activation, Dense

from keras import initializers

my_init = initializers.Constant(value = 5)

model = Sequential()

model.add(Dense(512, activation = 'relu', input_shape = (784,),

kernel_initializer = my_init))

where value is the constant value assigned to every weight.

RandomNormal

Draws the weights from a normal distribution.

from keras.models import Sequential

from keras.layers import Activation, Dense

from keras import initializers

my_init = initializers.RandomNormal(mean = 0.0, stddev = 0.05, seed = None)

model = Sequential()

model.add(Dense(512, activation = 'relu', input_shape = (784,),

kernel_initializer = my_init))

where,

mean is the mean of the random values to generate

stddev is the standard deviation of the random values to generate

seed is the value used to seed the random number generator

RandomUniform

Draws the weights from a uniform distribution.

from keras.models import Sequential

from keras.layers import Activation, Dense

from keras import initializers

my_init = initializers.RandomUniform(minval = -0.05, maxval = 0.05, seed = None)

model = Sequential()

model.add(Dense(512, activation = 'relu', input_shape = (784,),

kernel_initializer = my_init))

where,

minval is the lower bound of the random values to generate

maxval is the upper bound of the random values to generate
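To see what the mean, stddev, minval, maxval and seed parameters do, the two random initializers can be imitated with the Python standard library. This is only an illustrative sketch: the functions random_normal and random_uniform below are written for this example and are not the Keras implementations.

```python
import random

def random_normal(shape, mean=0.0, stddev=0.05, seed=None):
    """Fill a rows x cols weight matrix with normally distributed values."""
    rng = random.Random(seed)   # seed=None gives different values on every run
    rows, cols = shape
    return [[rng.gauss(mean, stddev) for _ in range(cols)] for _ in range(rows)]

def random_uniform(shape, minval=-0.05, maxval=0.05, seed=None):
    """Fill a rows x cols weight matrix with uniformly distributed values."""
    rng = random.Random(seed)
    rows, cols = shape
    return [[rng.uniform(minval, maxval) for _ in range(cols)] for _ in range(rows)]

# The same seed reproduces the same weights.
a = random_uniform((3, 2), seed=42)
b = random_uniform((3, 2), seed=42)
print(a == b)  # True

# Every uniform value lies between minval and maxval.
print(all(-0.05 <= w <= 0.05 for row in a for w in row))  # True
```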

TruncatedNormal

Draws the weights from a truncated normal distribution.

from keras.models import Sequential

from keras.layers import Activation, Dense

from keras import initializers

my_init = initializers.TruncatedNormal(mean = 0.0, stddev = 0.05, seed = None)

model = Sequential()

model.add(Dense(512, activation = 'relu', input_shape = (784,),

kernel_initializer = my_init))
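A truncated normal distribution is a normal distribution whose extreme values are thrown away: in Keras, values more than two standard deviations from the mean are discarded and drawn again. The following sketch reproduces that idea with the standard library. The function truncated_normal is a stand-in written for this example, not the Keras code.

```python
import random

def truncated_normal(n, mean=0.0, stddev=0.05, seed=None):
    """Draw n values, re-drawing any that fall more than 2 * stddev from mean."""
    rng = random.Random(seed)
    values = []
    while len(values) < n:
        v = rng.gauss(mean, stddev)
        if abs(v - mean) <= 2 * stddev:   # keep only values inside the band
            values.append(v)
    return values

weights = truncated_normal(1000, mean=0.0, stddev=0.05, seed=0)
print(len(weights))                          # 1000
print(max(abs(w) for w in weights) <= 0.1)   # True
```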

VarianceScaling

Generates weights whose scale is adapted to the input shape and output shape of the layer, along with the specified scale factor.

from keras.models import Sequential

from keras.layers import Activation, Dense

from keras import initializers

my_init = initializers.VarianceScaling(

scale = 1.0, mode = 'fan_in', distribution = 'normal', seed = None)

model = Sequential()

model.add(Dense(512, activation = 'relu', input_shape = (784,),

kernel_initializer = my_init))

where,

scale is the scaling factor

mode is one of fan_in, fan_out or fan_avg

distribution is either normal or uniform

For the normal distribution, it computes stddev using the formula below and then draws the weights from a normal distribution with that standard deviation,

stddev = sqrt(scale / n)

where n is,

the number of input units for mode = fan_in

the number of output units for mode = fan_out

the average of the number of input and output units for mode = fan_avg

Similarly, for the uniform distribution, it computes limit using the formula below and then draws the weights uniformly from [-limit, limit],

limit = sqrt(3 * scale / n)
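The two formulas above can be checked with a few lines of Python. The function below is a sketch written for this article (variance_scaling_spread is not a Keras function); it returns stddev for the normal distribution and limit for the uniform distribution, using the layer sizes from the examples (784 inputs, 512 units).

```python
import math

def variance_scaling_spread(fan_in, fan_out, scale=1.0,
                            mode="fan_in", distribution="normal"):
    """Return stddev (normal) or limit (uniform) from the formulas above."""
    n_by_mode = {
        "fan_in": fan_in,
        "fan_out": fan_out,
        "fan_avg": (fan_in + fan_out) / 2,
    }
    n = n_by_mode[mode]
    if distribution == "normal":
        return math.sqrt(scale / n)
    if distribution == "uniform":
        return math.sqrt(3 * scale / n)
    raise ValueError("distribution must be 'normal' or 'uniform'")

stddev = variance_scaling_spread(784, 512, mode="fan_in", distribution="normal")
limit = variance_scaling_spread(784, 512, mode="fan_avg", distribution="uniform")
print(round(stddev, 4))  # 0.0357
print(round(limit, 4))   # 0.068
```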

lecun_normal

Draws the weights from a LeCun normal distribution.

from keras.models import Sequential

from keras.layers import Activation, Dense

from keras import initializers

my_init = initializers.lecun_normal(seed = None)

model = Sequential()

model.add(Dense(512, activation = 'relu', input_shape = (784,),

kernel_initializer = my_init))

It computes stddev using the formula below and then applies a normal distribution

stddev = sqrt(1 / fan_in)

where fan_in is the number of input units.

lecun_uniform

Draws the weights from a LeCun uniform distribution.

from keras.models import Sequential

from keras.layers import Activation, Dense

from keras import initializers

my_init = initializers.lecun_uniform(seed = None)

model = Sequential()

model.add(Dense(512, activation = 'relu', input_shape = (784,),

kernel_initializer = my_init))

It computes limit using the formula below and then applies a uniform distribution

limit = sqrt(3 / fan_in)

where fan_in is the number of input units.

glorot_normal

Draws the weights from a Glorot normal distribution.

from keras.models import Sequential

from keras.layers import Activation, Dense

from keras import initializers

my_init = initializers.glorot_normal(seed = None)

model = Sequential()

model.add(Dense(512, activation = 'relu', input_shape = (784,),

kernel_initializer = my_init))

It computes stddev using the formula below and then applies a normal distribution

stddev = sqrt(2 / (fan_in + fan_out))

where,

fan_in is the number of input units

fan_out is the number of output units

glorot_uniform

Draws the weights from a Glorot uniform distribution.

from keras.models import Sequential

from keras.layers import Activation, Dense

from keras import initializers

my_init = initializers.glorot_uniform(seed = None)

model = Sequential()

model.add(Dense(512, activation = 'relu', input_shape = (784,),

kernel_initializer = my_init))

It computes limit using the formula below and then applies a uniform distribution

limit = sqrt(6 / (fan_in + fan_out))

where,

fan_in is the number of input units

fan_out is the number of output units

he_normal

Draws the weights from a He normal distribution.

from keras.models import Sequential

from keras.layers import Activation, Dense

from keras import initializers

my_init = initializers.he_normal(seed = None)

model = Sequential()

model.add(Dense(512, activation = 'relu', input_shape = (784,),

kernel_initializer = my_init))

It computes stddev using the formula below and then applies a normal distribution.

stddev = sqrt(2 / fan_in)

where fan_in is the number of input units.

he_uniform

Draws the weights from a He uniform distribution.

from keras.models import Sequential

from keras.layers import Activation, Dense

from keras import initializers

my_init = initializers.he_uniform(seed = None)

model = Sequential()

model.add(Dense(512, activation = 'relu', input_shape = (784,),

kernel_initializer = my_init))

It computes limit using the formula below and then applies a uniform distribution.

limit = sqrt(6 / fan_in)

where fan_in is the number of input units.
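The LeCun, Glorot and He initializers differ only in the formula used for stddev or limit. As a quick comparison, the sketch below evaluates the six formulas given above for the layer used in the examples, assuming fan_in = 784 and fan_out = 512. It only does the arithmetic and does not call Keras.

```python
import math

fan_in, fan_out = 784, 512   # input units and output units of the example layer

# stddev for the *_normal variants, limit for the *_uniform variants.
spreads = {
    "lecun_normal": math.sqrt(1 / fan_in),
    "lecun_uniform": math.sqrt(3 / fan_in),
    "glorot_normal": math.sqrt(2 / (fan_in + fan_out)),
    "glorot_uniform": math.sqrt(6 / (fan_in + fan_out)),
    "he_normal": math.sqrt(2 / fan_in),
    "he_uniform": math.sqrt(6 / fan_in),
}

for name, value in spreads.items():
    print(f"{name:15s} {value:.4f}")
```

For the same fan_in, the He values are larger than the LeCun values by a factor of sqrt(2), which follows directly from the formulas.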

Orthogonal

Generates a random orthogonal matrix.

from keras.models import Sequential

from keras.layers import Activation, Dense

from keras import initializers

my_init = initializers.Orthogonal(gain = 1.0, seed = None)

model = Sequential()

model.add(Dense(512, activation = 'relu', input_shape = (784,),

kernel_initializer = my_init))

where gain is the multiplication factor applied to the matrix.

Identity

Generates an identity matrix.

from keras.models import Sequential

from keras.layers import Activation, Dense

from keras import initializers

my_init = initializers.Identity(gain = 1.0)

model = Sequential()

model.add(Dense(512, activation = 'relu', input_shape = (784,),

kernel_initializer = my_init))

Constraints

In machine learning, a constraint can be placed on the parameters (weights) during the optimization phase. The constraints module provides different functions to set a constraint on the layer. Some of the constraint functions are as follows.

NonNeg

Constrains weights to be non-negative.

from keras.models import Sequential

from keras.layers import Activation, Dense

from keras import constraints

my_constrain = constraints.NonNeg()

model = Sequential()

model.add(Dense(512, activation = 'relu', input_shape = (784,),

kernel_constraint = my_constrain))

where kernel_constraint is the constraint to be applied to the kernel of the layer.

UnitNorm

Constrains the weights to have unit norm.

from keras.models import Sequential

from keras.layers import Activation, Dense

from keras import constraints

my_constrain = constraints.UnitNorm(axis = 0)

model = Sequential()

model.add(Dense(512, activation = 'relu', input_shape = (784,),

kernel_constraint = my_constrain))

MaxNorm

Constrains the weights to have a norm less than or equal to the given value.

from keras.models import Sequential

from keras.layers import Activation, Dense

from keras import constraints

my_constrain = constraints.MaxNorm(max_value = 2, axis = 0)

model = Sequential()

model.add(Dense(512, activation = 'relu', input_shape = (784,),

kernel_constraint = my_constrain))

where,

max_value is the upper bound of the norm

axis is the dimension along which the constraint is applied, e.g. for shape (2,3,4), axis 0 denotes the first dimension, 1 denotes the second dimension and 2 denotes the third dimension
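The effect of MaxNorm on a single weight vector can be sketched in plain Python: if the L2 norm of the vector exceeds max_value, the vector is scaled down so that its norm equals max_value; otherwise it is left alone. The function max_norm below is a simplified stand-in written for this example (the Keras version works on whole tensors and adds a small epsilon to avoid division by zero).

```python
import math

def max_norm(column, max_value=2.0):
    """Rescale one weight vector so that its L2 norm is at most max_value."""
    norm = math.sqrt(sum(w * w for w in column))
    if norm <= max_value:
        return list(column)              # already within the bound
    return [w * max_value / norm for w in column]

column = [3.0, 4.0]                      # L2 norm is 5.0
constrained = max_norm(column, max_value=2.0)
new_norm = math.sqrt(sum(w * w for w in constrained))

print(constrained)             # approximately [1.2, 1.6]
print(round(new_norm, 6))      # 2.0
```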

MinMaxNorm

Constrains the weights to have a norm between the specified minimum and maximum values.

from keras.models import Sequential

from keras.layers import Activation, Dense

from keras import constraints

my_constrain = constraints.MinMaxNorm(min_value = 0.0, max_value = 1.0, rate = 1.0, axis = 0)

model = Sequential()

model.add(Dense(512, activation = 'relu', input_shape = (784,),

kernel_constraint = my_constrain))

where rate is the rate at which the weight constraint is enforced.
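The role of rate is easier to see with numbers. In the sketch below, the target norm is a blend of the clipped norm and the current norm: rate = 1.0 enforces the constraint strictly, while a smaller rate only moves the norm part of the way towards the allowed range. The function min_max_norm is written for this illustration, works on a single weight vector, and mirrors the behaviour described in the Keras documentation rather than copying its code.

```python
import math

def min_max_norm(column, min_value=0.0, max_value=1.0, rate=1.0):
    """Pull the L2 norm of one weight vector towards [min_value, max_value]."""
    norm = math.sqrt(sum(w * w for w in column))
    if norm == 0.0:
        return list(column)              # nothing to rescale
    clipped = min(max(norm, min_value), max_value)
    desired = rate * clipped + (1 - rate) * norm
    return [w * desired / norm for w in column]

def l2_norm(column):
    return math.sqrt(sum(w * w for w in column))

column = [3.0, 4.0]                      # L2 norm is 5.0
strict = min_max_norm(column, rate=1.0)  # norm becomes 1.0
soft = min_max_norm(column, rate=0.5)    # norm becomes 0.5 * 1.0 + 0.5 * 5.0 = 3.0

print(round(l2_norm(strict), 6))  # 1.0
print(round(l2_norm(soft), 6))    # 3.0
```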

Regularizers

In machine learning, regularizers are used in the optimization phase. They apply penalties on the layer parameters during optimization. The Keras regularizers module provides the functions below to set penalties on a layer. Regularization is applied on a per-layer basis only.

L1 Regularizer

It provides L1 based regularization, which penalizes the sum of the absolute values of the weights.

from keras.models import Sequential

from keras.layers import Activation, Dense

from keras import regularizers

my_regularizer = regularizers.l1(0.)

model = Sequential()

model.add(Dense(512, activation = 'relu', input_shape = (784,),

kernel_regularizer = my_regularizer))

where kernel_regularizer is the regularizer applied to the kernel (weights) of the layer, and the argument passed to l1 is the regularization factor.

L2 Regularizer

It provides L2 based regularization, which penalizes the sum of the squares of the weights.

from keras.models import Sequential

from keras.layers import Activation, Dense

from keras import regularizers

my_regularizer = regularizers.l2(0.)

model = Sequential()

model.add(Dense(512, activation = 'relu', input_shape = (784,),

kernel_regularizer = my_regularizer))

L1 and L2 Regularizer

It provides both L1 and L2 based regularization.

from keras.models import Sequential

from keras.layers import Activation, Dense

from keras import regularizers

my_regularizer = regularizers.l1_l2(l1 = 0., l2 = 0.)

model = Sequential()

model.add(Dense(512, activation = 'relu', input_shape = (784,),

kernel_regularizer = my_regularizer))
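The penalty that each regularizer adds to the loss can be computed by hand for a small set of weights. The sketch below assumes the usual definitions: the L1 penalty is the factor times the sum of absolute weights, and the L2 penalty is the factor times the sum of squared weights. The function names and the factor 0.01 are chosen for this example only.

```python
def l1_penalty(weights, factor):
    """L1 penalty: factor * sum of absolute values of the weights."""
    return factor * sum(abs(w) for w in weights)

def l2_penalty(weights, factor):
    """L2 penalty: factor * sum of squares of the weights."""
    return factor * sum(w * w for w in weights)

weights = [0.5, -1.0, 2.0]

l1 = l1_penalty(weights, 0.01)   # 0.01 * (0.5 + 1.0 + 2.0)
l2 = l2_penalty(weights, 0.01)   # 0.01 * (0.25 + 1.0 + 4.0)
both = l1 + l2                   # combined L1 and L2 penalty

print(round(l1, 4))    # 0.035
print(round(l2, 4))    # 0.0525
print(round(both, 4))  # 0.0875
```

Note that a factor of 0., as used in the listings above, contributes no penalty at all, so a small positive value such as 0.01 is needed for the regularizer to have any effect.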

Activations

In machine learning, an activation function is a special function used to decide whether a specific neuron is activated or not. Basically, the activation function applies a nonlinear transformation to the input data and thus enables the neurons to learn better. The output of a neuron depends on the activation function.

Recall the concept of a single perceptron: the output of a perceptron (neuron) is simply the result of the activation function applied to the sum of all inputs, each multiplied by its corresponding weight, plus the overall bias, if any.

result = Activation(SUMOF(input * weight) + bias)
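The formula above translates almost word for word into Python. The following is a minimal sketch of a single neuron; the names neuron_output, relu and identity, as well as the sample numbers, are made up for this illustration.

```python
def neuron_output(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus bias, passed through the activation."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(weighted_sum)

def relu(x):
    return max(0.0, x)

def identity(x):
    return x

inputs = [1.0, 2.0]
weights = [0.5, -1.0]
bias = 0.25

# Weighted sum: 1.0 * 0.5 + 2.0 * (-1.0) + 0.25 = -1.25
print(neuron_output(inputs, weights, bias, identity))  # -1.25
print(neuron_output(inputs, weights, bias, relu))      # 0.0
```

The same weighted sum gives different outputs depending on the activation function, which is why the choice of activation matters.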

So, the activation function plays an important role in the successful learning of the model. Keras provides many activation functions in the activations module. Let us go through the activations available in the module.

linear

Applies the linear (identity) function; the input is returned unchanged.

from keras.models import Sequential

from keras.layers import Activation, Dense

model = Sequential()

model.add(Dense(512, activation = 'linear', input_shape = (784,)))

where activation refers to the activation function of the layer. It can be specified simply by the name of the function, and the layer will use the corresponding activation.

elu

Applies Exponential linear unit.

from keras.models import Sequential

from keras.layers import Activation, Dense

model = Sequential()

model.add(Dense(512, activation = 'elu', input_shape = (784,)))

selu

Applies Scaled exponential linear unit.

from keras.models import Sequential

from keras.layers import Activation, Dense

model = Sequential()

model.add(Dense(512, activation = 'selu', input_shape = (784,)))

relu

Applies Rectified Linear Unit.

from keras.models import Sequential

from keras.layers import Activation, Dense

model = Sequential()

model.add(Dense(512, activation = 'relu', input_shape = (784,)))

softmax

Applies Softmax function.

from keras.models import Sequential

from keras.layers import Activation, Dense

model = Sequential()

model.add(Dense(512, activation = 'softmax', input_shape = (784,)))

softplus

Applies Softplus function.

from keras.models import Sequential

from keras.layers import Activation, Dense

model = Sequential()

model.add(Dense(512, activation = 'softplus', input_shape = (784,)))

softsign

Applies Softsign function.

from keras.models import Sequential

from keras.layers import Activation, Dense

model = Sequential()

model.add(Dense(512, activation = 'softsign', input_shape = (784,)))

tanh

Applies Hyperbolic tangent function.

from keras.models import Sequential

from keras.layers import Activation, Dense

model = Sequential()

model.add(Dense(512, activation = 'tanh', input_shape = (784,)))

sigmoid

Applies Sigmoid function.

from keras.models import Sequential

from keras.layers import Activation, Dense

model = Sequential()

model.add(Dense(512, activation = 'sigmoid', input_shape = (784,)))

hard_sigmoid

Applies Hard Sigmoid function.

from keras.models import Sequential

from keras.layers import Activation, Dense

model = Sequential()

model.add(Dense(512, activation = 'hard_sigmoid', input_shape = (784,)))

exponential

Applies exponential function.

from keras.models import Sequential

from keras.layers import Activation, Dense

model = Sequential()

model.add(Dense(512, activation = 'exponential', input_shape = (784,)))
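To make the names above more concrete, most of these activations can be written as short Python functions that act on a single number (softmax acts on a list). These are textbook definitions given as a sketch, not the Keras source. hard_sigmoid is left out because its exact definition differs between Keras versions; tanh and exponential correspond to math.tanh and math.exp.

```python
import math

def linear(x):
    return x

def relu(x):
    return max(0.0, x)

def elu(x, alpha=1.0):
    return x if x > 0 else alpha * (math.exp(x) - 1.0)

def selu(x, alpha=1.6732632423543772, scale=1.0507009873554805):
    # Scaled ELU with the fixed constants from the SELU definition.
    return scale * elu(x, alpha)

def softplus(x):
    return math.log(1.0 + math.exp(x))

def softsign(x):
    return x / (1.0 + abs(x))

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def softmax(values):
    # Subtract the maximum first for numerical stability.
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

print(relu(-2.0), relu(3.0))            # 0.0 3.0
print(sigmoid(0.0))                     # 0.5
print(softsign(1.0))                    # 0.5
print(sum(softmax([1.0, 2.0, 3.0])))    # approximately 1.0
```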
