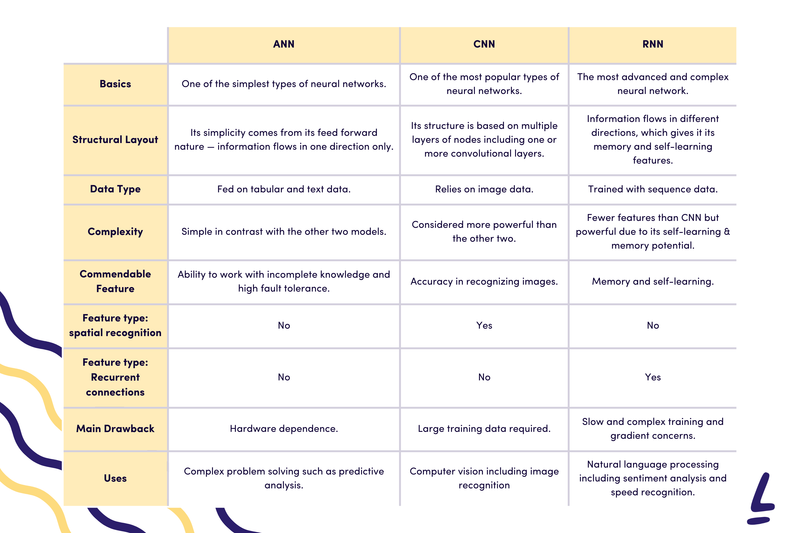

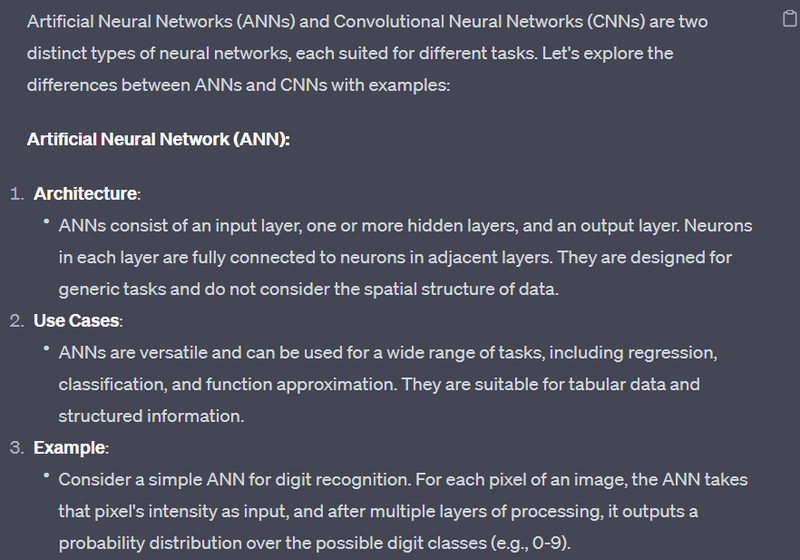

Explain the difference between ANN and CNN with an example.

Explain the difference between RNN and LSTM.

Explain the advantages and disadvantages of Recurrent Neural Networks.

Here are different types of neural networks along with examples of their applications:

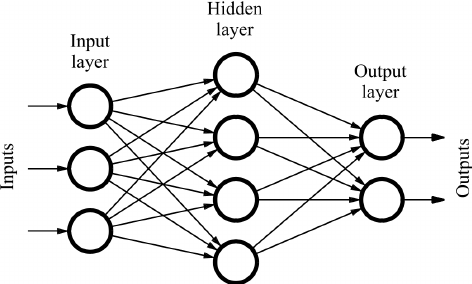

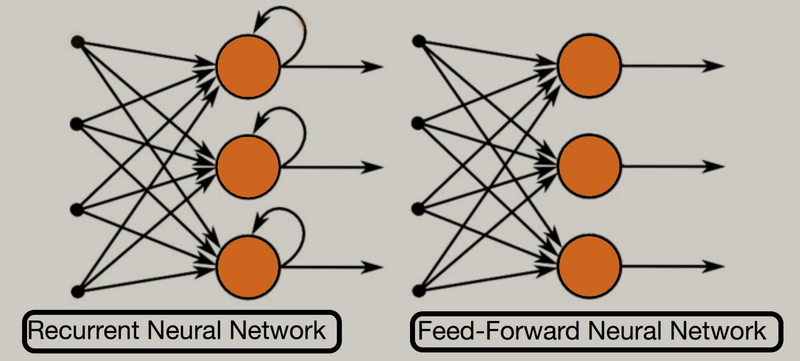

Feedforward Neural Network (FNN):

Also known as a Multi-Layer Perceptron (MLP), it consists of an input layer, one or more hidden layers, and an output layer. Information flows in one direction, from input to output.

Example: Used in image classification tasks, such as recognizing handwritten digits in the MNIST dataset.
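To make the one-directional flow concrete, here is a minimal NumPy sketch of a single forward pass through a network with one hidden layer. The layer sizes, the ReLU and softmax choices, and the random weights are assumptions for illustration only; a real MNIST classifier would learn its weights from data.

```python
import numpy as np

def relu(x):
    return np.maximum(0.0, x)

def softmax(z):
    # Subtract the row maximum for numerical stability.
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def mlp_forward(x, params):
    """Input -> hidden layer (ReLU) -> output layer (softmax)."""
    W1, b1, W2, b2 = params
    hidden = relu(x @ W1 + b1)
    return softmax(hidden @ W2 + b2)

rng = np.random.default_rng(0)
# Hypothetical sizes: 784 inputs (a flattened 28x28 image), 32 hidden units, 10 classes.
params = (
    rng.normal(0, 0.1, (784, 32)), np.zeros(32),
    rng.normal(0, 0.1, (32, 10)), np.zeros(10),
)
x = rng.random((4, 784))        # random stand-in for a batch of 4 images
probs = mlp_forward(x, params)  # one row of class probabilities per image
```

Nothing computed in a later layer feeds back into an earlier one, which is what separates this from the recurrent networks described further down.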

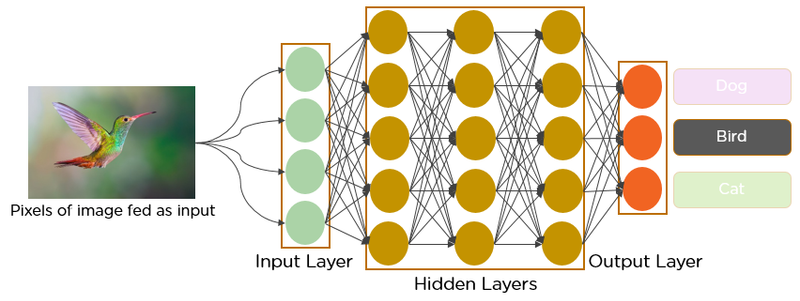

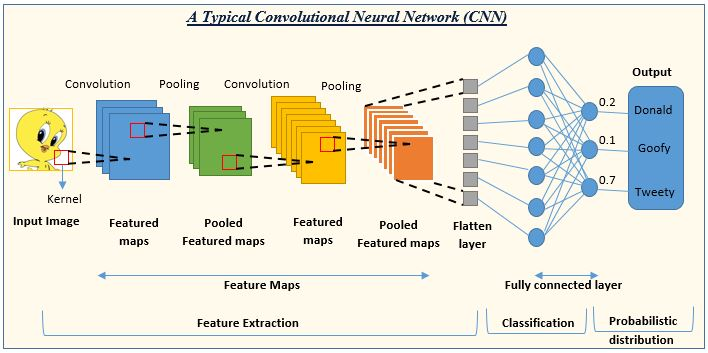

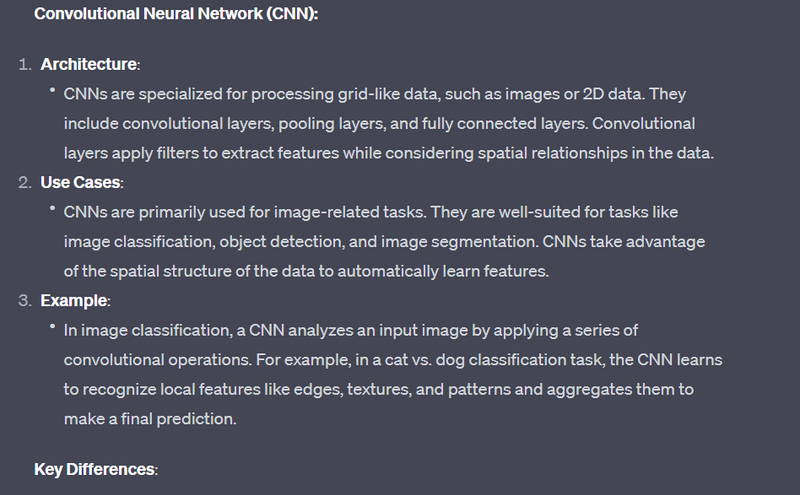

Convolutional Neural Network (CNN):

Designed for image processing tasks, CNNs use convolutional layers to automatically learn hierarchical features from input images.

Example: Used in image classification, object detection, and facial recognition. One popular CNN architecture is VGGNet.
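The core operation of a convolutional layer can be sketched in a few lines of NumPy. The function name `conv2d_valid` and the hand-picked edge filter are assumptions for illustration; in a trained CNN the filter values are learned, and deep learning libraries use far faster implementations.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and
    take a weighted sum at each position."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Toy 5x5 image: dark on the left, bright from column 2 onward.
image = np.zeros((5, 5))
image[:, 2:] = 1.0

# Hand-picked filter that responds to a dark-to-bright step from left to right.
kernel = np.array([[-1.0, 1.0]])

response = conv2d_valid(image, kernel)  # non-zero only where the edge is
```

The same small filter is reused at every position, so the layer needs few parameters and detects the pattern wherever it appears in the image.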

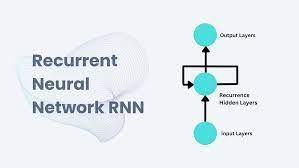

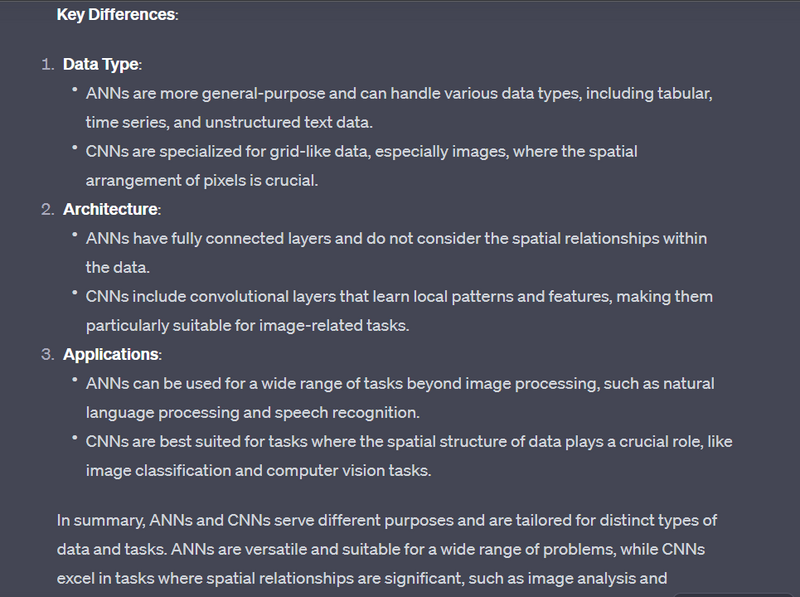

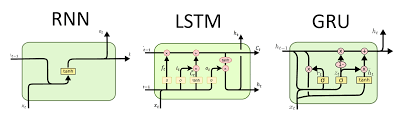

Recurrent Neural Network (RNN):

Suited for tasks involving sequences or time series data. RNNs have recurrent connections, allowing them to maintain a memory of previous inputs.

Example: Used in natural language processing tasks like text generation, machine translation, and sentiment analysis.
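The recurrent connection can be sketched as a loop that carries a hidden state from one time step to the next. This is a minimal vanilla RNN with a tanh activation; the weight names, sizes, and random values are assumptions for illustration.

```python
import numpy as np

def rnn_forward(xs, W_xh, W_hh, b_h):
    """Run a simple RNN over a sequence and return the hidden state at each step."""
    h = np.zeros(W_hh.shape[0])  # the "memory" starts empty
    states = []
    for x in xs:
        # The new state depends on the current input and on the previous state.
        h = np.tanh(x @ W_xh + h @ W_hh + b_h)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
xs = rng.normal(size=(5, 3))            # sequence of 5 steps, 3 features each
W_xh = rng.normal(0, 0.5, size=(3, 4))  # input-to-hidden weights
W_hh = rng.normal(0, 0.5, size=(4, 4))  # hidden-to-hidden (recurrent) weights
b_h = np.zeros(4)

states = rnn_forward(xs, W_xh, W_hh, b_h)
```

Because the same `W_hh` is applied at every step, gradients passed back through long sequences can shrink or grow quickly, which is the problem the LSTM and GRU below were designed to ease.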

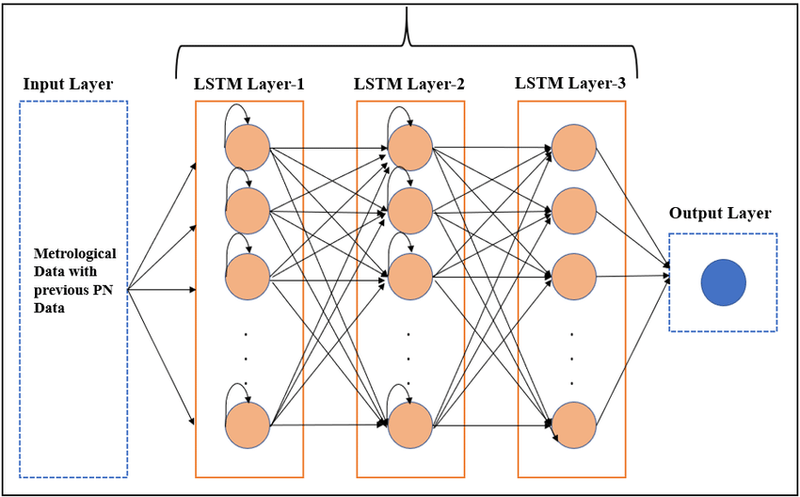

Long Short-Term Memory (LSTM):

A variant of RNN with specialized units to capture long-term dependencies in sequential data.

Example: Used in speech recognition, language modeling, and financial time series predictions.
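A single LSTM time step can be sketched as follows. The layout of the combined weight matrix `W` (forget, input, candidate, and output blocks side by side) is an assumption made for compactness; libraries order and store these blocks differently.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM step. W has shape (input_size + hidden_size, 4 * hidden_size)."""
    H = h_prev.shape[0]
    z = np.concatenate([x, h_prev]) @ W + b
    f = sigmoid(z[0:H])          # forget gate: how much old cell state to keep
    i = sigmoid(z[H:2 * H])      # input gate: how much new information to write
    g = np.tanh(z[2 * H:3 * H])  # candidate values to write
    o = sigmoid(z[3 * H:4 * H])  # output gate: how much of the cell to expose
    c = f * c_prev + i * g       # updated cell state (long-term memory)
    h = o * np.tanh(c)           # updated hidden state (output)
    return h, c

rng = np.random.default_rng(0)
x = rng.normal(size=3)
h, c = lstm_step(x, np.zeros(2), np.zeros(2),
                 rng.normal(0, 0.5, size=(5, 8)), np.zeros(8))
```

The additive update `f * c_prev + i * g` is what lets information survive across many steps when the forget gate stays close to 1.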

Gated Recurrent Unit (GRU):

Similar to LSTMs, GRUs are used for sequential data tasks but have a simpler architecture: two gates (update and reset) and no separate cell state.

Example: Applied in speech recognition, recommendation systems, and video analysis.
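For comparison with the LSTM, a single GRU step can be sketched like this. Bias terms are omitted for brevity, and the convention used here (the update gate `z` weights the new candidate) is one of two that appear in the literature; some references swap the roles of `z` and `1 - z`.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_step(x, h_prev, W_z, W_r, W_h):
    """One GRU step. Each weight matrix has shape (input_size + hidden_size, hidden_size)."""
    xh = np.concatenate([x, h_prev])
    z = sigmoid(xh @ W_z)  # update gate: how much to replace the old state
    r = sigmoid(xh @ W_r)  # reset gate: how much of the old state feeds the candidate
    h_candidate = np.tanh(np.concatenate([x, r * h_prev]) @ W_h)
    return (1.0 - z) * h_prev + z * h_candidate

rng = np.random.default_rng(0)
x = rng.normal(size=3)
h_prev = rng.normal(size=2)
W_z, W_r, W_h = (rng.normal(0, 0.5, size=(5, 2)) for _ in range(3))
h_new = gru_step(x, h_prev, W_z, W_r, W_h)
```

There is only one state vector to carry forward, which means fewer parameters than an LSTM of the same hidden size.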

Autoencoder:

Unsupervised networks used for dimensionality reduction and feature learning. They consist of an encoder and decoder to reconstruct input data.

Example: Used in image denoising, anomaly detection, and feature extraction.
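The encoder and decoder idea can be sketched with a tiny linear autoencoder trained by plain gradient descent. The synthetic data, the 2-unit bottleneck, the learning rate, and the number of steps are all assumptions for illustration; practical autoencoders use nonlinear layers and a deep learning framework.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: 200 points in 4-D that really vary along only 2 directions.
Z = rng.normal(size=(200, 2))
A = rng.normal(size=(2, 4))
X = Z @ A

W_enc = rng.normal(0, 0.1, size=(4, 2))  # encoder: 4-D input -> 2-D code
W_dec = rng.normal(0, 0.1, size=(2, 4))  # decoder: 2-D code -> 4-D reconstruction

def reconstruction_loss(X, W_enc, W_dec):
    return np.mean((X @ W_enc @ W_dec - X) ** 2)

initial_loss = reconstruction_loss(X, W_enc, W_dec)

lr = 0.02
for _ in range(1000):
    code = X @ W_enc
    residual = code @ W_dec - X
    scale = 2.0 / residual.size
    # Gradients of the mean squared reconstruction error.
    grad_dec = scale * code.T @ residual
    grad_enc = scale * X.T @ (residual @ W_dec.T)
    W_enc -= lr * grad_enc
    W_dec -= lr * grad_dec

final_loss = reconstruction_loss(X, W_enc, W_dec)
```

No labels are involved: the training target is the input itself, and the 2-D `code` is the learned lower-dimensional representation.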

Generative Adversarial Network (GAN):

Comprises a generator network and a discriminator network that are trained adversarially. The generator tries to create realistic data, and the discriminator tries to distinguish real data from generated data.

Example: Applied in image generation, deepfake creation, and style transfer.
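The adversarial objective can be sketched through the two loss functions alone. The networks themselves are left out; `d_real` and `d_fake` are hypothetical arrays of discriminator outputs (probabilities that a sample is real), and the generator loss shown is the commonly used non-saturating form.

```python
import numpy as np

def _safe_log(p):
    # Clip to avoid log(0).
    return np.log(np.clip(p, 1e-12, 1.0))

def discriminator_loss(d_real, d_fake):
    """Low when real samples score near 1 and generated samples score near 0."""
    return -np.mean(_safe_log(d_real)) - np.mean(_safe_log(1.0 - d_fake))

def generator_loss(d_fake):
    """Low when the discriminator scores generated samples near 1."""
    return -np.mean(_safe_log(d_fake))

# Hypothetical discriminator outputs for a batch of real and generated samples.
d_real = np.array([0.9, 0.8])
d_fake = np.array([0.2, 0.1])

loss_d = discriminator_loss(d_real, d_fake)
loss_g = generator_loss(d_fake)
```

Training alternates between lowering `loss_d` by updating the discriminator and lowering `loss_g` by updating the generator, so each network's progress makes the other's task harder.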

Radial Basis Function Network (RBFN):

Uses radial basis functions as activation functions in the hidden layer.

Example: Used for function approximation, interpolation, and pattern recognition.
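As a function approximation sketch, the snippet below fits a sine curve with Gaussian radial basis functions. Fixing the centers on an even grid and solving for the output weights by least squares is one simple training choice and an assumption here; the width of 0.7 and the 10 centers are also illustrative.

```python
import numpy as np

def rbf_features(x, centers, width):
    """Gaussian activation of each hidden unit: largest when x is near the unit's center."""
    return np.exp(-((x[:, None] - centers[None, :]) ** 2) / (2.0 * width ** 2))

x = np.linspace(0.0, 2.0 * np.pi, 50)
y = np.sin(x)

centers = np.linspace(0.0, 2.0 * np.pi, 10)  # one hidden unit per center
Phi = rbf_features(x, centers, width=0.7)    # hidden-layer outputs, shape (50, 10)

# The output layer is linear, so its weights can be found by least squares.
weights, *_ = np.linalg.lstsq(Phi, y, rcond=None)
y_hat = Phi @ weights
max_error = np.max(np.abs(y_hat - y))
```

Each hidden unit responds only to inputs near its center, so the network builds the target function out of local bumps.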

Self-Organizing Map (SOM):

Maps high-dimensional input data to a lower-dimensional grid and clusters similar data points together.

Example: Utilized for data visualization, clustering, and exploratory data analysis.
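One training update of a SOM can be sketched as follows. The 3x3 grid, the Gaussian neighborhood, and the fixed learning rate are assumptions for illustration; real training repeats this step over many samples while shrinking both the learning rate and the neighborhood width.

```python
import numpy as np

def som_update(weights, x, lr=0.5, sigma=1.0):
    """Move the best-matching unit (BMU) and its grid neighbors toward x.
    weights has shape (rows, cols, dim)."""
    rows, cols, _ = weights.shape
    dists = np.linalg.norm(weights - x, axis=2)
    bmu = np.unravel_index(np.argmin(dists), dists.shape)
    grid_r, grid_c = np.meshgrid(np.arange(rows), np.arange(cols), indexing="ij")
    grid_dist2 = (grid_r - bmu[0]) ** 2 + (grid_c - bmu[1]) ** 2
    # Units close to the BMU on the grid are pulled more strongly.
    influence = np.exp(-grid_dist2 / (2.0 * sigma ** 2))
    new_weights = weights + lr * influence[:, :, None] * (x - weights)
    return new_weights, bmu

rng = np.random.default_rng(0)
weights = rng.random((3, 3, 2))  # 3x3 grid of units, each holding a 2-D weight vector
x = np.array([0.2, 0.9])         # one input sample
new_weights, bmu = som_update(weights, x)
```

Because neighbors on the grid are updated together, nearby units end up representing similar inputs, which is what produces the map's clustering effect.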

Boltzmann Machine:

A stochastic recurrent neural network. It is typically used in restricted forms such as the Restricted Boltzmann Machine (RBM).

Example: RBMs are used for unsupervised feature learning and collaborative filtering.
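The stochastic behavior of an RBM can be sketched with one step of Gibbs sampling between the visible and hidden units. The binary units, layer sizes, and random weights are assumptions for illustration, and no learning rule (such as contrastive divergence) is shown.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gibbs_step(v, W, b_v, b_h, rng):
    """Sample the hidden units given the visible units, then the visible units given the hidden."""
    p_h = sigmoid(v @ W + b_h)                      # P(h = 1 | v)
    h = (rng.random(p_h.shape) < p_h).astype(float)
    p_v = sigmoid(h @ W.T + b_v)                    # P(v = 1 | h)
    v_new = (rng.random(p_v.shape) < p_v).astype(float)
    return v_new, h

rng = np.random.default_rng(0)
W = rng.normal(0, 0.1, size=(6, 3))  # 6 visible units, 3 hidden units
b_v, b_h = np.zeros(6), np.zeros(3)
v = np.array([1.0, 0.0, 1.0, 1.0, 0.0, 0.0])
v_new, h = gibbs_step(v, W, b_v, b_h, rng)
```

In the restricted form there are no connections within a layer, which is why all hidden units (and then all visible units) can be sampled in a single vectorized step.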

Capsule Network (CapsNet):

Designed to handle hierarchical and spatial relationships in data, particularly for image recognition.

Example: Used in image recognition, especially for detecting objects with complex spatial structures.
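One distinctive piece of a capsule network is the squashing nonlinearity usually described for it, which keeps a capsule's output vector pointing the same way while compressing its length into the range 0 to 1. The sketch below shows only that function; the routing between capsules is not covered, and the small `eps` term is an assumption added to avoid division by zero.

```python
import numpy as np

def squash(s, eps=1e-9):
    """Shrink short vectors toward length 0 and long vectors toward length 1,
    without changing their direction."""
    norm2 = np.sum(s ** 2, axis=-1, keepdims=True)
    return (norm2 / (1.0 + norm2)) * s / np.sqrt(norm2 + eps)

s = np.array([0.0, 3.0, 4.0])  # a capsule's raw output with length 5
v = squash(s)
length = np.linalg.norm(v)     # can be read as the probability that the entity is present
```

The direction of `v` is meant to encode properties such as pose, while its length signals how confident the capsule is that the feature exists.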

Radial Basis Function Neural Network (RBFNN):

Another name for the Radial Basis Function Network (RBFN) described above. It uses radial basis functions as hidden-layer activation functions and is suitable for interpolation, function approximation, and classification.

These are just a few examples of the many types of neural networks, each tailored to different tasks and data structures. The choice of neural network type depends on the specific problem you are trying to solve and the nature of your data.
