How to embediing dynamic collection of lists

from langchain.embeddings import OpenAIEmbeddings

# Initialize the OpenAIEmbeddings wrapper

embeddings = OpenAIEmbeddings(model="text-embedding-ada-002")

# Example dynamic collection of lists

documents = [

["Alpha is the first letter of the Greek alphabet", "It is used in physics as a constant"],

["Beta is the second letter of the Greek alphabet", "It is often associated with testing"],

["Gamma is the third letter", "It is used in gamma rays"],

]

# Function to embed a collection of lists dynamically

def embed_dynamic_collection(collection):

all_embeddings = [] # To store embeddings for all lists

for doc_list in collection:

embeddings_batch = embeddings.embed_documents(doc_list) # Embed each sublist

all_embeddings.append(embeddings_batch)

return all_embeddings

# Generate embeddings

result = embed_dynamic_collection(documents)

# Print embeddings for the second document in the second list

print("Embedding for second document in the second list:", result[1][1])

output

Example: result

For simplicity, we'll represent embeddings with shortened values:

[

[

[0.001, -0.002, 0.003, ..., 0.0012], # Embedding for "Alpha is the first letter..."

[0.004, -0.001, 0.002, ..., 0.0023] # Embedding for "It is used in physics..."

],

[

[0.002, -0.003, 0.001, ..., 0.0017], # Embedding for "Beta is the second letter..."

[0.003, -0.004, 0.002, ..., 0.0028] # Embedding for "It is often associated..."

],

[

[0.001, -0.005, 0.002, ..., 0.0014], # Embedding for "Gamma is the third letter..."

[0.002, -0.006, 0.003, ..., 0.0031] # Embedding for "It is used in gamma rays"

]

]

Accessing result[1][1]

This retrieves the embedding for the second document in the second sublist:

[0.003, -0.004, 0.002, ..., 0.0028]

How to embediing dynamic collection of lists after flattening

Flattening the Collection

If the original documents collection looks like this:

documents = [

["Alpha is the first letter of the Greek alphabet", "It is used in physics as a constant"],

["Beta is the second letter of the Greek alphabet", "It is often associated with testing"],

["Gamma is the third letter", "It is used in gamma rays"],

]

After flattening, it will become:

flattened_documents = [

"Alpha is the first letter of the Greek alphabet",

"It is used in physics as a constant",

"Beta is the second letter of the Greek alphabet",

"It is often associated with testing",

"Gamma is the third letter",

"It is used in gamma rays",

]

This allows you to embed all the text in one batch.

from langchain.embeddings import OpenAIEmbeddings

# Initialize the OpenAIEmbeddings wrapper

embeddings = OpenAIEmbeddings(model="text-embedding-ada-002")

# Original collection of documents (nested list)

documents = [

["Alpha is the first letter of the Greek alphabet", "It is used in physics as a constant"],

["Beta is the second letter of the Greek alphabet", "It is often associated with testing"],

["Gamma is the third letter", "It is used in gamma rays"],

]

# Flatten the collection

flattened_documents = [doc for sublist in documents for doc in sublist]

# Generate embeddings for the flattened list

all_embeddings = embeddings.embed_documents(flattened_documents)

# Print information about the embeddings

print("Number of documents:", len(flattened_documents))

print("Embedding for the first document:", all_embeddings[0][:5]) # Print first 5 values of first embedding

print("Embedding for the second document:", all_embeddings[1][:5]) # Print first 5 values of sec

Sample Output

Number of documents: 6

Embedding for the first document: [0.0012, -0.0023, 0.0034, 0.0045, -0.0011]

Embedding for the second document: [0.0021, -0.0034, 0.0015, 0.0032, -0.0008]

How to embed a dynamic collection of JSON data

from langchain.embeddings import OpenAIEmbeddings

# Initialize OpenAIEmbeddings

embeddings = OpenAIEmbeddings(model="text-embedding-ada-002")

# Example JSON data

data = [

{"id": 1, "title": "Alpha is the first letter", "description": "Used in physics as a constant"},

{"id": 2, "title": "Beta is the second letter", "description": "Associated with software testing"},

{"id": 3, "title": "Gamma is the third letter", "description": "Used in gamma rays"},

]

# Function to embed JSON data dynamically

def embed_json_data(json_data, fields_to_embed):

embeddings_result = {} # To store embeddings by ID or a unique key

for item in json_data:

combined_text = " ".join([str(item[field]) for field in fields_to_embed if field in item])

embeddings_result[item["id"]] = embeddings.embed_documents([combined_text])[0]

return embeddings_result

# Specify fields to embed

fields = ["title", "description"]

# Generate embeddings

embedded_data = embed_json_data(data, fields)

# Output embeddings for each JSON item

for id, embedding in embedded_data.items():

print(f"ID: {id}, Embedding: {embedding[:5]}...") # Show first 5 values for brevity

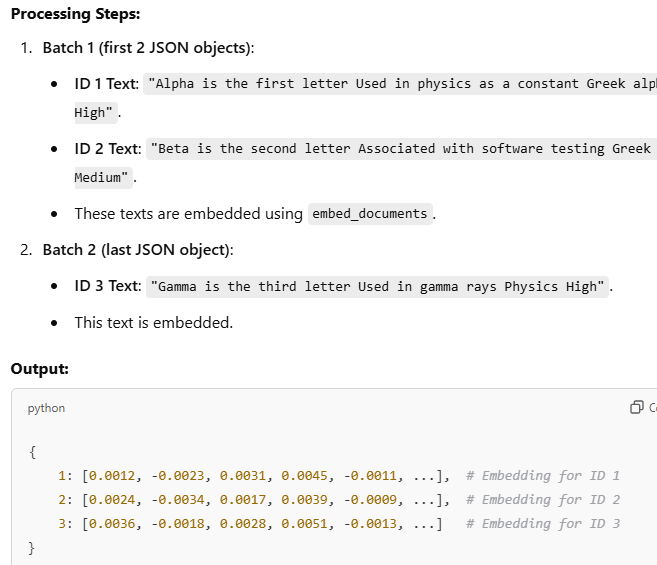

How to handling nested JSON and Batch Processing

from langchain.embeddings import OpenAIEmbeddings

# Initialize OpenAIEmbeddings

embeddings = OpenAIEmbeddings(model="text-embedding-ada-002")

# Example Nested JSON Data

data = [

{

"id": 1,

"title": "Alpha is the first letter",

"description": "Used in physics as a constant",

"extra": {"category": "Greek alphabet", "importance": "High"}

},

{

"id": 2,

"title": "Beta is the second letter",

"description": "Associated with software testing",

"extra": {"category": "Greek alphabet", "importance": "Medium"}

},

{

"id": 3,

"title": "Gamma is the third letter",

"description": "Used in gamma rays",

"extra": {"category": "Physics", "importance": "High"}

},

]

# Recursive function to extract and combine text fields from nested JSON

def extract_text_from_json(json_obj, fields_to_embed):

combined_text = []

for field in fields_to_embed:

if isinstance(json_obj, dict):

# Check for nested dictionaries

if field in json_obj and isinstance(json_obj[field], dict):

combined_text.append(extract_text_from_json(json_obj[field], json_obj[field].keys()))

elif field in json_obj:

combined_text.append(str(json_obj[field]))

elif isinstance(json_obj, list):

# Handle lists by applying the function recursively

for item in json_obj:

combined_text.append(extract_text_from_json(item, fields_to_embed))

return " ".join(combined_text)

# Function to process JSON data in batches

def embed_json_data_in_batches(json_data, fields_to_embed, batch_size=2):

embeddings_result = {}

batch = []

for item in json_data:

combined_text = extract_text_from_json(item, fields_to_embed)

batch.append((item["id"], combined_text))

# Process batch when it reaches batch_size

if len(batch) == batch_size:

ids, texts = zip(*batch)

batch_embeddings = embeddings.embed_documents(texts)

embeddings_result.update({id_: embedding for id_, embedding in zip(ids, batch_embeddings)})

batch = [] # Reset the batch

# Process any remaining items

if batch:

ids, texts = zip(*batch)

batch_embeddings = embeddings.embed_documents(texts)

embeddings_result.update({id_: embedding for id_, embedding in zip(ids, batch_embeddings)})

return embeddings_result

# Specify fields to embed

fields = ["title", "description", "extra"]

# Generate embeddings in batches

embedded_data = embed_json_data_in_batches(data, fields, batch_size=2)

# Output embeddings for each JSON item

for id, embedding in embedded_data.items():

print(f"ID: {id}, Embedding: {embedding[:5]}...") # Show first 5 values for brevity

Example Output

For the provided nested JSON, the output might look like this:

ID: 1, Embedding: [0.0012, -0.0023, 0.0031, 0.0045, -0.0011]...

ID: 2, Embedding: [0.0024, -0.0034, 0.0017, 0.0039, -0.0009]...

ID: 3, Embedding: [0.0036, -0.0018, 0.0028, 0.0051, -0.0013]...

Function: extract_text_from_json

This function recursively extracts text from the specified fields in a JSON object, including nested dictionaries and lists.

Input Example:

json_obj = {

"id": 1,

"title": "Alpha is the first letter",

"description": "Used in physics as a constant",

"extra": {"category": "Greek alphabet", "importance": "High"}

}

fields_to_embed = ["title", "description", "extra"]

SUMMARY

How to embediing dynamic collection of lists using OpenAIEmbeddings through class

Initialize the OpenAIEmbeddings wrapper

declare or define dynamic collection of lists

Function to embed a collection of lists dynamically==>create empty list To store embeddings for all lists==>apply for loop==

inside for loop Embed each sublist

How to embediing dynamic collection of lists after flattening

flattened_documents = [doc for sublist in documents for doc in sublist]

embeddings.embed_documents(flattened_documents)==> len(flattened_documents)===>all_embeddings[0][:5]

How to embed a dynamic collection of JSON data

Top comments (0)