ReLU (Rectified Linear Unit) - tf.nn.relu:

import tensorflow as tf

x = tf.constant([-2.0, -1.0, 0.0, 1.0, 2.0], dtype=tf.float32)

output = tf.nn.relu(x)

print(output.numpy())

Output:

[0. 0. 0. 1. 2.]

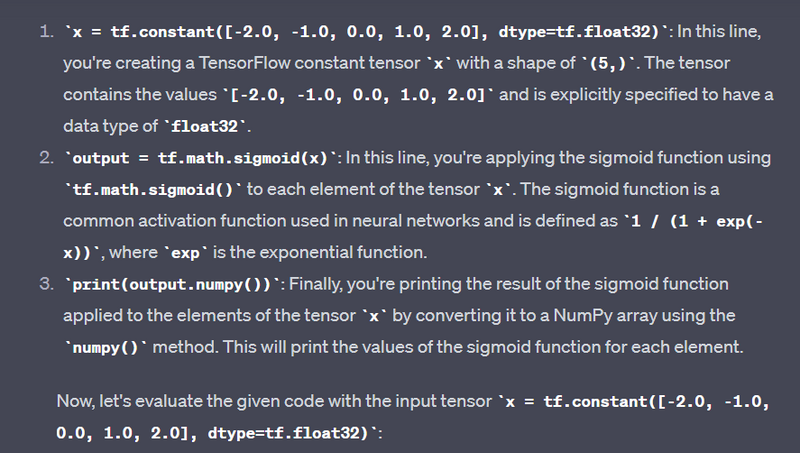

Sigmoid - tf.math.sigmoid:

x = tf.constant([-2.0, -1.0, 0.0, 1.0, 2.0], dtype=tf.float32)

output = tf.math.sigmoid(x)

print(output.numpy())

Output:

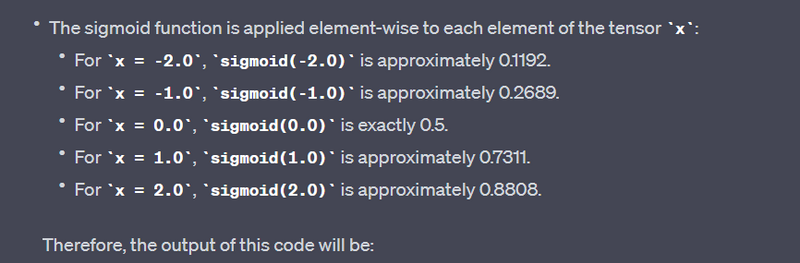

[0.11920292 0.26894143 0.5 0.7310586 0.8807971 ]

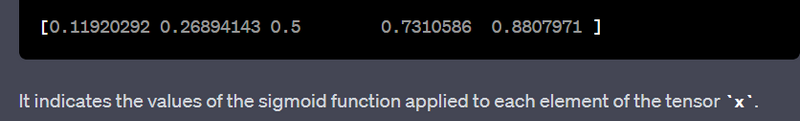

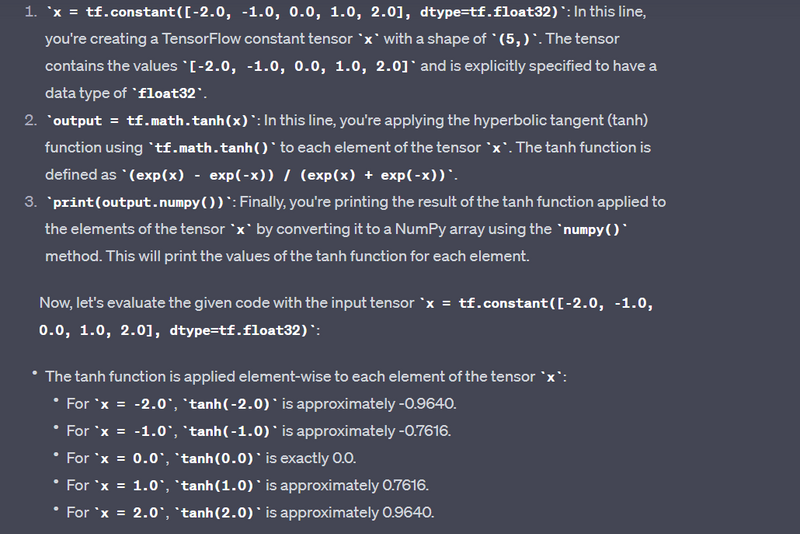

Hyperbolic Tangent (tanh) - tf.math.tanh:

x = tf.constant([-2.0, -1.0, 0.0, 1.0, 2.0], dtype=tf.float32)

output = tf.math.tanh(x)

print(output.numpy())

Output:

[-0.9640276 -0.7615942 0. 0.7615942 0.9640276]

These are the values of tanh applied element-wise to the tensor x. Every output lies strictly between -1 and 1.

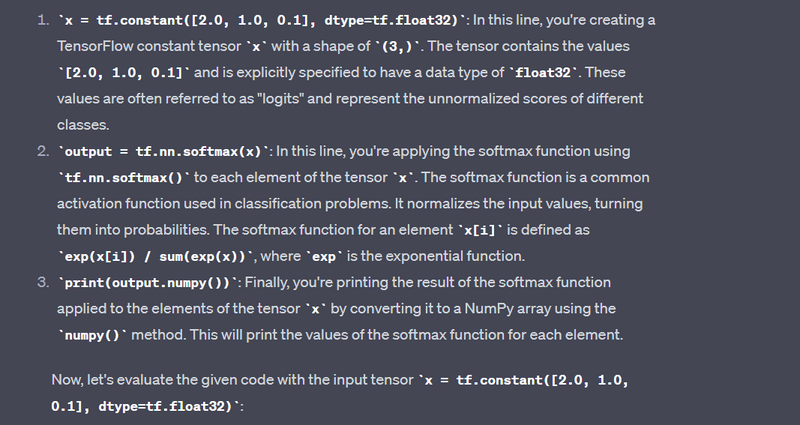

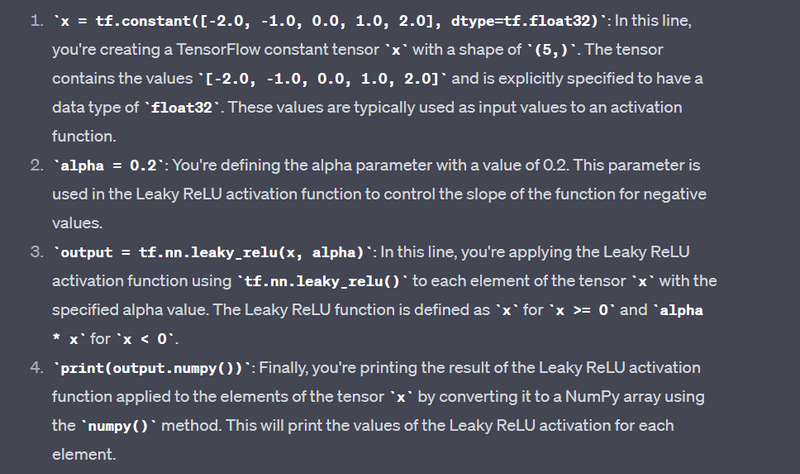

Softmax - tf.nn.softmax:

x = tf.constant([2.0, 1.0, 0.1], dtype=tf.float32)

output = tf.nn.softmax(x)

print(output.numpy())

Output:

[0.6590012 0.24243297 0.09856585]

These are the normalized probabilities that softmax computes for the elements of the tensor x. They sum to 1, and the last printed digits may differ slightly between platforms because of float32 rounding.
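To make the normalization concrete, here is a minimal plain-Python sketch of the softmax formula, exp(x_i) / sum_j exp(x_j). It is not TensorFlow's implementation: the helper name `softmax` is hypothetical, and subtracting the maximum before exponentiating is a common numerical-stability step assumed here for illustration.

```python
import math

def softmax(values):
    """Reference softmax: exponentiate each value, then divide by the total."""
    # Subtracting the max does not change the result but avoids overflow in exp.
    shift = max(values)
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])
print([round(p, 4) for p in probs])  # [0.659, 0.2424, 0.0986]
print(sum(probs))                    # very close to 1
```

The largest input receives the largest share, and the shares always add up to 1 (up to floating-point rounding).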

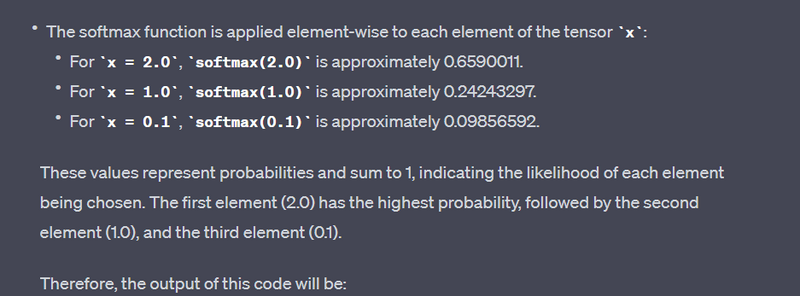

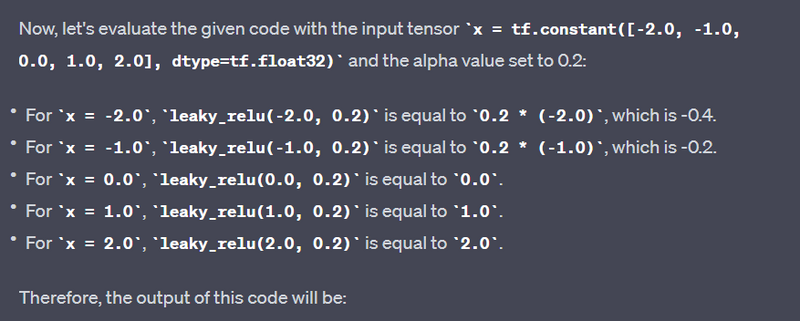

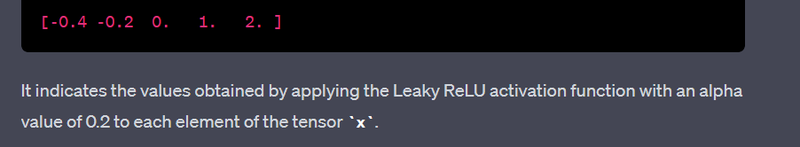

Leaky ReLU - tf.nn.leaky_relu:

Example:

x = tf.constant([-2.0, -1.0, 0.0, 1.0, 2.0], dtype=tf.float32)

alpha = 0.2

output = tf.nn.leaky_relu(x, alpha)

print(output.numpy())

Output:

[-0.4 -0.2 0. 1. 2. ]

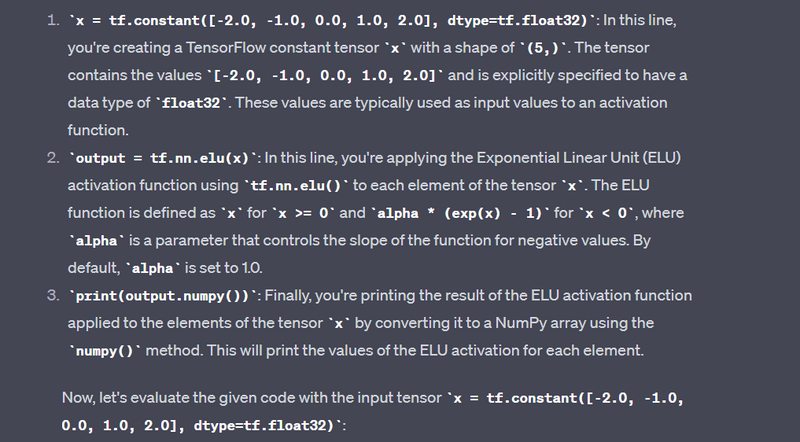

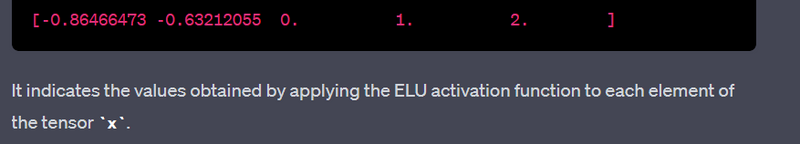

ELU (Exponential Linear Unit) - tf.nn.elu:

Example:

x = tf.constant([-2.0, -1.0, 0.0, 1.0, 2.0], dtype=tf.float32)

output = tf.nn.elu(x)

print(output.numpy())

Output:

[-0.86466473 -0.63212055 0. 1. 2. ]
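The negative outputs above follow from the ELU formula: x for positive inputs and alpha * (exp(x) - 1) otherwise. The plain-Python sketch below reproduces them with alpha = 1; the function name `elu_reference` and its `alpha` parameter are hypothetical and only illustrate the formula, not TensorFlow's internals.

```python
import math

def elu_reference(x, alpha=1.0):
    """ELU formula: identity for x > 0, alpha * (exp(x) - 1) otherwise."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)

elu_values = [elu_reference(v) for v in [-2.0, -1.0, 0.0, 1.0, 2.0]]
print([round(v, 4) for v in elu_values])  # [-0.8647, -0.6321, 0.0, 1.0, 2.0]
```

Unlike Leaky ReLU, whose negative side keeps decreasing linearly, ELU saturates toward -alpha as x becomes very negative.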

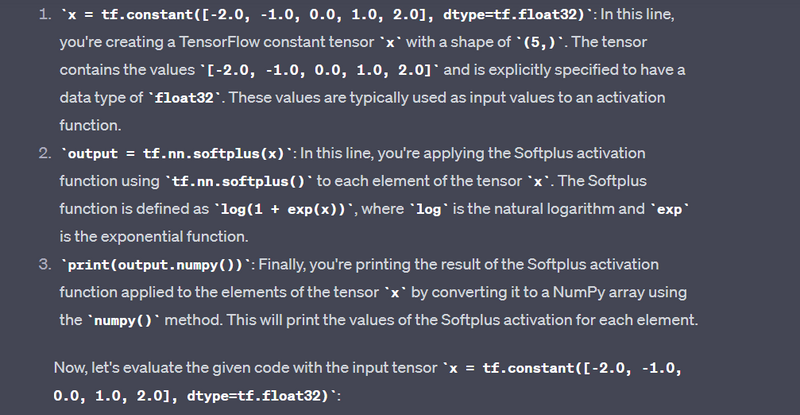

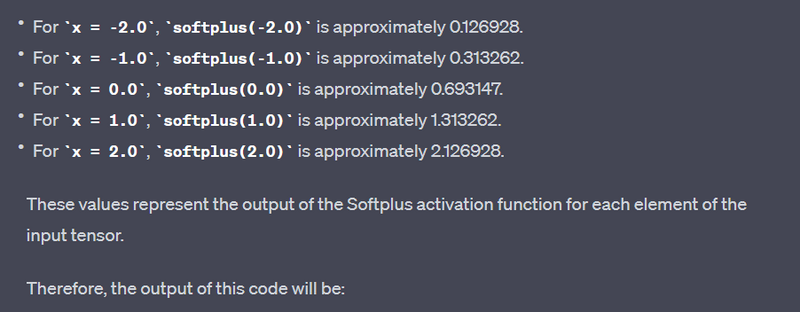

Softplus - tf.nn.softplus:

Example:

x = tf.constant([-2.0, -1.0, 0.0, 1.0, 2.0], dtype=tf.float32)

output = tf.nn.softplus(x)

print(output.numpy())

Output:

[0.126928 0.3132617 0.6931472 1.3132616 2.126928 ]
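Softplus computes log(1 + exp(x)), a smooth curve that stays positive and approaches ReLU for large |x|. The following plain-Python sketch (the helper name `softplus_reference` is hypothetical) reproduces the values above and shows how close softplus gets to x for a large input.

```python
import math

def softplus_reference(x):
    """Softplus formula: log(1 + exp(x)); log1p keeps precision when exp(x) is small."""
    return math.log1p(math.exp(x))

softplus_values = [softplus_reference(v) for v in [-2.0, -1.0, 0.0, 1.0, 2.0]]
print([round(v, 4) for v in softplus_values])  # [0.1269, 0.3133, 0.6931, 1.3133, 2.1269]

# For a large input, softplus is almost the identity, like ReLU.
gap = softplus_reference(10.0) - 10.0
print(gap < 1e-4)  # True
```

This direct formula overflows for very large x (math.exp raises OverflowError around x > 709), so it is only a reference for moderate inputs.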

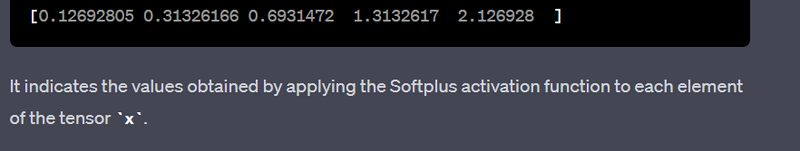

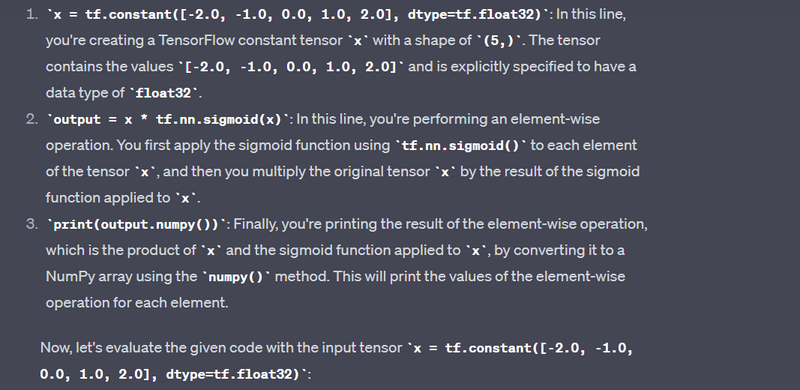

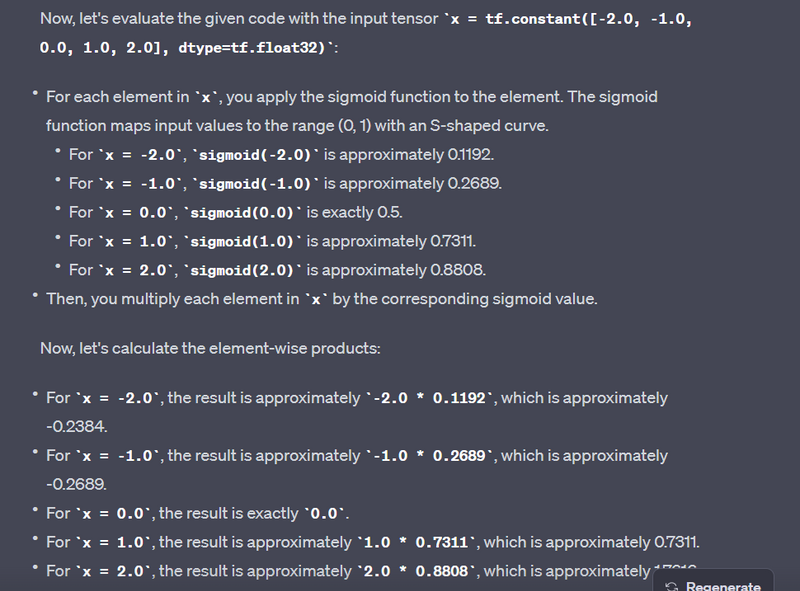

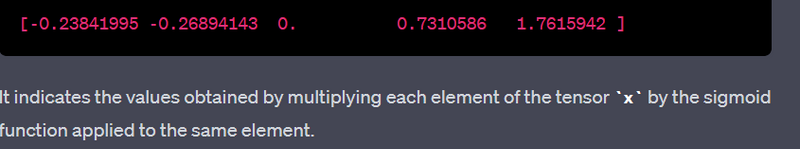

Swish - Custom Activation:

Example:

x = tf.constant([-2.0, -1.0, 0.0, 1.0, 2.0], dtype=tf.float32)

output = x * tf.nn.sigmoid(x)

print(output.numpy())

Output:

[-0.23840584 -0.26894143 0. 0.7310586 1.7615942]
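Swish is x * sigmoid(x), so each output can be checked by hand from the sigmoid values shown earlier. The sketch below does that in plain Python; the helper names are hypothetical. Recent TensorFlow versions also expose this function as tf.nn.silu (with tf.nn.swish as an alias), so a custom expression is not strictly required there.

```python
import math

def sigmoid_reference(x):
    """Logistic sigmoid: 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + math.exp(-x))

def swish_reference(x):
    """Swish formula: the input scaled by its own sigmoid."""
    return x * sigmoid_reference(x)

swish_values = [swish_reference(v) for v in [-2.0, -1.0, 0.0, 1.0, 2.0]]
print([round(v, 4) for v in swish_values])  # [-0.2384, -0.2689, 0.0, 0.7311, 1.7616]
```

Note that swish is not monotonic: the output at -1 is lower than the output at -2.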

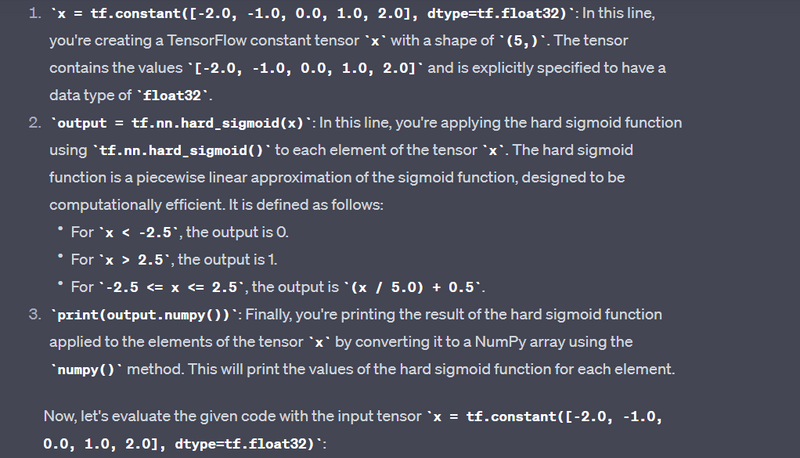

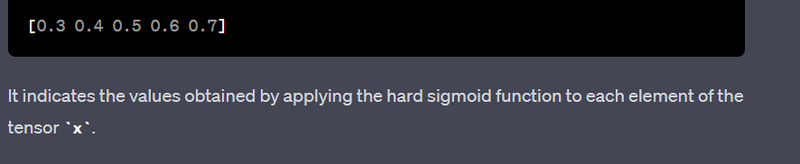

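Hard Sigmoid - tf.keras.activations.hard_sigmoid:

Example:

x = tf.constant([-2.0, -1.0, 0.0, 1.0, 2.0], dtype=tf.float32)

output = tf.keras.activations.hard_sigmoid(x)

print(output.numpy())

Output (approximately):

[0.1 0.3 0.5 0.7 0.9]

The result depends on the Keras version. Older releases compute clip(0.2 * x + 0.5, 0, 1), which gives the values above. Keras 3 (the default from TensorFlow 2.16) computes relu6(x + 3) / 6, which gives approximately [0.167 0.333 0.5 0.667 0.833] for the same input.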

These are some of the common activation functions available in TensorFlow, with examples and their expected outputs. Activation functions introduce non-linearity into a neural network, which lets it model relationships that a stack of purely linear layers cannot.
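The point about non-linearity can be illustrated with a small plain-Python sketch. Two stacked linear layers without an activation collapse into a single linear layer, while inserting ReLU between them produces a function that no single linear layer matches. The weights, biases, and function names below are hypothetical values chosen for illustration.

```python
def relu(x):
    return max(0.0, x)

# Hypothetical weights and biases for two one-dimensional "layers".
w1, b1 = 2.0, -1.0
w2, b2 = -3.0, 0.5

def stacked_linear(x):
    """Two linear layers applied back to back, no activation."""
    return w2 * (w1 * x + b1) + b2

def collapsed(x):
    """The single linear layer that the stack above is equivalent to."""
    return (w2 * w1) * x + (w2 * b1 + b2)

def stacked_relu(x):
    """Same two layers, with ReLU applied after the first one."""
    return w2 * relu(w1 * x + b1) + b2

xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
print([stacked_linear(x) for x in xs])  # [15.5, 9.5, 3.5, -2.5, -8.5]
print([collapsed(x) for x in xs])       # [15.5, 9.5, 3.5, -2.5, -8.5]
print([stacked_relu(x) for x in xs])    # [0.5, 0.5, 0.5, -2.5, -8.5]
```

The first two rows are identical, so the extra linear layer adds no expressive power. The third row is flat for small inputs and sloped for large ones, a shape that a single straight line cannot reproduce.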
