What information can be extracted from resume using NER

Code for Text-Based Resume Screening

Code for DOC or Image-Based Resume Screening

To filter the extracted resume data for candidates meeting the specific criteria

Using LLM filter the extracted resume data for candidates meeting the specific criteria

What information can be extracted from resume using NER

When conducting Automated Resume Screening using Named Entity Recognition (NER), there are several key pieces of information that can be extracted from resumes to facilitate more effective and automated candidate evaluation. Here's a comprehensive list of what you can collect:

Personal Information

- Name (e.g., John Doe)

- Contact Details:

- Email Address (e.g., john.doe@example.com)

- Phone Number (e.g., +1 234 567 890)

- Address (e.g., 123 Main St, Los Angeles, CA)

- LinkedIn Profile (e.g., linkedin.com/in/johndoe)

Website/Portfolio (e.g., johndoe.com)

EducationDegree Type (e.g., Bachelor's, Master's, Ph.D.)

Field of Study (e.g., Computer Science, Marketing)

Institution/University (e.g., Stanford University)

Graduation Year (e.g., 2023)

Certifications (e.g., AWS Certified Solutions Architect, PMP)

Work ExperienceJob Titles (e.g., Software Engineer, Product Manager)

Organizations (e.g., Google, Microsoft)

Work Duration:

Start Date (e.g., January 2020)

End Date (e.g., December 2022)

Responsibilities and Achievements:

Key accomplishments (e.g., "Increased sales by 30%")

Responsibilities (e.g., "Developed scalable backend systems")

SkillsTechnical Skills (e.g., Python, Java, SQL, AWS)

Soft Skills (e.g., Leadership, Communication, Problem Solving)

Tools and Software (e.g., Tableau, Photoshop, JIRA)

ProjectsProject Titles (e.g., "E-commerce Website Development")

Description (e.g., "Built a scalable e-commerce platform using Django.")

Technologies Used (e.g., Python, React, AWS)

Outcomes/Achievements (e.g., "Reduced website load time by 50%.")

Links to Project (e.g., GitHub repositories or live demos)

Awards and AchievementsAwards (e.g., "Employee of the Month, March 2022")

Recognition (e.g., "Top 10 in Google Coding Competition")

Scholarships (e.g., "Full-Ride Scholarship to MIT")

LanguagesProgramming Languages (e.g., Python, Java, C++)

Spoken Languages (e.g., English, Spanish, French)

PublicationsTitles (e.g., "Advances in Artificial Intelligence")

Authors (e.g., John Doe, Jane Smith)

Publication Date (e.g., March 2021)

Publisher/Journal (e.g., IEEE, Springer)

DOI or URL (e.g., doi.org/12345 or link to the article)

Interests and HobbiesProfessional Interests (e.g., AI Research, Data Science)

Personal Hobbies (e.g., Photography, Marathon Running)

ReferencesReference Names (e.g., Jane Smith)

Relationship (e.g., Former Manager, Colleague)

Contact Information (e.g., jane.smith@example.com, +1 345 678 901)

Social Media ProfilesLinkedIn

GitHub

Twitter

{

"Name": "John Doe",

"Contact": {

"Email": "john.doe@example.com",

"Phone": "+1 234 567 890",

"LinkedIn": "linkedin.com/in/johndoe"

},

"Education": [

{

"Degree": "Bachelor's",

"Field": "Computer Science",

"Institution": "Stanford University",

"Year": "2020"

}

],

"Work Experience": [

{

"Job Title": "Software Engineer",

"Organization": "Google",

"Duration": "January 2020 - December 2022",

"Responsibilities": [

"Developed scalable backend systems.",

"Improved API performance by 40%."

]

}

],

"Skills": ["Python", "Django", "AWS", "Problem Solving"],

"Projects": [

{

"Title": "E-commerce Website Development",

"Description": "Built a scalable e-commerce platform.",

"Technologies": ["Python", "React", "AWS"]

}

],

"Certifications": ["AWS Certified Solutions Architect"],

"Languages": ["English", "Spanish"]

}

import spacy

# Load SpaCy model

nlp = spacy.load("en_core_web_sm")

def extract_resume_details(text):

doc = nlp(text)

details = {"Person": [], "Degree": [], "Skills": []}

for ent in doc.ents:

if ent.label_ == "PERSON":

details["Person"].append(ent.text)

elif ent.label_ == "WORK_OF_ART": # Treating degrees as a work of art in this example

details["Degree"].append(ent.text)

elif ent.label_ == "PRODUCT": # Using PRODUCT as a proxy for skills

details["Skills"].append(ent.text)

return details

# Test

text = "John Doe has a Master's degree in Computer Science and is skilled in Python and Machine Learning."

print(extract_resume_details(text))

Extract Education and Experience Information

Input: Resume text

Output: List of extracted degrees, institutions, and experience details

import spacy

nlp = spacy.load("en_core_web_sm")

resume_text = "John Doe has a B.Tech from MIT and 5 years of experience at Google."

doc = nlp(resume_text)

education_keywords = ["B.Tech", "MBA", "PhD", "MSc"]

experience_keywords = ["experience", "worked", "role"]

education = [ent.text for ent in doc.ents if ent.label_ == "ORG" and any(kw in ent.text for kw in education_keywords)]

experience = [ent.text for ent in doc.ents if any(kw in ent.text for kw in experience_keywords)]

print("Education:", education)

print("Experience:", experience)

- Identify Soft Skills Input: Resume text Output: List of soft skills

soft_skills = ["teamwork", "communication", "leadership", "problem-solving"]

detected_skills = [skill for skill in soft_skills if skill in resume_text.lower()]

print("Soft Skills:", detected_skills)

- Project and Achievement Matching Input: Resume text Output: Extracted projects and achievements

project_keywords = ["project", "developed", "implemented", "designed"]

projects = [sentence.text for sentence in doc.sents if any(kw in sentence.text.lower() for kw in project_keywords)]

print("Projects:", projects)

- Language Proficiency Detection Input: Resume text Output: List of languages

languages = ["English", "Spanish", "German", "French"]

detected_languages = [lang for lang in languages if lang in resume_text]

print("Languages:", detected_languages)

- Certifications and Licenses Input: Resume text Output: List of certifications

certifications_keywords = ["AWS", "PMP", "Certified", "License"]

certifications = [sentence.text for sentence in doc.sents if any(kw in sentence.text for kw in certifications_keywords)]

print("Certifications:", certifications)

- Gaps in Employment Input: Resume text with employment dates Output: Identified employment gaps

from dateutil.parser import parse

dates = ["2015-2020", "2021-2023"]

parsed_dates = [parse(date.split("-")[0]) for date in dates]

gaps = [(parsed_dates[i + 1] - parsed_dates[i]).days / 365 for i in range(len(parsed_dates) - 1)]

print("Gaps (in years):", gaps)

- Role-Specific Experience Input: Resume text Output: Extracted roles

roles = ["Software Developer", "Data Analyst", "Manager"]

detected_roles = [role for role in roles if role.lower() in resume_text.lower()]

print("Roles:", detected_roles)

- Location-Based Filtering Input: Candidate location Output: Match or mismatch with job location

candidate_location = "New York"

job_location = "Remote"

is_match = candidate_location.lower() == job_location.lower() or job_location.lower() == "remote"

print("Location Match:", is_match)

- Dynamic Scoring System Input: Skill match, experience, education, etc. Output: Compatibility score

skill_score = 80

experience_score = 90

education_score = 70

compatibility_score = (skill_score + experience_score + education_score) / 3

print("Compatibility Score:", compatibility_score)

- Detect Publications and Research Input: Resume text Output: List of publications

publication_keywords = ["published", "journal", "conference", "research"]

publications = [sentence.text for sentence in doc.sents if any(kw in sentence.text.lower() for kw in publication_keywords)]

print("Publications:", publications)

- Domain-Specific Adjustments Input: Resume text Output: Adjusted match for domain-specific terms

industry_terms = {"IT": ["Python", "Java"], "Healthcare": ["EMR", "clinical"]}

domain = "IT"

matches = [term for term in industry_terms[domain] if term in resume_text]

print("Domain Matches:", matches)

- Diversity and Inclusion Metrics Input: Resume text Output: Anonymized details

from faker import Faker

fake = Faker()

anonymized_name = fake.name()

print("Anonymized Name:", anonymized_name)

- Parse Unstructured Resume Formats Input: PDF or image-based resume Output: Text from resume

import pytesseract

from PIL import Image

image = Image.open("resume.jpg")

text = pytesseract.image_to_string(image)

print("Extracted Text:", text)

- Job Description Analysis Input: Job description text Output: Structured job data

description = "We need a Python developer with 5 years of experience in Django."

skills = ["Python", "Django"]

matched_skills = [skill for skill in skills if skill in description]

print("Matched Skills:", matched_skills)

- Predict Career Trajectory Input: Roles and years Output: Career trajectory prediction

roles = ["Intern", "Developer", "Manager"]

trajectory = " -> ".join(roles)

print("Career Trajectory:", trajectory)

- Salary Expectation Alignment Input: Candidate and job salary ranges Output: Match or mismatch

candidate_salary = 100000

job_salary_range = (90000, 110000)

is_aligned = job_salary_range[0] <= candidate_salary <= job_salary_range[1]

print("Salary Alignment:", is_aligned)

- Grammar and Resume Quality Analysis Input: Resume text Output: Grammar score

from grammar_check import GrammarChecker

text = "This is a good resume."

grammar_checker = GrammarChecker()

score = grammar_checker.check(text)

print("Grammar Score:", score)

- Integration with ATS Input: Resume text Output: Data in ATS-compatible format

import json

ats_data = json.dumps({"name": "John Doe", "skills": ["Python", "Django"]})

print("ATS Data:", ats_data)

- Recommendation System Input: Candidate profile Output: Recommended roles

candidate_skills = {"Python", "Django", "Flask"}

roles_skills = {"Data Engineer": {"Python", "SQL"}, "Web Developer": {"Python", "Flask"}}

recommended_roles = [role for role, skills in roles_skills.items() if candidate_skills & skills]

print("Recommended Roles:", recommended_roles)

- Feedback Generation Input: Compatibility score and gaps Output: Feedback text

compatibility = 85

if compatibility < 70:

feedback = "Improve skills matching the job description."

else:

feedback = "You are a great fit for this role."

print("Feedback:", feedback)

Code for Text-Based Resume Screening

import spacy

import re

from typing import Dict, List

# Load SpaCy's pre-trained model

nlp = spacy.load("en_core_web_sm")

# Regex patterns for specific information

EMAIL_REGEX = r"[a-zA-Z0-9+_.-]+@[a-zA-Z0-9.-]+"

PHONE_REGEX = r"\+?\d[\d -]{8,12}\d"

LINKEDIN_REGEX = r"(https?://)?(www\.)?linkedin\.com/in/[a-zA-Z0-9_-]+"

GITHUB_REGEX = r"(https?://)?(www\.)?github\.com/[a-zA-Z0-9_-]+"

def extract_personal_info(text: str) -> Dict[str, str]:

"""Extract personal information like name, email, phone, LinkedIn, GitHub."""

doc = nlp(text)

personal_info = {"Name": None, "Email": None, "Phone": None, "LinkedIn": None, "GitHub": None}

# Extract name (assume first PERSON entity is the name)

for ent in doc.ents:

if ent.label_ == "PERSON":

personal_info["Name"] = ent.text

break

# Extract email, phone, LinkedIn, GitHub using regex

personal_info["Email"] = re.search(EMAIL_REGEX, text).group(0) if re.search(EMAIL_REGEX, text) else None

personal_info["Phone"] = re.search(PHONE_REGEX, text).group(0) if re.search(PHONE_REGEX, text) else None

personal_info["LinkedIn"] = re.search(LINKEDIN_REGEX, text).group(0) if re.search(LINKEDIN_REGEX, text) else None

personal_info["GitHub"] = re.search(GITHUB_REGEX, text).group(0) if re.search(GITHUB_REGEX, text) else None

return personal_info

def extract_education(text: str) -> List[Dict[str, str]]:

"""Extract education details like degree, field of study, university, and year."""

doc = nlp(text)

education = []

for ent in doc.ents:

if ent.label_ in ["ORG", "DATE"]:

education.append({"Institution/Detail": ent.text})

return education

def extract_work_experience(text: str) -> List[Dict[str, str]]:

"""Extract work experience including job title, company, and duration."""

doc = nlp(text)

work_experience = []

for ent in doc.ents:

if ent.label_ in ["ORG", "PERSON", "DATE"]:

work_experience.append({"Detail": ent.text})

return work_experience

def extract_skills(text: str) -> List[str]:

"""Extract skills from the resume."""

skills_keywords = ["Python", "Java", "C++", "Data Science", "Machine Learning", "Leadership", "AWS", "SQL"]

skills = []

for skill in skills_keywords:

if skill.lower() in text.lower():

skills.append(skill)

return skills

def extract_certifications(text: str) -> List[str]:

"""Extract certifications from the resume."""

certifications_keywords = ["Certified", "AWS", "PMP", "Scrum", "Azure"]

certifications = []

for cert in certifications_keywords:

if cert.lower() in text.lower():

certifications.append(cert)

return certifications

def extract_projects(text: str) -> List[Dict[str, str]]:

"""Extract projects from the resume."""

projects_keywords = ["project", "developed", "built", "created", "implemented"]

projects = []

sentences = text.split(".")

for sentence in sentences:

if any(keyword in sentence.lower() for keyword in projects_keywords):

projects.append({"Project": sentence.strip()})

return projects

# Main function to process the resume

def process_resume(text: str) -> Dict[str, List[Dict[str, str]]]:

resume_data = {

"Personal Info": extract_personal_info(text),

"Education": extract_education(text),

"Work Experience": extract_work_experience(text),

"Skills": extract_skills(text),

"Certifications": extract_certifications(text),

"Projects": extract_projects(text),

}

return resume_data

# Example Resume Text

resume_text = """

John Doe

Email: john.doe@example.com

Phone: +1 123 456 7890

LinkedIn: linkedin.com/in/johndoe

GitHub: github.com/johndoe

Education:

Bachelor of Science in Computer Science, Stanford University, 2020

Master's in Data Science, MIT, 2023

Work Experience:

Software Engineer at Google (Jan 2021 - Dec 2022)

- Developed scalable backend systems using Python and AWS.

- Improved API response time by 30%.

Data Scientist at Facebook (Jan 2023 - Present)

- Built predictive models for user engagement using Machine Learning.

Skills:

Python, Java, Machine Learning, Data Science, AWS, SQL

Certifications:

AWS Certified Solutions Architect, PMP

Projects:

- Developed an e-commerce platform using Django and React.

- Built a machine learning model to predict customer churn.

"""

# Process the Resume

resume_data = process_resume(resume_text)

# Output the Extracted Data

import json

print(json.dumps(resume_data, indent=4))

output

{

"Personal Info": {

"Name": "John Doe",

"Email": "john.doe@example.com",

"Phone": "+1 123 456 7890",

"LinkedIn": "linkedin.com/in/johndoe",

"GitHub": "github.com/johndoe"

},

"Education": [

{

"Institution/Detail": "Stanford University"

},

{

"Institution/Detail": "2020"

},

{

"Institution/Detail": "MIT"

},

{

"Institution/Detail": "2023"

}

],

"Work Experience": [

{

"Detail": "Google"

},

{

"Detail": "Jan 2021"

},

{

"Detail": "Dec 2022"

},

{

"Detail": "Facebook"

},

{

"Detail": "Jan 2023"

},

{

"Detail": "Present"

}

],

"Skills": [

"Python",

"Java",

"Machine Learning",

"Data Science",

"AWS",

"SQL"

],

"Certifications": [

"AWS",

"PMP"

],

"Projects": [

{

"Project": "Developed an e-commerce platform using Django and React"

},

{

"Project": "Built a machine learning model to predict customer churn"

}

]

}

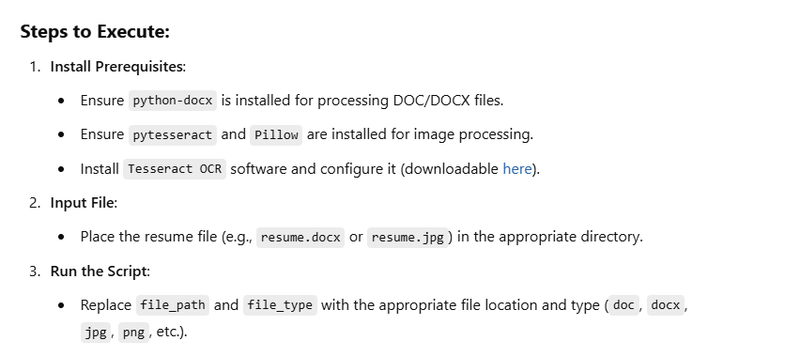

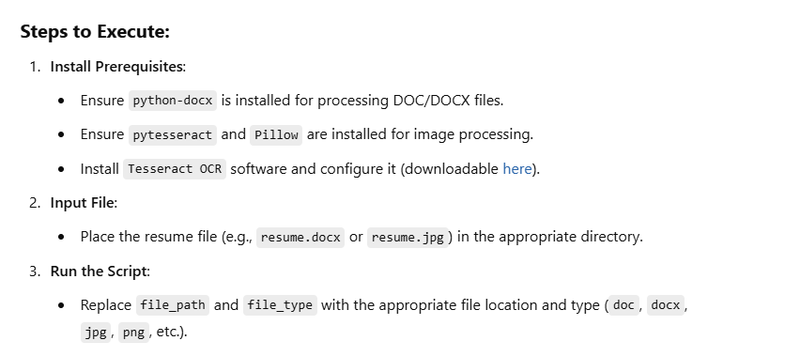

Code for DOC or Image-Based Resume Screening

Install Required Libraries

pip install python-docx pytesseract pillow spacy

import spacy

import re

from typing import Dict, List

from docx import Document

from PIL import Image

import pytesseract

# Load SpaCy model

nlp = spacy.load("en_core_web_sm")

# Regex patterns

EMAIL_REGEX = r"[a-zA-Z0-9+_.-]+@[a-zA-Z0-9.-]+"

PHONE_REGEX = r"\+?\d[\d -]{8,12}\d"

LINKEDIN_REGEX = r"(https?://)?(www\.)?linkedin\.com/in/[a-zA-Z0-9_-]+"

GITHUB_REGEX = r"(https?://)?(www\.)?github\.com/[a-zA-Z0-9_-]+"

def extract_text_from_doc(file_path: str) -> str:

"""Extract text from DOC or DOCX file."""

document = Document(file_path)

return "\n".join([para.text for para in document.paragraphs])

def extract_text_from_image(image_path: str) -> str:

"""Extract text from an image using OCR."""

image = Image.open(image_path)

return pytesseract.image_to_string(image)

# Information extraction functions

def extract_personal_info(text: str) -> Dict[str, str]:

doc = nlp(text)

personal_info = {"Name": None, "Email": None, "Phone": None, "LinkedIn": None, "GitHub": None}

for ent in doc.ents:

if ent.label_ == "PERSON":

personal_info["Name"] = ent.text

break

personal_info["Email"] = re.search(EMAIL_REGEX, text).group(0) if re.search(EMAIL_REGEX, text) else None

personal_info["Phone"] = re.search(PHONE_REGEX, text).group(0) if re.search(PHONE_REGEX, text) else None

personal_info["LinkedIn"] = re.search(LINKEDIN_REGEX, text).group(0) if re.search(LINKEDIN_REGEX, text) else None

personal_info["GitHub"] = re.search(GITHUB_REGEX, text).group(0) if re.search(GITHUB_REGEX, text) else None

return personal_info

def extract_education(text: str) -> List[Dict[str, str]]:

doc = nlp(text)

education = []

for ent in doc.ents:

if ent.label_ in ["ORG", "DATE"]:

education.append({"Institution/Detail": ent.text})

return education

def extract_work_experience(text: str) -> List[Dict[str, str]]:

doc = nlp(text)

work_experience = []

for ent in doc.ents:

if ent.label_ in ["ORG", "PERSON", "DATE"]:

work_experience.append({"Detail": ent.text})

return work_experience

def extract_skills(text: str) -> List[str]:

skills_keywords = ["Python", "Java", "C++", "Data Science", "Machine Learning", "Leadership", "AWS", "SQL"]

return [skill for skill in skills_keywords if skill.lower() in text.lower()]

def extract_certifications(text: str) -> List[str]:

certifications_keywords = ["Certified", "AWS", "PMP", "Scrum", "Azure"]

return [cert for cert in certifications_keywords if cert.lower() in text.lower()]

def extract_projects(text: str) -> List[Dict[str, str]]:

projects_keywords = ["project", "developed", "built", "created", "implemented"]

sentences = text.split(".")

return [{"Project": sentence.strip()} for sentence in sentences if any(keyword in sentence.lower() for keyword in projects_keywords)]

# Main function

def process_resume(text: str) -> Dict[str, List[Dict[str, str]]]:

resume_data = {

"Personal Info": extract_personal_info(text),

"Education": extract_education(text),

"Work Experience": extract_work_experience(text),

"Skills": extract_skills(text),

"Certifications": extract_certifications(text),

"Projects": extract_projects(text),

}

return resume_data

# Input for DOC or Image File

def process_uploaded_resume(file_path: str, file_type: str) -> Dict:

if file_type == "doc" or file_type == "docx":

text = extract_text_from_doc(file_path)

elif file_type in ["png", "jpg", "jpeg"]:

text = extract_text_from_image(file_path)

else:

raise ValueError("Unsupported file type. Please upload a DOC/DOCX or image file.")

# Process extracted text

return process_resume(text)

# Example Usage

file_path = "resume.docx" # Replace with the file path of your DOC/DOCX or image file

file_type = "doc" # Use "doc" or "jpg"/"png" depending on the file format

resume_data = process_uploaded_resume(file_path, file_type)

# Display the extracted resume information

import json

print(json.dumps(resume_data, indent=4))

To filter the extracted resume data for candidates meeting the specific criteria

from datetime import datetime

def filter_candidates(resume_data_list: List[Dict]) -> List[Dict]:

"""

Filters candidates based on the criteria:

- MTech in Education

- More than 5 years of work experience

- Skills include Python

- Minimum 3 projects

"""

filtered_candidates = []

for resume_data in resume_data_list:

# Check for MTech in education

education = resume_data.get("Education", [])

has_mtech = any("MTech" in edu.get("Institution/Detail", "") or "Master's" in edu.get("Institution/Detail", "") for edu in education)

# Calculate total experience in years

work_experience = resume_data.get("Work Experience", [])

total_experience_years = 0

for exp in work_experience:

detail = exp.get("Detail", "")

if " - " in detail:

try:

start_date, end_date = detail.split(" - ")

start_year = int(start_date[-4:])

end_year = datetime.now().year if "Present" in end_date else int(end_date[-4:])

total_experience_years += (end_year - start_year)

except ValueError:

continue

# Check if Python is in skills

skills = resume_data.get("Skills", [])

has_python_skill = "Python" in skills

# Check for minimum 3 projects

projects = resume_data.get("Projects", [])

has_minimum_projects = len(projects) >= 3

# If all criteria are met, add the candidate

if has_mtech and total_experience_years > 5 and has_python_skill and has_minimum_projects:

filtered_candidates.append(resume_data)

return filtered_candidates

# Example: List of resumes (extracted data from multiple resumes)

resumes = [

{

"Personal Info": {"Name": "John Doe", "Email": "john.doe@example.com"},

"Education": [{"Institution/Detail": "MTech in Computer Science, MIT, 2023"}],

"Work Experience": [

{"Detail": "Software Engineer at Google (Jan 2015 - Dec 2020)"},

{"Detail": "Data Scientist at Facebook (Jan 2021 - Present)"}

],

"Skills": ["Python", "Machine Learning", "AWS"],

"Certifications": ["AWS Certified Solutions Architect"],

"Projects": [

{"Project": "Developed an e-commerce platform using Django and React"},

{"Project": "Built a machine learning model to predict customer churn"},

{"Project": "Created a real-time recommendation system"}

]

},

{

"Personal Info": {"Name": "Jane Smith", "Email": "jane.smith@example.com"},

"Education": [{"Institution/Detail": "Bachelor's in Data Science, Stanford University, 2020"}],

"Work Experience": [

{"Detail": "Data Analyst at IBM (Jan 2018 - Dec 2020)"},

{"Detail": "Data Scientist at Tesla (Jan 2021 - Present)"}

],

"Skills": ["SQL", "R", "Machine Learning"],

"Certifications": ["PMP"],

"Projects": [

{"Project": "Implemented a business intelligence dashboard"},

{"Project": "Optimized supply chain processes"}

]

}

]

# Filter candidates

filtered_candidates = filter_candidates(resumes)

# Output the filtered results

import json

print(json.dumps(filtered_candidates, indent=4))

Using LLM

import openai

# Set your OpenAI API key

openai.api_key = "your_openai_api_key"

def evaluate_resume_with_llm(resume_text: str) -> dict:

"""

Evaluates a resume using GPT based on the criteria:

- MTech in Education

- More than 5 years of work experience

- Skills include Python

- Minimum 3 projects

"""

prompt = f"""

Analyze the following resume and answer if the candidate meets these criteria:

1. Does the candidate have an MTech degree or equivalent in their education?

2. Does the candidate have more than 5 years of work experience?

3. Does the candidate have Python as one of their skills?

4. Does the candidate have at least 3 projects mentioned in their resume?

Resume:

{resume_text}

Please respond in JSON format with the keys: "MTech", "Experience > 5 years", "Python Skill", "Projects >= 3".

"""

response = openai.Completion.create(

engine="text-davinci-003", # Or use "gpt-4" if available

prompt=prompt,

max_tokens=200,

temperature=0

)

return response["choices"][0]["text"].strip()

# Example Resumes

resumes = [

"""

Name: John Doe

Education: MTech in Computer Science, MIT, 2023

Work Experience:

- Software Engineer at Google (Jan 2015 - Dec 2020)

- Data Scientist at Facebook (Jan 2021 - Present)

Skills: Python, Machine Learning, AWS

Projects:

1. Developed an e-commerce platform using Django and React.

2. Built a machine learning model to predict customer churn.

3. Created a real-time recommendation system.

""",

"""

Name: Jane Smith

Education: Bachelor's in Data Science, Stanford University, 2020

Work Experience:

- Data Analyst at IBM (Jan 2018 - Dec 2020)

- Data Scientist at Tesla (Jan 2021 - Present)

Skills: SQL, R, Machine Learning

Projects:

1. Implemented a business intelligence dashboard.

2. Optimized supply chain processes.

"""

]

# Process resumes using LLM

for i, resume in enumerate(resumes):

print(f"Resume {i + 1} Evaluation:")

result = evaluate_resume_with_llm(resume)

print(result)

print("-" * 50)

Top comments (0)