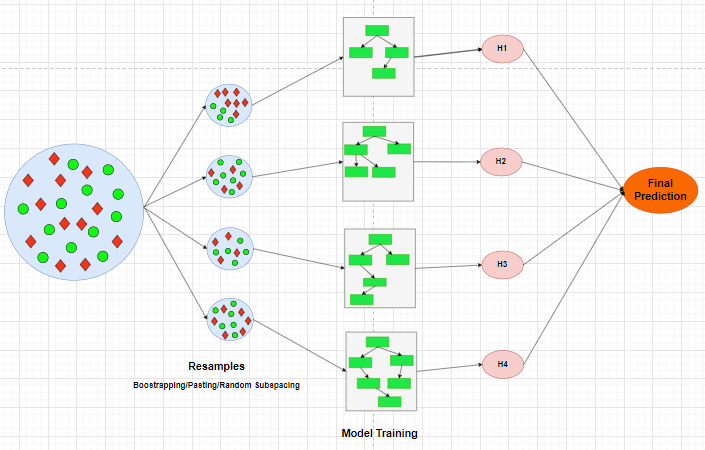

Bootstrap and pasting are both resampling techniques used in machine learning, especially in the context of ensemble learning methods. They involve creating multiple subsets of the original dataset to train multiple models, introducing diversity and reducing overfitting. However, they differ in how they create these subsets. Let's explain both methods with examples:

Bootstrap (Bagging):

Bagging, short for "bootstrap aggregating," creates multiple subsets (bags) of the original dataset by randomly sampling data points with replacement. As a result, a subset may contain the same instance more than once, while other instances may not appear in it at all. Each subset is used to train a separate base learner (e.g., a decision tree), and their predictions are combined to make the final prediction.
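To get a feel for how often an instance is left out, here is a small sketch. It assumes each bootstrap sample has the same size n as the original dataset, which the text above does not require; the helper name prob_left_out is made up for illustration.

```python
# Chance that one particular instance is never drawn in a bootstrap sample.
# Assumption: the sample has the same size n as the original dataset.
def prob_left_out(n: int) -> float:
    # A single draw misses the instance with probability (1 - 1/n),
    # and the n draws are independent of each other.
    return (1 - 1 / n) ** n

for n in (10, 100, 1000):
    print(n, round(prob_left_out(n), 4))
```

Under that assumption the value approaches 1/e (about 0.368) as n grows, so roughly a third of the instances are missing from any one bag.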

Example:

Suppose you have the following dataset of exam scores:

Original Dataset: [80, 75, 90, 85, 92, 78, 88, 93, 89, 84]

When bootstrapping for training multiple models, you might create three bootstrap samples like this:

Bootstrap Sample 1: [75, 85, 90, 93, 89, 78, 80]

Bootstrap Sample 2: [84, 90, 80, 92, 85, 78, 89]

Bootstrap Sample 3: [78, 84, 93, 85, 92, 80, 80]

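Note that Sample 3 contains 80 twice, which can happen only because the sampling is done with replacement. Each of these samples is used to train a different model, and their predictions are combined to make the final prediction.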
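A minimal way to draw samples like these yourself, using only Python's standard library. The function name bootstrap_sample, the sample size of 7, and the seed are illustrative choices, not part of any library; your output will differ from the samples listed above.

```python
import random

scores = [80, 75, 90, 85, 92, 78, 88, 93, 89, 84]

def bootstrap_sample(data, size, rng):
    # random.choices draws WITH replacement, so a value can appear more than once.
    return rng.choices(data, k=size)

rng = random.Random(42)  # arbitrary fixed seed so the run is repeatable
samples = [bootstrap_sample(scores, 7, rng) for _ in range(3)]

for i, sample in enumerate(samples, start=1):
    print(f"Bootstrap Sample {i}: {sample}")
```

Because the draws are independent, a given run may or may not show duplicates in a particular sample; the point is only that duplicates are allowed.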

Pasting:

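Pasting is similar to bagging, but it creates the subsets by randomly sampling data points without replacement. This means that no instance appears more than once within a subset, although the same instance can still show up in several different subsets. Because nothing is drawn twice, each subset has to be smaller than the original dataset; otherwise every subset would simply be the whole dataset. Pasting is typically considered when the dataset is large enough that subsets without repeated instances still differ enough from one another.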

Example:

Using the same original dataset as in the previous example:

Original Dataset: [80, 75, 90, 85, 92, 78, 88, 93, 89, 84]

When pasting for training multiple models, you might create three subsets like this:

Pasting Subset 1: [75, 85, 90, 93, 89, 78, 88]

Pasting Subset 2: [80, 92, 84, 75, 85, 78, 93]

Pasting Subset 3: [84, 88, 80, 92, 85, 93, 89]

Each of these subsets is used to train a different model, and their predictions are combined for the final prediction.
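The same idea can be sketched with the standard library. The function name pasting_subset, the subset size of 7, and the seed are illustrative assumptions; the subsets you get will not match the ones listed above.

```python
import random

scores = [80, 75, 90, 85, 92, 78, 88, 93, 89, 84]

def pasting_subset(data, size, rng):
    # random.sample draws WITHOUT replacement, so no position is picked twice.
    # size must not exceed len(data), otherwise a ValueError is raised.
    return rng.sample(data, size)

rng = random.Random(7)  # arbitrary fixed seed so the run is repeatable
subsets = [pasting_subset(scores, 7, rng) for _ in range(3)]

for i, subset in enumerate(subsets, start=1):
    print(f"Pasting Subset {i}: {subset}")
```

Since all ten scores are distinct, every subset contains seven different values, but a score can still appear in more than one subset.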

In ensemble estimators that expose a bootstrap option, such as scikit-learn's BaggingClassifier, setting bootstrap to True (the default) means that each base learner is trained on a subset of the data sampled with replacement.

When bootstrap is set to False, each base learner is trained on a subset of the data sampled *without replacement*, which is pasting.
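To make the effect of the flag concrete, here is a toy sketch in plain Python. It is not a library API: draw_subset and ensemble_mean are hypothetical helpers, and each "model" is just the mean of its subset, standing in for a real base learner.

```python
import random
from statistics import mean

scores = [80, 75, 90, 85, 92, 78, 88, 93, 89, 84]

def draw_subset(data, size, bootstrap, rng):
    # bootstrap=True  -> sample with replacement (bagging)
    # bootstrap=False -> sample without replacement (pasting)
    if bootstrap:
        return rng.choices(data, k=size)
    return rng.sample(data, size)

def ensemble_mean(data, n_models, size, bootstrap, seed=0):
    rng = random.Random(seed)
    # Toy "training": each model predicts the mean of its own subset.
    predictions = [
        mean(draw_subset(data, size, bootstrap, rng)) for _ in range(n_models)
    ]
    # Combine the individual predictions by averaging them.
    return mean(predictions)

bagging_pred = ensemble_mean(scores, n_models=3, size=7, bootstrap=True)
pasting_pred = ensemble_mean(scores, n_models=3, size=7, bootstrap=False)
print("bagging:", bagging_pred)
print("pasting:", pasting_pred)
```

The only difference between the two calls is how the subsets are drawn; the training and combining steps stay the same.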
