One-Hot Encoding

Label Encoding

Ordinal Encoding

Frequency Encoding

Binary Encoding

Hash Encoding

In machine learning, encoding techniques transform categorical data into a numerical format that models can accept as input. Here are some popular encoding techniques along with examples:

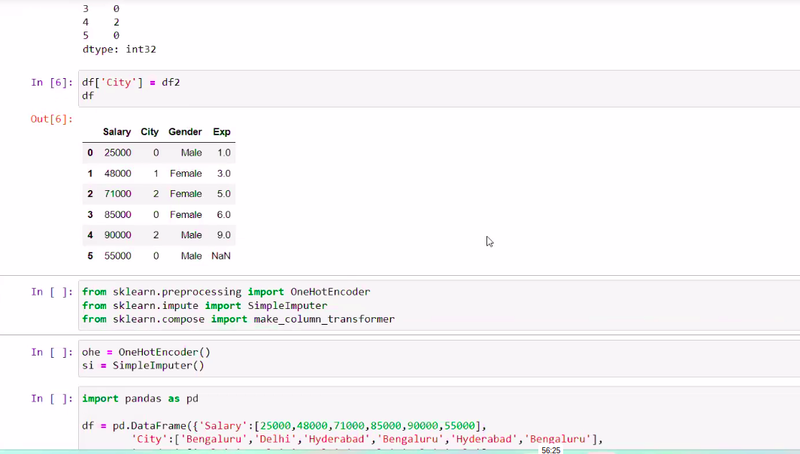

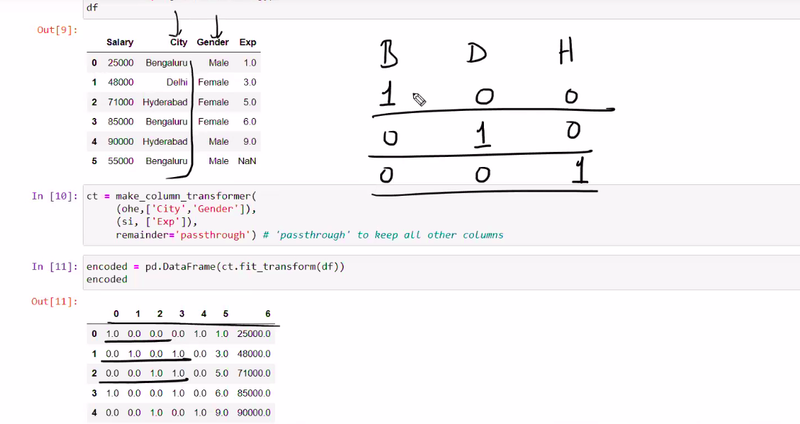

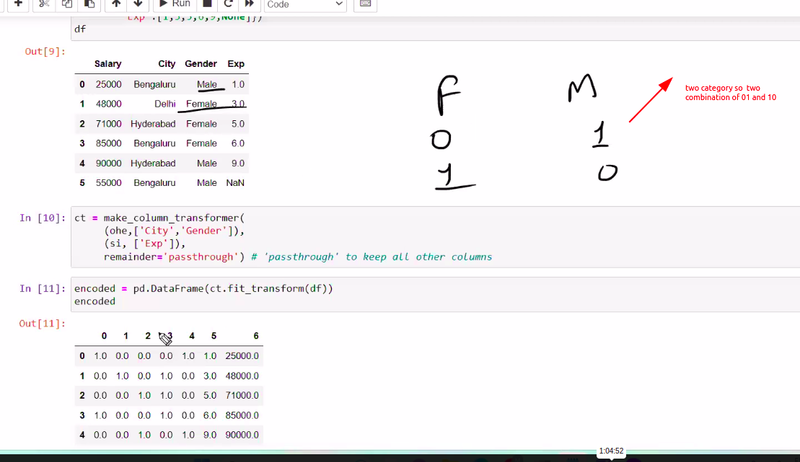

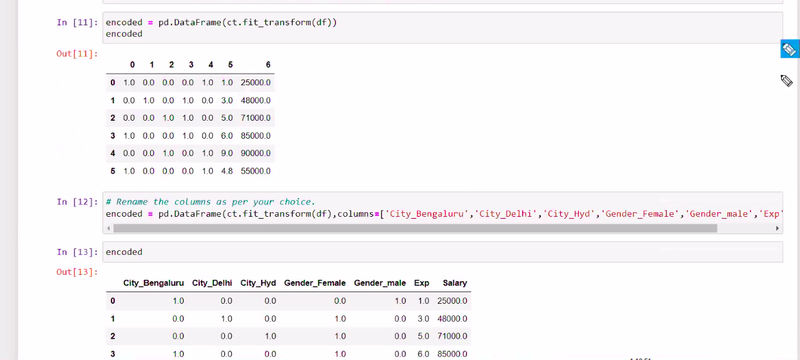

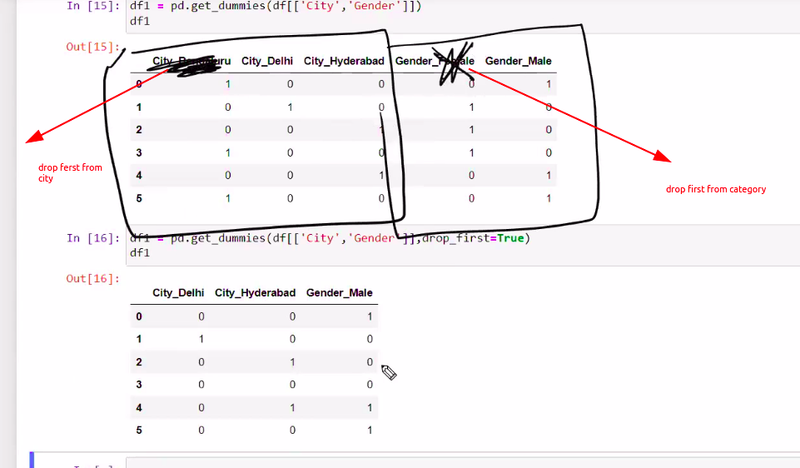

One-Hot Encoding:

One-hot encoding creates a new binary (0 or 1) column for each category of a categorical feature. In each row, the column for that row's category is 1 and every other column is 0, indicating the presence or absence of that category.

Example:

Let's say we have a "Color" feature with categories ['Red', 'Green', 'Blue']. After one-hot encoding, it is transformed into three binary features: "Color_Red", "Color_Green", and "Color_Blue", so a 'Green' value becomes [0, 1, 0].

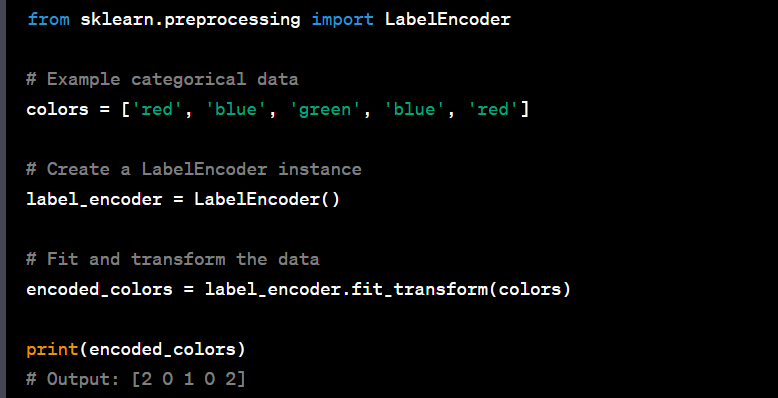

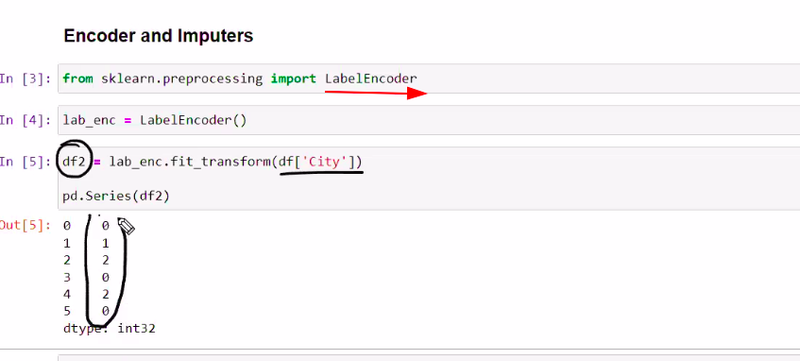

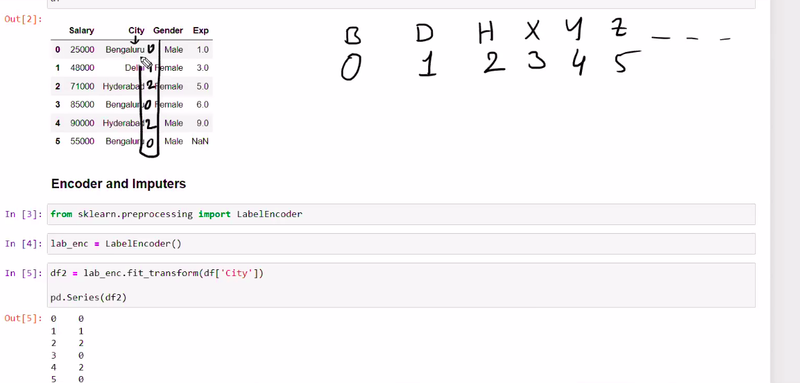
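The Color example can be reproduced with a minimal plain-Python sketch. The helper name `one_hot_encode` and its parameters are illustrative, not a library API; in practice `pandas.get_dummies` or scikit-learn's `OneHotEncoder` do this job.

```python
def one_hot_encode(values, categories, prefix):
    """Return column names and rows with a 1 in the matching category's column."""
    columns = [f"{prefix}_{category}" for category in categories]
    # A value not listed in `categories` gets an all-zero row in this sketch.
    rows = [[1 if value == category else 0 for category in categories] for value in values]
    return columns, rows


colors = ["Red", "Green", "Blue", "Green"]
columns, rows = one_hot_encode(colors, categories=["Red", "Green", "Blue"], prefix="Color")
print(columns)  # ['Color_Red', 'Color_Green', 'Color_Blue']
for color, row in zip(colors, rows):
    print(color, row)  # e.g. Green [0, 1, 0]
```

Each category adds one column, which is why one-hot encoding grows wide on features with many distinct values.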

Label Encoding:

Label encoding assigns a unique integer to each category in a categorical feature. It is primarily used for categorical target variables. The assigned integers carry no intrinsic meaning: scikit-learn's LabelEncoder, for example, numbers the categories in sorted (alphabetical) order, so it does not automatically preserve a natural order such as Small < Medium < Large. For ordinal features, the order should be supplied explicitly, as ordinal encoding does.

Example:

If we have a "Size" feature with the values ['Small', 'Medium', 'Large'], label encoding with alphabetical ordering assigns Large = 0, Medium = 1, Small = 2, so the feature becomes [2, 1, 0] rather than [0, 1, 2].

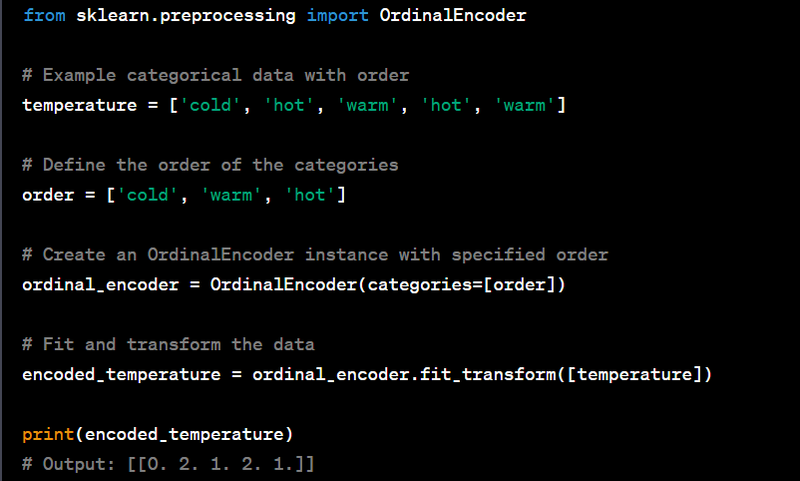

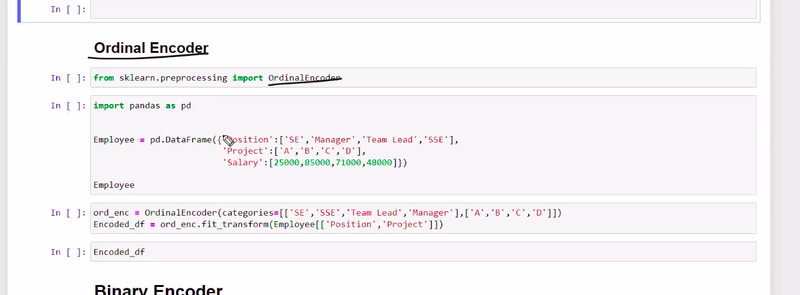

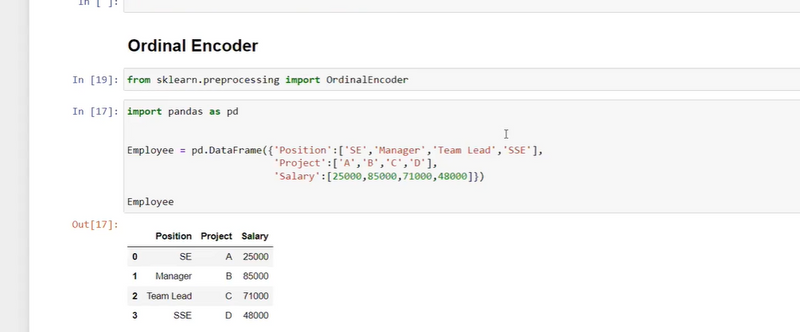

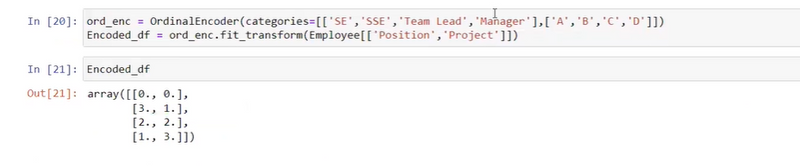
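A minimal plain-Python sketch of label encoding, assuming categories are numbered in sorted order by default (mirroring how scikit-learn's `LabelEncoder` stores its `classes_`). The hypothetical `classes` argument lets you pass an explicit order instead; under that option the Size example comes out as [0, 1, 2], while the sorted default gives [2, 1, 0].

```python
def label_encode(values, classes=None):
    """Map each category to an integer index; returns (codes, classes)."""
    if classes is None:
        # Default: alphabetical order, which ignores any real-world ranking.
        classes = sorted(set(values))
    index = {label: position for position, label in enumerate(classes)}
    return [index[value] for value in values], list(classes)


sizes = ["Small", "Medium", "Large"]
print(label_encode(sizes))  # ([2, 1, 0], ['Large', 'Medium', 'Small'])
print(label_encode(sizes, classes=["Small", "Medium", "Large"]))  # ([0, 1, 2], ['Small', 'Medium', 'Large'])
```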

Ordinal Encoding:

Ordinal encoding is similar to label encoding in that it maps categories to integers, but the order of the categories is specified explicitly, so the integers follow their natural ranking.

Example:

If we have an "Education_Level" feature with categories ["High School", "Bachelor's", "Master's", "PhD"], ordinal encoding might convert them to [1, 2, 3, 4].
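A minimal sketch of ordinal encoding, assuming the order is passed in as a list and numbering starts at 1 to match the example. The names `ordinal_encode` and `EDUCATION_ORDER` are illustrative; scikit-learn's `OrdinalEncoder` takes the order through its `categories` parameter and numbers from 0.

```python
EDUCATION_ORDER = ["High School", "Bachelor's", "Master's", "PhD"]


def ordinal_encode(values, order, start=1):
    """Map each value to its position in an explicitly supplied order."""
    rank = {category: position for position, category in enumerate(order, start=start)}
    # An unseen category raises KeyError rather than silently getting a code.
    return [rank[value] for value in values]


levels = ["Master's", "High School", "PhD", "Bachelor's"]
print(ordinal_encode(levels, EDUCATION_ORDER))  # [3, 1, 4, 2]
```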

Frequency Encoding:

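Frequency encoding replaces each category with its frequency (or raw count) in the dataset. Because it produces a single numeric column, it is useful for high-cardinality categorical features; note that categories with the same count receive the same value.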

Example:

If a "Country" feature contains the values ['USA', 'Canada', 'USA', 'UK', 'Canada'], frequency encoding converts it to [2, 2, 2, 1, 2].
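The Country example can be checked with a short plain-Python sketch built on `collections.Counter`. The function name and the `normalize` flag (relative frequency instead of raw count) are illustrative assumptions, not a library API.

```python
from collections import Counter


def frequency_encode(values, normalize=False):
    """Replace each value with its count, or its share of rows if normalize=True."""
    counts = Counter(values)
    total = len(values)
    return [counts[value] / total if normalize else counts[value] for value in values]


countries = ["USA", "Canada", "USA", "UK", "Canada"]
print(frequency_encode(countries))                  # [2, 2, 2, 1, 2]
print(frequency_encode(countries, normalize=True))  # [0.4, 0.4, 0.4, 0.2, 0.4]
```

Here USA and Canada both become 2, so the model can no longer tell them apart. In a real pipeline the counts would typically be computed on the training data and reused for new rows, with some default for unseen categories.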

Binary Encoding:

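Binary encoding first assigns each category an integer, then writes that integer in binary and stores each binary digit in its own column. For n categories this needs only about log2(n) columns, far fewer than one-hot encoding.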

Example:

If we have a "Gender" feature with categories ['Male', 'Female'], binary encoding might convert it to '00', '01'.
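A minimal sketch of binary encoding, assuming zero-based integer codes assigned in first-appearance order, so n categories need ceil(log2(n)) bit columns. Some library implementations number from 1 and may use one more column. With this scheme the two-category Gender example needs a single column; the two-digit codes above correspond to padding to a wider fixed width. The five colors below are illustrative data.

```python
import math


def binary_encode(values):
    """Give each category a zero-based integer code and split it into bit columns."""
    categories = list(dict.fromkeys(values))  # codes follow first-appearance order (an assumption)
    code = {category: index for index, category in enumerate(categories)}
    width = max(1, math.ceil(math.log2(len(categories))))  # bit columns needed
    rows = [
        [(code[value] >> bit) & 1 for bit in reversed(range(width))]  # most significant bit first
        for value in values
    ]
    return rows, width


colors = ["Red", "Green", "Blue", "Yellow", "Purple"]
rows, width = binary_encode(colors)
print(width)  # 3
for color, row in zip(colors, rows):
    print(color, row)  # e.g. Purple [1, 0, 0]
```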

Hash Encoding:

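Hash encoding passes each category through a hash function and maps the result into a fixed number of buckets (columns), regardless of how many categories exist. Because the number of buckets is fixed, different categories can collide in the same bucket.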

Example:

If we have a "City" feature with different city names, hash encoding will convert each city name into a numerical value based on the hash function.
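A minimal sketch of the hashing idea, using `zlib.crc32` as a stand-in hash. scikit-learn's `FeatureHasher` uses MurmurHash3 instead. Python's built-in `hash()` is avoided because string hashing is randomized per process, so buckets would change between runs. The bucket count of 4 and the city names are arbitrary illustrative choices.

```python
import zlib


def hash_bucket(value, n_buckets):
    """Deterministically map a string to a bucket index in [0, n_buckets)."""
    return zlib.crc32(value.encode("utf-8")) % n_buckets


def hash_encode(values, n_buckets=8):
    """One column per bucket; distinct categories may share (collide in) a bucket."""
    rows = []
    for value in values:
        row = [0] * n_buckets
        row[hash_bucket(value, n_buckets)] = 1
        rows.append(row)
    return rows


cities = ["Paris", "Tokyo", "Lima", "Paris"]
for city, row in zip(cities, hash_encode(cities, n_buckets=4)):
    print(city, row)
```

The output width stays at `n_buckets` no matter how many distinct cities appear, which is the trade-off hash encoding makes in exchange for possible collisions.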

These are some of the commonly used encoding techniques in machine learning. The choice of encoding technique depends on the nature of the data and the requirements of the machine learning model.

OBJECTIVE

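Which encoding technique preserves the order of categories?

Answer: Ordinal Encoding

Which encoding technique converts each category of a categorical feature into its own binary column?

Answer: One-Hot Encoding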

What is feature encoding in machine learning?

a. Converting continuous features to discrete features

b. Transforming categorical data into a numerical format

c. Reducing the dimensionality of data

d. Normalizing data for better visualization

Answer: b. Transforming categorical data into a numerical format

Which of the following encoding methods is suitable for ordinal categorical data?

a. One-Hot Encoding

b. Label Encoding

c. Binary Encoding

d. Frequency Encoding

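Answer: b. Label Encoding (the closest of these options; the category order still has to be set explicitly, as ordinal encoding does)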

In One-Hot Encoding, how many binary columns are created for a categorical feature with "n" unique values?

a. n

b. n - 1

c. n + 1

d. 2n

Answer: a. n

Which encoding technique can lead to the "curse of dimensionality" if used on high-cardinality categorical features?

a. Label Encoding

b. Binary Encoding

c. One-Hot Encoding

d. Frequency Encoding

Answer: c. One-Hot Encoding

When using Label Encoding, what is the potential issue?

a. Increased dimensionality

b. Loss of ordinal information

c. Loss of information for machine learning algorithms

d. Difficulty handling missing values

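Answer: b. Loss of ordinal information (the integers follow an arbitrary, often alphabetical, order rather than the categories' real order; on nominal features they also imply an order that does not exist)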

Which encoding method replaces each category with the number of times it occurs in the dataset?

a. Label Encoding

b. Binary Encoding

c. Count Encoding

d. Target Encoding

Answer: c. Count Encoding

What is the purpose of target encoding in machine learning?

a. Converting numerical features into categorical features

b. Reducing the dimensionality of the dataset

c. Encoding the target variable for regression problems

d. Encoding categorical features based on their relationship with the target variable

Answer: d. Encoding categorical features based on their relationship with the target variable

Which encoding method is most suitable for handling high-cardinality categorical features without increasing dimensionality significantly?

a. Binary Encoding

b. Label Encoding

c. Frequency Encoding

d. Target Encoding

Answer: c. Frequency Encoding

In Binary Encoding, how many binary columns are created for a categorical feature with "n" unique values?

a. n

b. n - 1

c. log2(n)

d. n/2

Answer: c. log2(n), rounded up to a whole number of columns

When should you consider using feature scaling techniques like Min-Max scaling or Standardization after encoding?

a. Only for categorical features

b. Only for numerical features

c. After encoding, for numerical features that require it

d. Before encoding, for categorical features that require it

Answer: c. After encoding, for numerical features that require it
