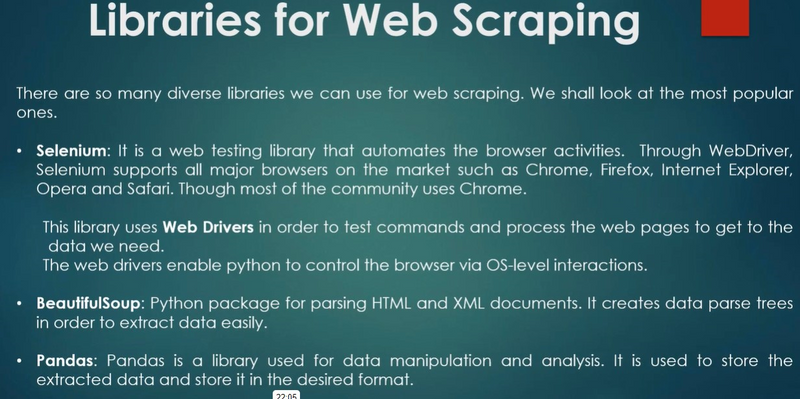

Python has several well-established libraries for web scraping, and each covers a different part of the job: fetching pages, parsing markup, crawling whole sites, or driving a real browser. Which one fits best depends on what you need to scrape. Here are some popular options, with a short example of each:

Beautiful Soup:

Beautiful Soup is a widely used library for parsing HTML and XML documents. It makes it easy to navigate and extract data from web pages.

    from bs4 import BeautifulSoup
    import requests

    # Send an HTTP GET request to the URL
    url = 'https://example.com'
    response = requests.get(url)

    # Parse the HTML content of the page
    soup = BeautifulSoup(response.text, 'html.parser')

    # Extract and print the page title
    title = soup.title.string
    print('Page Title:', title)
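
Beyond the title, a parsed document can be searched with CSS selectors through `select`. The sketch below parses an inline HTML string instead of a live page, so it runs without network access. The markup, the `product` class, and the `extract_products` helper are made up for illustration.

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for a downloaded page
SAMPLE_HTML = """
<html>
  <head><title>Demo Shop</title></head>
  <body>
    <ul>
      <li class="product"><a href="/items/1">Kettle</a></li>
      <li class="product"><a href="/items/2">Toaster</a></li>
      <li class="ad"><a href="/promo">Sale</a></li>
    </ul>
  </body>
</html>
"""


def extract_products(page_source):
    """Return (name, link) pairs for every link inside an li.product element."""
    soup = BeautifulSoup(page_source, 'html.parser')
    # select() takes a CSS selector and returns a list of matching tags
    return [(a.get_text(strip=True), a['href'])
            for a in soup.select('li.product a')]


print(extract_products(SAMPLE_HTML))
```

The `li.ad` entry is skipped because the selector only matches links nested under `li.product`.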

Requests:

The Requests library sends HTTP requests and returns the server's response, including the page content. It does no parsing of its own, so it is usually paired with a parser such as Beautiful Soup.

    import requests

    # Send an HTTP GET request to the URL
    url = 'https://example.com'
    response = requests.get(url)

    # Print the content of the page
    print('Page Content:', response.text)
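
In practice a request can time out, fail to connect, or come back with an error status, and Requests does not apply a timeout unless one is passed explicitly. A minimal sketch of a more defensive fetch follows. The helper name `fetch_html`, the 10-second timeout, and the User-Agent string are assumptions chosen for illustration.

```python
import requests


def fetch_html(url, timeout=10):
    """Return the page body as text, or None if the request fails."""
    headers = {'User-Agent': 'my-scraper/0.1'}  # hypothetical identifier
    try:
        response = requests.get(url, headers=headers, timeout=timeout)
        # Turn 4xx/5xx responses into exceptions instead of parsing error pages
        response.raise_for_status()
    except requests.exceptions.RequestException as exc:
        print('Request failed:', exc)
        return None
    return response.text
```

Calling `fetch_html('https://example.com')` returns the HTML on success. A malformed URL, a timeout, a connection error, or an error status all produce `None`, so the caller has a single failure case to check.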

Scrapy:

Scrapy is a powerful web crawling and scraping framework. It's designed for more complex scraping projects and allows you to define spiders that navigate websites and extract data.

    import scrapy

    class MySpider(scrapy.Spider):
        name = 'example'
        start_urls = ['https://example.com']

        def parse(self, response):
            # Extract data from the page using XPath or CSS selectors
            title = response.xpath('//title/text()').get()
            print('Page Title:', title)

Selenium:

Selenium is a web testing framework that can be used for web scraping, especially for websites that use JavaScript heavily. It can automate browser interactions and data extraction.

    from selenium import webdriver

    # Start a new web browser instance
    driver = webdriver.Chrome()

    # Open a web page
    url = 'https://example.com'
    driver.get(url)

    # Extract data from the page
    title = driver.title
    print('Page Title:', title)

    # Close the browser
    driver.quit()

lxml:

lxml is a library for processing XML and HTML documents. It's known for its speed and efficiency in parsing large documents.

    from lxml import html
    import requests

    # Send an HTTP GET request to the URL
    url = 'https://example.com'
    response = requests.get(url)

    # Parse the HTML content of the page
    # (passing the raw bytes lets lxml detect the encoding itself)
    tree = html.fromstring(response.content)

    # Extract and print the page title
    title = tree.xpath('//title/text()')[0]
    print('Page Title:', title)
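
XPath is most useful when the data is nested, as in a table. The sketch below runs on an inline HTML string so that it needs no network access. The markup, the `prices` table id, and the `extract_prices` helper are invented for illustration.

```python
from lxml import html

# Hypothetical markup standing in for a downloaded page
SAMPLE_HTML = """
<html>
  <body>
    <table id="prices">
      <tr><td>Kettle</td><td>25.00</td></tr>
      <tr><td>Toaster</td><td>40.50</td></tr>
    </table>
  </body>
</html>
"""


def extract_prices(page_source):
    """Return {name: price} for each two-cell row of the table with id 'prices'."""
    tree = html.fromstring(page_source)
    prices = {}
    for row in tree.xpath('//table[@id="prices"]//tr'):
        # text_content() returns all the text inside a cell
        cells = [cell.text_content().strip() for cell in row.xpath('./td')]
        if len(cells) == 2:
            prices[cells[0]] = float(cells[1])
    return prices


print(extract_prices(SAMPLE_HTML))
```

Rows that do not have exactly two cells, such as a header row built from `<th>` elements, are skipped without raising an error.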

PyQuery:

PyQuery provides a jQuery-like syntax for parsing and manipulating XML and HTML documents. It's a convenient choice for those familiar with jQuery.

    from pyquery import PyQuery as pq
    import requests

    # Send an HTTP GET request to the URL
    url = 'https://example.com'
    response = requests.get(url)

    # Parse the HTML content of the page
    doc = pq(response.text)

    # Extract and print the page title
    title = doc('title').text()
    print('Page Title:', title)

Together, these libraries cover the full range of scraping work, from basic HTML parsing to site-wide crawling and browser automation. Which one to choose depends on your project's requirements and on how familiar you are with each library's features and syntax.
