Supervised Learning

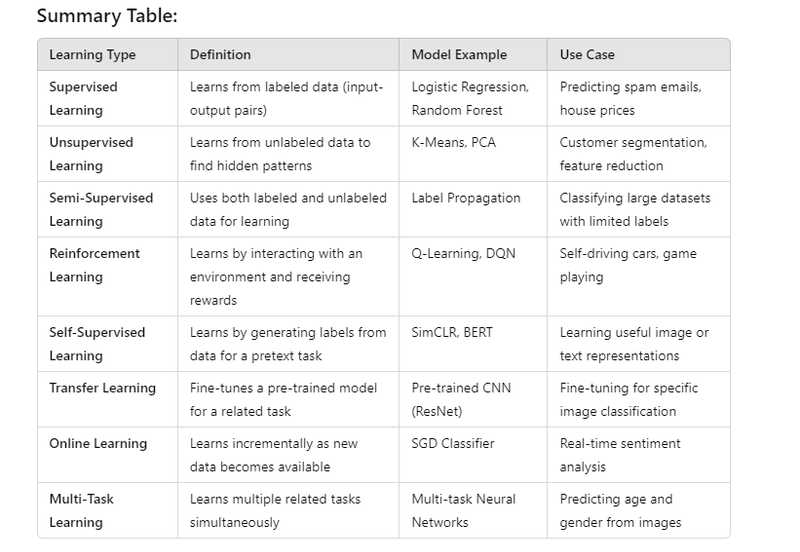

Definition: In supervised learning, the model learns from labeled data (input-output pairs). The goal is to learn a mapping from inputs to outputs that also holds for inputs the model has not seen before.

Types: Includes classification and regression tasks.

Examples:

Classification (Logistic Regression):

Use Case: Predict whether an email is spam or not (binary classification).

Model: Logistic Regression.

from sklearn.linear_model import LogisticRegression

model = LogisticRegression()

model.fit(X_train, y_train)

predictions = model.predict(X_test)
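The snippet above assumes that `X_train`, `y_train`, and `X_test` already exist. As a minimal end-to-end sketch, the version below generates a synthetic binary dataset with `make_classification` as a stand-in for real email features (an assumption for illustration, not real spam data) and also reads out class probabilities:

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic stand-in for email features; label 1 plays the role of "spam".
X, y = make_classification(n_samples=500, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)

predictions = model.predict(X_test)                    # hard 0/1 labels
spam_probability = model.predict_proba(X_test)[:, 1]   # P(class == 1)
accuracy = model.score(X_test, y_test)                 # fraction classified correctly
print(f"Test accuracy: {accuracy:.2f}")
```

With real emails, the feature matrix would typically come from a text vectorizer rather than from random numbers.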

Regression (Linear Regression):

Use Case: Predict house prices based on features like size, number of rooms, and location.

Model: Linear Regression.

from sklearn.linear_model import LinearRegression

model = LinearRegression()

model.fit(X_train, y_train)

predictions = model.predict(X_test)
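To make the regression case concrete, here is a small sketch on made-up housing data. The price formula, the feature ranges, and the noise level are all invented for illustration; a categorical feature such as location would need to be encoded numerically before it could be added.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)

# Hypothetical data: price depends linearly on size and room count, plus noise.
size = rng.uniform(50, 250, 300)    # square meters
rooms = rng.integers(1, 7, 300)     # number of rooms
price = 1500 * size + 10000 * rooms + rng.normal(0, 5000, 300)

X = np.column_stack([size, rooms])
X_train, X_test, y_train, y_test = train_test_split(
    X, price, test_size=0.2, random_state=0
)

model = LinearRegression()
model.fit(X_train, y_train)

predictions = model.predict(X_test)
r2 = model.score(X_test, y_test)    # coefficient of determination on held-out data
print("Learned coefficients:", model.coef_)
print(f"R^2 on test set: {r2:.3f}")
```

Because the synthetic prices really are linear in the features, the learned coefficient for size should land close to the value used to generate the data.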

Unsupervised Learning

Definition: In unsupervised learning, the model learns from unlabeled data. The goal is to find hidden patterns or structures in the data.

Types: Includes clustering, dimensionality reduction, and association tasks.

Examples:

Clustering (K-Means):

Use Case: Group customers into different segments based on purchasing behavior.

Model: K-Means Clustering.

from sklearn.cluster import KMeans

model = KMeans(n_clusters=3)

model.fit(X_train)

clusters = model.predict(X_train)
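A runnable sketch of the same idea, using `make_blobs` to fabricate three well-separated groups in place of real customer data (the dataset and the choice of three clusters are assumptions for illustration):

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

# Synthetic stand-in for customer features such as spend and visit frequency.
X, _ = make_blobs(n_samples=300, centers=3, n_features=2, random_state=0)

model = KMeans(n_clusters=3, n_init=10, random_state=0)
clusters = model.fit_predict(X)     # cluster index for every sample
centers = model.cluster_centers_    # one centroid per cluster

print("Cluster sizes:", [int((clusters == k).sum()) for k in range(3)])
```

No labels are passed to the model at any point; the grouping comes purely from distances between samples.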

Dimensionality Reduction (PCA):

Use Case: Reduce the number of features in a high-dimensional dataset while retaining important information.

Model: Principal Component Analysis (PCA).

from sklearn.decomposition import PCA

model = PCA(n_components=2)

reduced_data = model.fit_transform(X_train)
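How much information survives the reduction can be checked through `explained_variance_ratio_`. The sketch below builds a 10-dimensional dataset that secretly varies along only two directions (a constructed example, chosen so that two components are enough):

```python
import numpy as np
from sklearn.decomposition import PCA

rng = np.random.default_rng(0)

# 200 samples that live near a 2-D plane embedded in 10-D space.
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 10))
X = latent @ mixing + 0.05 * rng.normal(size=(200, 10))

model = PCA(n_components=2)
reduced_data = model.fit_transform(X)

retained = model.explained_variance_ratio_.sum()
print("Reduced shape:", reduced_data.shape)
print(f"Variance retained by 2 components: {retained:.3f}")
```

On real data the retained fraction is usually lower, and it is a reasonable guide for choosing `n_components`.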

Semi-Supervised Learning

Definition: In semi-supervised learning, the model learns from a small amount of labeled data and a large amount of unlabeled data. This is useful when labeling data is expensive or time-consuming.

Example:

Semi-Supervised Learning (Label Propagation):

Use Case: Classify large datasets of customer reviews where only a small subset is labeled as positive or negative.

Model: Label Propagation.

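import numpy as np

from sklearn.semi_supervised import LabelPropagation

# Unlabeled samples must be passed to fit() explicitly, marked with the label -1
X_all = np.vstack([X_labeled, X_unlabeled])

y_all = np.concatenate([y_labeled, np.full(len(X_unlabeled), -1)])

model = LabelPropagation()

model.fit(X_all, y_all)

predictions = model.predict(X_unlabeled)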

Reinforcement Learning

Definition: In reinforcement learning, the model (called an agent) learns by interacting with an environment. The agent receives rewards or penalties based on its actions and learns to maximize long-term reward.

Types: Includes Q-learning, Deep Q-Networks (DQN), and policy gradients.

Example:

Q-Learning (Reinforcement Learning):

Use Case: Train a self-driving car to navigate through traffic by maximizing a reward (e.g., minimizing time to reach the destination).

Model: Q-Learning Algorithm.

import numpy as np

Q = np.zeros((state_size, action_size)) # Initialize Q-table with zeros

for episode in range(num_episodes):

    # Loop through actions, update Q-values based on rewards, and learn the optimal policy

    pass
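The loop body above is only a placeholder. As a rough sketch of what it might contain, the example below runs tabular Q-learning on a hypothetical five-cell corridor in which the agent starts at the left end and is rewarded for reaching the right end. The environment, the `step` helper, and the hyperparameters are all invented for illustration and are far simpler than a driving task.

```python
import numpy as np

rng = np.random.default_rng(0)

state_size, action_size = 5, 2        # states 0..4; action 0 = left, 1 = right
goal = state_size - 1
alpha, gamma, epsilon = 0.1, 0.9, 0.2  # learning rate, discount, exploration rate
num_episodes = 500

def step(state, action):
    """Hypothetical environment: move one cell; reward 1.0 on reaching the goal."""
    next_state = max(0, state - 1) if action == 0 else min(goal, state + 1)
    reward = 1.0 if next_state == goal else 0.0
    return next_state, reward, next_state == goal

Q = np.zeros((state_size, action_size))  # Initialize Q-table with zeros

for episode in range(num_episodes):
    state = 0
    for _ in range(100):  # cap the episode length
        if rng.random() < epsilon:
            action = int(rng.integers(action_size))   # explore
        else:
            best = np.flatnonzero(Q[state] == Q[state].max())
            action = int(rng.choice(best))            # exploit, ties broken randomly
        next_state, reward, done = step(state, action)
        # Q-learning update: move Q toward reward + discounted best future value.
        target = reward + gamma * np.max(Q[next_state]) * (not done)
        Q[state, action] += alpha * (target - Q[state, action])
        state = next_state
        if done:
            break

policy = np.argmax(Q, axis=1)  # greedy action per state
print("Greedy policy (0 = left, 1 = right):", policy)
```

The update rule uses the best next action regardless of which action the agent actually takes next, which is what makes Q-learning an off-policy method.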

Self-Supervised Learning

Definition: In self-supervised learning, the model generates its own labels from the data and learns through a pretext task. This is often used for representation learning.

Example:

Self-Supervised Learning (Contrastive Learning):

Use Case: Learn useful image representations by predicting whether two images are similar or not, without labeled data.

Model: SimCLR (Simple Framework for Contrastive Learning).

In SimCLR, the model learns to pull two augmented views of the same image closer together and to push views of different images apart in the representation space.
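The contrastive objective can be sketched in a few lines of NumPy. The function below is a simplified take on the normalized temperature-scaled cross-entropy (NT-Xent) loss used by SimCLR; the function name, the temperature value, and the random vectors standing in for encoder outputs are assumptions for illustration, and no encoder or image augmentation is included.

```python
import numpy as np

def nt_xent_loss(z_a, z_b, temperature=0.5):
    """Contrastive loss for paired embeddings.

    Row i of z_a and row i of z_b are embeddings of two views of the same image.
    """
    n = len(z_a)
    z = np.concatenate([z_a, z_b], axis=0)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)   # cosine similarity via dot product
    sim = (z @ z.T) / temperature
    np.fill_diagonal(sim, -np.inf)                     # a sample is never its own negative

    # Index of the positive partner for each of the 2n rows.
    positives = np.concatenate([np.arange(n, 2 * n), np.arange(0, n)])

    # Row-wise log-softmax, computed stably.
    row_max = sim.max(axis=1, keepdims=True)
    log_prob = sim - row_max - np.log(np.exp(sim - row_max).sum(axis=1, keepdims=True))
    return -log_prob[np.arange(2 * n), positives].mean()

rng = np.random.default_rng(0)
views = rng.normal(size=(8, 16))                       # stand-in for encoder outputs

aligned = nt_xent_loss(views, views + 0.01 * rng.normal(size=(8, 16)))
unrelated = nt_xent_loss(views, rng.normal(size=(8, 16)))
print(f"Loss with matching views: {aligned:.3f}, with unrelated views: {unrelated:.3f}")
```

The loss is lower when paired rows are close to each other, which is the signal that pushes an encoder to map different views of one image to nearby points.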

Transfer Learning

Definition: Transfer learning takes a model pre-trained on one task and fine-tunes it for another, related task. This is particularly useful when labeled data is scarce in the target domain.

Example:

Transfer Learning (Pre-trained CNN):

Use Case: Use a pre-trained image classifier (like ResNet) and fine-tune it for a specific classification task (e.g., identifying different types of flowers).

Model: Pre-trained Convolutional Neural Network (CNN) like ResNet.

from tensorflow.keras.applications import ResNet50

model = ResNet50(weights='imagenet', include_top=False)
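Loading the backbone is only the first step. One common way to continue, sketched below under the assumption of 224x224 RGB inputs, is to freeze the pre-trained layers and train a small new classification head on top. The function name `build_flower_classifier` and the `num_classes` parameter are hypothetical names chosen for this example.

```python
from tensorflow import keras
from tensorflow.keras import layers
from tensorflow.keras.applications import ResNet50

def build_flower_classifier(num_classes, weights="imagenet"):
    """Frozen ResNet50 backbone with a new, trainable classification head."""
    base = ResNet50(weights=weights, include_top=False, input_shape=(224, 224, 3))
    base.trainable = False                        # keep the pre-trained features fixed

    inputs = keras.Input(shape=(224, 224, 3))
    x = base(inputs, training=False)              # run batch norm layers in inference mode
    x = layers.GlobalAveragePooling2D()(x)        # collapse the spatial dimensions
    outputs = layers.Dense(num_classes, activation="softmax")(x)

    model = keras.Model(inputs, outputs)
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Calling `build_flower_classifier(5)` would download the ImageNet weights on first use, after which `model.fit` trains only the final dense layer. Input images should be passed through `tensorflow.keras.applications.resnet50.preprocess_input` first, and some of the top backbone layers can be unfrozen later with a low learning rate if more adaptation is needed.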

Online Learning

Definition: Online learning (or incremental learning) involves training a model in a sequential or incremental fashion. The model updates itself as new data becomes available, without requiring a complete retraining.

Example:

Online Learning (SGD Classifier):

Use Case: Continuously update a classifier as new data streams in, such as real-time sentiment analysis for social media posts.

Model: Stochastic Gradient Descent (SGD) Classifier.

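import numpy as np

from sklearn.linear_model import SGDClassifier

model = SGDClassifier()

# The first partial_fit call must list every class; call partial_fit again for each new batch
model.partial_fit(X_train, y_train, classes=np.unique(y_train))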
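A single `partial_fit` call does not show the incremental part. The sketch below simulates a stream by feeding a synthetic dataset to the model in batches of 100; the dataset and the batch size are arbitrary choices for illustration.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import SGDClassifier

# Synthetic stand-in for a stream of featurized posts with binary sentiment labels.
X, y = make_classification(n_samples=1000, n_features=20, random_state=0)
X_stream, y_stream = X[:800], y[:800]
X_test, y_test = X[800:], y[800:]

model = SGDClassifier(random_state=0)
classes = np.unique(y)   # all labels the model will ever see

batch_size = 100
for start in range(0, len(X_stream), batch_size):
    X_batch = X_stream[start:start + batch_size]
    y_batch = y_stream[start:start + batch_size]
    # Each call updates the existing weights instead of retraining from scratch.
    model.partial_fit(X_batch, y_batch, classes=classes)

accuracy = model.score(X_test, y_test)
print(f"Accuracy after streaming 8 batches: {accuracy:.2f}")
```

In a real streaming setup the batches would arrive over time, and the model could serve predictions between updates.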

Multi-Task Learning

Definition: Multi-task learning trains a model to learn multiple related tasks simultaneously. The tasks benefit from shared representations or knowledge.

Example:

Multi-Task Learning (Neural Networks):

Use Case: Train a neural network to predict both the age and gender of a person from their image, where age and gender tasks share some common features.

Model: Multi-task Neural Network.
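The shared-representation idea can be illustrated with a tiny NumPy network: one hidden layer shared by both tasks, feeding a regression head (age) and a binary classification head (gender). The random features standing in for image embeddings, the target formulas, the layer sizes, and the learning rate are all assumptions for this sketch, not a realistic image model.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for image features and the two targets.
n, d, hidden = 200, 8, 16
X = rng.normal(size=(n, d))
age = 2.0 * X[:, 0] + X[:, 1] + rng.normal(scale=0.1, size=n)   # regression target
gender = (X[:, 1] + X[:, 2] > 0).astype(float)                  # binary target

W1 = rng.normal(scale=0.1, size=(d, hidden))   # shared layer weights
b1 = np.zeros(hidden)
w_age = rng.normal(scale=0.1, size=hidden)     # age head
b_age = 0.0
w_gen = rng.normal(scale=0.1, size=hidden)     # gender head
b_gen = 0.0

def forward(X):
    h = np.tanh(X @ W1 + b1)                               # shared representation
    age_pred = h @ w_age + b_age
    gender_prob = 1.0 / (1.0 + np.exp(-(h @ w_gen + b_gen)))
    return h, age_pred, gender_prob

def total_loss(X):
    _, a, p = forward(X)
    mse = np.mean((a - age) ** 2)
    bce = -np.mean(gender * np.log(p + 1e-12) + (1 - gender) * np.log(1 - p + 1e-12))
    return mse + bce                                       # both tasks contribute equally

initial_loss = total_loss(X)
lr = 0.02
for _ in range(1000):
    h, a, p = forward(X)
    d_age = 2.0 * (a - age) / n        # gradient of MSE w.r.t. age predictions
    d_gen = (p - gender) / n           # gradient of BCE w.r.t. gender logits
    # The shared layer receives gradient from both heads.
    d_h = np.outer(d_age, w_age) + np.outer(d_gen, w_gen)
    d_pre = d_h * (1.0 - h ** 2)       # backprop through tanh
    w_age -= lr * (h.T @ d_age)
    b_age -= lr * d_age.sum()
    w_gen -= lr * (h.T @ d_gen)
    b_gen -= lr * d_gen.sum()
    W1 -= lr * (X.T @ d_pre)
    b1 -= lr * d_pre.sum(axis=0)

final_loss = total_loss(X)
print(f"Combined loss before: {initial_loss:.3f}, after: {final_loss:.3f}")
```

The key line is the sum inside `d_h`: the shared weights are updated by the errors of both tasks at once, which is how one task can inform the other. In practice the two loss terms are often weighted so that neither task dominates.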
