Why Do We Use TensorFlow Reshape?


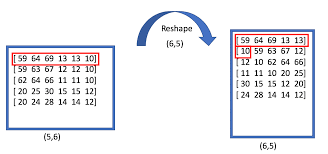

The built-in reshape() function in TensorFlow lets you change a tensor's shape to match the input requirements of an operation or layer, while keeping its data consistent.

- It makes it possible to build models that work with complex, multidimensional data. Checking tensor shapes before and after a reshape also makes it easier and faster to debug shape mismatches between the layers of a neural network.

- It gives you the flexibility to try out different arrangements of the same data, viewing it as a vector, a matrix, or a higher-dimensional tensor, and to design solutions for a wide range of AI-related problems.

- TensorFlow is an open-source platform, so ideas from the community continually improve the quality of the solutions it provides.

- The reshape operation enables users to build many new use cases.

TensorFlow's tf.reshape is a powerful operation that changes the shape of a tensor while keeping its elements, and their row-major order, unchanged. Reshaping is common in deep learning and machine learning tasks. Here are some key use cases with examples:

Syntax

```python
tensorflow.reshape(tensor, shape, name=None)
```

Parameters

tensor: the tensor that is to be reshaped

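shape: the shape of the output tensor; at most one dimension may be -1, in which case TensorFlow infers its size from the total number of elements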

name: operation name (optional)
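A minimal sketch of how shape interacts with the data, using a made-up tensor t: tf.reshape reads the elements in row-major order (last axis fastest) and refills them into the new shape, and a single -1 tells TensorFlow to infer that dimension.

```python
import tensorflow as tf

# Hypothetical example tensor with 6 elements
t = tf.constant([1, 2, 3, 4, 5, 6])

# Elements are laid out row by row in the new shape
m = tf.reshape(t, [2, 3])
print(m.numpy().tolist())  # [[1, 2, 3], [4, 5, 6]]

# -1 lets TensorFlow infer a dimension: 6 elements / 2 columns = 3 rows
inferred = tf.reshape(t, [-1, 2])
print(inferred.shape)  # (3, 2)

# Flattening again restores the original order
back = tf.reshape(m, [-1])
print(back.numpy().tolist())  # [1, 2, 3, 4, 5, 6]
```

Because the order never changes, reshaping back and forth is lossless.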

```python
import tensorflow as tf

a = tf.constant([3, 4, 8, 1, 7, 2])  # define a tensor of shape [6]
b = tf.constant([[7, 8, 9, 10], [4, 5, 6, 7]])  # define a tensor of shape [2, 4]

print("Shape of tensor a:", a.get_shape())
print("Shape of tensor b:", b.get_shape())

# store the output in new tensors
aNew = tf.reshape(a, [2, 3])
bNew = tf.reshape(b, [4, 2])

# print the shape of output tensors
print("Tensor a after reshaping:", aNew.get_shape())
print("Tensor b after reshaping:", bNew.get_shape())
```

Output

```
Shape of tensor a: (6,)
Shape of tensor b: (2, 4)
Tensor a after reshaping: (2, 3)
Tensor b after reshaping: (4, 2)
```
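The target shape must hold exactly as many elements as the input. A quick sketch with the same tensor a: asking for [4, 2] (8 elements) from a 6-element tensor fails. In eager mode this surfaces as tf.errors.InvalidArgumentError; the exact message text may vary between TensorFlow versions.

```python
import tensorflow as tf

a = tf.constant([3, 4, 8, 1, 7, 2])  # 6 elements

try:
    tf.reshape(a, [4, 2])  # 4 * 2 = 8 elements, which does not match 6
    reshape_failed = False
except tf.errors.InvalidArgumentError as err:
    reshape_failed = True
    print("Reshape failed:", err.message)

# A compatible shape works: 3 * 2 = 6 elements
ok = tf.reshape(a, [3, 2])
print(ok.shape)  # (3, 2)
```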

Flattening a tensor

```python
import tensorflow as tf

a = tf.constant([[3, 4], [7, 8], [1, 2], [9, 10], [11, 12]])  # define a tensor of shape [5, 2]
b = tf.constant([[7, 8, 9, 10], [4, 5, 6, 7]])  # define a tensor of shape [2, 4]

print("Shape of tensor a:", a.get_shape())
print("Shape of tensor b:", b.get_shape())

# flatten both the arrays
aNew = tf.reshape(a, [-1])
bNew = tf.reshape(b, [-1])

# print the shape of output tensors
print("Tensor a after reshaping:", aNew.get_shape())
print("Tensor b after reshaping:", bNew.get_shape())
```

Output

```
Shape of tensor a: (5, 2)
Shape of tensor b: (2, 4)
Tensor a after reshaping: (10,)
Tensor b after reshaping: (8,)
```
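-1 is not limited to flattening. A sketch using the same tensor a: when -1 appears alongside other sizes, TensorFlow divides the total element count by the product of the known sizes to find the missing one.

```python
import tensorflow as tf

a = tf.constant([[3, 4], [7, 8], [1, 2], [9, 10], [11, 12]])  # shape (5, 2), 10 elements

# 10 elements / 5 columns -> the -1 dimension becomes 2
rows_of_five = tf.reshape(a, [-1, 5])
print(rows_of_five.shape)             # (2, 5)
print(rows_of_five.numpy().tolist())  # [[3, 4, 7, 8, 1], [2, 9, 10, 11, 12]]

# 10 elements / 1 column -> a column vector of shape (10, 1)
column = tf.reshape(a, [-1, 1])
print(column.shape)  # (10, 1)
```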

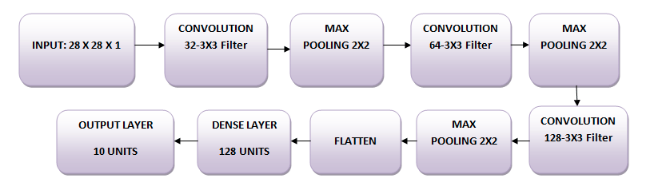

Preparing Data for Neural Networks:

```python
import tensorflow as tf

# Example: Flatten a 28x28 image to a vector of size 784
# tf.random.normal already returns a tensor, so no tf.constant wrapper is needed
image = tf.random.normal(shape=(1, 28, 28, 1))

flat_image = tf.reshape(image, shape=(1, 28 * 28))

print("Original Shape:", image.shape)
print("Reshaped Shape:", flat_image.shape)
```

Output

```
Original Shape: (1, 28, 28, 1)
Reshaped Shape: (1, 784)
```
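The example above hard-codes a batch size of 1. A sketch of a batch-agnostic version (the helper name flatten_images and the batch sizes are illustrative assumptions): putting -1 in the batch position lets the same call work for any number of 28x28 images.

```python
import tensorflow as tf

def flatten_images(images):
    # Hypothetical helper: (batch, 28, 28, 1) -> (batch, 784); -1 infers the batch size
    return tf.reshape(images, shape=(-1, 28 * 28))

for batch_size in (1, 16, 64):
    batch = tf.random.normal(shape=(batch_size, 28, 28, 1))
    print(batch_size, flatten_images(batch).shape)  # e.g. "16 (16, 784)"

# Each row holds one image's pixels in row-major order
images = tf.random.normal(shape=(4, 28, 28, 1))
flat = flatten_images(images)
print(bool(tf.reduce_all(flat[2] == tf.reshape(images[2], [-1]))))  # True
```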

Aligning Tensors for Mathematical Operations:

```python
import tensorflow as tf

# Example: Matrix multiplication with reshaping
# The values of matrix_a arrive as a flat vector of 4 elements
vector_a = tf.constant([1, 2, 3, 4])

matrix_b = tf.constant([[5, 6], [7, 8]])

# Reshape the vector into a 2x2 matrix so its shape aligns with matrix_b
matrix_a = tf.reshape(vector_a, shape=(2, 2))

result = tf.matmul(matrix_a, matrix_b)

print("Result:", result.numpy())
```

Output

```
Result: [[19 22]
 [43 50]]
```
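Reshaping a 1-D tensor into a column vector is another common way to make it compatible with tf.matmul, which expects both operands to have rank 2 or higher. A short sketch with a made-up vector v:

```python
import tensorflow as tf

matrix_b = tf.constant([[5, 6], [7, 8]])
v = tf.constant([1, 2])  # hypothetical vector, shape (2,)

# tf.matmul needs rank >= 2, so turn v into a (2, 1) column vector
v_column = tf.reshape(v, shape=(2, 1))

product = tf.matmul(matrix_b, v_column)
print(product.numpy().tolist())  # [[17], [23]]

# Reshape back to a flat vector if a 1-D result is needed
flat_product = tf.reshape(product, [-1])
print(flat_product.numpy().tolist())  # [17, 23]
```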

Adjusting Tensor Shape for Specific Operations:

```python
import tensorflow as tf

# Example: Reshape for a convolutional layer input
input_data = tf.constant([[1, 2, 3], [4, 5, 6], [7, 8, 9]], dtype=tf.float32)

# Reshape input_data to 4D tensor (batch size, height, width, channels)
reshaped_input = tf.reshape(input_data, [1, 3, 3, 1])

print("Original Shape:", input_data.shape)
print("Reshaped Shape:", reshaped_input.shape)
```

Output

```
Original Shape: (3, 3)
Reshaped Shape: (1, 3, 3, 1)
```

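In CNNs, input tensors are typically 4D, with dimensions representing batch size, height, width, and channels (the channels-last layout TensorFlow uses by default). Here, reshaping converts a single 3x3 grayscale image from 2D into 4D by adding a batch dimension and a channel dimension, each of size 1.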
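To check that the reshaped tensor is accepted by a convolution, here is a small sketch that passes it to tf.nn.conv2d. The 2x2 all-ones filter is an assumption chosen so the result is easy to verify by hand: each output value is the sum of one 2x2 window of the input.

```python
import tensorflow as tf

input_data = tf.constant([[1, 2, 3], [4, 5, 6], [7, 8, 9]], dtype=tf.float32)
x = tf.reshape(input_data, [1, 3, 3, 1])  # (batch, height, width, channels)

# Hypothetical filter of shape (filter_height, filter_width, in_channels, out_channels)
kernel = tf.ones([2, 2, 1, 1])

y = tf.nn.conv2d(x, kernel, strides=1, padding="VALID")
print(y.shape)  # (1, 2, 2, 1)

# Drop the batch and channel dimensions to read the 2x2 result
window_sums = tf.reshape(y, [2, 2])
print(window_sums.numpy().tolist())  # [[12.0, 16.0], [24.0, 28.0]]
```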

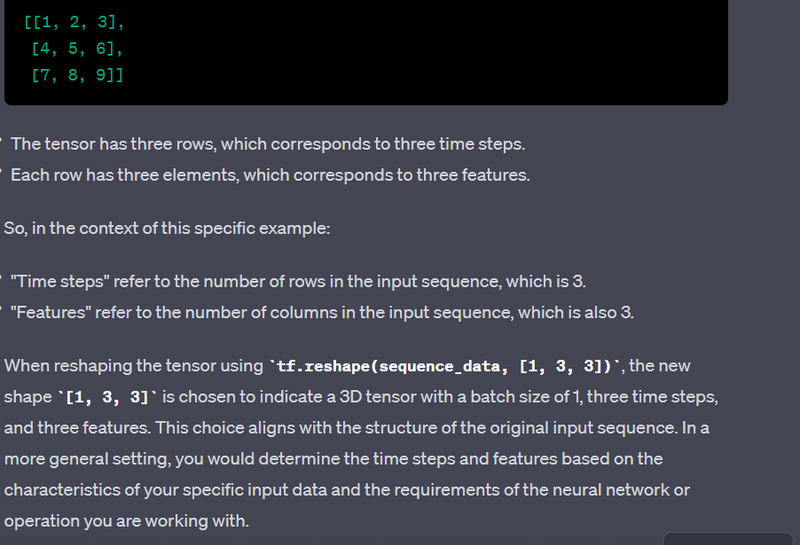

Adjusting Tensor Shape in Recurrent Neural Networks (RNNs)

```python
import tensorflow as tf

# Example: Reshape for an RNN input sequence
sequence_data = tf.constant([[1, 2, 3], [4, 5, 6], [7, 8, 9]], dtype=tf.float32)

# Reshape sequence_data to 3D tensor (batch size, time steps, features)
reshaped_sequence = tf.reshape(sequence_data, [1, 3, 3])

print("Original Shape:", sequence_data.shape)
print("Reshaped Shape:", reshaped_sequence.shape)
```

Output

```
Original Shape: (3, 3)
Reshaped Shape: (1, 3, 3)
```

In RNNs, input tensors are often 3D, where the dimensions represent batch size, time steps, and features. Reshaping is used to adapt the input data to this format.
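The target shape decides how the values are interpreted. A sketch with the same 3x3 data: [1, 3, 3] treats it as one sequence of 3 time steps with 3 features each, while [3, 3, 1] treats it as 3 separate sequences of 3 single-feature time steps. Which one is right depends on what the rows of your data mean.

```python
import tensorflow as tf

sequence_data = tf.constant([[1, 2, 3], [4, 5, 6], [7, 8, 9]], dtype=tf.float32)

# One sequence: each row becomes one time step with 3 features
one_sequence = tf.reshape(sequence_data, [1, 3, 3])
print(one_sequence[0, 0].numpy().tolist())  # first time step: [1.0, 2.0, 3.0]

# Three sequences: each row becomes its own sequence of 3 single-feature steps
three_sequences = tf.reshape(sequence_data, [3, 3, 1])
print(three_sequences[0, 0].numpy().tolist())     # first step of first sequence: [1.0]
print(three_sequences[1, :, 0].numpy().tolist())  # whole second sequence: [4.0, 5.0, 6.0]
```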


Flattening for Fully Connected Layers:

```python
import tensorflow as tf

# Example: Flatten for a fully connected layer
# tf.random.normal already returns a tensor, so no tf.constant wrapper is needed
convolution_output = tf.random.normal(shape=(1, 4, 4, 32))

# Flatten convolution_output to a 2D tensor for a fully connected layer
flattened_output = tf.reshape(convolution_output, [1, -1])

print("Original Shape:", convolution_output.shape)
print("Flattened Shape:", flattened_output.shape)
```

Output

```
Original Shape: (1, 4, 4, 32)
Flattened Shape: (1, 512)
```
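The [1, -1] shape above assumes a batch of exactly one. A sketch of the more general pattern: keep the batch dimension from tf.shape, let -1 absorb height, width, and channels (4 * 4 * 32 = 512), then feed the result to a dense layer written by hand as a matrix multiply. The batch size of 8 and the random weights for 10 output units are illustrative assumptions.

```python
import tensorflow as tf

convolution_output = tf.random.normal(shape=(8, 4, 4, 32))  # hypothetical batch of 8

# Keep the batch dimension; -1 collapses the remaining 512 values per example
batch_size = tf.shape(convolution_output)[0]
flattened = tf.reshape(convolution_output, [batch_size, -1])

# Hypothetical dense layer with 10 units: logits = flattened @ weights + bias
weights = tf.random.normal(shape=(512, 10))
bias = tf.zeros([10])
logits = tf.matmul(flattened, weights) + bias

print(flattened.shape, logits.shape)  # (8, 512) (8, 10)
```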


Handling Different Image Batch Sizes:

```python
import tensorflow as tf

# Assume dynamic_batch_size is determined at runtime
dynamic_batch_size = 10

image_data = tf.constant(tf.random.normal(shape=(dynamic_batch_size, 64, 64, 3)))

# Reshape to prepare for a convolutional layer
reshaped_image_data = tf.reshape(image_data, [dynamic_batch_size, 64, 64, 3])

print("Original Shape:", image_data.shape)
print("Reshaped Shape:", reshaped_image_data.shape)
```

Explanation

Import TensorFlow:

```python
import tensorflow as tf
```

This line imports the TensorFlow library and aliases it as tf for brevity.

Generate Image Data with Dynamic Batch Size:

```python
# Assume dynamic_batch_size is determined at runtime
dynamic_batch_size = 10

image_data = tf.constant(tf.random.normal(shape=(dynamic_batch_size, 64, 64, 3)))
```

Here, you create a 4D tensor, image_data, filled with random values from tf.random.normal. (Wrapping the result in tf.constant is redundant, since tf.random.normal already returns a tensor.) The tensor represents a batch of dynamic_batch_size images, each 64x64 pixels with 3 color channels (RGB).

Reshape for Convolutional Layer:

```python
reshaped_image_data = tf.reshape(image_data, [dynamic_batch_size, 64, 64, 3])
```

Here tf.reshape is called with the tensor's existing shape, so the values and their layout are unchanged. The call only makes the expected shape explicit, and it would raise an error only if the total number of elements did not match. Convolutional layers typically expect 4D input tensors with dimensions (batch size, height, width, channels), which this tensor already has.

Print Shapes:

```python
print("Original Shape:", image_data.shape)
print("Reshaped Shape:", reshaped_image_data.shape)
```

These lines print out the shapes of the original and reshaped tensors.

The output is:

```
Original Shape: (10, 64, 64, 3)
Reshaped Shape: (10, 64, 64, 3)
```

The original shape of image_data is (10, 64, 64, 3), representing a 4D tensor with a batch size of 10, spatial dimensions of 64x64 pixels, and 3 color channels.

The reshaped tensor, reshaped_image_data, has the same shape (10, 64, 64, 3). In this case, the reshaping operation didn't change the structure of the tensor.
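Because tf.reshape never moves elements, it cannot convert between memory layouts. A sketch assuming hypothetical channels-first data of shape (10, 3, 64, 64): reshaping it to (10, 64, 64, 3) succeeds, since the element counts match, but it mixes values from different pixels and channels, whereas tf.transpose actually moves the channel axis to the end.

```python
import tensorflow as tf

channels_first = tf.random.normal(shape=(10, 3, 64, 64))  # hypothetical (batch, channels, height, width)

wrong = tf.reshape(channels_first, [10, 64, 64, 3])      # runs, but scrambles the layout
right = tf.transpose(channels_first, perm=[0, 2, 3, 1])  # (batch, height, width, channels)
print(wrong.shape, right.shape)  # (10, 64, 64, 3) (10, 64, 64, 3)

# right[0, 0, 0] holds the 3 channel values of the top-left pixel of image 0
right_ok = bool(tf.reduce_all(right[0, 0, 0] == channels_first[0, :, 0, 0]))

# wrong[0, 0, 0] instead holds 3 neighboring pixels from channel 0
wrong_is_pixels = bool(tf.reduce_all(wrong[0, 0, 0] == channels_first[0, 0, 0, :3]))

print(right_ok, wrong_is_pixels)  # True True
```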

The purpose of this code is to illustrate how you might use tf.reshape with image data whose batch size is determined at runtime. Stating the expected shape explicitly helps keep the data in the form that subsequent layers of a convolutional neural network expect, since the input tensor's dimensions are crucial for correct model execution.
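If the batch size is only known from the data itself, a variable such as dynamic_batch_size is not needed at all. A sketch (the helper to_image_batch is hypothetical) that passes -1 in the batch position so TensorFlow infers the batch size from the number of elements:

```python
import tensorflow as tf

def to_image_batch(flat_pixels):
    # Hypothetical helper: a flat tensor of N * 64 * 64 * 3 values -> (N, 64, 64, 3)
    return tf.reshape(flat_pixels, [-1, 64, 64, 3])

for n in (1, 10, 32):
    flat_pixels = tf.zeros([n * 64 * 64 * 3])
    print(n, to_image_batch(flat_pixels).shape)  # e.g. "10 (10, 64, 64, 3)"
```

The same function handles every batch size, as long as the number of values is a multiple of 64 * 64 * 3.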
