Using shell_exec to Execute Python Scripts

HTTP Request to Flask Server

Using shell_exec to Execute Python Scripts

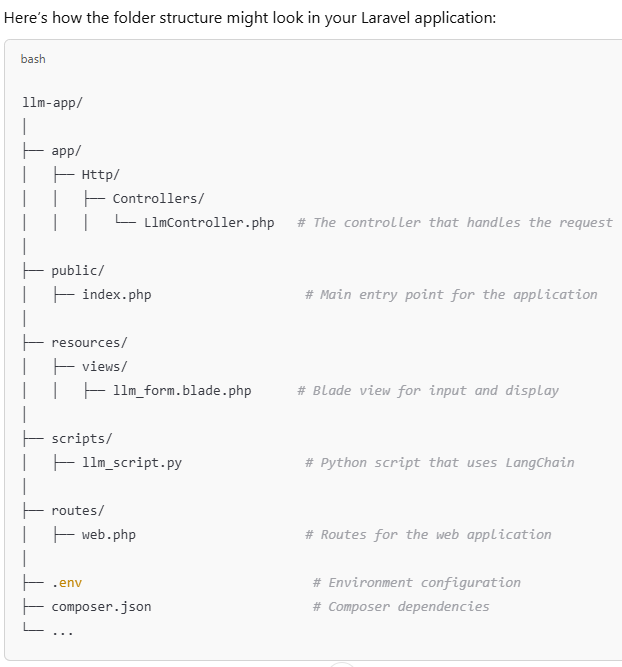

Step-by-Step Implementation

Step 1: Set Up Laravel Application

Install Laravel (if you haven't already):

composer create-project --prefer-dist laravel/laravel llm-app

cd llm-app

Set up your environment:

Ensure you have a .env file configured. You can use the default Laravel configuration.

Step 2: Create Python Script

Create a Python script (e.g., llm_script.py):

In the root directory of your Laravel project, create a new folder named scripts and inside it, create a file named llm_script.py.

mkdir scripts

touch scripts/llm_script.py

Write the following code in llm_script.py:

import json
import sys
from urllib.parse import quote

from langchain.llms import OpenAI
from langchain.prompts import PromptTemplate
from langchain.chains import LLMChain

# Initialize the OpenAI LLM through LangChain (replace the placeholder key)
llm = OpenAI(openai_api_key='your_openai_api_key')


def generate_answer(question):
    """Ask the LLM for a detailed answer to the question."""
    prompt_template = PromptTemplate(
        input_variables=["question"],
        template="Please provide a detailed answer for the following question: {question}"
    )
    chain = LLMChain(llm=llm, prompt=prompt_template)
    answer = chain.run(question)
    return answer.strip()


def get_image(topic):
    # Placeholder function; replace with actual image fetching logic.
    # The topic is URL-encoded so spaces and symbols survive in the query string.
    return f"https://via.placeholder.com/400?text={quote(topic)}"


if __name__ == "__main__":
    if len(sys.argv) < 2:
        sys.exit("Usage: python3 llm_script.py <topic>")

    topic = sys.argv[1]
    question = f"What is {topic}?"
    answer = generate_answer(question)
    image_url = get_image(topic)

    result = {
        "question": question,
        "answer": answer,
        "image_url": image_url
    }

    # Print JSON to stdout so the Laravel controller can decode it
    print(json.dumps(result))
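The script above passes the API key as a hardcoded placeholder. A minimal sketch of reading it from the environment instead, assuming the key is stored in a variable named OPENAI_API_KEY (the helper name load_api_key is hypothetical and not part of the original script):

```python
import os


def load_api_key(env=None):
    """Return the OpenAI API key from the environment, or raise if it is missing."""
    # Allow a plain dict to be passed in, which makes the helper easy to test
    env = os.environ if env is None else env
    key = env.get("OPENAI_API_KEY", "").strip()
    if not key:
        raise RuntimeError("OPENAI_API_KEY is not set")
    return key


if __name__ == "__main__":
    try:
        print("Key loaded, length:", len(load_api_key()))
    except RuntimeError as err:
        print("Error:", err)
```

The returned value could then be passed as openai_api_key=load_api_key() when constructing the LLM. Keep in mind that a process started by the web server may not see variables exported in your interactive shell.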

Step 3: Create Laravel Controller

Create a new controller using Artisan:

php artisan make:controller LlmController

Write the following code in app/Http/Controllers/LlmController.php:

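<?php

namespace App\Http\Controllers;

use Illuminate\Http\Request;

class LlmController extends Controller
{
    public function index()
    {
        return view('llm_form');
    }

    public function getResponse(Request $request)
    {
        $topic = (string) $request->input('topic', '');

        // Call the Python script. base_path() gives an absolute path, so the call
        // does not depend on the web server's working directory. escapeshellarg()
        // alone quotes each argument; wrapping the result in escapeshellcmd()
        // would add backslashes to characters such as ? or & inside the quotes.
        $script = base_path('scripts/llm_script.py');
        $command = 'python3 ' . escapeshellarg($script) . ' ' . escapeshellarg($topic);
        $output = shell_exec($command);

        // Decode the JSON output
        $result = json_decode((string) $output, true);

        if (!is_array($result)) {
            return response()->json(['error' => 'The Python script did not return valid JSON.'], 500);
        }

        return response()->json($result);
    }
}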

Step 4: Create Blade View

Create a Blade view for the form:

Create a new view file named llm_form.blade.php in the resources/views directory.

touch resources/views/llm_form.blade.php

Write the following code in resources/views/llm_form.blade.php:

<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <title>LLM App</title>
    <script src="https://code.jquery.com/jquery-3.6.0.min.js"></script>
</head>
<body>
    <h1>Enter a Topic</h1>
    <form id="llmForm">
        {{-- Adds the hidden _token field; without it Laravel rejects the POST with a 419 error --}}
        @csrf
        <input type="text" name="topic" placeholder="Enter topic here" required>
        <button type="submit">Submit</button>
    </form>

    <div id="result" style="display:none;">
        <h1>Question</h1>
        <p id="question"></p>
        <h1>Answer</h1>
        <p id="answer"></p>
        <h1>Relevant Image</h1>
        <img id="image" src="" alt="Image related to the question" />
    </div>

    <script>
        $('#llmForm').on('submit', function(e) {
            e.preventDefault();
            $.ajax({
                type: 'POST',
                url: '/get-response',
                data: $(this).serialize(), // includes the _token field
                success: function(response) {
                    $('#question').text(response.question);
                    $('#answer').text(response.answer);
                    $('#image').attr('src', response.image_url);
                    $('#result').show();
                }
            });
        });
    </script>
</body>
</html>

Step 5: Define Routes

Open routes/web.php and define the routes:

<?php

use Illuminate\Support\Facades\Route;

use App\Http\Controllers\LlmController;

Route::get('/', [LlmController::class, 'index']);

Route::post('/get-response', [LlmController::class, 'getResponse']);

Step 6: Set Up Python Environment

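Install Python and the libraries the script imports: langchain and openai. The script imports OpenAI from langchain.llms; recent LangChain releases have moved this class into separate packages, so if the import fails, pin an older langchain version or update the import.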

pip install langchain openai
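To confirm that the packages are visible to the interpreter that will run the script, a quick check along these lines may help. This is a sketch using only the standard library; the function name missing_modules is hypothetical.

```python
import importlib.util


def missing_modules(names):
    """Return the names from `names` that the import system cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(["langchain", "openai"])
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All required packages are importable.")
```

Run it with the same python3 that Laravel will call, since packages installed into a different interpreter or virtual environment will not be found.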

Step 7: Run the Laravel Application

Start the Laravel development server:

php artisan serve

Access the application:

Open your web browser and navigate to http://127.0.0.1:8000/.

NOTES

Running a Python script from Laravel with shell_exec requires a few checks to work reliably. Here’s what you need to do:

Prerequisites

Ensure Python is Installed: Make sure you have Python installed on your server or local environment where the Laravel application is running. You can check this by running the following command in your terminal:

python3 --version

If Python is installed, this command will display the installed version.

Check Permissions: Ensure that the user running the Laravel application (usually the web server user, such as www-data on Debian and Ubuntu) can run shell commands and can read the scripts directory and the Python script. Also confirm that shell_exec is not listed under disable_functions in php.ini. Because the controller invokes the script through python3, read permission is enough; the execute bit only matters if you run the script file directly.

To make the script executable as well, change its permissions with:

chmod +x scripts/llm_script.py
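The two prerequisites above (an interpreter on the PATH and a script the current user can read) can be checked together. The following is a rough sketch; the function name check_script_setup is hypothetical, and the paths in the usage section assume you run it from the Laravel project root.

```python
import os
import shutil


def check_script_setup(interpreter, script_path):
    """Return a list of problems found; an empty list means the checks passed."""
    problems = []
    # shutil.which looks the interpreter up on PATH, like the shell would
    if shutil.which(interpreter) is None:
        problems.append(f"'{interpreter}' was not found on PATH")
    if not os.path.isfile(script_path):
        problems.append(f"'{script_path}' does not exist")
    elif not os.access(script_path, os.R_OK):
        problems.append(f"'{script_path}' is not readable by the current user")
    return problems


if __name__ == "__main__":
    found = check_script_setup("python3", "scripts/llm_script.py")
    for problem in found:
        print("Problem:", problem)
    if not found:
        print("Setup looks fine for the current user.")
```

The result only describes the user who runs the check, so to be meaningful it should be run as the web server user rather than your own account.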

Step-by-Step Command Execution Setup

Test the Python Script: Before integrating it into Laravel, test the Python script directly from the command line to ensure it works as expected:

python3 scripts/llm_script.py "Your topic here"

This command should execute the script and return the expected output.
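For this script, the expected output is a single JSON object with the keys question, answer, and image_url. A small sketch of how that output could be validated before wiring it into Laravel (the names parse_script_output and REQUIRED_KEYS are hypothetical, and the sample string is made up for illustration):

```python
import json

REQUIRED_KEYS = ("question", "answer", "image_url")


def parse_script_output(raw):
    """Decode the script's stdout and check that the expected keys are present."""
    try:
        data = json.loads(raw)
    except (TypeError, ValueError) as err:
        raise ValueError(f"output is not valid JSON: {err}") from err
    if not isinstance(data, dict):
        raise ValueError("output is not a JSON object")
    missing = [key for key in REQUIRED_KEYS if key not in data]
    if missing:
        raise ValueError(f"output is missing keys: {', '.join(missing)}")
    return data


if __name__ == "__main__":
    # Illustrative sample, not real model output
    sample = json.dumps({
        "question": "What is Laravel?",
        "answer": "A PHP framework.",
        "image_url": "https://via.placeholder.com/400?text=Laravel",
    })
    print(parse_script_output(sample)["question"])
```

Anything else printed to stdout, such as warnings or debug lines, would make the output invalid JSON, which is the same failure the PHP json_decode call would hit.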

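Update Your PHP Code: Ensure that your Laravel controller method calls the Python script as in Step 3, using an absolute script path and quoting each argument with escapeshellarg only:

public function getResponse(Request $request)
{
    $topic = (string) $request->input('topic', '');

    // Call the Python script with an absolute path; escapeshellarg() alone
    // quotes each argument, so escapeshellcmd() is not needed on top of it
    $script = base_path('scripts/llm_script.py');
    $command = 'python3 ' . escapeshellarg($script) . ' ' . escapeshellarg($topic);
    $output = shell_exec($command);

    // Decode the JSON output
    $result = json_decode((string) $output, true);

    return response()->json($result);
}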
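To see why the topic must be quoted before it reaches the shell, here is a rough Python analogue of the command-building step, with the standard library's shlex.quote playing the role of escapeshellarg. The function name build_command is hypothetical, and this only illustrates the quoting idea; it is not a replacement for the controller code.

```python
import shlex


def build_command(script_path, topic):
    """Build a shell command string with each argument quoted for a POSIX shell."""
    return "python3 " + shlex.quote(script_path) + " " + shlex.quote(topic)


if __name__ == "__main__":
    # A topic containing shell metacharacters stays a single, inert argument
    command = build_command("scripts/llm_script.py", "cats; rm -rf /")
    print(command)
    # Splitting the command the way a shell would recovers the original topic
    print(shlex.split(command))
```

Without quoting, the semicolon in the sample topic would end the python3 command and start a second one, which is the injection risk the escaping in the controller guards against.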

HTTP Request to Flask Server

To create a Laravel application that captures user input from a text field, calls a Python script via an HTTP POST request, and utilizes Large Language Models (LLMs) with LangChain for generating responses, follow these detailed steps. We'll set up a Flask server to handle the Python logic and use Laravel to communicate with it.

Step-by-Step Implementation

Step 1: Set Up the Flask Server

Create a Flask Application:

First, set up a Flask application that will handle the HTTP requests from Laravel.

Directory Structure:

flask_app/

├── app.py

└── requirements.txt

Create the Flask app (app.py):

from urllib.parse import quote

from flask import Flask, request, jsonify
from langchain.llms import OpenAI
from langchain.prompts import PromptTemplate
from langchain.chains import LLMChain

app = Flask(__name__)

# Initialize the OpenAI LLM through LangChain (replace the placeholder key)
llm = OpenAI(openai_api_key='your_openai_api_key')


@app.route('/generate', methods=['POST'])
def generate_response():
    # silent=True returns None instead of raising when the body is not valid JSON
    data = request.get_json(silent=True) or {}
    topic = data.get('topic')
    if not topic:
        return jsonify({"error": "The 'topic' field is required."}), 400

    question = f"What is {topic}?"
    prompt_template = PromptTemplate(
        input_variables=["question"],
        template="Please provide a detailed answer for the following question: {question}"
    )
    chain = LLMChain(llm=llm, prompt=prompt_template)
    answer = chain.run(question)

    # Placeholder for image generation; the topic is URL-encoded for the query string
    image_url = f"https://via.placeholder.com/400?text={quote(str(topic))}"

    result = {
        "question": question,
        "answer": answer.strip(),
        "image_url": image_url
    }
    return jsonify(result)


if __name__ == '__main__':
    app.run(port=5000)

Create requirements.txt:

flask

langchain

openai

Install Flask and dependencies:

Navigate to the flask_app directory and run:

pip install -r requirements.txt

Run the Flask Server:

Start the Flask server:

python app.py

The Flask server will run at http://127.0.0.1:5000.
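Before involving Laravel, the endpoint can be exercised directly. The sketch below uses only the Python standard library and assumes the server is listening at the address given above; build_generate_request and GENERATE_URL are hypothetical names introduced for illustration.

```python
import json
import urllib.request

GENERATE_URL = "http://127.0.0.1:5000/generate"  # address from the text above


def build_generate_request(topic, url=GENERATE_URL):
    """Build a POST request carrying the topic as a JSON body."""
    body = json.dumps({"topic": topic}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    request = build_generate_request("Laravel")
    try:
        with urllib.request.urlopen(request, timeout=60) as response:
            print(json.loads(response.read().decode("utf-8")))
    except (OSError, ValueError) as err:
        # Connection, HTTP, and timeout errors are OSError subclasses;
        # ValueError covers a response body that is not valid JSON
        print("Request failed:", err)
```

This sends the same kind of JSON body that the Laravel controller will send, so a successful response here narrows any later problem down to the Laravel side.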

Step 2: Set Up Laravel Application

Create a Laravel Project:

If you haven't created a Laravel project yet, do so by running:

composer create-project --prefer-dist laravel/laravel llm-laravel

cd llm-laravel

Create a Controller:

Use Artisan to create a new controller:

php artisan make:controller LlmController

Write the Controller Logic:

Open app/Http/Controllers/LlmController.php and add the following code:

<?php

namespace App\Http\Controllers;

use Illuminate\Http\Request;
use Illuminate\Support\Facades\Http;

class LlmController extends Controller
{
    public function index()
    {
        return view('llm_form');
    }

    public function submit(Request $request)
    {
        $topic = $request->input('topic');

        // Call the Flask server; the array is sent as a JSON body.
        // LLM calls can be slow, so allow more time than the default timeout.
        $response = Http::timeout(60)->post('http://127.0.0.1:5000/generate', [
            'topic' => $topic,
        ]);

        if ($response->failed()) {
            abort(502, 'The Flask server returned an error.');
        }

        // json() decodes the response body into an array
        $data = $response->json();

        return view('result', compact('data'));
    }
}

Create Blade Views:

Create a view file for the form input:

resources/views/llm_form.blade.php:

<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <title>LLM App</title>
    <script src="https://code.jquery.com/jquery-3.6.0.min.js"></script>
</head>
<body>
    <h1>Enter a Topic</h1>
    <form id="llmForm" method="POST" action="{{ route('llm.submit') }}">
        @csrf
        <input type="text" name="topic" placeholder="Enter topic here" required>
        <button type="submit">Submit</button>
    </form>
</body>
</html>

Create a view file for displaying the results:

resources/views/result.blade.php:

<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <title>Result</title>
</head>
<body>
    <h1>Question</h1>
    <p>{{ $data['question'] }}</p>
    <h1>Answer</h1>
    <p>{{ $data['answer'] }}</p>
    <h1>Relevant Image</h1>
    <img src="{{ $data['image_url'] }}" alt="Image related to {{ $data['question'] }}" />
    <br>
    <a href="{{ route('llm.index') }}">Go back</a>
</body>
</html>

Define Routes:

Open routes/web.php and define the routes:

<?php

use Illuminate\Support\Facades\Route;

use App\Http\Controllers\LlmController;

Route::get('/', [LlmController::class, 'index'])->name('llm.index');

Route::post('/submit', [LlmController::class, 'submit'])->name('llm.submit');

Step 3: Run the Laravel Application

Start the Laravel Development Server:

Keep the Flask server from Step 1 running in its own terminal. Then open a second terminal, navigate to your Laravel project directory, and run:

php artisan serve

The Laravel application will run at http://127.0.0.1:8000.

Access the Application:

Open your browser and go to http://127.0.0.1:8000. Enter a topic in the text field and submit the form. This will trigger a POST request to your Flask server.
