Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks are both designed for processing sequential data, such as time series, text, and audio. An LSTM is itself a kind of RNN, but it differs from the basic RNN in several key ways:

Architecture:

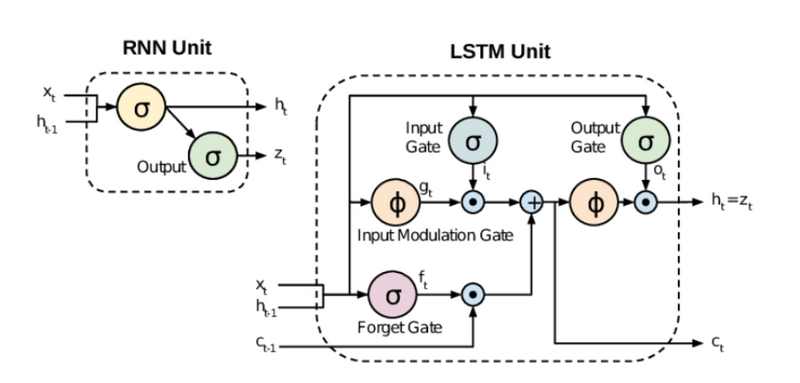

RNN: A basic RNN consists of a chain of repeating neural network modules. Each module takes an input and produces an output while also passing a hidden state to the next module in the sequence. However, traditional RNNs suffer from the vanishing gradient problem, which limits their ability to capture long-term dependencies in sequences.
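
To make the "chain of repeating modules" concrete, here is a minimal sketch of a basic RNN in plain Python. It assumes the common tanh update `h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)` and leaves out the separate output layer, so the hidden state doubles as the output. The names `rnn_step`, `run_rnn`, `W_xh`, `W_hh`, and `b_h`, and all the numbers, are made up for illustration.

```python
import math

def rnn_step(x, h_prev, W_xh, W_hh, b_h):
    """One step of a basic RNN: h_t = tanh(W_xh x_t + W_hh h_prev + b_h)."""
    h_new = []
    for i in range(len(b_h)):
        total = b_h[i]
        total += sum(W_xh[i][j] * x[j] for j in range(len(x)))
        total += sum(W_hh[i][j] * h_prev[j] for j in range(len(h_prev)))
        h_new.append(math.tanh(total))
    return h_new

def run_rnn(inputs, h0, W_xh, W_hh, b_h):
    """Apply the same module at every time step, passing the hidden state along."""
    h = h0
    states = []
    for x in inputs:
        h = rnn_step(x, h, W_xh, W_hh, b_h)
        states.append(h)
    return states

# Hypothetical toy parameters: 2 input features, 2 hidden units (not learned).
W_xh = [[0.5, -0.3], [0.1, 0.8]]
W_hh = [[0.2, 0.0], [0.0, 0.2]]
b_h = [0.0, 0.0]

sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
states = run_rnn(sequence, [0.0, 0.0], W_xh, W_hh, b_h)
print(states)
```

Note that the same weights are reused at every step; only the hidden state changes as the sequence is read.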

LSTM: LSTM networks are a type of RNN specifically designed to address the vanishing gradient problem. They include additional components called "gates" that regulate the flow of information through the network. These gates allow LSTM networks to selectively remember or forget information over long sequences, making them better suited for capturing long-term dependencies.

Memory Cells:

RNN: In a basic RNN, the hidden state serves as the memory of the network. However, the hidden state is overwritten at every time step, and during training the gradients that reach early time steps can diminish as sequences get longer. As a result, the network effectively "forgets" earlier information.

LSTM: LSTM networks have more complex memory cells, which consist of a cell state and three gates: input gate, forget gate, and output gate. These gates control the flow of information into and out of the cell state, allowing the LSTM to retain or discard information over long sequences.
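
The cell state and the three gates can be sketched in a few lines. This is a minimal illustration that assumes the standard LSTM formulation and, to keep it short, uses a scalar cell (input size 1, hidden size 1). The function names, the `params` layout, and the parameter values are chosen by hand for the example, not learned.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell.

    p maps each of "f", "i", "o", "g" to a (w_x, w_h, b) tuple.
    """
    def pre(name):
        w_x, w_h, b = p[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("f"))    # forget gate: how much of c_prev to keep
    i = sigmoid(pre("i"))    # input gate: how much new content to write
    o = sigmoid(pre("o"))    # output gate: how much of the cell to expose
    g = math.tanh(pre("g"))  # candidate content
    c = f * c_prev + i * g   # updated cell state
    h = o * math.tanh(c)     # updated hidden state
    return h, c

# Hypothetical hand-picked parameters.
params = {
    "f": (0.0, 0.0, 4.0),   # large bias -> forget gate near 1 (keep memory)
    "i": (2.0, 0.0, -1.0),  # input gate opens when x is large
    "o": (0.0, 0.0, 1.0),
    "g": (1.0, 0.0, 0.0),
}

h, c = 0.0, 0.0
for x in [1.0, 0.0, 0.0, 0.0]:
    h, c = lstm_step(x, h, c, params)
    print(round(h, 4), round(c, 4))
```

With these values the cell writes something at the first step and then mostly holds on to it, because the forget gate stays close to 1 while nothing new is written.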

Handling Long-Term Dependencies:

RNN: Traditional RNNs struggle to capture long-term dependencies due to the vanishing gradient problem. As a result, they are less effective at tasks that require understanding of context over long sequences.
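
A small numerical sketch shows why the gradient vanishes. It assumes a one-unit RNN with update `h_t = tanh(w * h_{t-1} + x_t)`, so the chain rule gives a factor of `w * (1 - h_t^2)` per time step. The weight `w = 0.9` and the constant input are arbitrary illustrative values.

```python
import math

def gradient_through_time(w, inputs, h0=0.0):
    """Return |d h_t / d h_0| after each step for h_t = tanh(w * h_{t-1} + x_t)."""
    h = h0
    grad = 1.0
    history = []
    for x in inputs:
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)  # chain rule: local derivative of this step
        history.append(abs(grad))
    return history

# Hypothetical recurrent weight and a constant input over 50 steps.
history = gradient_through_time(w=0.9, inputs=[0.5] * 50)
print(history[0], history[9], history[-1])
```

Every factor here has magnitude below 0.9, so the product shrinks at least geometrically with the number of steps, and the signal from the first time step barely influences learning by the end of the sequence.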

LSTM: LSTM networks are designed to address the vanishing gradient problem by allowing the network to selectively remember or forget information over time. This enables LSTMs to capture long-term dependencies more effectively, making them well-suited for tasks such as language modeling, machine translation, and sentiment analysis.
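
Here is a rough sketch of why the gates help. Assuming the standard cell update `c_t = f_t * c_{t-1} + i_t * g_t`, and looking only at the direct path through the cell state (treating the gate values as constants), the gradient of the final cell state with respect to the initial one is simply the product of the forget-gate values. The gate values below are hypothetical.

```python
def cell_state_gradient(forget_gates):
    """Product of forget-gate values: the direct-path factor d c_T / d c_0."""
    grad = 1.0
    for f in forget_gates:
        grad *= f
    return grad

steps = 50
kept = cell_state_gradient([0.99] * steps)    # forget gate held close to 1
dropped = cell_state_gradient([0.5] * steps)  # forget gate half closed

print(kept, dropped)
```

When the network learns to keep the forget gate near 1 for information that matters, the gradient along this path survives across many steps; when it closes the gate, the information is deliberately discarded.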

Example

Let's use an example to illustrate how Long Short-Term Memory (LSTM) networks are better suited to capturing long-term dependencies than traditional Recurrent Neural Networks (RNNs).

Consider a task of predicting the next word in a sentence. Here's an example sentence:

"John went to the ________."

To predict the next word in the sentence, both RNNs and LSTMs take the previous words as input. Let's analyze how they process this sentence:

Using a Traditional RNN:

- At each time step, a traditional RNN takes the current word ("to") and the hidden state from the previous time step as input. It then calculates a new hidden state and output.

- As the RNN processes the sentence, it tries to capture the sequential dependencies between words. However, traditional RNNs often struggle with capturing long-term dependencies because of the vanishing gradient problem.

In this example, if the correct next word is "grocery", the RNN may have difficulty predicting it, because the information about "John went to" may have faded from the hidden state by the time the prediction is made. In a sentence this short the effect is small, but it grows with the distance between the relevant context and the word being predicted.

Using an LSTM:

Like the traditional RNN, an LSTM also takes the current word ("to") and the hidden state from the previous time step as input. However, it also has a more complex architecture with additional components like memory cells and gates.

The LSTM's forget gate allows it to selectively keep or discard information stored in the previous time step's cell state, while the input gate controls what new information is written. This enables the LSTM to maintain long-term dependencies more effectively.

In the example sentence, once the LSTM has seen "John went to the", it can learn to retain the information about "John went to" in its memory cell and use it to predict "grocery" more accurately.

So, in this example, while both RNNs and LSTMs process the sequence of words, the LSTM is better equipped to remember important information over longer sequences, enabling it to make more accurate predictions about the next word in the sentence. This ability to capture long-term dependencies generally makes LSTMs a better choice than basic RNNs for sequential tasks such as language modeling, machine translation, and sentiment analysis.

QUESTION

Give an example of a neural network architecture for sequential tasks.

Solution

RNN, LSTM
