What are the our problem type

General perprocessing steps are done or not

What are the performance matrics to decide which ml algo is to be used

how cross validation helps to determine which ml algo is best

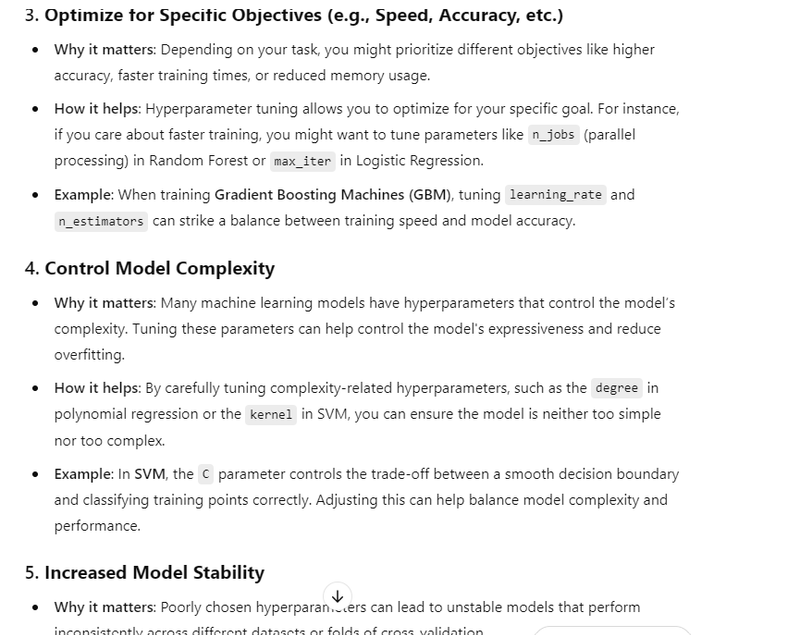

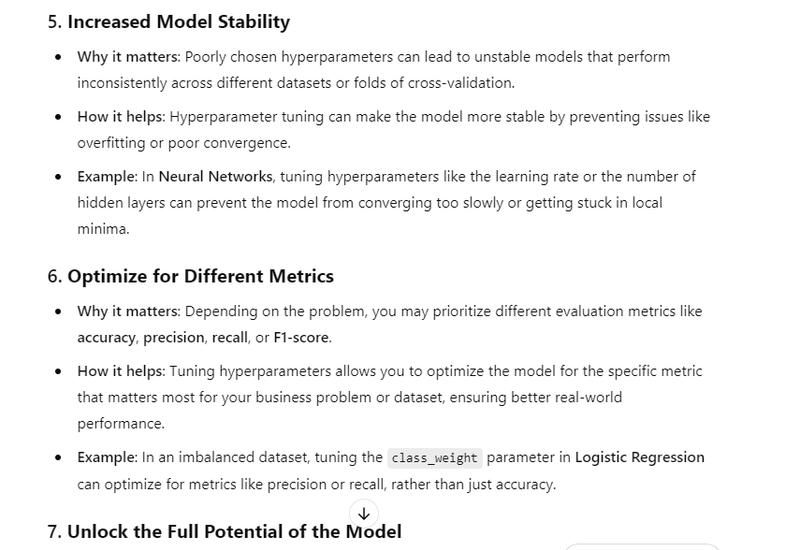

How to tunning hyperparameter helps which ml algo is best

Determining the best machine learning algorithm for a given problem depends on several factors, including the nature of the dataset, the specific task (classification, regression, clustering, etc.), and the performance criteria you care about (accuracy, precision, recall, F1-score, etc.). There is no single algorithm that works best for all types of problems, so you need to evaluate multiple algorithms based on performance and practical considerations.

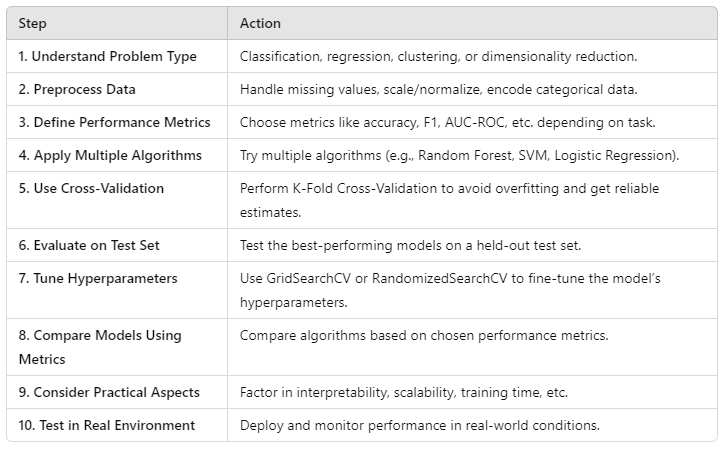

Here’s a step-by-step approach to determining the best machine learning algorithm:

- Understand the Problem Type

Classification: Predicting a categorical outcome (e.g., spam vs. not spam, fraud vs. no fraud).

Regression: Predicting a continuous outcome (e.g., predicting house prices, stock values).

Clustering: Grouping similar data points (e.g., customer segmentation).

Dimensionality Reduction: Reducing the number of features (e.g., using PCA for feature selection).

- Preprocess the Data

Handle Missing Data: Impute missing values or remove incomplete records.

Normalize or Standardize: Scale your features if needed, especially for algorithms sensitive to feature scaling (e.g., SVM, KNN).

Encode Categorical Variables: Use techniques like one-hot encoding or label encoding to convert categorical features into numerical format.

Remove Outliers: Handle or remove outliers if necessary.

-

Define Performance Metrics

Accuracy: Useful when classes are balanced.

Precision/Recall/F1-Score: Important when dealing with imbalanced datasets (e.g., fraud detection, medical diagnosis).

Mean Squared Error (MSE) or R²: For regression tasks.

AUC-ROC: For binary classification to measure the trade-off between true positive and false positive rates.

- Apply Multiple Algorithms It’s essential to try a range of algorithms to compare their performance. Some algorithms may work better for certain types of data and problems than others.

Common Algorithms to Try:

Classification:

Logistic Regression: Good baseline for binary classification.

Decision Trees: Captures non-linear relationships; easy to interpret.

Random Forest: Robust and reduces overfitting, performs well on most problems.

Support Vector Machines (SVM): Effective for small-to-medium datasets and high-dimensional spaces.

K-Nearest Neighbors (KNN): Simple but can struggle with large datasets.

Naive Bayes: Good for text classification, spam detection.

Neural Networks: Useful for more complex, non-linear problems but computationally expensive.

Regression:

Linear Regression: Good baseline for regression tasks.

Decision Trees: Can capture non-linear relationships.

Random Forest: Reduces overfitting and improves accuracy.

Support Vector Regression (SVR): Effective for small datasets and complex relationships.

Gradient Boosting (XGBoost, LightGBM): Often gives high accuracy in regression tasks.

Clustering:

K-Means: Good for well-separated clusters.

Hierarchical Clustering: Effective for datasets with small numbers of samples.

DBSCAN: Works well with non-spherical clusters and noise.

Dimensionality Reduction:

PCA (Principal Component Analysis): Reduces dimensionality while preserving variance.

t-SNE: Useful for visualizing high-dimensional data in 2D or 3D space.

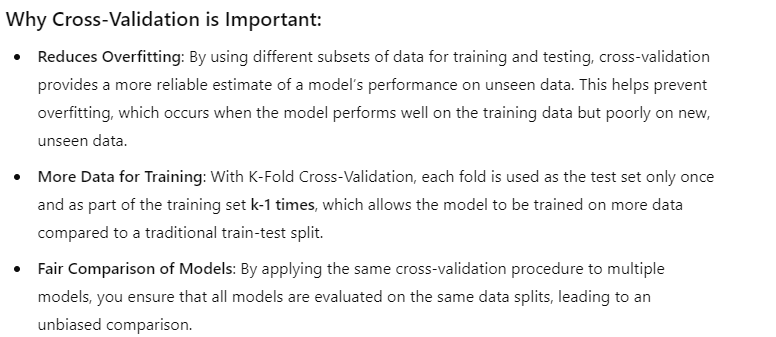

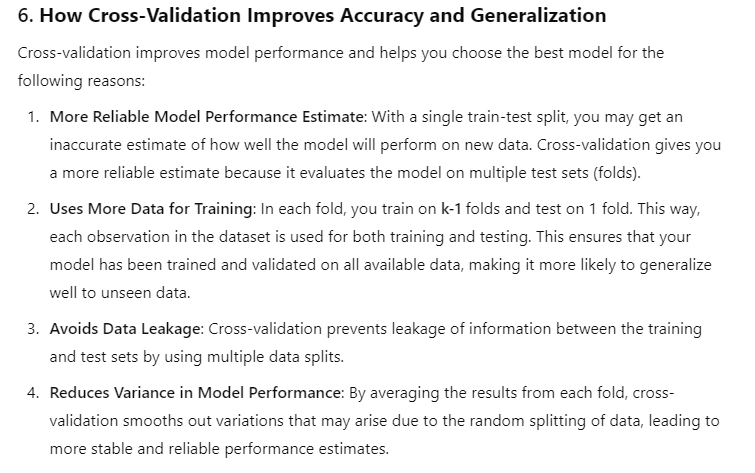

- Use Cross-Validation to Compare Models To avoid overfitting and get an unbiased estimate of each algorithm's performance, use cross-validation (e.g., K-Fold Cross-Validation).

Example:

from sklearn.model_selection import cross_val_score

from sklearn.ensemble import RandomForestClassifier

from sklearn.svm import SVC

# Initialize models

rf_model = RandomForestClassifier(n_estimators=100)

svm_model = SVC(kernel='linear')

# Perform 5-Fold Cross-Validation on both models

rf_scores = cross_val_score(rf_model, X, y, cv=5, scoring='accuracy')

svm_scores = cross_val_score(svm_model, X, y, cv=5, scoring='accuracy')

# Print average accuracy for both models

print(f"Random Forest Mean Accuracy: {rf_scores.mean()}")

print(f"SVM Mean Accuracy: {svm_scores.mean()}")

Evaluate Performance Using Test Set

After tuning and selecting the best model from cross-validation, evaluate the performance of the best model on a held-out test set (data not used for training or validation) to ensure it generalizes well.Tune Hyperparameters

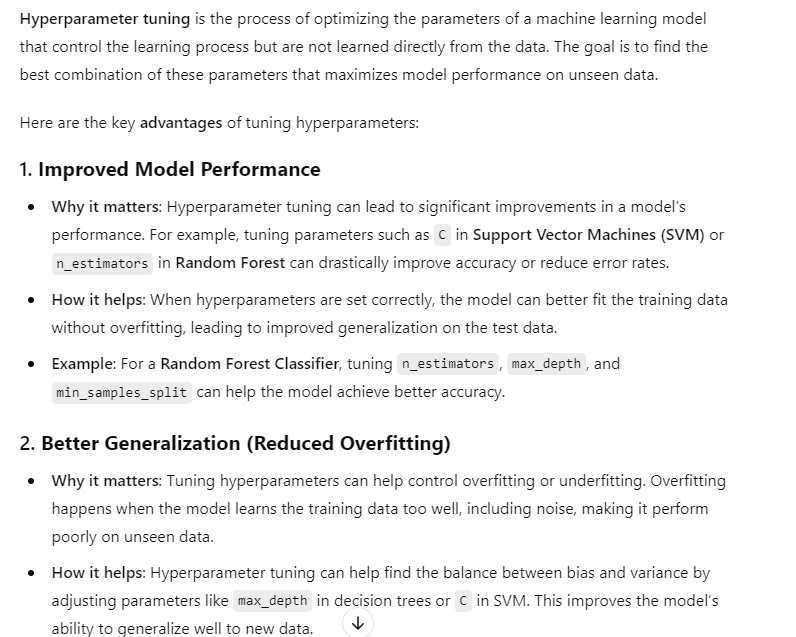

Once you’ve identified the top-performing algorithms, use hyperparameter tuning to fine-tune the model for optimal performance. Common methods:

GridSearchCV: Exhaustively searches over a grid of parameters.

RandomizedSearchCV: Randomly samples a range of parameters, often faster than GridSearchCV.

Example (Using GridSearchCV):

from sklearn.model_selection import GridSearchCV

# Set parameter grid for Random Forest

param_grid = {

'n_estimators': [100, 200, 300],

'max_depth': [10, 20, 30],

'min_samples_split': [2, 5, 10]

}

# Initialize GridSearchCV

grid_search = GridSearchCV(estimator=rf_model, param_grid=param_grid, cv=5, scoring='accuracy')

# Fit the model

grid_search.fit(X_train, y_train)

# Best hyperparameters

print(grid_search.best_params_)

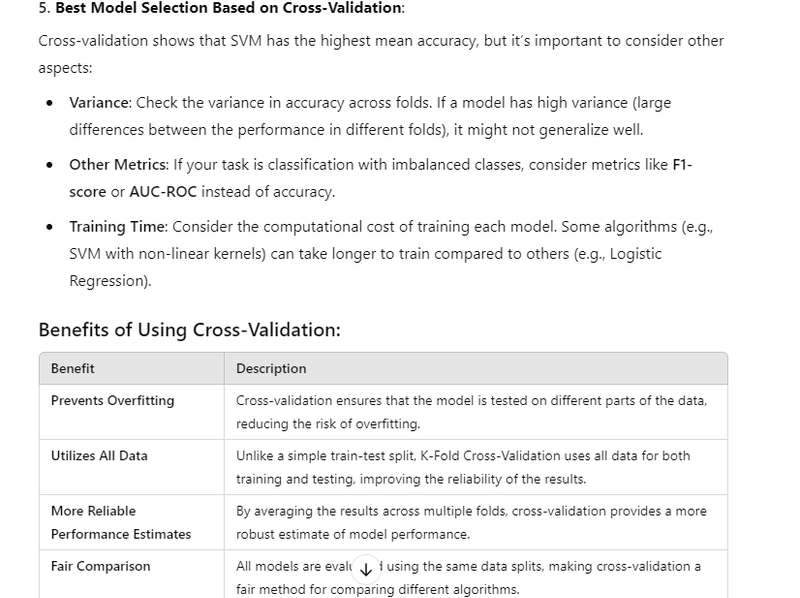

- Compare Models Using Metrics After running multiple algorithms and tuning hyperparameters, compare models based on the chosen evaluation metrics (e.g., accuracy, F1-score, ROC-AUC).

Example Comparison:

Model Accuracy Precision Recall F1-Score

Logistic Regression 85% 0.86 0.82 0.84

Random Forest 90% 0.92 0.88 0.90

SVM 87% 0.89 0.84 0.86

XGBoost 91% 0.93 0.90 0.91

- Consider Practical Aspects Training Time: Some algorithms like Random Forest and XGBoost can take longer to train, especially on large datasets. If you need a model that trains quickly, Logistic Regression or Naive Bayes may be better. Interpretability: If model interpretability is crucial, algorithms like Logistic Regression, Decision Trees, and Random Forest (with feature importance) are preferable. Neural Networks and SVM can be harder to interpret. Scalability: For very large datasets, Random Forest and XGBoost tend to scale better. KNN and SVM can struggle with large datasets.

- Test the Model in a Real Environment Deploy the best model and test it with real-world data to monitor its performance in production. Sometimes models perform differently in production compared to training due to data drift, new patterns, etc. Summary of Steps for Determining the Best Machine Learning Algorithm:

Top comments (0)