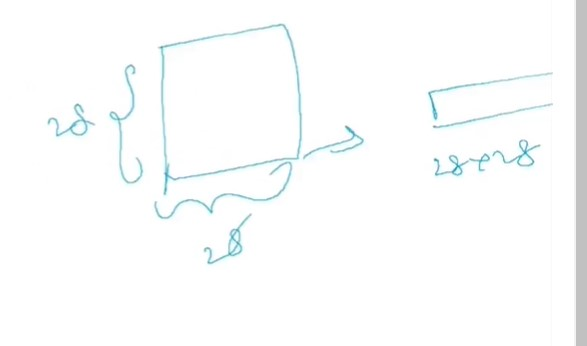

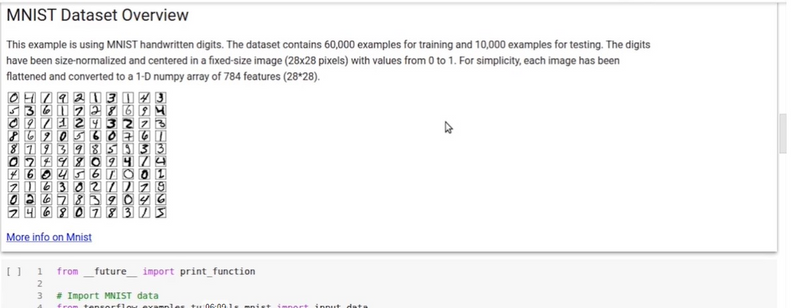

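The example below implements a simple neural network in TensorFlow and reports its loss and accuracy on a classification task. It uses the MNIST dataset, a collection of 28x28-pixel grayscale images of handwritten digits (0 through 9). The code is written against the TensorFlow 1.x graph API (tf.placeholder, tf.layers, tf.Session, and the tensorflow.examples.tutorials.mnist loader), so it will not run unmodified on TensorFlow 2.x.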

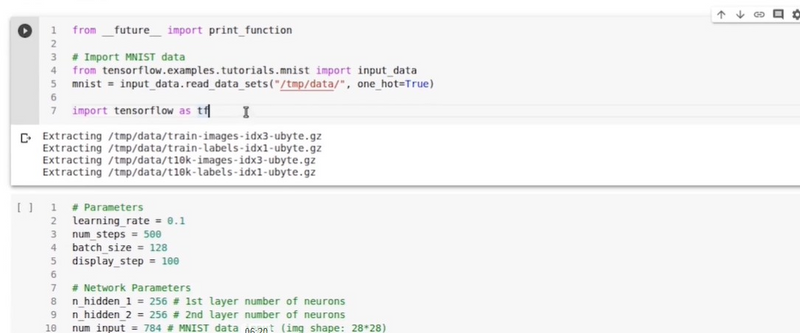

import tensorflow as tf

from tensorflow.examples.tutorials.mnist import input_data

# Step 1: Import MNIST data

mnist = input_data.read_data_sets("/tmp/data/", one_hot=True)

# Step 2: Build the neural network

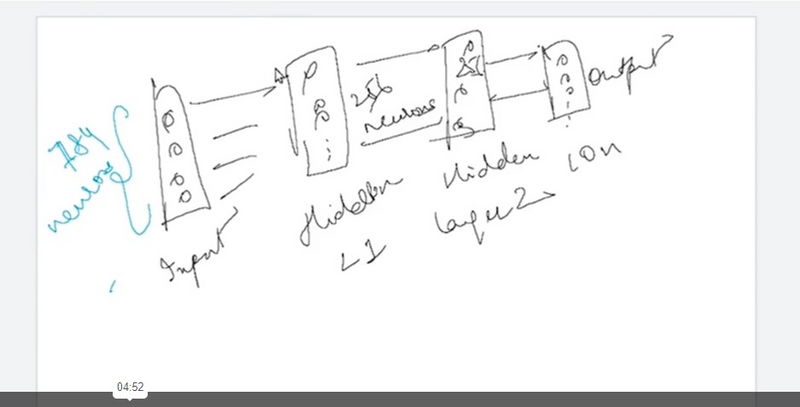

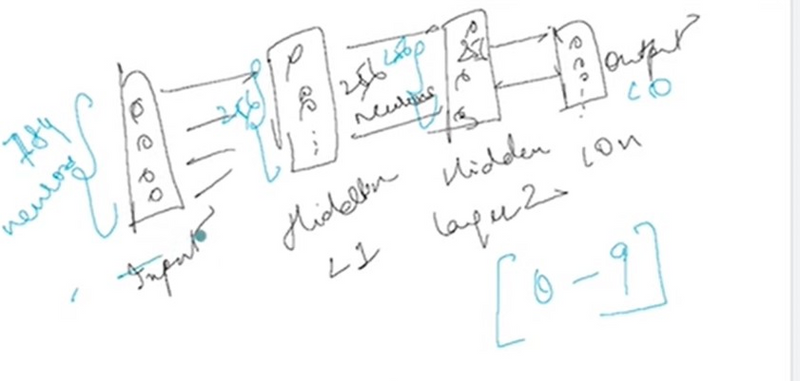

input_size = 784 # 28x28 pixels flattened

output_size = 10 # 10 classes (digits 0-9)

# Define placeholders for input data and labels

x = tf.placeholder(tf.float32, [None, input_size], name='x')

y_true = tf.placeholder(tf.float32, [None, output_size], name='y_true')

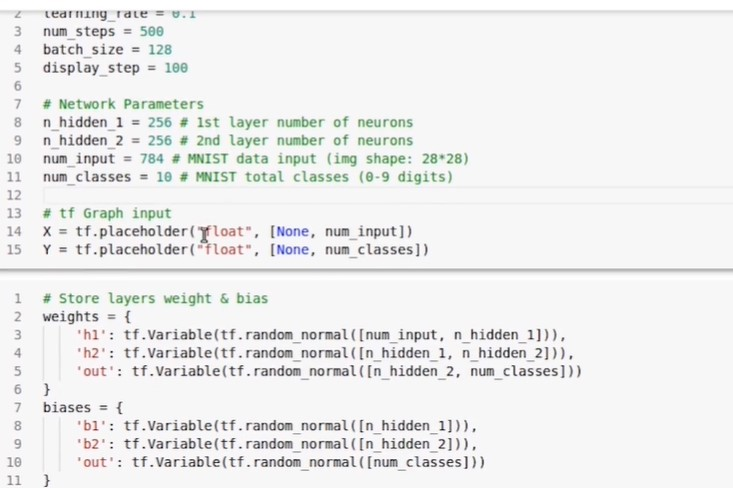

# Define the neural network architecture

hidden_layer = tf.layers.dense(inputs=x, units=128, activation=tf.nn.relu)

output_layer = tf.layers.dense(inputs=hidden_layer, units=output_size, activation=None)

y_pred = tf.nn.softmax(output_layer, name='y_pred')

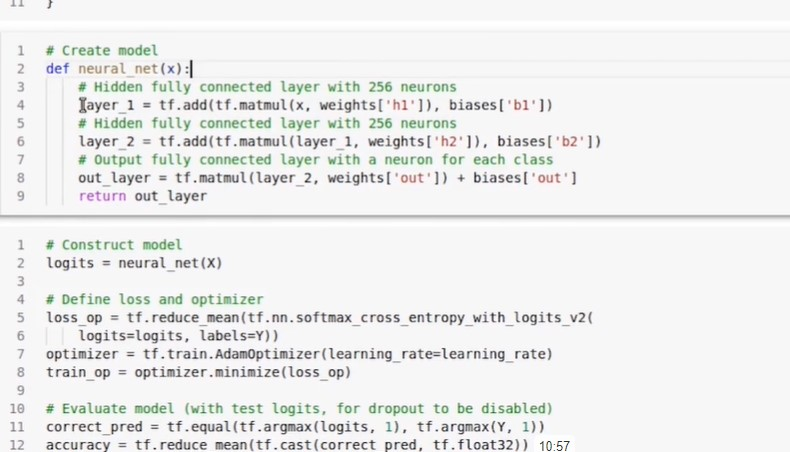

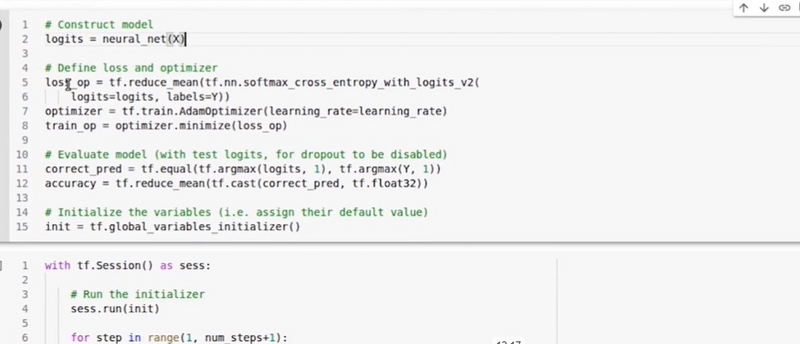

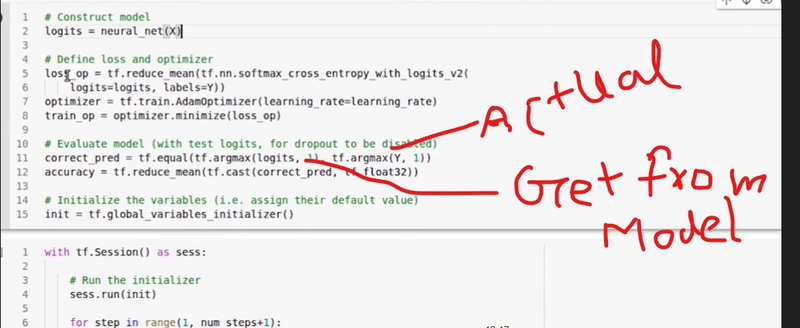

# Step 3: Define loss and accuracy

cross_entropy = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=output_layer, labels=y_true))

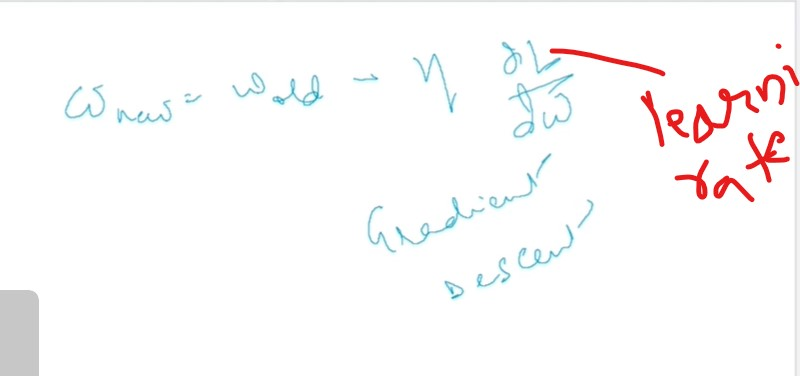

optimizer = tf.train.AdamOptimizer(learning_rate=0.001)

train_op = optimizer.minimize(cross_entropy)

correct_prediction = tf.equal(tf.argmax(y_pred, 1), tf.argmax(y_true, 1))

accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

# Step 4: Train the model

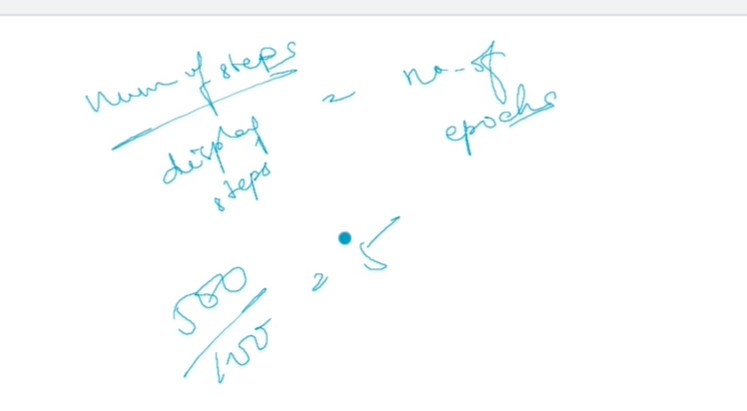

num_epochs = 5

batch_size = 64

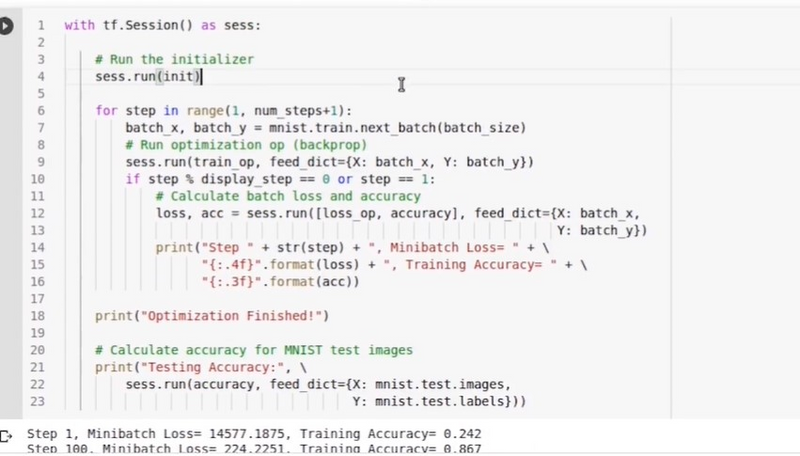

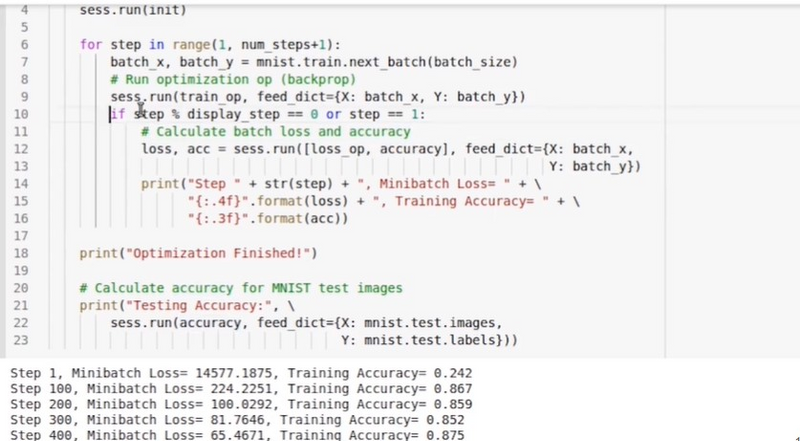

with tf.Session() as sess:
    # Initialize all weights and biases before training
    sess.run(tf.global_variables_initializer())

    for epoch in range(num_epochs):
        avg_loss = 0.0
        # Number of full mini-batches in one pass over the training set
        total_batches = mnist.train.num_examples // batch_size

        for _ in range(total_batches):
            batch_x, batch_y = mnist.train.next_batch(batch_size)
            # Run one optimization step and fetch the loss for this batch
            _, loss = sess.run([train_op, cross_entropy], feed_dict={x: batch_x, y_true: batch_y})
            avg_loss += loss / total_batches

        # Check accuracy on the validation set after each epoch
        val_accuracy = sess.run(accuracy, feed_dict={x: mnist.validation.images, y_true: mnist.validation.labels})
        print(f'Epoch {epoch + 1}/{num_epochs}, Loss: {avg_loss:.4f}, Validation Accuracy: {val_accuracy:.4f}')

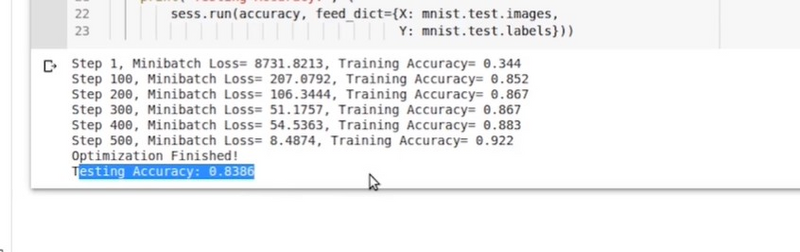

    # Step 5: Evaluate the model on the test set (still inside the tf.Session block)
    test_accuracy = sess.run(accuracy, feed_dict={x: mnist.test.images, y_true: mnist.test.labels})
    print(f'Test Accuracy: {test_accuracy:.4f}')

Explanation of the steps:

Import MNIST data:

Import the MNIST dataset using TensorFlow's input_data.

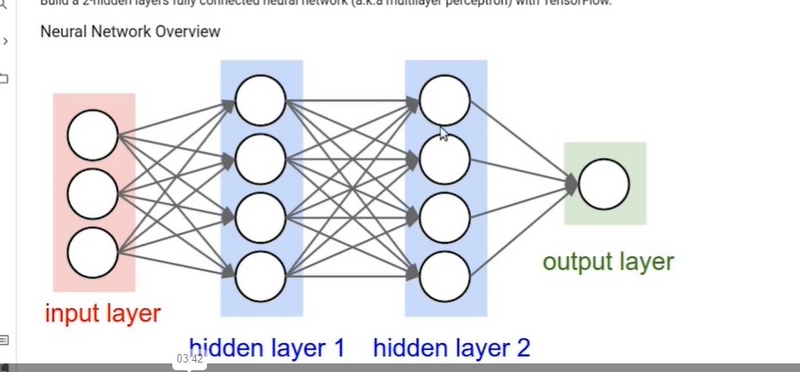

Build the neural network:

Define the architecture of a simple neural network with one hidden layer.

Define loss and accuracy:

Define the cross-entropy loss and accuracy computation.

Train the model:

Use the Adam optimizer to minimize the cross-entropy loss during training.

Evaluate on the test set:

Evaluate the trained model on the test set to determine its accuracy.

This code provides a basic example of building, training, and evaluating a neural network using TensorFlow. Depending on your specific task and dataset, you might need to customize the architecture and hyperparameters.
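To make the loss in Step 3 more concrete, here is a minimal plain-Python sketch of what a softmax cross-entropy averaged over a batch computes. It is not TensorFlow's implementation; the helper names (log_softmax, cross_entropy, mean_cross_entropy) and the three-class sample values are made up for illustration.

```python
import math

def log_softmax(logits):
    """Log of the softmax probabilities, computed in a numerically stable way."""
    shift = max(logits)  # subtracting the max avoids overflow in exp()
    log_total = math.log(sum(math.exp(v - shift) for v in logits))
    return [v - shift - log_total for v in logits]

def cross_entropy(logits, one_hot_label):
    """Cross-entropy between a one-hot label and softmax(logits) for one example."""
    log_probs = log_softmax(logits)
    return -sum(t * lp for t, lp in zip(one_hot_label, log_probs))

def mean_cross_entropy(batch_logits, batch_labels):
    """Average the per-example losses over the batch (the role of tf.reduce_mean)."""
    losses = [cross_entropy(l, y) for l, y in zip(batch_logits, batch_labels)]
    return sum(losses) / len(losses)

# Hypothetical 3-class batch; the numbers are invented for illustration.
batch_logits = [[2.0, 1.0, 0.1], [0.5, 2.5, 0.3]]
batch_labels = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]

loss = mean_cross_entropy(batch_logits, batch_labels)
print(f"mean cross-entropy: {loss:.4f}")
```

The loss shrinks toward zero as the logit of the true class grows relative to the others, which is what the optimizer is pushing for during training.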

Why we use tf.reduce_mean

In the provided code, tf.reduce_mean(tf.cast(correct_prediction, tf.float32)) is used to compute the accuracy of the model. Let's break down this expression:

correct_prediction:

- correct_prediction is a boolean tensor produced by comparing the predicted class indices (tf.argmax(y_pred, 1)) with the true class indices (tf.argmax(y_true, 1)).
- It contains True for correctly predicted instances and False for incorrectly predicted ones.

tf.cast(correct_prediction, tf.float32):

- The boolean values in correct_prediction are cast to float32, so True becomes 1.0 and False becomes 0.0.
- This step is necessary because tf.reduce_mean requires a numeric input.

tf.reduce_mean(tf.cast(correct_prediction, tf.float32)):

- tf.reduce_mean calculates the mean of the values in the tensor across all dimensions.
- In this context, it computes the accuracy by averaging the 1s and 0s obtained from casting correct_prediction.

Using reduce_mean in this way allows you to calculate the accuracy of your model across a batch of examples. It gives the proportion of correctly predicted instances in the batch.

Here's a more detailed breakdown:

- The correct_prediction tensor might look like [True, False, True, True, False, ...].
- After casting to float32, it becomes [1.0, 0.0, 1.0, 1.0, 0.0, ...].
- The mean of this tensor is the ratio of correct predictions to the total number of predictions in the batch, which is the accuracy.
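The same three steps can be reproduced without TensorFlow. The sketch below is a plain-Python stand-in for the argmax, cast, and mean operations; the argmax and accuracy helpers and the four sample rows are hypothetical and only meant to show the arithmetic.

```python
def argmax(values):
    """Index of the largest value in a list."""
    return max(range(len(values)), key=lambda i: values[i])

def accuracy(y_pred, y_true):
    """Fraction of rows whose predicted class matches the true class."""
    pred_classes = [argmax(row) for row in y_pred]
    true_classes = [argmax(row) for row in y_true]
    # Step 1: boolean comparison, like tf.equal
    correct_prediction = [p == t for p, t in zip(pred_classes, true_classes)]
    # Step 2: cast booleans to floats, like tf.cast (True -> 1.0, False -> 0.0)
    as_float = [float(c) for c in correct_prediction]
    # Step 3: average the 1s and 0s, like tf.reduce_mean
    return sum(as_float) / len(as_float)

# Hypothetical predictions (softmax outputs) and one-hot labels for 4 examples.
y_pred = [[0.7, 0.2, 0.1], [0.1, 0.3, 0.6], [0.2, 0.5, 0.3], [0.6, 0.3, 0.1]]
y_true = [[1, 0, 0], [0, 1, 0], [0, 1, 0], [1, 0, 0]]

print(accuracy(y_pred, y_true))  # 3 of 4 correct -> 0.75
```

Only the second example is misclassified (predicted class 2, true class 1), so the mean of [1.0, 0.0, 1.0, 1.0] is 0.75.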

More Examples
