2D Convolution - tf.nn.conv2d:

Example:

input_data = tf.reshape(tf.constant([[1, 2, 3], [4, 5, 6], [7, 8, 9]], dtype=tf.float32), [1, 3, 3, 1])

filters = tf.reshape(tf.constant([[1, 0, -1], [2, 0, -2], [1, 0, -1]], dtype=tf.float32), [3, 3, 1, 1])

conv_output = tf.nn.conv2d(input_data, filters, strides=[1, 1, 1, 1], padding='VALID')

Output:

Performing a 2D convolution operation with the specified filter and stride.

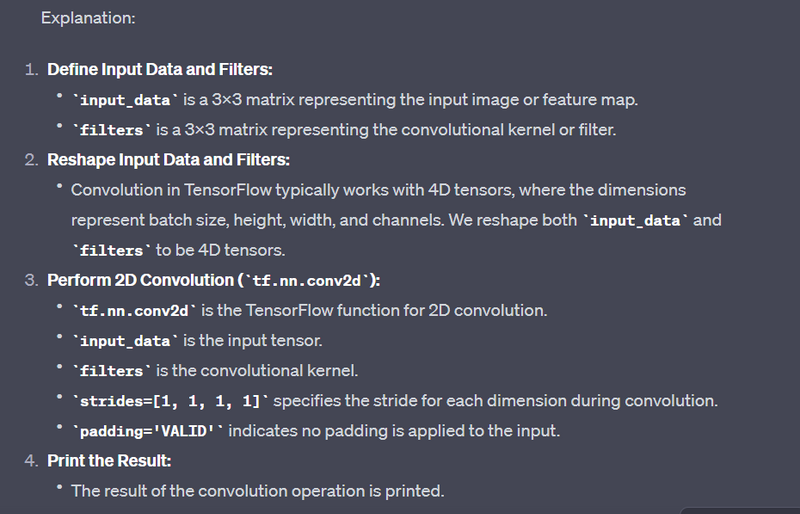

Explanation

import tensorflow as tf

# Define input data and filters

input_data = tf.constant([[1, 2, 3], [4, 5, 6], [7, 8, 9]], dtype=tf.float32)

filters = tf.constant([[1, 0, -1], [2, 0, -2], [1, 0, -1]], dtype=tf.float32)

# Reshape to 4D: the input as [batch, height, width, channels], the filter as [height, width, in_channels, out_channels]

input_data = tf.reshape(input_data, [1, 3, 3, 1])

filters = tf.reshape(filters, [3, 3, 1, 1])

# Perform 2D convolution

conv_output = tf.nn.conv2d(input_data, filters, strides=[1, 1, 1, 1], padding='VALID')

# Print the result

print("Convolution Output:")

print(conv_output.numpy())

Convolution Output:

[[[[-8.]]]]

Each element of the output is obtained by placing the filter over a region of the input, multiplying the overlapping values element-wise and summing the products; the filter slides across the input in steps set by strides. The padding='VALID' argument means no padding is applied, so the filter is only placed where it fits entirely inside the input.

Here the 3x3 filter is as large as the 3x3 input, so it fits in exactly one position and the output is a single value, -8, in a tensor of shape [1, 1, 1, 1]. Note that tf.nn.conv2d does not flip the filter (strictly speaking it computes a cross-correlation), so each filter weight multiplies the input value it sits on, exactly as written.
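The sliding-window arithmetic can be sketched in plain Python for one image with one channel. This is a minimal illustration, not TensorFlow's implementation: `conv2d_valid` is a hypothetical helper that assumes a single batch element and a single input and output channel, and, like `tf.nn.conv2d`, it does not flip the filter.

```python
def conv2d_valid(image, kernel, stride=1):
    """Cross-correlate a 2D image with a 2D kernel, no padding (like padding='VALID')."""
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    out_h = (ih - kh) // stride + 1
    out_w = (iw - kw) // stride + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Sum of element-wise products between the kernel and the window
            # whose top-left corner is at (r * stride, c * stride).
            total = 0.0
            for u in range(kh):
                for v in range(kw):
                    total += kernel[u][v] * image[r * stride + u][c * stride + v]
            row.append(total)
        output.append(row)
    return output


image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
kernel = [[1, 0, -1], [2, 0, -2], [1, 0, -1]]
result = conv2d_valid(image, kernel)
print(result)  # [[-8.0]]
```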

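To see how each output value is computed, write the nine filter weights as a to i. Here they are set to the values of the filter above, and the input is written directly in 4D:

import tensorflow as tf

# The nine filter weights, row by row (the same filter as above)

a, b, c, d, e, f, g, h, i = 1, 0, -1, 2, 0, -2, 1, 0, -1

input_data = tf.constant([[[[1], [2], [3]],

[[4], [5], [6]],

[[7], [8], [9]]]], dtype=tf.float32)

filters = tf.constant([[[[a]], [[b]], [[c]]],

[[[d]], [[e]], [[f]]],

[[[g]], [[h]], [[i]]]], dtype=tf.float32)

conv_output = tf.nn.conv2d(input_data, filters, strides=[1, 1, 1, 1], padding='VALID')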

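With padding='VALID' the filter fits in only one position, so conv_output has shape [1, 1, 1, 1] and its single element (batch 0, row 0, column 0, channel 0) is:

conv_output[0, 0, 0, 0] = a*1 + b*2 + c*3 +

d*4 + e*5 + f*6 +

g*7 + h*8 + i*9

With the filter from the first example (a = 1, b = 0, c = -1, d = 2, e = 0, f = -2, g = 1, h = 0, i = -1) this is 1 - 3 + 8 - 12 + 7 - 9 = -8.

There are no positions (0, 1), (1, 0) or (1, 1): moving the filter one step in any direction would push part of it past the edge of the input. Such positions appear only when the input is zero-padded, as with padding='SAME' in the next example, where the filter is centred on every input pixel. The top-left output there, for instance, is:

conv_output[0, 0, 0, 0] = a*0 + b*0 + c*0 +

d*0 + e*1 + f*2 +

g*0 + h*4 + i*5

which, with the same filter values, is -4 - 5 = -9.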

The strides=[1, 1, 1, 1] argument sets the step of the sliding window along each dimension of the NHWC input (batch, height, width, channels). TensorFlow requires the batch and channel strides to be 1, so in practice only the middle two entries, the vertical and horizontal steps, can be changed. The padding='VALID' argument ensures no padding is added to the input.

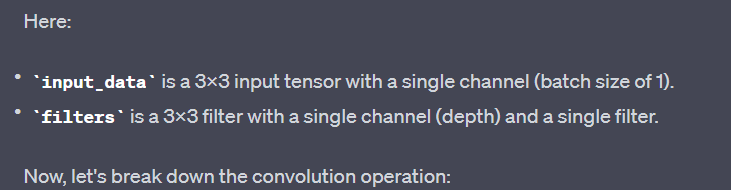
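How far the window can travel fixes the output size. The sketch below encodes the per-dimension formulas from TensorFlow's convolution documentation: the output size is ceil((in - k + 1) / stride) for 'VALID' and ceil(in / stride) for 'SAME', and 'SAME' splits the zero padding it needs so that any odd extra row or column goes at the end. `output_size` and `same_padding` are hypothetical helper names used only for illustration.

```python
import math


def output_size(in_size, k, stride, padding):
    """Spatial output size of a convolution or pooling along one dimension."""
    if padding == "VALID":
        return math.ceil((in_size - k + 1) / stride)
    if padding == "SAME":
        return math.ceil(in_size / stride)
    raise ValueError(f"unknown padding: {padding}")


def same_padding(in_size, k, stride):
    """(before, after) zeros that padding='SAME' adds along one dimension."""
    out = output_size(in_size, k, stride, "SAME")
    total = max((out - 1) * stride + k - in_size, 0)
    return total // 2, total - total // 2


print(output_size(3, 3, 1, "VALID"))  # 1  (3x3 filter on a 3x3 input)
print(output_size(3, 3, 1, "SAME"))   # 3
print(same_padding(3, 3, 1))          # (1, 1)
print(output_size(3, 2, 2, "VALID"))  # 1  (2x2 window, stride 2)
```

Height and width are handled independently, so for non-square inputs or windows each formula is applied once per spatial dimension.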

2D Convolution with Padding - tf.nn.conv2d:

Example:

input_data = tf.reshape(tf.constant([[1, 2, 3], [4, 5, 6], [7, 8, 9]], dtype=tf.float32), [1, 3, 3, 1])

filters = tf.reshape(tf.constant([[1, 0, -1], [2, 0, -2], [1, 0, -1]], dtype=tf.float32), [3, 3, 1, 1])

conv_output = tf.nn.conv2d(input_data, filters, strides=[1, 1, 1, 1], padding='SAME')

Output:

Performing a 2D convolution with zero-padding so that, with a stride of 1, the output keeps the input's height and width (3x3).

Explanation

import tensorflow as tf

# Define input data and filters

input_data = tf.constant([[1, 2, 3], [4, 5, 6], [7, 8, 9]], dtype=tf.float32)

filters = tf.constant([[1, 0, -1], [2, 0, -2], [1, 0, -1]], dtype=tf.float32)

# Reshape to 4D: the input as [batch, height, width, channels], the filter as [height, width, in_channels, out_channels]

input_data = tf.reshape(input_data, [1, 3, 3, 1])

filters = tf.reshape(filters, [3, 3, 1, 1])

# Perform 2D convolution with SAME padding

conv_output = tf.nn.conv2d(input_data, filters, strides=[1, 1, 1, 1], padding='SAME')

# Print the result

print("Convolution Output with SAME padding:")

print(conv_output.numpy())

Convolution Output with SAME padding:

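[[[[ -9.]
   [ -6.]
   [  9.]]

  [[-20.]
   [ -8.]
   [ 20.]]

  [[-21.]
   [ -6.]
   [ 21.]]]]

The output now has the same 3x3 spatial size as the input. The centre value, -8, is the single 'VALID' result; the border values come from windows that overlap the zero padding.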

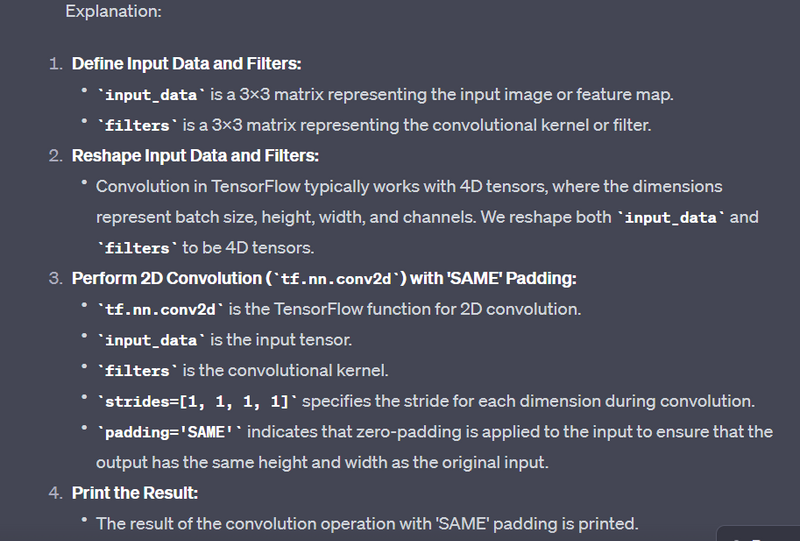
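'SAME' padding amounts to surrounding the input with zeros and then running the 'VALID' computation. The sketch below is plain Python for one channel at stride 1; `zero_pad` and `conv2d_valid` are hypothetical helpers, not TensorFlow functions. It pads the 3x3 input with one ring of zeros, which is what a 3x3 filter needs at stride 1, and reproduces the 3x3 result.

```python
def zero_pad(image, top, bottom, left, right):
    """Surround a 2D list with rows and columns of zeros."""
    width = len(image[0]) + left + right
    padded = [[0] * width for _ in range(top)]
    for row in image:
        padded.append([0] * left + list(row) + [0] * right)
    padded.extend([0] * width for _ in range(bottom))
    return padded


def conv2d_valid(image, kernel):
    """Stride-1 cross-correlation of one channel, no padding, no kernel flip."""
    kh, kw = len(kernel), len(kernel[0])
    return [
        [
            float(sum(kernel[u][v] * image[r + u][c + v]
                      for u in range(kh) for v in range(kw)))
            for c in range(len(image[0]) - kw + 1)
        ]
        for r in range(len(image) - kh + 1)
    ]


image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
kernel = [[1, 0, -1], [2, 0, -2], [1, 0, -1]]
# One row/column of zeros on every side keeps the 3x3 size at stride 1.
same_output = conv2d_valid(zero_pad(image, 1, 1, 1, 1), kernel)
for row in same_output:
    print(row)
# [-9.0, -6.0, 9.0]
# [-20.0, -8.0, 20.0]
# [-21.0, -6.0, 21.0]
```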

2D Max Pooling - tf.nn.max_pool:

Example:

input_data = tf.reshape(tf.constant([[1, 2, 3], [4, 5, 6], [7, 8, 9]], dtype=tf.float32), [1, 3, 3, 1])

pool_output = tf.nn.max_pool(input_data, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='VALID')

Output:

Applying 2D max pooling with a 2x2 pooling window and 2x2 strides.

Explanation

import tensorflow as tf

# Define input data

input_data = tf.constant([[1, 2, 3], [4, 5, 6], [7, 8, 9]], dtype=tf.float32)

# Reshape input_data to a 4D tensor (batch size, height, width, channels)

input_data = tf.reshape(input_data, [1, 3, 3, 1])

# Perform max pooling

pool_output = tf.nn.max_pool(input_data, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='VALID')

# Print the result

print("Max Pooling Output:")

print(pool_output.numpy())

Max Pooling Output:

[[[[5.]]]]

In this case, max pooling produces a single value (a 4D tensor with shape [1, 1, 1, 1]). With padding='VALID' the 2x2 window is only placed where it fits inside the 3x3 input: the first placement covers [1, 2, 4, 5], and a stride of 2 would push the next window past the edge, so the last row and column are never pooled. The output value, 5, is the maximum of that window. Max pooling is used to downsample a feature map: it keeps the strongest response in each window while shrinking the spatial dimensions.

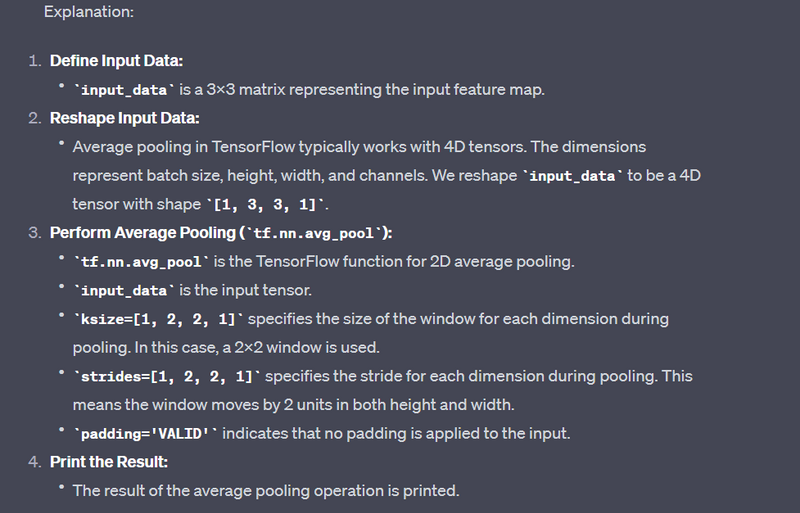
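Pooling uses the same sliding window as convolution but has no weights: each window is simply reduced to one number. A plain-Python sketch with no padding, assuming a single channel (`pool2d` is a hypothetical helper):

```python
def pool2d(image, k, stride, reduce_fn):
    """Slide a k x k window with the given stride (no padding, like 'VALID')
    and reduce each window to one number with reduce_fn."""
    out_h = (len(image) - k) // stride + 1
    out_w = (len(image[0]) - k) // stride + 1
    return [
        [
            reduce_fn([image[r * stride + u][c * stride + v]
                       for u in range(k) for v in range(k)])
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
max_result = pool2d(image, k=2, stride=2, reduce_fn=max)
print(max_result)  # [[5]]  (only the window [1, 2, 4, 5] fits)

# On a 4x4 input the same window and stride give a 2x2 result.
grid = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
print(pool2d(grid, k=2, stride=2, reduce_fn=max))  # [[6, 8], [14, 16]]
```

The reducer is the only difference between pooling variants; the average-pooling example that follows swaps the maximum for a mean.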

2D Average Pooling - tf.nn.avg_pool:

Example:

input_data = tf.reshape(tf.constant([[1, 2, 3], [4, 5, 6], [7, 8, 9]], dtype=tf.float32), [1, 3, 3, 1])

pool_output = tf.nn.avg_pool(input_data, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='VALID')

Output:

Applying 2D average pooling with a 2x2 pooling window and 2x2 strides.

Explanation

import tensorflow as tf

# Define input data

input_data = tf.constant([[1, 2, 3], [4, 5, 6], [7, 8, 9]], dtype=tf.float32)

# Reshape input_data to a 4D tensor (batch size, height, width, channels)

input_data = tf.reshape(input_data, [1, 3, 3, 1])

# Perform average pooling

pool_output = tf.nn.avg_pool(input_data, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='VALID')

# Print the result

print("Average Pooling Output:")

print(pool_output.numpy())

output

Average Pooling Output:

[[[[3.]]]]

The single output value, 3, is the mean of the only 2x2 window that fits: (1 + 2 + 4 + 5) / 4.

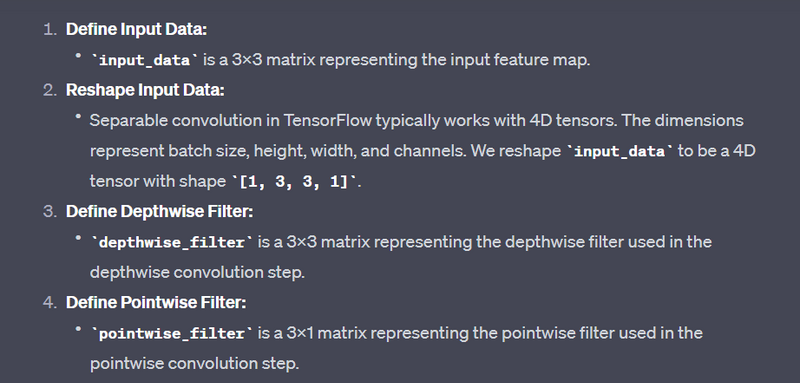
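Average pooling is the same windowed reduction as max pooling, with the mean in place of the maximum. A minimal plain-Python sketch, assuming a single channel and no padding (`avg_pool2d` is a hypothetical helper):

```python
def avg_pool2d(image, k, stride):
    """Mean over each k x k window, no padding (like padding='VALID')."""
    out_h = (len(image) - k) // stride + 1
    out_w = (len(image[0]) - k) // stride + 1
    result = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            window = [image[r * stride + u][c * stride + v]
                      for u in range(k) for v in range(k)]
            row.append(sum(window) / len(window))
        result.append(row)
    return result


image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
avg_result = avg_pool2d(image, k=2, stride=2)
print(avg_result)  # [[3.0]]
```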

Separable Convolution - tf.nn.separable_conv2d:

Example:

input_data = tf.reshape(tf.constant([[1, 2, 3], [4, 5, 6], [7, 8, 9]], dtype=tf.float32), [1, 3, 3, 1])

depthwise_filter = tf.reshape(tf.constant([[1, 0, -1], [2, 0, -2], [1, 0, -1]], dtype=tf.float32), [3, 3, 1, 1])

pointwise_filter = tf.reshape(tf.constant([[1], [0], [-1]], dtype=tf.float32), [1, 1, 1, 3])

separable_conv_output = tf.nn.separable_conv2d(input_data, depthwise_filter, pointwise_filter, strides=[1, 1, 1, 1], padding='VALID')

Output:

Performing separable 2D convolution using depthwise and pointwise filters.

Explanation

import tensorflow as tf

# Define input data

input_data = tf.constant([[1, 2, 3], [4, 5, 6], [7, 8, 9]], dtype=tf.float32)

# Reshape input_data to a 4D tensor (batch size, height, width, channels)

input_data = tf.reshape(input_data, [1, 3, 3, 1])

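# Define the depthwise filter: [filter_height, filter_width, in_channels, channel_multiplier]

depthwise_filter = tf.reshape(tf.constant([[1, 0, -1], [2, 0, -2], [1, 0, -1]], dtype=tf.float32), [3, 3, 1, 1])

# Define the pointwise (1x1) filter: [1, 1, in_channels * channel_multiplier, out_channels]
# Its three weights mix the single depthwise channel into three output channels

pointwise_filter = tf.reshape(tf.constant([[1], [0], [-1]], dtype=tf.float32), [1, 1, 1, 3])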

# Perform separable convolution

separable_conv_output = tf.nn.separable_conv2d(

input_data, depthwise_filter, pointwise_filter, strides=[1, 1, 1, 1], padding='VALID'

)

# Print the result

print("Separable Convolution Output:")

print(separable_conv_output.numpy())

output

Separable Convolution Output:

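[[[[-8.  0.  8.]]]]

The depthwise step reduces the only 3x3 window to -8, and the pointwise step multiplies that value by the weights 1, 0 and -1, giving a 1x1 output with three channels (shape [1, 1, 1, 3]).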

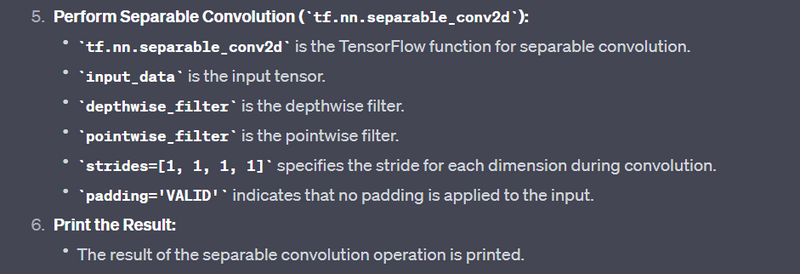

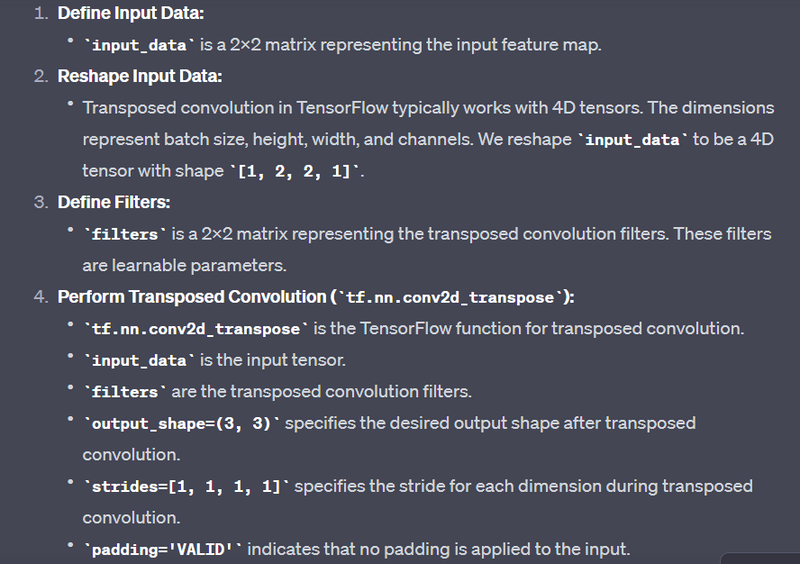
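A separable convolution splits the work into a depthwise step, which filters each input channel spatially on its own, and a pointwise step, a 1x1 convolution that mixes channels. The plain-Python sketch below covers only the single-input-channel, channel-multiplier-1 case used here; `separable_conv2d_1ch`, `pointwise` and `conv2d_valid` are hypothetical helpers, not TensorFlow functions.

```python
def conv2d_valid(image, kernel):
    """Stride-1 cross-correlation of one channel, no padding."""
    kh, kw = len(kernel), len(kernel[0])
    return [
        [
            float(sum(kernel[u][v] * image[r + u][c + v]
                      for u in range(kh) for v in range(kw)))
            for c in range(len(image[0]) - kw + 1)
        ]
        for r in range(len(image) - kh + 1)
    ]


def pointwise(channels, weights):
    """1x1 convolution at one pixel: out[k] = sum over q of channels[q] * weights[q][k]."""
    out = []
    for k in range(len(weights[0])):
        total = 0.0
        for q, value in enumerate(channels):
            total += value * weights[q][k]
        out.append(total)
    return out


def separable_conv2d_1ch(image, depthwise_kernel, pointwise_weights):
    """Separable convolution for ONE input channel and channel multiplier 1."""
    # Step 1 (depthwise): spatial filtering of the single channel.
    depthwise = conv2d_valid(image, depthwise_kernel)
    # Step 2 (pointwise): mix the depthwise channel into the output channels.
    return [[pointwise([value], pointwise_weights) for value in row]
            for row in depthwise]


image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
depthwise_kernel = [[1, 0, -1], [2, 0, -2], [1, 0, -1]]
pointwise_weights = [[1, 0, -1]]  # 1 depthwise channel -> 3 output channels
sep_result = separable_conv2d_1ch(image, depthwise_kernel, pointwise_weights)
print(sep_result)  # [[[-8.0, 0.0, 8.0]]]
```

The pointwise weights are written as one row per depthwise channel, mirroring the last two axes of TensorFlow's [1, 1, in_channels * channel_multiplier, out_channels] pointwise filter.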

Transposed Convolution (Deconvolution) - tf.nn.conv2d_transpose:

Example:

input_data = tf.reshape(tf.constant([[1, 2], [3, 4]], dtype=tf.float32), [1, 2, 2, 1])

filters = tf.reshape(tf.constant([[1, 0], [0, 2]], dtype=tf.float32), [2, 2, 1, 1])

transposed_conv_output = tf.nn.conv2d_transpose(input_data, filters, output_shape=[1, 3, 3, 1], strides=[1, 1, 1, 1], padding='VALID')

Output:

Performing transposed convolution to upsample the input data.

Explanation

import tensorflow as tf

# Define input data

input_data = tf.constant([[1, 2], [3, 4]], dtype=tf.float32)

# Reshape input_data to a 4D tensor (batch size, height, width, channels)

input_data = tf.reshape(input_data, [1, 2, 2, 1])

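# Define filters as [height, width, output_channels, in_channels]

filters = tf.reshape(tf.constant([[1, 0], [0, 2]], dtype=tf.float32), [2, 2, 1, 1])

# Perform transposed convolution; output_shape is the full 4D shape [batch, height, width, channels]

transposed_conv_output = tf.nn.conv2d_transpose(

input_data, filters, output_shape=[1, 3, 3, 1], strides=[1, 1, 1, 1], padding='VALID'

)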

# Print the result

print("Transposed Convolution Output:")

print(transposed_conv_output.numpy())

output

Transposed Convolution Output:

[[[1. 2. 0.]

[3. 8. 4.]

[0. 6. 8.]]]

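In this case, the transposed convolution turns the 2x2 input into a 3x3 result (a 4D tensor with shape [1, 3, 3, 1]). Each input value places a copy of the 2x2 filter, scaled by that value, into the output at its own position, and overlapping contributions are added: the centre value 6, for example, is 1*2 from the top-left input plus 4*1 from the bottom-right input. Despite the name "deconvolution", this operation is the transpose (gradient) of tf.nn.conv2d rather than its inverse. Transposed convolution is often used for upsampling and for generating higher-resolution feature maps.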
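The scatter-add view of transposed convolution can be sketched in plain Python for one channel and no padding. `conv2d_transpose_1ch` is a hypothetical helper, not TensorFlow's implementation; it assumes a single batch element and a single input and output channel, and it does not flip the filter.

```python
def conv2d_transpose_1ch(image, kernel, stride=1):
    """Single-channel transposed convolution with no padding (like 'VALID'):
    every input value adds a scaled copy of the kernel to the output,
    and overlapping copies are summed."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out_h = (ih - 1) * stride + kh
    out_w = (iw - 1) * stride + kw
    output = [[0.0] * out_w for _ in range(out_h)]
    for r in range(ih):
        for c in range(iw):
            # Stamp kernel * image[r][c] with its top-left corner at (r*stride, c*stride).
            for u in range(kh):
                for v in range(kw):
                    output[r * stride + u][c * stride + v] += image[r][c] * kernel[u][v]
    return output


image = [[1, 2], [3, 4]]
kernel = [[1, 0], [0, 2]]
transposed = conv2d_transpose_1ch(image, kernel)
for row in transposed:
    print(row)
# [1.0, 2.0, 0.0]
# [3.0, 6.0, 4.0]
# [0.0, 6.0, 8.0]
```

Passing stride=2 spaces the stamped copies two positions apart, so for this input they no longer overlap and the output grows to 4x4; that spacing is what makes transposed convolution useful for upsampling.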

These are some common convolution operations in TensorFlow, which are fundamental building blocks for convolutional neural networks (CNNs) and image processing tasks. The specific operations and filter sizes used can vary depending on the application and architecture.
