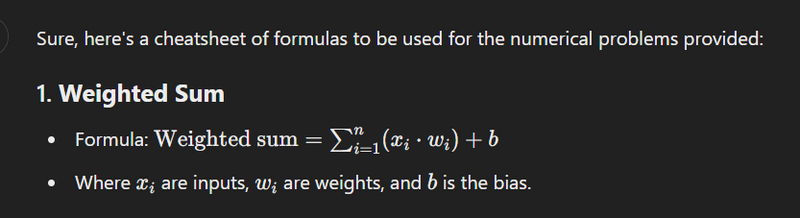

Problem: A neuron has input values [2, 3] and weights [0.4, 0.6]. Calculate the weighted sum.

Solution: Weighted sum = 2 * 0.4 + 3 * 0.6 = 0.8 + 1.8 = 2.6

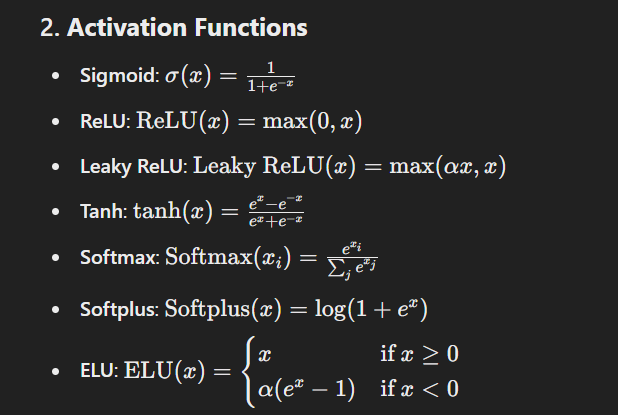

Problem: Given a weighted sum of 2.6, apply the ReLU activation function.

Solution: ReLU(2.6) = max(0, 2.6) = 2.6

Problem: Apply the sigmoid activation function to a weighted sum of 1.0.

Solution: Sigmoid(1.0) = 1 / (1 + exp(-1.0)) ≈ 0.731

Problem: A neuron has input values [1, -1] and weights [0.5, -0.5]. Calculate the weighted sum.

Solution: Weighted sum = 1 * 0.5 + (-1) * (-0.5) = 0.5 + 0.5 = 1.0

Problem: Given a weighted sum of 1.0, apply the tanh activation function.

Solution: tanh(1.0) = (exp(1.0) - exp(-1.0)) / (exp(1.0) + exp(-1.0)) ≈ 0.761

Problem: A neuron receives inputs [0.5, 2.0] with weights [0.2, 0.8]. Calculate the weighted sum.

Solution: Weighted sum = 0.5 * 0.2 + 2.0 * 0.8 = 0.1 + 1.6 = 1.7

Problem: Apply the softmax activation function to the outputs [2.0, 1.0].

Solution: Softmax([2.0, 1.0]) = [exp(2.0)/(exp(2.0) + exp(1.0)), exp(1.0)/(exp(2.0) + exp(1.0))] ≈ [0.731, 0.269]

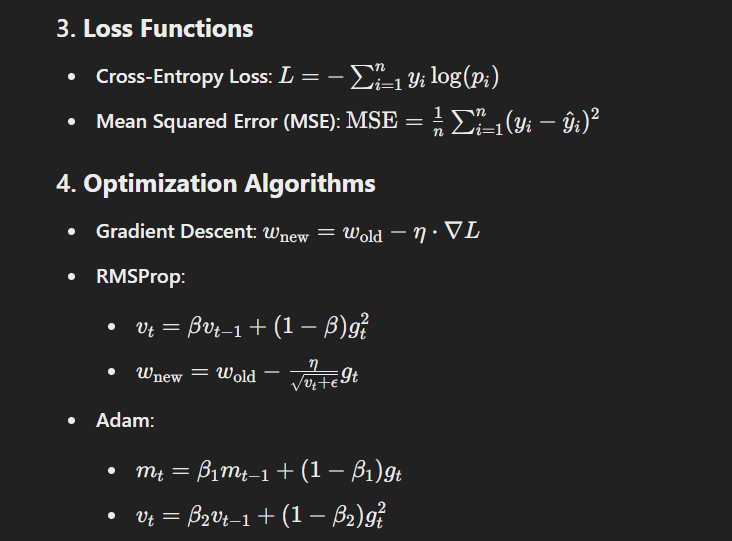

Problem: Compute the cross-entropy loss for a prediction of 0.8 with the true label 1.

Solution: Cross-entropy loss = -log(0.8) ≈ 0.223

Problem: A neuron with inputs [3, 4] and weights [0.3, 0.7]. Calculate the weighted sum.

Solution: Weighted sum = 3 * 0.3 + 4 * 0.7 = 0.9 + 2.8 = 3.7

Problem: Apply the leaky ReLU activation function to a weighted sum of -1.0.

Solution: Leaky ReLU(-1.0) = max(0.01 * -1.0, -1.0) = -0.01

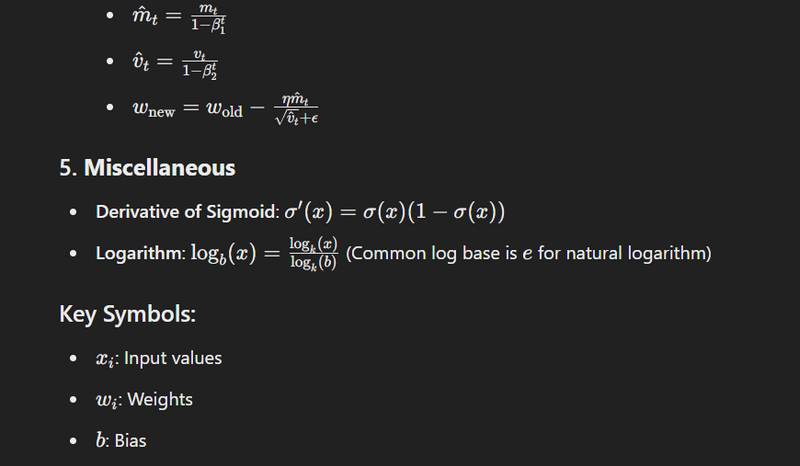

Problem: Calculate the derivative of the sigmoid function at x = 0.5.

Solution: Derivative of sigmoid at 0.5 ≈ 0.235

Problem: For a neuron with inputs [1, 1] and weights [0.4, 0.6], calculate the output using the ReLU activation function.

Solution: Weighted sum = 1 * 0.4 + 1 * 0.6 = 1.0; ReLU(1.0) = 1.0

Problem: Calculate the gradient of the loss function with respect to the weights [0.5, 0.3] for inputs [1, 2].

Solution: Gradient = [input1 * error, input2 * error] (Assuming error = -0.2), Gradient = [1 * -0.2, 2 * -0.2] = [-0.2, -0.4]

Problem: Apply the softplus activation function to a weighted sum of 0.5.

Solution: Softplus(0.5) = log(1 + exp(0.5)) ≈ 0.974

Problem: Calculate the output of a neuron with inputs [2, -1], weights [0.3, -0.2], and using the tanh activation function.

Solution: Weighted sum = 2 * 0.3 + (-1) * (-0.2) = 0.6 + 0.2 = 0.8; tanh(0.8) ≈ 0.664

Problem: For a neuron with input 1.0, weight 1.5, and bias 0.5, calculate the output using the sigmoid activation function.

Solution: Weighted sum = 1.0 * 1.5 + 0.5 = 2.0; Sigmoid(2.0) ≈ 0.881

Problem: Calculate the RMSProp update for a weight of 0.5 with a gradient of -0.1, learning rate of 0.01, decay rate of 0.9, and epsilon of 1e-8.

Solution: Update = learning rate * gradient / sqrt(accumulated_gradient + epsilon) (assuming accumulated_gradient = gradient^2) ≈ 0.01 * -0.1 / sqrt(0.01 + 1e-8) ≈ -0.01

Problem: Calculate the output of a neuron with inputs [1, 2], weights [0.1, 0.9], and using the softmax activation function on the output.

Solution: Weighted sum = 1 * 0.1 + 2 * 0.9 = 0.1 + 1.8 = 1.9; Softmax([1.9]) = [1] (since it's a single output)

Problem: Compute the mean squared error for predictions [0.5, 0.6] and true labels [0.4, 0.8].

Solution: MSE = (1/2) * ((0.5 - 0.4)^2 + (0.6 - 0.8)^2) = (1/2) * (0.01 + 0.04) = 0.025

Problem: Apply the ELU activation function to a weighted sum of -2.0.

Solution: ELU(-2.0) = alpha * (exp(-2.0) - 1) (assuming alpha = 1.0) ≈ -0.865

Problem: You have a neuron with inputs [1.5, -2.0] and weights [0.3, 0.8]. The bias for this neuron is -0.1. Calculate the neuron's output using the linear activation function.

Solution: Weighted sum = (1.5 * 0.3) + (-2.0 * 0.8) + (-0.1) = 0.45 - 1.6 - 0.1 = -1.25

Problem: A neural network layer has 3 neurons. The input to this layer is [1.0, -1.5, 2.0], and the weights for the three neurons are [0.2, 0.8, -0.5], [0.7, -0.1, 0.9], and [-0.3, 0.6, 0.4], respectively. Calculate the output of this layer using the ReLU activation function.

Solution:

Neuron 1: Weighted sum = (1.0 * 0.2) + (-1.5 * 0.8) + (2.0 * -0.5) = 0.2 - 1.2 - 1.0 = -2.0; ReLU(-2.0) = 0

Neuron 2: Weighted sum = (1.0 * 0.7) + (-1.5 * -0.1) + (2.0 * 0.9) = 0.7 + 0.15 + 1.8 = 2.65; ReLU(2.65) = 2.65

Neuron 3: Weighted sum = (1.0 * -0.3) + (-1.5 * 0.6) + (2.0 * 0.4) = -0.3 - 0.9 + 0.8 = -0.4; ReLU(-0.4) = 0

Problem: A neuron receives inputs [0.5, -1.0, 1.5] with weights [0.2, -0.3, 0.7]. Apply the sigmoid activation function to calculate the output.

Solution: Weighted sum = (0.5 * 0.2) + (-1.0 * -0.3) + (1.5 * 0.7) = 0.1 + 0.3 + 1.05 = 1.45; Sigmoid(1.45) = 1 / (1 + exp(-1.45)) ≈ 0.81

Problem: Calculate the output of a softmax function for a neuron layer with outputs [2.0, 1.0, 0.1].

Solution: Softmax([2.0, 1.0, 0.1]) = [exp(2.0)/(exp(2.0) + exp(1.0) + exp(0.1)), exp(1.0)/(exp(2.0) + exp(1.0) + exp(0.1)), exp(0.1)/(exp(2.0) + exp(1.0) + exp(0.1))]

exp(2.0) ≈ 7.39

exp(1.0) ≈ 2.72

exp(0.1) ≈ 1.11

Softmax ≈ [7.39 / (7.39 + 2.72 + 1.11), 2.72 / (7.39 + 2.72 + 1.11), 1.11 / (7.39 + 2.72 + 1.11)] ≈ [0.65, 0.24, 0.10]

Problem: A neural network has 4 outputs before applying the softmax activation function: [3.0, 1.0, 0.2, 2.5]. Calculate the probability distribution.

Solution: Softmax([3.0, 1.0, 0.2, 2.5]) = [exp(3.0)/(exp(3.0) + exp(1.0) + exp(0.2) + exp(2.5)), ..., exp(2.5)/(exp(3.0) + exp(1.0) + exp(0.2) + exp(2.5))]

exp(3.0) ≈ 20.09

exp(1.0) ≈ 2.72

exp(0.2) ≈ 1.22

exp(2.5) ≈ 12.18

Softmax ≈ [20.09 / (20.09 + 2.72 + 1.22 + 12.18), 2.72 / (20.09 + 2.72 + 1.22 + 12.18), 1.22 / (20.09 + 2.72 + 1.22 + 12.18), 12.18 / (20.09 + 2.72 + 1.22 + 12.18)] ≈ [0.51, 0.07, 0.03, 0.31]

Problem: A neuron receives inputs [1.0, 0.5, 2.0], weights [0.4, 0.3, 0.6], and a bias of -0.2. Calculate the output using the tanh activation function.

Solution: Weighted sum = (1.0 * 0.4) + (0.5 * 0.3) + (2.0 * 0.6) + (-0.2) = 0.4 + 0.15 + 1.2 - 0.2 = 1.55; tanh(1.55) ≈ 0.914

Problem: For a neuron with inputs [2.0, 1.5], weights [0.5, -0.7], and a bias of 0.3, calculate the output using the ReLU activation function.

Solution: Weighted sum = (2.0 * 0.5) + (1.5 * -0.7) + 0.3 = 1.0 - 1.05 + 0.3 = 0.25; ReLU(0.25) = 0.25

Problem: Apply the softplus activation function to a neuron with a weighted sum of 1.5.

Solution: Softplus(1.5) = log(1 + exp(1.5)) ≈ 1.70

Problem: A neuron with input [3.0, -2.0], weights [0.2, 0.5], and a bias of 0.1. Calculate the output using the sigmoid activation function.

Solution: Weighted sum = (3.0 * 0.2) + (-2.0 * 0.5) + 0.1 = 0.6 - 1.0 + 0.1 = -0.3; Sigmoid(-0.3) ≈ 0.425

Problem: Calculate the mean squared error for predicted values [0.3, 0.7, 0.2] and true values [0.1, 0.8, 0.3].

Solution: MSE = (1/3) * ((0.3 - 0.1)^2 + (0.7 - 0.8)^2 + (0.2 - 0.3)^2) = (1/3) * (0.04 + 0.01 + 0.01) = 0.02

Problem: Compute the gradient descent update for a weight of 0.4 with a learning rate of 0.01 and a gradient of -0.5.

Solution: New weight = Old weight - (learning rate * gradient) = 0.4 - (0.01 * -0.5) = 0.4 + 0.005 = 0.405

Problem: Apply the ELU activation function to a neuron with a weighted sum of -3.0 and alpha = 1.0.

Solution: ELU(-3.0) = 1.0 * (exp(-3.0) - 1) ≈ 1.0 * (0.05 - 1) = -0.95

Problem: A neuron has inputs [0.6, -1.2, 0.4], weights [0.7, -0.8, 0.5], and a bias of 0.2. Calculate the output using the tanh activation function.

Solution: Weighted sum = (0.6 * 0.7) + (-1.2 * -0.8) + (0.4 * 0.5) + 0.2 = 0.42 + 0.96 + 0.2 + 0.2 = 1.78; tanh(1.78) ≈ 0.945

Problem: For a neuron with inputs [1.0, -2.0, 0.5], weights [0.3, -0.4, 0.8], and a bias of -0.1, calculate the output using the sigmoid activation function.

Solution: Weighted sum = (1.0 * 0.3) + (-2.0 * -0.4) + (0.5 * 0.8) + (-0.1) = 0.3 + 0.8 + 0.4 - 0.1 = 1.4; Sigmoid(1.4) ≈ 0.802

Problem: Calculate the RMSProp update for a weight of 0.3 with a gradient of -0.4, learning rate of 0.01, decay rate of 0.9, and epsilon of 1e-8, given an accumulated gradient of 0.1.

Solution:

New accumulated gradient = decay_rate * old_accumulated_gradient + (1 - decay_rate) * (gradient^2) = 0.9 * 0.1 + 0.1 * 0.16 = 0.1; Update = learning rate * gradient / sqrt(new_accumulated_gradient + epsilon) ≈ 0.01 * -0.4 / sqrt(0.1 + 1e-8) ≈ -0.0126

Problem: Compute the Adam optimizer update for a weight of 0.5 with a gradient of -0.3, learning rate of 0.01, beta1 = 0.9, beta2 = 0.999, epsilon of 1e-8, and initial moments m = 0 and v = 0.

Solution:

m_t = beta1 * m + (1 - beta1) * gradient = 0.9 * 0 + 0.1 * -0.3 = -0.03

v_t = beta2 * v + (1 - beta2) * (gradient^2) = 0.999 * 0 + 0.001 * 0.09 = 0.00009

m_hat = m_t / (1 - beta1^t) = -0.03 / 0.1 = -0.3

v_hat = v_t / (1 - beta2^t) = 0.00009 / 0.001 = 0.09

Update = learning_rate * m_hat / (sqrt(v_hat) + epsilon) ≈ 0.01 * -0.3 / (sqrt(0.09) + 1e-8) ≈ -0.01

Problem: Apply the leaky ReLU activation function with alpha = 0.01 to a neuron with a weighted sum of -2.5.

Solution: Leaky ReLU(-2.5) = max(0.01 * -2.5, -2.5) = -0.025

Problem: Calculate the softmax function for a neural network layer with outputs [0.5, 2.5, 1.5].

Solution: Softmax([0.5, 2.5, 1.5]) = [exp(0.5)/(exp(0.5) + exp(2.5) + exp(1.5)), ..., exp(1.5)/(exp(0.5) + exp(2.5) + exp(1.5))]

exp(0.5) ≈ 1.65

exp(2.5) ≈ 12.18

exp(1.5) ≈ 4.48

Softmax ≈ [1.65 / (1.65 + 12.18 + 4.48), 12.18 / (1.65 + 12.18 + 4.48), 4.48 / (1.65 + 12.18 + 4.48)] ≈ [0.07, 0.72, 0.21]

Problem: A neuron receives inputs [2.0, -1.0, 0.5], weights [0.1, 0.2, 0.3], and a bias of -0.4. Calculate the output using the ReLU activation function.

Solution: Weighted sum = (2.0 * 0.1) + (-1.0 * 0.2) + (0.5 * 0.3) + (-0.4) = 0.2 - 0.2 + 0.15 - 0.4 = -0.25; ReLU(-0.25) = 0

Problem: Calculate the cross-entropy loss for a prediction of [0.7, 0.2, 0.1] with true labels [1, 0, 0].

Solution: Cross-entropy loss = -sum(true_labels * log(predictions)) = - (1 * log(0.7) + 0 * log(0.2) + 0 * log(0.1)) = -log(0.7) ≈ 0.357

Top comments (0)