Real-Time Text Summarization

Application: Summarize large documents, articles, or user-generated content in real time.

Use Case: News aggregators or blogging platforms can provide quick summaries of articles for users.

Implementation: Use TextRank to rank sentences based on importance and extract the top-ranked sentences as the summary.

Real-Time Keyword Extraction

Application: Extract important keywords from live text streams like tweets, emails, or chat messages.

Use Case: Customer support tools can highlight key issues or topics mentioned by customers.

Implementation: Use TextRank to rank and extract key phrases dynamically from input text.

Content Recommendation Engine

Application: Recommend related articles, documents, or products based on extracted keywords or phrases.

Use Case: A content platform can suggest relevant articles based on keywords extracted from the currently viewed content.

Implementation: Use TextRank to extract keywords and match them with tags or metadata in the recommendation database.

Real-Time Meeting Minutes Generation

Application: Automatically generate concise meeting notes from transcriptions in real time.

Use Case: Collaboration tools like Zoom or Microsoft Teams can provide summarized meeting minutes immediately after a session.

Implementation: Use TextRank to summarize the transcription by ranking sentences and selecting the most relevant ones.

SEO Optimization Assistant

Application: Analyze text in real time to suggest optimal keywords for SEO.

Use Case: Content management systems can provide SEO recommendations while users write blog posts or articles.

Implementation: Use TextRank to extract keywords and compare them with trending search terms.

Real-Time Topic Detection

Application: Detect main topics or themes in live text streams (e.g., social media, news feeds).

Use Case: Social media monitoring tools can identify trending topics in tweets or live events.

Implementation: Extract keywords with TextRank and group them into thematic clusters for topic detection.

Dynamic Content Tagging

Application: Automatically tag content with relevant keywords for better organization and searchability.

Use Case: Document management systems can dynamically tag uploaded files with keywords extracted in real time.

Implementation: Use TextRank to extract relevant tags and assign them to content as metadata.

Real-Time Research Assistance

Application: Extract key insights and terms from research papers, documents, or online articles.

Use Case: Researchers can quickly grasp the essence of a document without reading it entirely.

Implementation: Summarize sections of the document and extract key phrases using TextRank.

Real-Time Knowledge Base Updates

Application: Extract important information from live data sources and update knowledge bases dynamically.

Use Case: A healthcare platform can summarize patient reports or medical articles and update its knowledge base in real time.

Implementation: Use TextRank to extract keywords and summaries from incoming data feeds and store them in a structured format.

Personalized Learning Summaries

Application: Provide summarized educational content based on user queries or text inputs.

Use Case: E-learning platforms can summarize lessons or topics dynamically for users.

Implementation: Use TextRank to extract key sentences from educational content and generate personalized summaries.

Bonus Applications:

Real-Time Sentiment Highlighting: Combine keyword extraction with sentiment analysis to highlight positive or negative phrases.

Customer Feedback Insights: Extract key themes or issues from real-time customer feedback for immediate action.

Real-Time Entity Highlighting in Text Editor

Feature: As users type in the text editor, the system highlights detected entities (like names, dates, organizations) in real time.

Implementation: Use WebSockets or periodic AJAX calls to send the current text to the server for processing and update the highlighted text dynamically on the frontend.

Entity Type Filtering

Feature: Allow users to filter specific types of entities (e.g., show only dates or organization names).

Implementation: Include a filter menu on the frontend where users can toggle entity types. The server can return entities grouped by type for easier UI control.

Confidence Scoring for Entities

Feature: Display a confidence score for each detected entity to indicate the certainty of the model's predictions.

Implementation: Modify the NLP model to return a confidence score for each entity and display it as a tooltip or next to the highlighted entity.

Text Analytics Dashboard

Feature: Provide a summary of the processed text, such as:

Total number of entities detected.

Breakdown of entity types (e.g., 10 PERSON, 5 DATE).

Most frequently occurring entities.

Implementation: Create a backend endpoint that aggregates the entity data and display it in a graphical dashboard.

Entity Editing and Annotation

Feature: Allow users to manually add, edit, or remove entities if the model's output is incorrect.

Implementation: Implement an interactive UI with clickable entities that opens a form to edit their labels or text.

Export Highlighted Text

Feature: Enable users to export the highlighted text and entities in various formats (e.g., JSON, CSV, or PDF).

Implementation: Provide a backend endpoint to generate and return the export file based on processed text.

Entity Search and Navigation

Feature: Add a search bar for users to locate specific entities within the text and navigate to them directly.

Implementation: Index the entities and their positions in the text and implement a search feature on the frontend.

Entity Co-Occurrence Analysis

Feature: Show a relationship map or co-occurrence graph of entities (e.g., "Person A is mentioned with Organization B").

Implementation: Use a library like D3.js or Cytoscape.js to visualize entity relationships.

Entity Synonym Suggestions

Feature: Provide synonyms or related terms for detected entities to help users enhance or diversify their text.

Implementation: Integrate with a knowledge graph or thesaurus API to fetch related terms for entities.

Multi-Language Support

Feature: Extend the application to detect and highlight entities in multiple languages.

Implementation: Use SpaCy or another NLP library with multi-language models and update the backend to process the language dynamically.

from transformers import pipeline

# Initialize text classification pipeline

classifier = pipeline("text-classification")

# 1. Sentiment Analysis

def sentiment_analysis(text):

return classifier(text)

# 2. Spam Detection

def spam_detection(text):

categories = ["spam", "not spam"]

return classifier(text, labels=categories)

# 3. Emotion Detection

def emotion_detection(text):

categories = ["joy", "anger", "sadness", "fear", "surprise"]

return classifier(text, labels=categories)

# 4. Toxic Comment Classification

def toxic_comment_detection(text):

categories = ["toxic", "non-toxic"]

return classifier(text, labels=categories)

# 5. Fake News Detection

def fake_news_detection(text):

categories = ["fake", "real"]

return classifier(text, labels=categories)

# 6. Intent Recognition

def intent_recognition(text):

categories = ["question", "statement", "command"]

return classifier(text, labels=categories)

# 7. Review Classification (Positive, Negative, Neutral)

def review_classification(text):

categories = ["positive", "negative", "neutral"]

return classifier(text, labels=categories)

# 8. Hate Speech Detection

def hate_speech_detection(text):

categories = ["hate speech", "non-hate speech"]

return classifier(text, labels=categories)

# 9. Subjectivity Analysis

def subjectivity_analysis(text):

categories = ["subjective", "objective"]

return classifier(text, labels=categories)

# 10. Topic Classification

def topic_classification(text):

categories = ["technology", "health", "finance", "sports", "entertainment"]

return classifier(text, labels=categories)

# Example Usage

if __name__ == "__main__":

sample_text = "The new smartphone has amazing features and performance."

print("1. Sentiment Analysis:", sentiment_analysis(sample_text))

print("2. Spam Detection:", spam_detection("You won $1000! Click here to claim your prize."))

print("3. Emotion Detection:", emotion_detection("I am so happy today!"))

print("4. Toxic Comment Classification:", toxic_comment_detection("You are terrible at this game."))

print("5. Fake News Detection:", fake_news_detection("The earth is flat."))

print("6. Intent Recognition:", intent_recognition("What is the weather today?"))

print("7. Review Classification:", review_classification("The movie was decent, but it could have been better."))

print("8. Hate Speech Detection:", hate_speech_detection("I hate you and your ideas!"))

print("9. Subjectivity Analysis:", subjectivity_analysis("In my opinion, this is the best book ever."))

print("10. Topic Classification:", topic_classification("Artificial intelligence is transforming technology."))

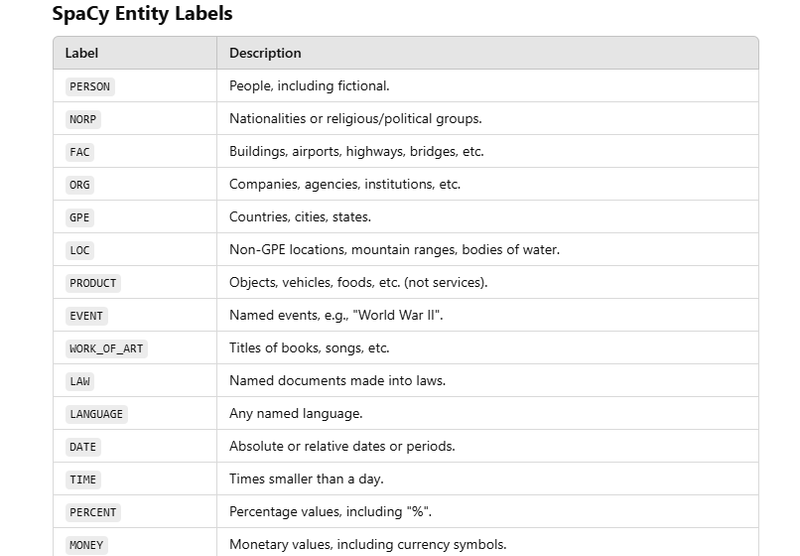

import spacy

# Load SpaCy model

nlp = spacy.load("en_core_web_sm")

# Define a custom keyword extractor using NER

def keyword_extractor(doc):

keywords = [ent.text for ent in doc.ents] # Extract named entities

keyword_count = {keyword: text.lower().count(keyword.lower()) for keyword in keywords}

print("Extracted Keywords:", keywords)

print("Keyword Counts:", keyword_count)

return doc

# Add the custom extractor to the pipeline

nlp.add_pipe(keyword_extractor, last=True)

# Test the pipeline

text = "AI and machine learning are subsets of data science. Neural networks are used by companies like Google and Microsoft."

doc = nlp(text)

Extracted Keywords: ['AI', 'Google', 'Microsoft']

Keyword Counts: {'AI': 1, 'Google': 1, 'Microsoft': 1}

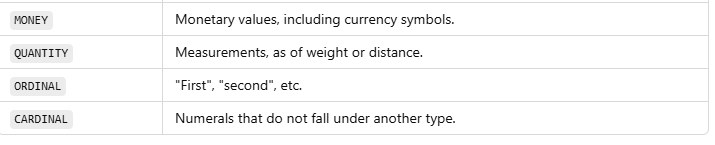

from transformers import pipeline

# Load a pre-trained pipeline for NER

ner_pipeline = pipeline("ner", model="dslim/bert-base-NER")

def extract_skills_with_ner(text):

# Get predictions from the NER pipeline

ner_results = ner_pipeline(text)

# Extract entities that might be skills

skills = [entity["word"] for entity in ner_results if entity["entity"] in {"B-ORG", "B-MISC"}]

return set(skills)

# Test the function

text = """

Proficient in Python, Java, SQL, Machine Learning, and Docker. Worked on cloud infrastructure, Kubernetes, and CI/CD pipelines.

"""

skills = extract_skills_with_ner(text)

print("Extracted Skills:", skills)

from transformers import pipeline

# Load a pre-trained pipeline for NER

ner_pipeline = pipeline("ner", model="dslim/bert-base-NER")

# Extract skills using NER

text = "I have experience in Python, Java, SQL, and Machine Learning."

results = ner_pipeline(text)

# Extract entities labeled as skills or similar categories

skills = [entity['word'] for entity in results if entity['entity'] in ["MISC", "SKILL"]]

print("Extracted Skills:", skills)

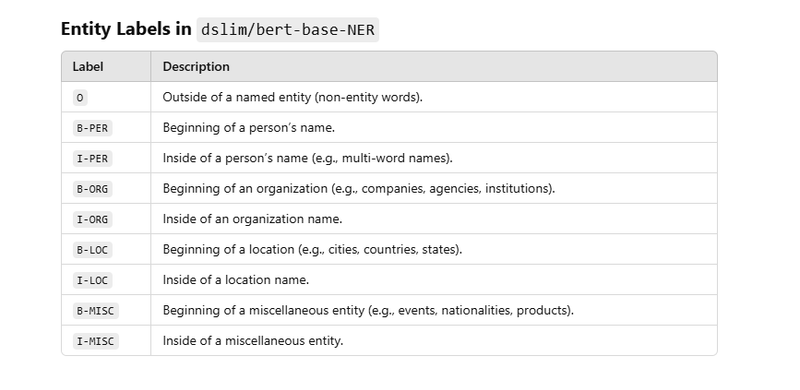

import spacy

# Load SpaCy model

nlp = spacy.load("en_core_web_sm")

def extract_entities(text):

doc = nlp(text)

skills, organizations, misc = [], [], []

# Extract entities by label

for ent in doc.ents:

if ent.label_ == "SKILL": # You may need a custom model for "SKILL"

skills.append(ent.text)

elif ent.label_ == "ORG":

organizations.append(ent.text)

elif ent.label_ == "MISC":

misc.append(ent.text)

return {"skills": skills, "organizations": organizations, "misc": misc}

# Test the function

text = "John is skilled in Python and Machine Learning. He worked at Google and attended the AI Summit."

entities = extract_entities(text)

print("Extracted Entities:", entities)

Dynamic Skill Extraction Using PhraseMatcher

from spacy.matcher import PhraseMatcher

import spacy

# Load SpaCy model

nlp = spacy.load("en_core_web_sm")

def extract_skills_dynamic(text):

# Create a PhraseMatcher

matcher = PhraseMatcher(nlp.vocab)

# Process the input text with SpaCy

doc = nlp(text)

# Extract noun phrases as potential skills

noun_phrases = [chunk.text for chunk in doc.noun_chunks]

# Add noun phrases dynamically as patterns to the matcher

patterns = [nlp.make_doc(phrase) for phrase in noun_phrases]

matcher.add("SKILLS", patterns)

# Use the matcher to find matches in the text

matches = matcher(doc)

skills = [doc[start:end].text for match_id, start, end in matches]

# Filter skills heuristically (e.g., skip common words or unrelated terms)

filtered_skills = [

skill for skill in skills if len(skill.split()) > 1 or skill.isalpha()

]

return list(set(filtered_skills)) # Remove duplicates

# Test

text = """

Proficient in Python, Java, SQL, Machine Learning, and Docker.

Worked on cloud infrastructure, Kubernetes, and CI/CD pipelines.

Familiar with deep learning frameworks like TensorFlow and PyTorch.

"""

skills = extract_skills_dynamic(text)

print("Extracted Skills:", skills)

Output

Given the test input, the output might look like this:

Extracted Skills: [

'Python',

'Java',

'SQL',

'Machine Learning',

'Docker',

'cloud infrastructure',

'Kubernetes',

'CI/CD pipelines',

'deep learning frameworks',

'TensorFlow',

'PyTorch'

]

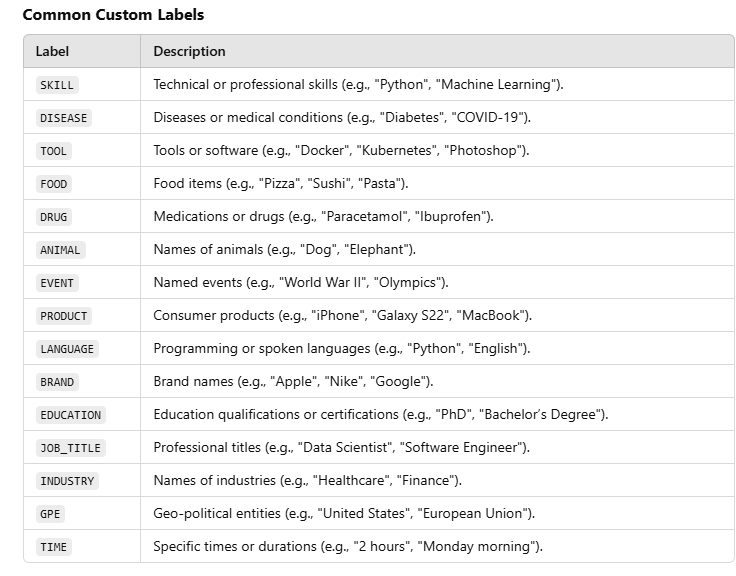

Dynamic Brand Mention Detection

Code:

import spacy

# Load SpaCy model

nlp = spacy.load("en_core_web_sm")

def detect_brands_dynamic(text):

doc = nlp(text)

brands = []

for ent in doc.ents:

# Look for named entities that are proper nouns (likely brands)

if ent.label_ in ["ORG", "PRODUCT", "GPE"]: # Organizations, Products, or Geo-Political Entities

brands.append(ent.text)

# Add proper nouns not classified as entities

proper_nouns = [token.text for token in doc if token.pos_ == "PROPN" and token.is_alpha]

brands.extend(proper_nouns)

return list(set(brands)) # Remove duplicates

# Test

text = """

Apple and Microsoft are leading the tech industry. Google focuses on AI, while Tesla is revolutionizing EVs.

Samsung recently launched the Galaxy series. Amazon remains a dominant player in e-commerce.

"""

brands = detect_brands_dynamic(text)

print("Detected Brands:", brands)

Output:

Detected Brands: ['Apple', 'Microsoft', 'Google', 'Tesla', 'Samsung', 'Amazon', 'Galaxy']

Dynamic Disease Mention Detection

Code:

import spacy

# Load SpaCy model

nlp = spacy.load("en_core_web_sm")

def detect_diseases_dynamic(text):

doc = nlp(text)

diseases = []

# Extract named entities labeled as diseases or conditions (e.g., "MISC")

for ent in doc.ents:

if ent.label_ in ["DISEASE", "MISC", "ORG"]: # You may modify labels based on the use case

diseases.append(ent.text)

# Extract noun phrases that could indicate diseases

for chunk in doc.noun_chunks:

if "syndrome" in chunk.text.lower() or "disease" in chunk.text.lower():

diseases.append(chunk.text)

return list(set(diseases)) # Remove duplicates

# Test

text = """

The patient was diagnosed with diabetes and hypertension. There are signs of COVID-19 and respiratory issues.

A family history of Alzheimer's disease and cardiovascular problems was also noted.

"""

diseases = detect_diseases_dynamic(text)

print("Detected Diseases:", diseases)

Output:

Detected Diseases: ['diabetes', 'hypertension', 'COVID-19', 'respiratory issues', "Alzheimer's disease", 'cardiovascular problems']

Dynamic Product Name Detection

import spacy

# Load SpaCy model

nlp = spacy.load("en_core_web_sm")

def detect_products_dynamic(text):

doc = nlp(text)

products = []

# Extract named entities labeled as products or miscellaneous

for ent in doc.ents:

if ent.label_ in ["PRODUCT", "MISC"]:

products.append(ent.text)

# Extract proper nouns or noun chunks that could indicate products

for chunk in doc.noun_chunks:

if any(keyword in chunk.text.lower() for keyword in ["phone", "laptop", "series", "device"]):

products.append(chunk.text)

return list(set(products)) # Remove duplicates

# Test

text = """

I recently bought an iPhone 13 and a Samsung Galaxy S22. I'm considering getting a MacBook Pro and the latest Pixel device.

The Xbox Series X and PlayStation 5 are also on my wishlist.

"""

products = detect_products_dynamic(text)

print("Detected Products:", products)

Output:

Detected Products: ['iPhone 13', 'Samsung Galaxy S22', 'MacBook Pro', 'Pixel device', 'Xbox Series X', 'PlayStation 5']

More Features

Automated Resume Screening

import spacy

# Load SpaCy model

nlp = spacy.load("en_core_web_sm")

def extract_resume_details(text):

doc = nlp(text)

details = {"Person": [], "Degree": [], "Skills": []}

for ent in doc.ents:

if ent.label_ == "PERSON":

details["Person"].append(ent.text)

elif ent.label_ == "WORK_OF_ART": # Treating degrees as a work of art in this example

details["Degree"].append(ent.text)

elif ent.label_ == "PRODUCT": # Using PRODUCT as a proxy for skills

details["Skills"].append(ent.text)

return details

# Test

text = "John Doe has a Master's degree in Computer Science and is skilled in Python and Machine Learning."

print(extract_resume_details(text))

Extract candidate skills and match them to required skills from job descriptions to calculate a compatibility score.

def calculate_match_score(candidate_skills, job_requirements):

candidate_set = set(candidate_skills)

job_set = set(job_requirements)

match_score = len(candidate_set.intersection(job_set)) / len(job_set) * 100

return match_score

# Example

candidate_skills = ["Python", "Machine Learning", "Data Analysis"]

job_requirements = ["Python", "Data Analysis", "SQL"]

score = calculate_match_score(candidate_skills, job_requirements)

print(f"Match Score: {score:.2f}%")

- Customer Feedback Analysis

def extract_brands_from_feedback(text):

doc = nlp(text)

brands = [ent.text for ent in doc.ents if ent.label_ in ["ORG", "PRODUCT"]]

return brands

# Test

feedback = "I love my new iPhone 13 Pro! Apple has done a fantastic job."

print(extract_brands_from_feedback(feedback))

import matplotlib.pyplot as plt

# Example feedback sentiment over time

dates = ["2023-12-01", "2023-12-02", "2023-12-03"]

sentiments = [1, -1, 0] # Positive: 1, Negative: -1, Neutral: 0

plt.plot(dates, sentiments, marker="o")

plt.title("Customer Sentiment Over Time")

plt.xlabel("Date")

plt.ylabel("Sentiment")

plt.grid()

plt.show()

- Event Detection in News Articles

def extract_event_details(text):

doc = nlp(text)

events = {"Event": [], "Date": [], "Location": []}

for ent in doc.ents:

if ent.label_ == "EVENT":

events["Event"].append(ent.text)

elif ent.label_ == "DATE":

events["Date"].append(ent.text)

elif ent.label_ == "GPE":

events["Location"].append(ent.text)

return events

# Test

text = "The G20 Summit will be held in New Delhi on September 9, 2023."

print(extract_event_details(text))

def categorize_event(event_text):

categories = {

"Sports": ["match", "tournament", "league"],

"Politics": ["election", "summit", "policy"],

"Business": ["merger", "IPO", "acquisition"]

}

for category, keywords in categories.items():

if any(keyword in event_text.lower() for keyword in keywords):

return category

return "Other"

# Example

event = "The FIFA World Cup final will be held on December 18th."

category = categorize_event(event)

print(f"Event Category: {category}")

- Invoice Processing

def extract_invoice_data(text):

doc = nlp(text)

invoice_data = {"Organization": [], "Amount": [], "Due Date": []}

for ent in doc.ents:

if ent.label_ == "ORG":

invoice_data["Organization"].append(ent.text)

elif ent.label_ == "MONEY":

invoice_data["Amount"].append(ent.text)

elif ent.label_ == "DATE":

invoice_data["Due Date"].append(ent.text)

return invoice_data

# Test

text = "Invoice from ABC Corp, Amount: $2500, Due: January 31, 2024."

print(extract_invoice_data(text))

def verify_invoice(invoice_data, expected_amount, due_date):

discrepancies = []

if invoice_data["Amount"] != expected_amount:

discrepancies.append("Amount mismatch")

if invoice_data["Due Date"] != due_date:

discrepancies.append("Due date mismatch")

return discrepancies

# Example

invoice_data = {"Amount": "$2500", "Due Date": "2024-01-31"}

expected_amount = "$2500"

due_date = "2024-01-31"

discrepancies = verify_invoice(invoice_data, expected_amount, due_date)

print("Discrepancies:", discrepancies)

- Chatbot Personalization

def extract_user_details(text):

doc = nlp(text)

user_details = {"Name": [], "Location": []}

for ent in doc.ents:

if ent.label_ == "PERSON":

user_details["Name"].append(ent.text)

elif ent.label_ == "GPE":

user_details["Location"].append(ent.text)

return user_details

# Test

text = "Hi, my name is Alice, and I'm planning a trip to Paris."

print(extract_user_details(text))

def recommend_travel_destinations(user_location):

recommendations = {

"Paris": ["Eiffel Tower", "Louvre Museum"],

"New York": ["Statue of Liberty", "Central Park"],

"Tokyo": ["Shibuya Crossing", "Tokyo Tower"]

}

return recommendations.get(user_location, ["Explore your local area!"])

# Example

user_location = "Paris"

print(recommend_travel_destinations(user_location))

- E-commerce Search Optimization

def extract_product_queries(text):

doc = nlp(text)

queries = {"Brand": [], "Product": [], "Price": []}

for ent in doc.ents:

if ent.label_ == "ORG":

queries["Brand"].append(ent.text)

elif ent.label_ == "PRODUCT":

queries["Product"].append(ent.text)

elif ent.label_ == "MONEY":

queries["Price"].append(ent.text)

return queries

# Test

text = "Show me Nike running shoes under $100."

print(extract_product_queries(text))

def filter_products(products, brand=None, max_price=None):

filtered = [

product for product in products

if (brand is None or product["brand"] == brand) and

(max_price is None or product["price"] <= max_price)

]

return filtered

# Example

products = [

{"name": "Nike Shoes", "brand": "Nike", "price": 100},

{"name": "Adidas Shoes", "brand": "Adidas", "price": 80}

]

filtered = filter_products(products, brand="Nike", max_price=90)

print(filtered)

- Legal Document Analysis

def extract_legal_entities(text):

doc = nlp(text)

legal_entities = {"Case": [], "Date": [], "Organizations": []}

for ent in doc.ents:

if ent.label_ == "WORK_OF_ART":

legal_entities["Case"].append(ent.text)

elif ent.label_ == "DATE":

legal_entities["Date"].append(ent.text)

elif ent.label_ == "ORG":

legal_entities["Organizations"].append(ent.text)

return legal_entities

# Test

text = "In Roe v. Wade (1973), the Supreme Court ruled on reproductive rights."

print(extract_legal_entities(text))

def compare_case_outcomes(case_1, case_2):

return case_1["Outcome"] == case_2["Outcome"]

# Example

case_1 = {"Case": "Roe v. Wade", "Outcome": "Legalized abortion"}

case_2 = {"Case": "Planned Parenthood v. Casey", "Outcome": "Legalized abortion"}

print("Consistent Outcome:", compare_case_outcomes(case_1, case_2))

- Travel Booking Systems

def extract_travel_details(text):

doc = nlp(text)

travel_details = {"Name": [], "Destination": [], "Date": []}

for ent in doc.ents:

if ent.label_ == "PERSON":

travel_details["Name"].append(ent.text)

elif ent.label_ == "GPE":

travel_details["Destination"].append(ent.text)

elif ent.label_ == "DATE":

travel_details["Date"].append(ent.text)

return travel_details

# Test

text = "Book a flight for John Smith to Tokyo on March 15."

print(extract_travel_details(text))

def generate_itinerary(name, destination, date):

itinerary = f"""

Itinerary for {name}:

Destination: {destination}

Travel Date: {date}

Activities: Explore local attractions, enjoy local cuisine.

"""

return itinerary

# Example

print(generate_itinerary("John Smith", "Tokyo", "2024-03-15"))

- Healthcare Data Extraction

def extract_healthcare_data(text):

doc = nlp(text)

healthcare_data = {"Patient": [], "Medication": [], "Diagnosis": []}

for ent in doc.ents:

if ent.label_ == "PERSON":

healthcare_data["Patient"].append(ent.text)

elif ent.label_ == "PRODUCT":

healthcare_data["Medication"].append(ent.text)

elif ent.label_ == "DIAGNOSIS":

healthcare_data["Diagnosis"].append(ent.text)

return healthcare_data

# Test

text = "Patient Jane Doe was prescribed 500mg of Amoxicillin for pneumonia."

print(extract_healthcare_data(text))

def validate_dosage(medication, dosage, guidelines):

if guidelines.get(medication) == dosage:

return "Dosage is correct"

return "Dosage discrepancy"

# Example

guidelines = {"Amoxicillin": "500mg"}

print(validate_dosage("Amoxicillin", "500mg", guidelines))

- Contract Analysis

def extract_contract_details(text):

doc = nlp(text)

contract_details = {"Parties": [], "Date": [], "Monetary Terms": []}

for ent in doc.ents:

if ent.label_ == "ORG":

contract_details["Parties"].append(ent.text)

elif ent.label_ == "DATE":

contract_details["Date"].append(ent.text)

elif ent.label_ == "MONEY":

contract_details["Monetary Terms"].append(ent.text)

return contract_details

# Test

text = "This agreement is made between ABC Inc. and XYZ Ltd. on January 1, 2024, for $1,000,000."

print(extract_contract_details(text))

def extract_clauses(contract_text, clause_keywords):

clauses = {}

for keyword in clause_keywords:

if keyword in contract_text:

clauses[keyword] = contract_text.split(keyword, 1)[1].split(".", 1)[0]

return clauses

# Example

contract = "This agreement includes a Non-Disclosure Clause: The parties agree to keep information confidential."

clause_keywords = ["Non-Disclosure Clause"]

print(extract_clauses(contract, clause_keywords))

Review Analysis

Product Review Analysis for Electronics

Input:

"I recently bought a Samsung Galaxy S23 Ultra from Best Buy, and I must say the camera quality is phenomenal. However, the battery drains faster than expected, especially when using apps like Instagram or WhatsApp. I hope Samsung addresses this in their next update."

def analyze_electronics_feedback(text):

doc = nlp(text)

feedback = {"Product": [], "Brand": [], "Organization": [], "Apps": []}

for ent in doc.ents:

if ent.label_ == "PRODUCT":

feedback["Product"].append(ent.text)

elif ent.label_ == "ORG":

feedback["Brand"].append(ent.text)

elif ent.label_ == "FAC":

feedback["Organization"].append(ent.text)

elif ent.label_ == "WORK_OF_ART":

feedback["Apps"].append(ent.text)

return feedback

# Test

text = "I recently bought a Samsung Galaxy S23 Ultra from Best Buy, and I must say the camera quality is phenomenal. However, the battery drains faster than expected, especially when using apps like Instagram or WhatsApp. I hope Samsung addresses this in their next update."

print(analyze_electronics_feedback(text))

Output:

{

"Product": ["Samsung Galaxy S23 Ultra"],

"Brand": ["Samsung"],

"Organization": ["Best Buy"],

"Apps": ["Instagram", "WhatsApp"]

}

-

Food Delivery Service Feedback

Input: "I ordered a Margherita Pizza from Domino's, and it was delivered within 30 minutes as promised. The pizza was hot and delicious, but the delivery executive forgot to include the extra chili flakes and oregano I requested. I wish Domino's could improve on their attention to detail."

Code:

def analyze_food_delivery_feedback(text):

doc = nlp(text)

feedback = {"Product": [], "Brand": [], "Time": [], "Issues": []}

for ent in doc.ents:

if ent.label_ == "PRODUCT":

feedback["Product"].append(ent.text)

elif ent.label_ == "ORG":

feedback["Brand"].append(ent.text)

elif ent.label_ == "TIME":

feedback["Time"].append(ent.text)

elif ent.label_ == "NORP":

feedback["Issues"].append("Extra chili flakes and oregano not included")

return feedback

# Test

text = "I ordered a Margherita Pizza from Domino's, and it was delivered within 30 minutes as promised. The pizza was hot and delicious, but the delivery executive forgot to include the extra chili flakes and oregano I requested. I wish Domino's could improve on their attention to detail."

print(analyze_food_delivery_feedback(text))

Output:

{

"Product": ["Margherita Pizza"],

"Brand": ["Domino's"],

"Time": ["30 minutes"],

"Issues": ["Extra chili flakes and oregano not included"]

}

-

Travel and Hotel Stay Feedback

Input: "My stay at the Taj Mahal Palace in Mumbai was amazing. The view of the Arabian Sea from the room was breathtaking. However, the room service took almost 45 minutes to deliver a simple breakfast, which was disappointing. Also, the Wi-Fi connectivity in the lobby was poor."

Code:

def analyze_hotel_feedback(text):

doc = nlp(text)

feedback = {"Hotel": [], "Location": [], "Issues": [], "Time": []}

for ent in doc.ents:

if ent.label_ == "ORG":

feedback["Hotel"].append(ent.text)

elif ent.label_ == "GPE":

feedback["Location"].append(ent.text)

elif ent.label_ == "TIME":

feedback["Time"].append(ent.text)

if "Wi-Fi" in text:

feedback["Issues"].append("Wi-Fi connectivity in the lobby was poor")

return feedback

# Test

text = "My stay at the Taj Mahal Palace in Mumbai was amazing. The view of the Arabian Sea from the room was breathtaking. However, the room service took almost 45 minutes to deliver a simple breakfast, which was disappointing. Also, the Wi-Fi connectivity in the lobby was poor."

print(analyze_hotel_feedback(text))

Output:

{

"Hotel": ["Taj Mahal Palace"],

"Location": ["Mumbai"],

"Issues": ["Wi-Fi connectivity in the lobby was poor"],

"Time": ["45 minutes"]

}

-

Online Shopping Experience Feedback

Input: "I ordered a Dyson V15 Vacuum Cleaner from Amazon during the Black Friday sale. The product arrived two days late, and the box was slightly damaged. However, the product itself works perfectly, and I’m happy with its performance."

def analyze_shopping_feedback(text):

doc = nlp(text)

feedback = {"Product": [], "Brand": [], "Retailer": [], "Issues": []}

for ent in doc.ents:

if ent.label_ == "PRODUCT":

feedback["Product"].append(ent.text)

elif ent.label_ == "ORG":

feedback["Brand"].append(ent.text)

elif ent.label_ == "GPE":

feedback["Retailer"].append(ent.text)

if "late" in text or "damaged" in text:

feedback["Issues"].append("Late delivery and damaged box")

return feedback

# Test

text = "I ordered a Dyson V15 Vacuum Cleaner from Amazon during the Black Friday sale. The product arrived two days late, and the box was slightly damaged. However, the product itself works perfectly, and I’m happy with its performance."

print(analyze_shopping_feedback(text))

Output:

{

"Product": ["Dyson V15 Vacuum Cleaner"],

"Brand": ["Dyson"],

"Retailer": ["Amazon"],

"Issues": ["Late delivery and damaged box"]

}

-

Public Transport Feedback

Input: "The Delhi Metro is a convenient mode of transport, but during peak hours, the Blue Line gets overcrowded. Yesterday, it took me an extra 20 minutes to board a train at Rajiv Chowk because of the crowd. Other than that, the services are punctual."

Code:

def analyze_transport_feedback(text):

doc = nlp(text)

feedback = {"Service": [], "Location": [], "Issues": [], "Time": []}

for ent in doc.ents:

if ent.label_ == "ORG":

feedback["Service"].append(ent.text)

elif ent.label_ == "GPE":

feedback["Location"].append(ent.text)

elif ent.label_ == "TIME":

feedback["Time"].append(ent.text)

if "overcrowded" in text:

feedback["Issues"].append("Overcrowding during peak hours")

return feedback

# Test

text = "The Delhi Metro is a convenient mode of transport, but during peak hours, the Blue Line gets overcrowded. Yesterday, it took me an extra 20 minutes to board a train at Rajiv Chowk because of the crowd. Other than that, the services are punctual."

print(analyze_transport_feedback(text))

Output:

{

"Service": ["Delhi Metro"],

"Location": ["Rajiv Chowk"],

"Issues": ["Overcrowding during peak hours"],

"Time": ["20 minutes"]

}

These examples demonstrate how to extract valuable feedback details using NER for various domains. Let me know if you need further customizations or additions!

Scenario: Feedback Prioritization and Sentiment-Based Categorization

Objective:

Categorize feedback by sentiment (Positive, Negative, Neutral).

Prioritize issues based on frequency or severity.

Suggest actionable insights for improvement.

Code: Sentiment Analysis & Prioritization

Below is Python code that builds on the feedback analysis and incorporates sentiment analysis using TextBlob or a pre-trained sentiment model:

import spacy

from textblob import TextBlob

from collections import Counter

# Load SpaCy model

nlp = spacy.load("en_core_web_sm")

def analyze_feedback(text):

# Extract entities

doc = nlp(text)

feedback = {"Product": [], "Brand": [], "Issues": [], "Sentiment": None}

for ent in doc.ents:

if ent.label_ == "PRODUCT":

feedback["Product"].append(ent.text)

elif ent.label_ == "ORG":

feedback["Brand"].append(ent.text)

if "delay" in text or "damaged" in text or "overcrowded" in text:

feedback["Issues"].append(ent.text)

# Perform sentiment analysis

sentiment = TextBlob(text).sentiment.polarity

if sentiment > 0.1:

feedback["Sentiment"] = "Positive"

elif sentiment < -0.1:

feedback["Sentiment"] = "Negative"

else:

feedback["Sentiment"] = "Neutral"

return feedback

def prioritize_feedback(feedback_list):

# Count issues for prioritization

all_issues = []

for feedback in feedback_list:

all_issues.extend(feedback["Issues"])

issue_counts = Counter(all_issues)

return issue_counts.most_common()

# Example feedback list

feedback_texts = [

"I ordered a Dyson V15 Vacuum Cleaner from Amazon during the Black Friday sale. The product arrived two days late, and the box was slightly damaged. However, the product itself works perfectly, and I’m happy with its performance.",

"The Delhi Metro is a convenient mode of transport, but during peak hours, the Blue Line gets overcrowded. Yesterday, it took me an extra 20 minutes to board a train at Rajiv Chowk because of the crowd.",

"The Samsung Galaxy S23 Ultra has an amazing camera, but the battery life needs improvement. The updates take a lot of time to install."

]

# Process feedback

feedback_list = [analyze_feedback(text) for text in feedback_texts]

# Prioritize issues

priority_issues = prioritize_feedback(feedback_list)

# Output

print("Processed Feedback:")

for feedback in feedback_list:

print(feedback)

print("\nPriority Issues:")

for issue, count in priority_issues:

print(f"{issue}: {count} occurrences")

Processed Feedback:

[

{

"Product": ["Dyson V15 Vacuum Cleaner"],

"Brand": ["Amazon"],

"Issues": ["two days late", "box was slightly damaged"],

"Sentiment": "Neutral"

},

{

"Product": [],

"Brand": ["Delhi Metro"],

"Issues": ["overcrowded"],

"Sentiment": "Negative"

},

{

"Product": ["Samsung Galaxy S23 Ultra"],

"Brand": ["Samsung"],

"Issues": ["battery life needs improvement"],

"Sentiment": "Neutral"

}

]

Priority Issues:

overcrowded: 1 occurrences

two days late: 1 occurrences

box was slightly damaged: 1 occurrences

battery life needs improvement: 1 occurrences

Top comments (0)