What is a neural network

Steps to build a neural network using Keras

What are the model phases when building a neural network

Coding example to create a neural network

How to perform model evaluation

How to make predictions with the model

## What is a neural network

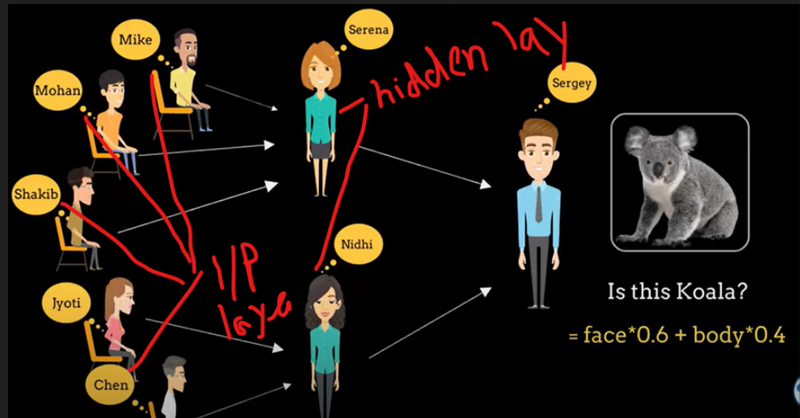

The image represents the structure of a neural network and how it works, specifically a feedforward neural network model for identifying a Koala. Let's break down how the neural network functions using the screenshot:

- Input Layer (People as Neurons) The people in the image (Mike, Mohan, Shakib, etc.) represent neurons in the input layer. These neurons receive some form of input, like features of the Koala image such as its face or body. The input in this case is an image, and the task is to identify whether the image represents a Koala or not.

- Hidden Layer (Serena, Nidhi, Sergey) The individuals labeled Serena, Nidhi, and Sergey represent the hidden layer neurons. Each of these neurons processes the input from the previous layer (input layer) and tries to extract meaningful features. For instance, one neuron might focus on the face, another on the body, or other specific features of the Koala.

- Weighting the Inputs (face * 0.6 + body * 0.4) The formula shown on the right, `face * 0.6 + body * 0.4`, represents how the inputs (features) are weighted. In this case, the face of the Koala is given more importance (60%) than the body (40%) when making a prediction. The weights 0.6 and 0.4 are learned by the neural network during training to make accurate predictions.

- Output Layer (Sergey) Sergey represents the output layer neuron, whose task is to make the final decision or prediction: "Is this a Koala?". The output is based on the weighted sum of the inputs from the hidden layer. If the output passes a certain threshold, the model will classify the image as a Koala.
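The weighting and threshold steps described above can be sketched in a few lines of plain Python. This is only an illustration: the feature scores and the 0.5 threshold are made-up assumptions, and only the weights 0.6 and 0.4 come from the formula in the image.

```python
# Weights taken from the formula in the text: face * 0.6 + body * 0.4.
FACE_WEIGHT = 0.6
BODY_WEIGHT = 0.4


def weighted_sum(face, body):
    """Combine the two feature scores using the weights above."""
    return face * FACE_WEIGHT + body * BODY_WEIGHT


def is_koala(face, body, threshold=0.5):
    """Return True when the weighted sum reaches the (assumed) threshold."""
    return weighted_sum(face, body) >= threshold


# Hypothetical feature scores in [0, 1]: how koala-like each feature looks.
face_score = 0.9
body_score = 0.5

score = weighted_sum(face_score, body_score)
print(round(score, 2), is_koala(face_score, body_score))
```

A strong face score outweighs a mediocre body score here because the face carries the larger weight.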

Neural Network Processing

- Feedforward: The input data (features like face and body of the Koala) moves from the input layer, through the hidden layer, to the output layer. Each hidden layer neuron combines inputs, applies weights, and passes the result to the output neuron.

- Activation Function: In a real neural network, the output neuron might apply an activation function (e.g., sigmoid or ReLU) to decide the final result (yes or no for Koala recognition).

- Conclusion: This neural network model processes the features (face and body) of an image, assigns importance through weights, and makes a final decision on whether the image is of a Koala. The hidden layers extract useful information, and the output layer combines it to make an accurate prediction.

## Steps to build a neural network using Keras

Keras, a high-level neural networks API, provides a simple and intuitive way to build, train, and deploy deep learning models. Let's break down how the Keras Sequential model, often used for building simple feedforward neural networks, solves a deep learning task step by step:

Import Libraries:

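The first step is to import the necessary libraries. In current TensorFlow releases Keras ships as `tensorflow.keras`, so importing from TensorFlow is enough (older standalone Keras releases could also run on Theano or CNTK backends). This is typically done at the beginning of the script or notebook.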

from tensorflow import keras

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

Initialize Model:

Next, we initialize a Sequential model, which allows us to build a linear stack of layers.

model = Sequential()

Add Layers:

We add layers to the model one by one. Each layer represents a different computation, such as a fully connected layer, a convolutional layer, or a recurrent layer.

model.add(Dense(units=64, activation='relu', input_shape=(input_dim,)))

model.add(Dense(units=32, activation='relu'))

model.add(Dense(units=output_dim, activation='softmax'))

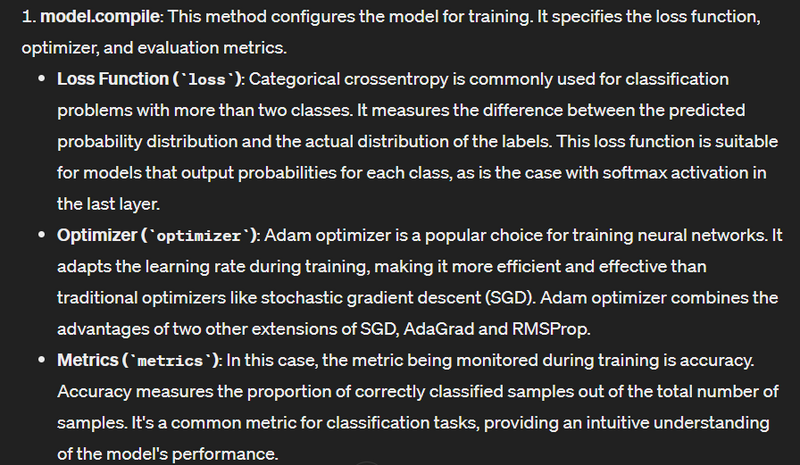

Configure Model:

After adding layers, we configure the model by specifying the loss function, optimizer, and evaluation metrics using the compile method.

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
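To make the loss setting less abstract, here is a minimal pure-Python sketch of what categorical cross-entropy measures for a single sample with a one-hot label. It illustrates the formula only and is not Keras's implementation; the function name, the `eps` clipping value, and the example probabilities are assumptions.

```python
import math


def categorical_crossentropy(y_true_one_hot, y_pred_probs, eps=1e-7):
    """Cross-entropy for one sample: -sum(t * log(p)) over the classes."""
    return -sum(
        t * math.log(max(p, eps))  # clip p so log(0) can never occur
        for t, p in zip(y_true_one_hot, y_pred_probs)
    )


y_true = [0, 1, 0]                 # the true class is index 1
confident = [0.05, 0.90, 0.05]     # made-up prediction, mostly right
unsure = [0.40, 0.30, 0.30]        # made-up prediction, mostly wrong

loss_confident = categorical_crossentropy(y_true, confident)
loss_unsure = categorical_crossentropy(y_true, unsure)
print(loss_confident < loss_unsure)
```

The loss shrinks as the predicted probability of the true class approaches 1, which is the quantity the optimizer tries to reduce.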

Model Summary:

We can print a summary of the model's architecture, including the number of parameters in each layer and the total number of trainable parameters.

model.summary()

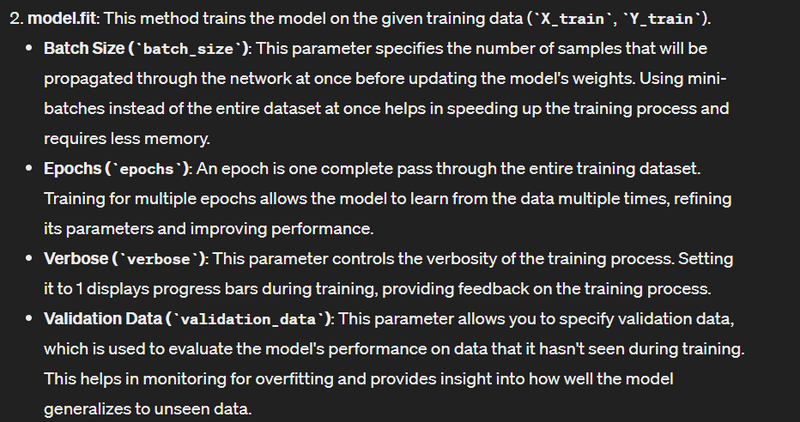
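The parameter counts in a summary like this can be checked by hand: a Dense layer has one weight per input per unit, plus one bias per unit. The sketch below assumes hypothetical values `input_dim = 20` and `output_dim = 3`, since the text does not fix them, so the totals will differ from the screenshot.

```python
def dense_param_count(n_inputs, units):
    """Weights (n_inputs * units) plus one bias per unit."""
    return (n_inputs + 1) * units


input_dim = 20    # assumed for illustration
output_dim = 3    # assumed for illustration
layer_units = [64, 32, output_dim]  # the three Dense layers from the text

counts = []
n_in = input_dim
for units in layer_units:
    counts.append(dense_param_count(n_in, units))
    n_in = units  # this layer's outputs feed the next layer

total_params = sum(counts)
print(counts, total_params)
```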

Train Model:

We train the model on training data using the fit method. This involves providing the input features (X_train) and corresponding labels (Y_train), as well as specifying the batch size, number of epochs, and validation data.

model.fit(X_train, Y_train, batch_size=32, epochs=10, validation_data=(X_val, Y_val))
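The relationship between `batch_size` and `epochs` can be sketched without Keras. The example below assumes a hypothetical training set of 1000 samples and counts how many weight updates the settings above would produce; the helper names are made up for illustration.

```python
import math


def steps_per_epoch(n_samples, batch_size):
    """Number of batches (weight updates) in one pass over the data."""
    return math.ceil(n_samples / batch_size)


def iterate_batches(samples, batch_size):
    """Yield consecutive slices of at most batch_size samples."""
    for start in range(0, len(samples), batch_size):
        yield samples[start:start + batch_size]


n_samples = 1000  # assumed dataset size
batch_size = 32
epochs = 10

steps = steps_per_epoch(n_samples, batch_size)
total_updates = steps * epochs
batch_sizes = [len(b) for b in iterate_batches(list(range(n_samples)), batch_size)]
print(steps, total_updates, batch_sizes[-1])
```

The last batch is smaller than the rest whenever the dataset size is not a multiple of the batch size.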

Evaluate Model:

After training, we can evaluate the model's performance on the test data using the evaluate method. This provides metrics such as loss and accuracy.

loss, accuracy = model.evaluate(X_test, Y_test)

print(f'Test Loss: {loss}, Test Accuracy: {accuracy}')
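The accuracy figure reported by evaluation is simply the fraction of samples whose predicted class matches the true class. A minimal sketch, using made-up labels and predictions:

```python
def accuracy(y_true, y_pred):
    """Fraction of positions where the prediction equals the label."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


y_true = [0, 2, 1, 1, 0]  # hypothetical true classes
y_pred = [0, 2, 1, 0, 0]  # hypothetical predicted classes (one mistake)

acc = accuracy(y_true, y_pred)
print(acc)  # 0.8
```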

Make Predictions:

Finally, we can use the trained model to make predictions on new data using the predict method.

predictions = model.predict(X_new_data)

That's a basic overview of how the Keras Sequential model solves a deep learning task step by step. It provides a simple and intuitive interface for building and training deep learning models, making it accessible to both beginners and experienced practitioners.

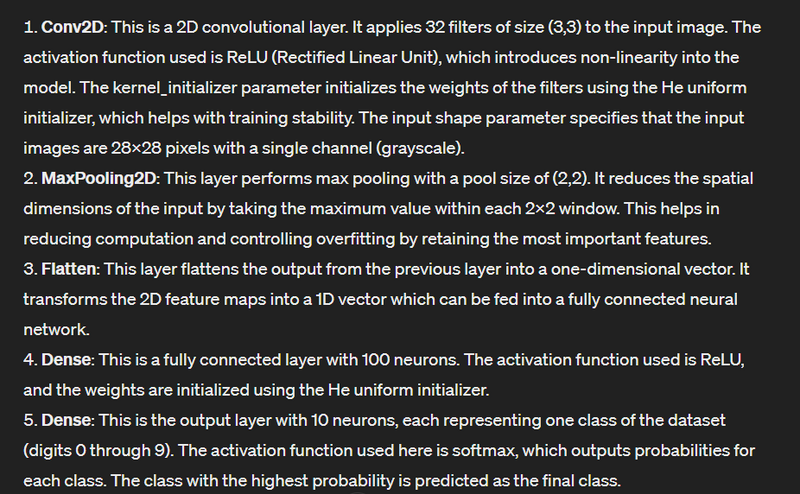

## What are the model phases when building a neural network

The activation function is a crucial component of each layer. It is specified in the model construction phase and applied during the forward pass, which runs whenever data flows through the network: during training, evaluation, and prediction.

Model Construction Phase:

During the model construction phase, when you add layers to the Sequential model, you specify the activation function for each layer using the activation parameter.

model.add(Dense(units=64, activation='relu', input_shape=(input_dim,)))

model.add(Dense(units=32, activation='relu'))

model.add(Dense(units=output_dim, activation='softmax'))

Here, 'relu' (Rectified Linear Unit) and 'softmax' are activation functions. These activations are defined and set during the construction of the model. They determine how the output of each layer is calculated during the forward pass.
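For intuition, the two activations named above can be written in plain Python. This is a sketch of the math rather than the Keras implementation, and the input values are arbitrary.

```python
import math


def relu(x):
    """Rectified Linear Unit: negative inputs become 0."""
    return max(0.0, x)


def softmax(values):
    """Turn raw scores into positive numbers that sum to 1."""
    shift = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


hidden = [relu(v) for v in [-1.5, 0.0, 2.0]]
probs = softmax([2.0, 1.0, 0.1])
print(hidden)                # [0.0, 0.0, 2.0]
print(round(sum(probs), 6))  # 1.0
```

ReLU is typically used in hidden layers, while softmax suits an output layer that must choose one class among several.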

Training Phase:

During the training phase, you call the compile method to configure the model and the fit method to train it. The compile call only records the loss, optimizer, and metrics; the forward propagation that uses the specified activation functions happens inside fit.

During forward propagation, the input data passes through each layer of the model, and the activations defined in each layer are applied to the input data to produce the output of the layer.

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

model.fit(X_train, Y_train, batch_size=32, epochs=10, validation_data=(X_val, Y_val))

The activation functions are chosen in the model construction phase (when the layers are defined) and applied in the training phase (during forward propagation). They introduce non-linearity into the model, allowing it to learn complex patterns in the data.
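Why non-linearity matters can be shown with a one-input toy example. Without an activation, two stacked layers collapse into a single linear layer; with ReLU in between, they do not. The weights and biases below are arbitrary values chosen for illustration.

```python
def relu(x):
    return max(0.0, x)


# Arbitrary weights and biases for two single-neuron layers.
w1, b1 = 2.0, 1.0
w2, b2 = -3.0, 0.5


def two_linear_layers(x):
    """Layer 2 applied to layer 1, with no activation in between."""
    return w2 * (w1 * x + b1) + b2


def collapsed_single_layer(x):
    """One layer with combined weight and bias gives the same result."""
    return (w2 * w1) * x + (w2 * b1 + b2)


def with_relu(x):
    """Same two layers, but with ReLU after the first one."""
    return w2 * relu(w1 * x + b1) + b2


xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
same = all(abs(two_linear_layers(x) - collapsed_single_layer(x)) < 1e-12 for x in xs)
print(same)

# A straight-line function satisfies f(-1) + f(1) == 2 * f(0); the ReLU version does not.
print(with_relu(-1.0) + with_relu(1.0), 2 * with_relu(0.0))
```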

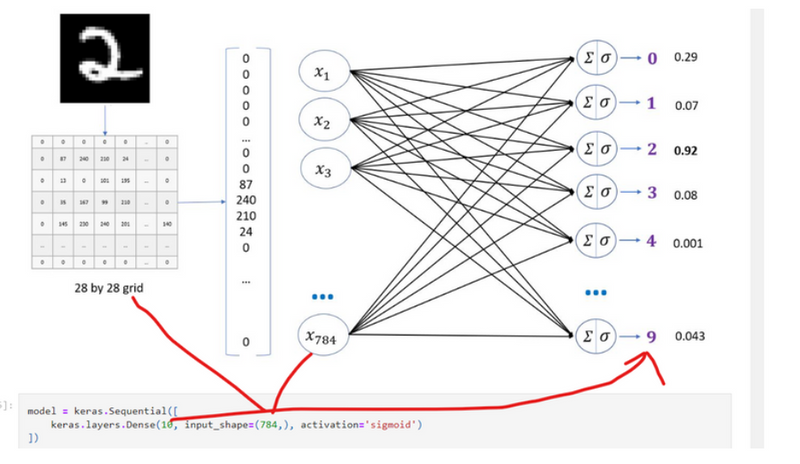

## Coding example to create a neural network

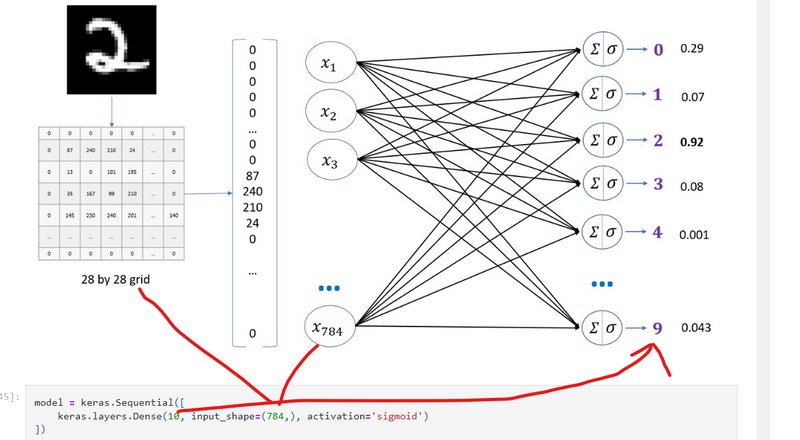

model = keras.Sequential([

keras.layers.Dense(10, input_shape=(784,), activation='sigmoid')

])

model.compile(optimizer='adam',

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])

model.fit(X_train_flattened, y_train, epochs=5)

- Model Definition:

model = keras.Sequential([

keras.layers.Dense(10, input_shape=(784,), activation='sigmoid')

])

keras.Sequential: This is the simplest way to build a neural network in Keras, where layers are stacked sequentially. In this case, there's only one layer, but more could be added.

keras.layers.Dense(10, ...): This is a Dense layer, also known as a fully connected layer.

10: The number of neurons in the output layer, which means the network will have 10 output units (this is often used for classifying 10 classes, such as in the MNIST digit classification task, where the digits range from 0 to 9).

input_shape=(784,): The shape of the input data. This tells the network that each input sample is a vector of size 784. This corresponds to flattened 28x28 pixel images (28 * 28 = 784), commonly used in image datasets like MNIST.

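activation='sigmoid': The activation function applied to each neuron in this layer. The sigmoid activation squashes each output independently into the range 0 to 1, so the 10 outputs are per-class scores that need not sum to 1. For single-label multi-class problems such as digit classification, softmax is the more common choice because it produces a probability distribution over the classes.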
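The two ideas above, flattening a 28x28 image into 784 values and squashing scores with sigmoid, can be sketched in plain Python. The pixel values here are placeholders rather than real MNIST data, and the helper names are made up for illustration.

```python
import math


def flatten(image):
    """Turn a 2D grid of pixels (a list of rows) into one flat list."""
    return [pixel for row in image for pixel in row]


def sigmoid(x):
    """Squash any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


# Made-up 28x28 "image" filled with placeholder pixel values.
image = [[(row * 28 + col) % 256 for col in range(28)] for row in range(28)]
flat = flatten(image)
print(len(flat))  # 784

# Arbitrary raw scores passed through sigmoid.
scores = [sigmoid(z) for z in (-4.0, 0.0, 4.0)]
print(scores[1])  # 0.5
```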

- Model Compilation:

model.compile(optimizer='adam',

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])

This step configures the model for training by specifying how it should learn from the data:

optimizer='adam': Adam is a popular optimization algorithm that adjusts the learning rate during training to improve performance. It's an efficient, adaptive method for stochastic gradient descent.

loss='sparse_categorical_crossentropy': The loss function measures how far the model's predictions are from the true labels.

sparse_categorical_crossentropy is used for multi-class classification where the labels are provided as integers (not one-hot encoded). It calculates the loss for each output based on how close the predicted class probabilities are to the true class.

metrics=['accuracy']: This specifies what metrics to track during training and evaluation. In this case, accuracy is tracked, which is the proportion of correctly predicted classes.
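The "sparse" in the loss name refers only to the label format. The sketch below, which is an illustration and not Keras's code, shows that an integer label and its one-hot version lead to the same loss value; the probabilities and function names are assumptions.

```python
import math


def sparse_categorical_crossentropy(label, probs, eps=1e-7):
    """Loss for an integer label: -log of the true class probability."""
    return -math.log(max(probs[label], eps))


def one_hot(label, n_classes):
    return [1 if i == label else 0 for i in range(n_classes)]


def categorical_crossentropy(y_one_hot, probs, eps=1e-7):
    """Loss for a one-hot label: -sum(t * log(p))."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_one_hot, probs))


probs = [0.1, 0.7, 0.2]  # made-up predicted class probabilities
label = 1                # integer label, as in y_train

sparse_loss = sparse_categorical_crossentropy(label, probs)
dense_loss = categorical_crossentropy(one_hot(label, 3), probs)
print(abs(sparse_loss - dense_loss) < 1e-12)
```

Integer labels avoid building a one-hot vector for every sample, which is convenient when labels are already stored as digits.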

- Model Training:

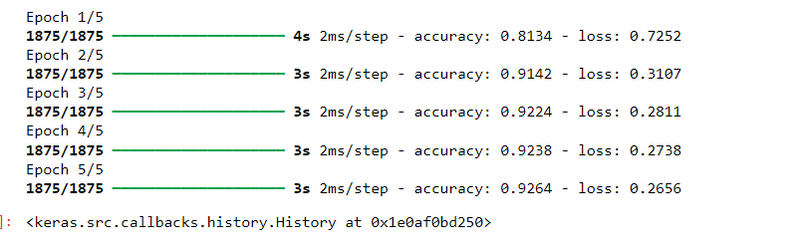

model.fit(X_train_flattened, y_train, epochs=5)

This command trains the model on the provided training data (X_train_flattened and y_train):

X_train_flattened: The input data. Each sample in this dataset is a 1D vector of 784 features (e.g., the flattened pixels from a 28x28 grayscale image).

y_train: The labels associated with the input data. For a dataset like MNIST, these labels would be the digits (0 through 9).

epochs=5: The number of times to iterate over the entire dataset during training. In this case, the model will pass through the dataset 5 times, updating its weights after every batch (32 samples by default) within each pass.

Overall Workflow:

Model Definition: The model is a simple neural network with one fully connected layer (Dense) of 10 output units, each using the sigmoid activation function. The input is expected to be 784-dimensional vectors (flattened images).

Model Compilation: The model is compiled with the Adam optimizer, sparse categorical crossentropy as the loss function, and accuracy as the metric.

Model Training: The model is trained for 5 epochs on the training data (X_train_flattened) and the corresponding labels (y_train).

How it Works:

During training, the model will adjust its weights to minimize the sparse categorical crossentropy loss. After 5 epochs, the model will have learned to map input images to one of 10 possible output classes (digits).

The accuracy metric will be reported for each epoch, giving you an idea of how well the model is performing during training.
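The idea of adjusting weights to reduce the loss can be illustrated with a much smaller stand-in: a single sigmoid neuron trained with plain stochastic gradient descent on a toy binary dataset. This is not the MNIST model and it does not use Adam or sparse categorical crossentropy; the data, learning rate, and names are all assumptions chosen to keep the sketch short.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def mean_loss(w, b, xs, ys):
    """Mean binary cross-entropy of the neuron over the whole dataset."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(w * x + b)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(xs)


# Toy dataset: negative inputs belong to class 0, positive to class 1.
xs = [-2.0, -1.0, 1.0, 2.0]
ys = [0, 0, 1, 1]

w, b = 0.0, 0.0
learning_rate = 0.5
epochs = 5

losses = [mean_loss(w, b, xs, ys)]  # loss before any training
for epoch in range(epochs):
    for x, y in zip(xs, ys):
        error = sigmoid(w * x + b) - y   # gradient of the loss w.r.t. z
        w -= learning_rate * error * x   # step against the gradient
        b -= learning_rate * error
    losses.append(mean_loss(w, b, xs, ys))
    print(f"epoch {epoch + 1}: loss={losses[-1]:.4f}")
```

The printed loss after the last epoch is lower than the starting loss, which mirrors what the per-epoch output of fit reports on a larger scale.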

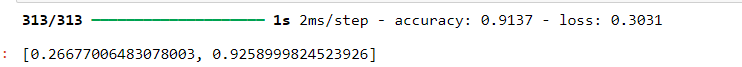

## How to perform model evaluation

model = keras.Sequential([

keras.layers.Dense(10, input_shape=(784,), activation='sigmoid')

])

model.compile(optimizer='adam',

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])

model.fit(X_train_flattened, y_train, epochs=5)

After training, evaluate the model on the held-out test set:

model.evaluate(X_test_flattened, y_test)

The output shown below indicates that the model has a loss of approximately 0.2667 and an accuracy of about 92.6% on the test dataset.

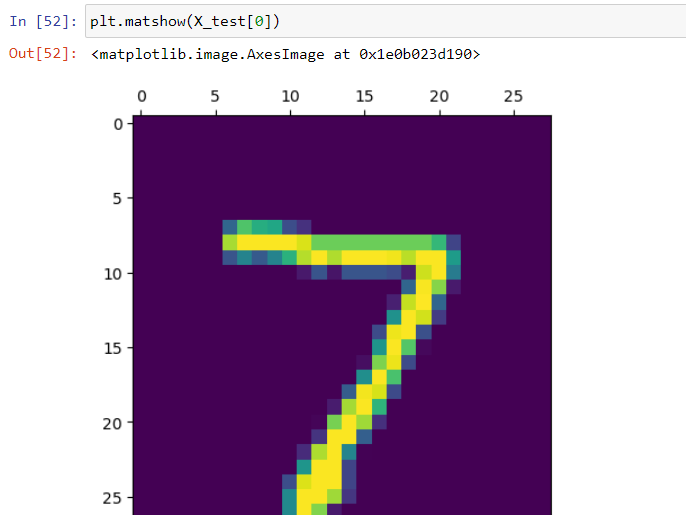

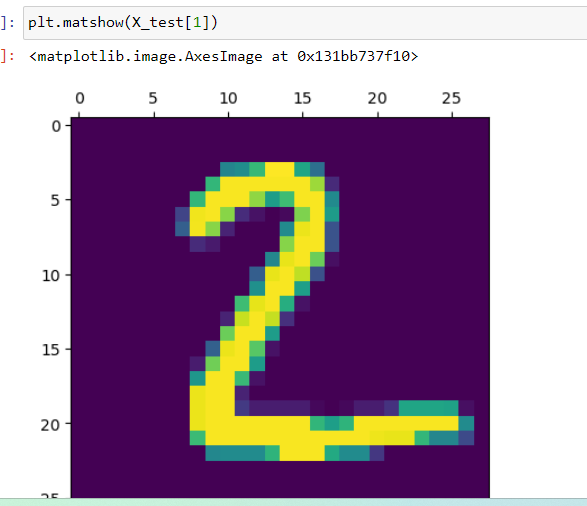

## How to make predictions with the model

model = keras.Sequential([

keras.layers.Dense(10, input_shape=(784,), activation='sigmoid')

])

model.compile(optimizer='adam',

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])

model.fit(X_train_flattened, y_train, epochs=5)

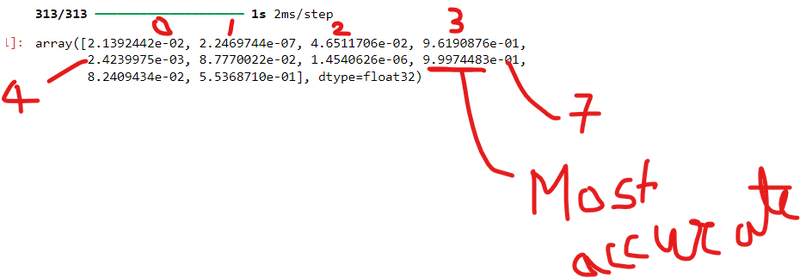

y_predicted = model.predict(X_test_flattened)

y_predicted[0]

Another example:

y_predicted[1]

Each prediction is an array of 10 values, one score per digit class. The output of y_predicted[0] is:

array([2.1392442e-02, 2.2469744e-07, 4.6511706e-02, 9.6190876e-01,
2.4239975e-03, 8.7770022e-02, 1.4540626e-06, 9.9974483e-01,
8.2409434e-02, 5.5368710e-01], dtype=float32)
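To turn such an array of scores into a predicted digit, take the index of the largest value. The sketch below copies the values printed for y_predicted[0] into a plain Python list; with NumPy available, `np.argmax` would do the same job.

```python
# Scores copied from the y_predicted[0] output shown above.
scores = [
    2.1392442e-02, 2.2469744e-07, 4.6511706e-02, 9.6190876e-01,
    2.4239975e-03, 8.7770022e-02, 1.4540626e-06, 9.9974483e-01,
    8.2409434e-02, 5.5368710e-01,
]

# Index of the largest score is the predicted class (digit).
predicted_digit = max(range(len(scores)), key=scores.__getitem__)
print(predicted_digit)  # 7

# Sigmoid outputs are independent per class, so they need not sum to 1.
total = sum(scores)
print(total > 1.0)  # True
```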
