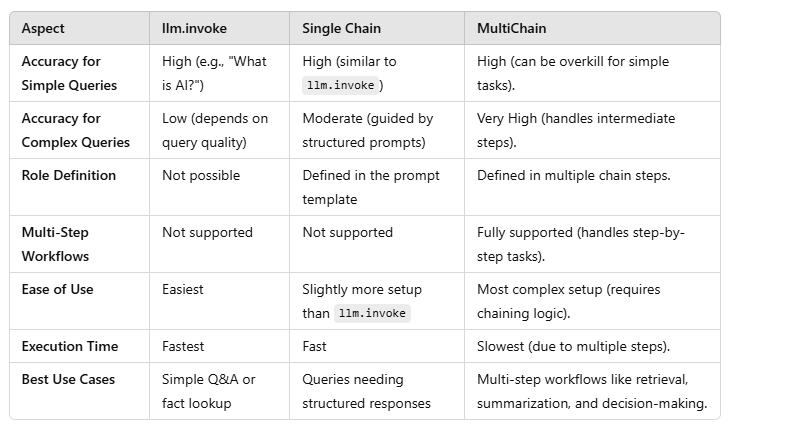

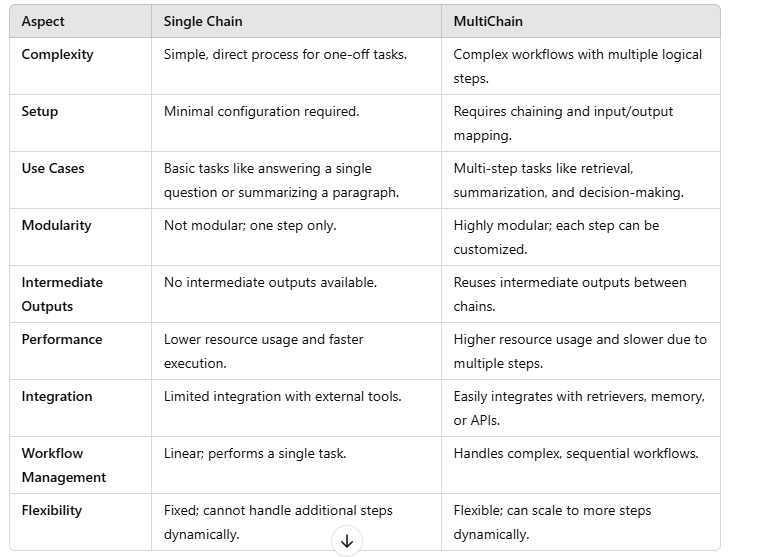

Single Chain

MultiChain

Difference between single chain and multichain

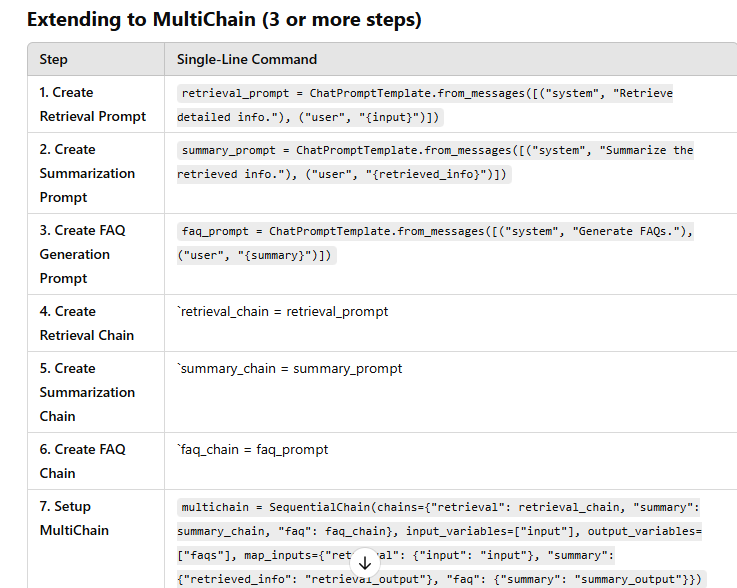

Coding example of Multichain

FAQ Generation from User Query

Recipe Recommendation with Instructions

Sentiment Analysis and Summary

Job Recommendation and Resume Tailoring

Language Translation and Polishing

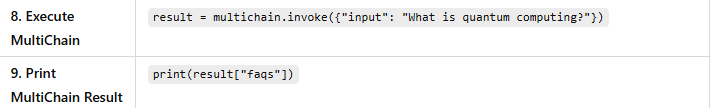

Research Workflow: Retrieval, Summarization, and FAQ Generation

Personal Assistant: Task Suggestion, Prioritization, and Scheduling

Language Learning: Translation, Grammar Explanation, and Sentence Practice

Movie Recommendation: Suggestion, Sentiment Analysis, and Review

Business Pitch: Idea Generation, Problem-Solution Match, and Presentation Draft

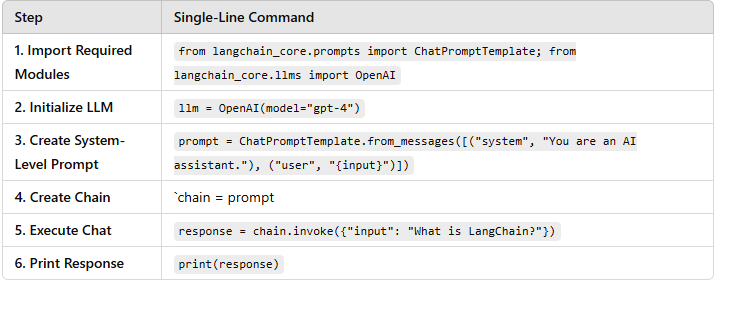

Single Chain

What is a Single Chain?

A single chain is a simple workflow where a single LLM or prompt template is used to process input and produce output. There’s no interconnection between multiple processing steps.
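As a rough illustration of that shape, the sketch below fills one prompt template and makes one model call. `fake_llm`, `PROMPT_TEMPLATE`, and `single_chain` are hypothetical names, and `fake_llm` only stands in for a real model client so the snippet runs without an API key.

```python
# Minimal single-chain sketch: one prompt template, one model call.
# fake_llm is a hypothetical stand-in for a real LLM client.

def fake_llm(prompt: str) -> str:
    """Pretend model: wraps the prompt so the data flow stays visible."""
    return f"[model answer to: {prompt}]"


PROMPT_TEMPLATE = "Answer briefly: {question}"


def single_chain(question: str) -> str:
    prompt = PROMPT_TEMPLATE.format(question=question)  # fill the template
    return fake_llm(prompt)  # the only model invocation in the workflow


print(single_chain("What is generative AI?"))
```

There is no intermediate state to pass along, which is what keeps this pattern cheap and easy to reason about.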

Advantages of Single Chain

Simplicity:

Easy to set up and execute for straightforward tasks.

Requires minimal configuration.

Low Resource Usage:

Only one model invocation is required, reducing computation time and cost.

Direct Output:

No intermediate steps or dependencies, so results are produced faster.

Best for Basic Use Cases:

Ideal for one-off tasks like answering a single query or generating content from a prompt.

Disadvantages of Single Chain

Limited Functionality:

Cannot handle workflows requiring multiple logical steps.

Lacks flexibility for complex tasks.

No Reusability of Intermediate Results:

Cannot reuse intermediate outputs for additional processing.

Use Case

Answering a simple query like:

result = llm.invoke("What is generative AI?")

print(result)

MultiChain

What is a MultiChain?

A MultiChain combines multiple chains (or steps) into a sequential or interconnected workflow. Each step processes the input or the output from the previous step.
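The idea can be sketched without any framework: each step reads a shared dictionary and writes its result back under a new key. This is only an illustration under stated assumptions; `fake_llm`, `run_steps`, and the key names are hypothetical, and a real workflow would call an actual model in place of `fake_llm`.

```python
# Sketch of a sequential multi-step workflow over a shared state dict.
# fake_llm is a hypothetical stand-in for a real LLM call.
from typing import Callable, Dict, List, Tuple

State = Dict[str, str]
Step = Tuple[str, Callable[[State], str]]  # (output key, function of the state)


def fake_llm(prompt: str) -> str:
    return f"<{prompt}>"


def run_steps(steps: List[Step], state: State) -> State:
    state = dict(state)  # copy so the caller's dict is not modified
    for output_key, step in steps:
        # Each step can read the original input and every earlier output
        state[output_key] = step(state)
    return state


steps: List[Step] = [
    ("retrieved_info", lambda s: fake_llm(f"explain {s['input']}")),
    ("summary", lambda s: fake_llm(f"summarize {s['retrieved_info']}")),
]

result = run_steps(steps, {"input": "Alan Turing"})
print(result["summary"])
```

The second step never sees the user's query directly; it works only on the first step's output, which is the defining trait of a chained workflow.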

Advantages of MultiChain

Modular Workflow:

Complex tasks are broken into smaller, manageable steps.

Each step can be customized independently.

Reusability of Intermediate Outputs:

Outputs of one chain (step) can be reused by subsequent chains, ensuring efficient workflows.

Scalability:

Useful for handling multi-step problems like information retrieval, summarization, and text transformation.

Flexibility:

Allows integration with external systems like retrievers, memory, or databases for richer functionality.

Can easily adapt to workflows with branching or conditional logic.

Clarity:

By separating tasks into multiple steps, the overall process becomes easier to debug and maintain.
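The branching mentioned under Flexibility might look like the following sketch, where an early step decides which later step runs. All function names are hypothetical, and the rule-based `classify` stands in for what would usually be a model call.

```python
# Sketch of conditional routing between steps.
# classify, answer_step and summarize_step are hypothetical placeholders.

def classify(text: str) -> str:
    """Toy classifier: treat anything ending in '?' as a question."""
    return "question" if text.strip().endswith("?") else "statement"


def answer_step(text: str) -> str:
    return f"ANSWER({text})"


def summarize_step(text: str) -> str:
    return f"SUMMARY({text})"


HANDLERS = {"question": answer_step, "statement": summarize_step}


def route(text: str):
    branch = classify(text)      # step 1: decide which branch applies
    handler = HANDLERS[branch]   # step 2: pick the matching step
    return branch, handler(text)


print(route("What is AI?"))
print(route("AI is a broad field."))
```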

Disadvantages of MultiChain

Complexity:

Requires additional setup for chaining, mapping inputs, and managing dependencies.

May be overkill for simple tasks.

Higher Resource Usage:

Each step that calls the LLM adds a model invocation, so computational cost and response time grow with the number of steps.

Dependency Management:

Outputs of one chain must be correctly mapped as inputs to the next, which can introduce errors.
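One way to catch mapping mistakes before any model is called is to check that every input a step needs is either an initial input or the output of an earlier step. The sketch below assumes a simple hypothetical description of each step as `(name, needed keys, produced key)`; `find_unmapped_inputs` is not part of any library.

```python
# Sketch: detect inputs that no earlier step (or initial input) provides.
# The (name, needs, produces) description format is an assumption for illustration.
from typing import Iterable, List, Tuple

StepSpec = Tuple[str, List[str], str]  # (step name, needed keys, produced key)


def find_unmapped_inputs(steps: List[StepSpec], initial_keys: Iterable[str]):
    available = set(initial_keys)
    problems = []
    for name, needs, produces in steps:
        for key in needs:
            if key not in available:
                problems.append((name, key))
        available.add(produces)  # later steps may use this output
    return problems


steps = [
    ("retrieval", ["input"], "retrieved_info"),
    ("faq_generation", ["retrieved_info"], "faqs"),
    # Deliberate mismatch: this step asks for "faq" but the step above produces "faqs"
    ("answer_generation", ["faq"], "detailed_answers"),
]

print(find_unmapped_inputs(steps, ["input"]))
```

A check like this turns a runtime failure deep inside the workflow into an error that shows up as soon as the chain is assembled.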

Use Case

For example, combining retrieval, summarization, and user interaction:

result = multichain.invoke({"input": "Tell me about Alan Turing."})

print(result["summarized_output"])

FAQ Generation from User Query

from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.runnables import RunnablePassthrough
from langchain_openai import ChatOpenAI  # provided by the langchain-openai package

# Initialize the LLM (the API key is read from the OPENAI_API_KEY environment variable)
llm = ChatOpenAI(model="gpt-4")

# Step 1: Retrieve information
retrieval_prompt = ChatPromptTemplate.from_messages([
    ("system", "You are an expert AI. Provide detailed information about the topic."),
    ("user", "{input}")
])

# Step 2: Generate FAQs
faq_prompt = ChatPromptTemplate.from_messages([
    ("system", "Based on the given information, generate a set of 5 frequently asked questions."),
    ("user", "{retrieved_info}")
])

# Create chains; StrOutputParser converts each chat message into plain text
retrieval_chain = retrieval_prompt | llm | StrOutputParser()
faq_chain = faq_prompt | llm | StrOutputParser()

# Combine chains with LCEL (LangChain Expression Language): each assign() adds one key
# to the running dict, so the FAQ step can read the "retrieved_info" from the first step
multichain = (
    RunnablePassthrough.assign(retrieved_info=retrieval_chain)
    | RunnablePassthrough.assign(faqs=faq_chain)
)

# Execute the MultiChain
query = "Tell me about quantum computing."
result = multichain.invoke({"input": query})

# Output
print("Retrieved Information:\n", result["retrieved_info"])
print("\nFAQs:\n", result["faqs"])

Retrieved Information:

Quantum computing is a type of computing that uses quantum-mechanical phenomena, such as superposition and entanglement, to perform operations on data...

FAQs:

1. What is quantum computing?

2. How does quantum computing differ from classical computing?

3. What are qubits, and how do they work?

4. What are the practical applications of quantum computing?

5. What are the challenges in building quantum computers?
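Because the FAQs come back as one block of text, a later step that needs individual questions has to split them first. A minimal sketch, assuming the model keeps the numbered format shown above; `parse_numbered_list` is a hypothetical helper, not a LangChain function.

```python
# Sketch: split a numbered list returned as free text into separate items.
import re
from typing import List


def parse_numbered_list(text: str) -> List[str]:
    items = []
    for line in text.splitlines():
        # Accept "1. item" or "1) item", ignoring leading whitespace
        match = re.match(r"\s*\d+[.)]\s+(.*\S)", line)
        if match:
            items.append(match.group(1))
    return items


sample = (
    "FAQs:\n"
    "1. What is quantum computing?\n"
    "\n"
    "2. How does quantum computing differ from classical computing?\n"
)
print(parse_numbered_list(sample))
```

Model output is not guaranteed to follow the expected format, so the caller should be ready for an empty or partial list.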

Another Example

from langchain.chains import SequentialChain

from langchain.prompts import ChatPromptTemplate

# 🔹 Define Available Chains

available_chains = {

"retrieval": ChatPromptTemplate.from_messages([

("system", "You are an expert AI. Provide detailed information about the topic."),

("user", "{input}")

]),

"faq_generation": ChatPromptTemplate.from_messages([

("system", "Based on the given information, generate a set of 5 frequently asked questions."),

("user", "{retrieved_info}")

]),

"answer_generation": ChatPromptTemplate.from_messages([

("system", "Provide detailed answers for each FAQ."),

("user", "FAQ: {faq}")

]),

"summarization": ChatPromptTemplate.from_messages([

("system", "Summarize the retrieved information in a concise and easy-to-read format."),

("user", "{retrieved_info}")

]),

"reference_suggestion": ChatPromptTemplate.from_messages([

("system", "Provide references or links where users can learn more about this topic."),

("user", "{retrieved_info}")

]),

"comparison": ChatPromptTemplate.from_messages([

("system", "Compare this topic with related concepts and highlight key differences."),

("user", "Topic: {input}")

]),

}

# 🔹 Function to Construct the Chain Dynamically
from operator import itemgetter

from langchain_core.output_parsers import StrOutputParser
from langchain_core.runnables import RunnablePassthrough

# (requested name, output key, {prompt variable: key in the running dict}), in execution order
FAQ_STEPS = [
    ("retrieval", "retrieved_info", {"input": "input"}),
    ("faq_generation", "faqs", {"retrieved_info": "retrieved_info"}),
    ("answer_generation", "detailed_answers", {"faq": "faqs"}),
    ("summarization", "summary", {"retrieved_info": "retrieved_info"}),
    ("reference_suggestion", "references", {"retrieved_info": "retrieved_info"}),
    ("comparison", "comparison", {"input": "input"}),
]

def construct_faq_chain(user_request, llm):
    requested_chains = set(user_request)  # Convert user request to a set for easy lookup
    multichain = RunnablePassthrough()  # Starts as a no-op that passes the input dict through

    for name, output_key, input_map in FAQ_STEPS:
        if name not in requested_chains:
            continue
        # Pick the values this prompt needs out of the running dict.
        # A step whose source key is missing (for example "faq_generation"
        # without "retrieval") fails with a KeyError at invoke time.
        inputs = {variable: itemgetter(key) for variable, key in input_map.items()}
        step = inputs | available_chains[name] | llm | StrOutputParser()
        # Store the step's text output under output_key for later steps
        multichain = multichain | RunnablePassthrough.assign(**{output_key: step})

    return multichain

# 🔹 User Request Configuration

# User can request any combination of:

# ["retrieval", "faq_generation", "answer_generation", "summarization", "reference_suggestion", "comparison"]

user_selected_chains = ["retrieval", "faq_generation", "answer_generation", "summarization", "reference_suggestion", "comparison"]

# 🔹 Create the Chain Based on User Request

faq_multichain = construct_faq_chain(user_selected_chains, llm)

# 🔹 Execute MultiChain

user_query = "What is machine learning?"

result = faq_multichain.invoke({"input": user_query})

# 🔹 Output Results Dynamically

print("\n📖 Retrieved Information:\n", result.get("retrieved_info", "Not Available"))

print("\n❓ FAQs Generated:\n", result.get("faqs", "Not Available"))

print("\n💡 Detailed Answers:\n", result.get("detailed_answers", "Not Available"))

print("\n📌 Summarized Information:\n", result.get("summary", "Not Available"))

print("\n🔗 References & Further Reading:\n", result.get("references", "Not Available"))

print("\n⚖️ Comparison with Related Topics:\n", result.get("comparison", "Not Available"))
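Since users can pick any combination of steps, some selections cannot run: FAQ generation, for instance, needs the output of retrieval. The sketch below checks a selection against a prerequisite table derived from the input mappings used in this example. `PREREQUISITES` and `missing_prerequisites` are hypothetical names introduced for illustration.

```python
# Sketch: report selected steps whose prerequisites were not selected.
# The table mirrors which earlier output each step reads in the example above.
from typing import Dict, List

PREREQUISITES: Dict[str, List[str]] = {
    "retrieval": [],
    "faq_generation": ["retrieval"],
    "answer_generation": ["faq_generation"],
    "summarization": ["retrieval"],
    "reference_suggestion": ["retrieval"],
    "comparison": [],
}


def missing_prerequisites(selected: List[str]) -> Dict[str, List[str]]:
    chosen = set(selected)
    missing = {}
    for name in selected:
        lacking = [p for p in PREREQUISITES.get(name, []) if p not in chosen]
        if lacking:
            missing[name] = lacking
    return missing


print(missing_prerequisites(["faq_generation", "comparison"]))
print(missing_prerequisites(list(PREREQUISITES)))
```

Running a check like this before building the chain lets the application reject or repair an invalid selection instead of failing midway through execution.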

Another example: based on user request

from flask import Flask, request, jsonify

from langchain.chains import SequentialChain

from langchain.prompts import ChatPromptTemplate

app = Flask(__name__)

# 🔹 Define Available Chains

available_chains = {

"retrieval": ChatPromptTemplate.from_messages([

("system", "You are an expert AI. Provide detailed information about the topic."),

("user", "{input}")

]),

"faq_generation": ChatPromptTemplate.from_messages([

("system", "Based on the given information, generate a set of 5 frequently asked questions."),

("user", "{retrieved_info}")

]),

"answer_generation": ChatPromptTemplate.from_messages([

("system", "Provide detailed answers for each FAQ."),

("user", "FAQ: {faq}")

]),

"summarization": ChatPromptTemplate.from_messages([

("system", "Summarize the retrieved information in a concise and easy-to-read format."),

("user", "{retrieved_info}")

]),

"reference_suggestion": ChatPromptTemplate.from_messages([

("system", "Provide references or links where users can learn more about this topic."),

("user", "{retrieved_info}")

]),

"comparison": ChatPromptTemplate.from_messages([

("system", "Compare this topic with related concepts and highlight key differences."),

("user", "Topic: {input}")

]),

}

# 🔹 Function to Construct the Chain Dynamically
from operator import itemgetter

from langchain_core.output_parsers import StrOutputParser
from langchain_core.runnables import RunnablePassthrough

# (requested name, output key, {prompt variable: key in the running dict}), in execution order
FAQ_STEPS = [
    ("retrieval", "retrieved_info", {"input": "input"}),
    ("faq_generation", "faqs", {"retrieved_info": "retrieved_info"}),
    ("answer_generation", "detailed_answers", {"faq": "faqs"}),
    ("summarization", "summary", {"retrieved_info": "retrieved_info"}),
    ("reference_suggestion", "references", {"retrieved_info": "retrieved_info"}),
    ("comparison", "comparison", {"input": "input"}),
]

def construct_faq_chain(user_selected_chains, llm):
    multichain = RunnablePassthrough()  # Starts as a no-op that passes the input dict through

    for name, output_key, input_map in FAQ_STEPS:
        if name not in user_selected_chains:
            continue
        # Pick the values this prompt needs out of the running dict
        inputs = {variable: itemgetter(key) for variable, key in input_map.items()}
        step = inputs | available_chains[name] | llm | StrOutputParser()
        # Store the step's text output under output_key for later steps
        multichain = multichain | RunnablePassthrough.assign(**{output_key: step})

    return multichain

# 🔹 API Endpoint to Handle Frontend Requests
@app.route("/generate_faq", methods=["POST"])
def generate_faq():
    try:
        # silent=True returns None instead of raising when the body is not valid JSON
        data = request.get_json(silent=True) or {}

        # Extract user inputs from frontend
        user_query = data.get("query", "")
        selected_chains = data.get("selected_chains", [])  # List of selected chains

        if not user_query or not selected_chains:
            return jsonify({"error": "Missing query or selected chains"}), 400

        # 🔹 Create the Chain Based on User Request
        llm = None  # Initialize your LLM instance here
        faq_multichain = construct_faq_chain(selected_chains, llm)

        # 🔹 Execute MultiChain
        result = faq_multichain.invoke({"input": user_query})

        # 🔹 Prepare Response
        response = {
            "retrieved_info": result.get("retrieved_info", "Not Available"),
            "faqs": result.get("faqs", "Not Available"),
            "detailed_answers": result.get("detailed_answers", "Not Available"),
            "summary": result.get("summary", "Not Available"),
            "references": result.get("references", "Not Available"),
            "comparison": result.get("comparison", "Not Available"),
        }
        return jsonify(response)

    except Exception as e:
        return jsonify({"error": str(e)}), 500
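The endpoint above only checks that the two fields are present. A slightly stricter check, sketched below in plain Python so it can be tested without Flask, also rejects chain names the server does not know. `validate_payload` and `ALLOWED_CHAINS` are hypothetical names; the allowed set simply lists the keys of `available_chains` from this example.

```python
# Sketch: validate the request body before building a chain from it.
# validate_payload is a hypothetical helper, not part of Flask or LangChain.
from typing import Any, Tuple

ALLOWED_CHAINS = {
    "retrieval",
    "faq_generation",
    "answer_generation",
    "summarization",
    "reference_suggestion",
    "comparison",
}


def validate_payload(data: Any) -> Tuple[bool, str]:
    """Return (True, "") when the payload is usable, else (False, reason)."""
    if not isinstance(data, dict):
        return False, "Request body must be a JSON object"
    query = data.get("query", "")
    selected = data.get("selected_chains", [])
    if not isinstance(query, str) or not query.strip():
        return False, "Missing query"
    if not isinstance(selected, list) or not selected:
        return False, "Missing selected chains"
    if not all(isinstance(name, str) for name in selected):
        return False, "Chain names must be strings"
    unknown = sorted(set(selected) - ALLOWED_CHAINS)
    if unknown:
        return False, "Unknown chains: " + ", ".join(unknown)
    return True, ""


print(validate_payload({"query": "What is ML?", "selected_chains": ["retrieval"]}))
print(validate_payload({"query": "What is ML?", "selected_chains": ["sumarization"]}))
```

In the route, a failed check would map naturally to a 400 response carrying the returned reason.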

Recipe Recommendation with Instructions

Goal: Recommend a recipe based on a user’s preferred cuisine and then provide step-by-step cooking instructions.

# Prompts for recommending a recipe and instructions

recommendation_prompt = ChatPromptTemplate.from_messages([

("system", "You are a culinary expert. Recommend a dish based on the cuisine preference."),

("user", "{input}")

])

instruction_prompt = ChatPromptTemplate.from_messages([

("system", "Provide detailed cooking instructions for the given recipe."),

("user", "{recipe}")

])

from langchain_core.output_parsers import StrOutputParser
from langchain_core.runnables import RunnablePassthrough

# Create chains; StrOutputParser converts each chat message into plain text
recommendation_chain = recommendation_prompt | llm | StrOutputParser()
instruction_chain = instruction_prompt | llm | StrOutputParser()

# MultiChain setup: the recommended dish is stored under "recipe",
# the variable name that instruction_prompt expects
multichain = (
    RunnablePassthrough.assign(recipe=recommendation_chain)
    | RunnablePassthrough.assign(cooking_instructions=instruction_chain)
)

# Execute the MultiChain

query = "Italian cuisine"

result = multichain.invoke({"input": query})

# Output

print("Recipe and Instructions:\n", result["cooking_instructions"])

Recipe and Instructions:

Recommended Recipe: Spaghetti Carbonara

1. Cook spaghetti in salted boiling water until al dente.

2. Sauté pancetta until crispy.

3. Whisk eggs and Parmesan cheese in a bowl.

4. Combine spaghetti with pancetta and egg mixture, stirring quickly.

5. Serve with additional Parmesan and freshly ground black pepper.

Another Example

recommendation_prompt = ChatPromptTemplate.from_messages([

("system", "You are a culinary expert. Recommend a dish based on the cuisine preference."),

("user", "{input}")

])

# 2️⃣ Cooking Instructions Prompt

instruction_prompt = ChatPromptTemplate.from_messages([

("system", "Provide detailed cooking instructions for the given recipe."),

("user", "{recipe}")

])

# 3️⃣ Nutritional Analysis Prompt

nutrition_prompt = ChatPromptTemplate.from_messages([

("system", "Analyze the following recipe and determine its macronutrient breakdown (calories, proteins, fats, carbohydrates)."),

("user", "{recipe}")

])

# 4️⃣ Vitamin & Mineral Content Prompt

vitamin_prompt = ChatPromptTemplate.from_messages([

("system", "List the key vitamins and minerals present in the given recipe."),

("user", "{recipe}")

])

# 5️⃣ Health Benefits Prompt

health_benefit_prompt = ChatPromptTemplate.from_messages([

("system", "Determine the health benefits of the given recipe based on its ingredients."),

("user", "{recipe}")

])

from langchain_core.output_parsers import StrOutputParser
from langchain_core.runnables import RunnablePassthrough

# Create chains; StrOutputParser converts each chat message into plain text
recommendation_chain = recommendation_prompt | llm | StrOutputParser()
instruction_chain = instruction_prompt | llm | StrOutputParser()
nutrition_chain = nutrition_prompt | llm | StrOutputParser()
vitamin_chain = vitamin_prompt | llm | StrOutputParser()
health_benefit_chain = health_benefit_prompt | llm | StrOutputParser()

# MultiChain setup: recommend a dish first, then run the four follow-up steps,
# which all read the recommended dish from the "recipe" key
multichain = (
    RunnablePassthrough.assign(recipe=recommendation_chain)
    | RunnablePassthrough.assign(
        cooking_instructions=instruction_chain,
        nutrition_facts=nutrition_chain,
        vitamins=vitamin_chain,
        health_benefits=health_benefit_chain,
    )
)

# Execute the MultiChain
query = "Italian cuisine"
result = multichain.invoke({"input": query})

# Output Results
print("\n🔹 Recommended Recipe:\n", result["recipe"])
print("\n🔹 Cooking Instructions:\n", result["cooking_instructions"])
print("\n🔹 Nutritional Facts:\n", result["nutrition_facts"])
print("\n🔹 Vitamin & Mineral Content:\n", result["vitamins"])
print("\n🔹 Health Benefits:\n", result["health_benefits"])

Another example based on user request

# 🔹 Define Available Chains

available_chains = {

"recommendation": ChatPromptTemplate.from_messages([

("system", "You are a culinary expert. Recommend a dish based on the cuisine preference."),

("user", "{input}")

]),

"cooking_instructions": ChatPromptTemplate.from_messages([

("system", "Provide detailed cooking instructions for the given recipe."),

("user", "{recipe}")

]),

"nutrition_analysis": ChatPromptTemplate.from_messages([

("system", "Analyze the following recipe and determine its macronutrient breakdown (calories, proteins, fats, carbohydrates)."),

("user", "{recipe}")

]),

"vitamin_minerals": ChatPromptTemplate.from_messages([

("system", "List the key vitamins and minerals present in the given recipe."),

("user", "{recipe}")

]),

"health_benefits": ChatPromptTemplate.from_messages([

("system", "Determine the health benefits of the given recipe based on its ingredients."),

("user", "{recipe}")

]),

}

# 🔹 Function to Construct the Chain Dynamically
from operator import itemgetter

from langchain_core.output_parsers import StrOutputParser
from langchain_core.runnables import RunnablePassthrough

# (requested name, output key, {prompt variable: key in the running dict}), in execution order
RECIPE_STEPS = [
    ("recommendation", "recommended_recipe", {"input": "input"}),
    ("cooking_instructions", "cooking_instructions", {"recipe": "recommended_recipe"}),
    ("nutrition_analysis", "nutrition_facts", {"recipe": "recommended_recipe"}),
    ("vitamin_minerals", "vitamins", {"recipe": "recommended_recipe"}),
    ("health_benefits", "health_benefits", {"recipe": "recommended_recipe"}),
]

def construct_recipe_chain(user_selected_chains, llm):
    multichain = RunnablePassthrough()  # Starts as a no-op that passes the input dict through

    for name, output_key, input_map in RECIPE_STEPS:
        if name not in user_selected_chains:
            continue
        # Pick the values this prompt needs out of the running dict
        inputs = {variable: itemgetter(key) for variable, key in input_map.items()}
        step = inputs | available_chains[name] | llm | StrOutputParser()
        # Store the step's text output under output_key for later steps
        multichain = multichain | RunnablePassthrough.assign(**{output_key: step})

    return multichain

# 🔹 API Endpoint to Handle Frontend Requests
@app.route("/get_recipe", methods=["POST"])
def get_recipe():
    try:
        # silent=True returns None instead of raising when the body is not valid JSON
        data = request.get_json(silent=True) or {}

        # Extract user inputs from frontend
        user_query = data.get("query", "")
        selected_chains = data.get("selected_chains", [])  # List of selected chains

        if not user_query or not selected_chains:
            return jsonify({"error": "Missing query or selected chains"}), 400

        # 🔹 Create the Chain Based on User Request
        llm = None  # Initialize your LLM instance here
        recipe_multichain = construct_recipe_chain(selected_chains, llm)

        # 🔹 Execute MultiChain
        result = recipe_multichain.invoke({"input": user_query})

        # 🔹 Prepare Response
        response = {
            "recommended_recipe": result.get("recommended_recipe", "Not Available"),
            "cooking_instructions": result.get("cooking_instructions", "Not Available"),
            "nutrition_facts": result.get("nutrition_facts", "Not Available"),
            "vitamins": result.get("vitamins", "Not Available"),
            "health_benefits": result.get("health_benefits", "Not Available"),
        }
        return jsonify(response)

    except Exception as e:
        return jsonify({"error": str(e)}), 500

Sentiment Analysis and Summary

Goal: Analyze the sentiment of a user’s text and provide a summary based on the sentiment.

# Prompts for sentiment analysis and summary

sentiment_prompt = ChatPromptTemplate.from_messages([

("system", "Determine the sentiment (positive, negative, or neutral) of the given text."),

("user", "{input}")

])

summary_prompt = ChatPromptTemplate.from_messages([

("system", "Summarize the content based on its sentiment."),

("user", "Sentiment: {sentiment}\nText: {input}")

])

from langchain_core.output_parsers import StrOutputParser
from langchain_core.runnables import RunnablePassthrough

# Create chains; StrOutputParser converts each chat message into plain text
sentiment_chain = sentiment_prompt | llm | StrOutputParser()
summary_chain = summary_prompt | llm | StrOutputParser()

# MultiChain setup: the summary step reads both the original "input"
# and the "sentiment" produced by the first step
multichain = (
    RunnablePassthrough.assign(sentiment=sentiment_chain)
    | RunnablePassthrough.assign(sentiment_summary=summary_chain)
)

# Execute the MultiChain

text = "The movie had stunning visuals, but the storyline was boring and predictable."

result = multichain.invoke({"input": text})

# Output

print("Sentiment and Summary:\n", result["sentiment_summary"])

Output

Sentiment and Summary:

Sentiment: Negative

Summary: Although the visuals were impressive, the predictable storyline disappointed viewers.
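The sentiment step returns free text, so code that branches on the label may want to normalize it first. A minimal sketch, assuming the model mentions one of the three labels named in the prompt; `parse_sentiment` is a hypothetical helper.

```python
# Sketch: pull a normalized sentiment label out of free-text model output.
import re


def parse_sentiment(raw: str) -> str:
    """Return 'positive', 'negative' or 'neutral', or 'unknown' if none is found."""
    match = re.search(r"\b(positive|negative|neutral)\b", raw.lower())
    return match.group(1) if match else "unknown"


print(parse_sentiment("Sentiment: Negative"))
print(parse_sentiment("The tone is hard to pin down."))
```

Returning `"unknown"` instead of raising keeps the workflow running when the model answers in an unexpected form; whether that is the right choice depends on the application.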

Job Recommendation and Resume Tailoring

Goal: Recommend a job based on user input and tailor their resume to match the job description.

# Prompts for job recommendation and resume tailoring

job_prompt = ChatPromptTemplate.from_messages([

("system", "Recommend a job based on the user's input."),

("user", "{input}")

])

resume_prompt = ChatPromptTemplate.from_messages([

("system", "Tailor the user's resume to match the given job description."),

("user", "Job: {job}\nResume: {resume}")

])

from langchain_core.output_parsers import StrOutputParser
from langchain_core.runnables import RunnablePassthrough

# Create chains; StrOutputParser converts each chat message into plain text
job_chain = job_prompt | llm | StrOutputParser()
resume_chain = resume_prompt | llm | StrOutputParser()

# MultiChain setup: the recommended job is stored under "job"; the resume step
# reads it together with the "resume" supplied in the initial input
multichain = (
    RunnablePassthrough.assign(job=job_chain)
    | RunnablePassthrough.assign(tailored_resume=resume_chain)
)

# Execute the MultiChain

user_input = "Software engineering roles in AI"

resume_text = "Experienced software engineer with expertise in backend development and databases."

result = multichain.invoke({"input": user_input, "resume": resume_text})

# Output

print("Tailored Resume:\n", result["tailored_resume"])

Output

Tailored Resume:

Recommended Job: AI Software Engineer at OpenAI

Resume: Experienced software engineer with expertise in backend development, databases, and AI tools such as TensorFlow and PyTorch.

Another Example

# 🔹 Define Available Chains

available_chains = {

"job_recommendation": ChatPromptTemplate.from_messages([

("system", "Suggest job openings based on the user's skills, experience, and job preferences."),

("user", "{user_profile}")

]),

"resume_optimization": ChatPromptTemplate.from_messages([

("system", "Tailor the resume to better match the recommended job description."),

("user", "Resume: {resume}\nJob Description: {job}")

]),

"cover_letter_generation": ChatPromptTemplate.from_messages([

("system", "Generate a personalized cover letter for the selected job based on the user's profile."),

("user", "Job: {job}\nUser Profile: {user_profile}")

]),

"salary_package_estimation": ChatPromptTemplate.from_messages([

("system", "Estimate the salary range for the given job based on industry standards and experience level."),

("user", "Job: {job}")

]),

"job_type_classification": ChatPromptTemplate.from_messages([

("system", "Classify whether the given job is remote, hybrid, or on-site."),

("user", "Job: {job}")

]),

"future_demand_prediction": ChatPromptTemplate.from_messages([

("system", "Analyze the job market trends and predict the future demand for this job role."),

("user", "Job: {job}")

]),

"career_growth_analysis": ChatPromptTemplate.from_messages([

("system", "Evaluate the long-term career growth potential and opportunities for advancement in this job role."),

("user", "Job: {job}")

]),

}

# 🔹 Function to Construct the Chain Dynamically
from operator import itemgetter

from langchain_core.output_parsers import StrOutputParser
from langchain_core.runnables import RunnablePassthrough

# (requested name, output key, {prompt variable: key in the running dict}), in execution order
JOB_STEPS = [
    ("job_recommendation", "recommended_jobs", {"user_profile": "user_profile"}),
    ("resume_optimization", "optimized_resume", {"resume": "resume", "job": "recommended_jobs"}),
    ("cover_letter_generation", "cover_letter", {"job": "recommended_jobs", "user_profile": "user_profile"}),
    ("salary_package_estimation", "salary_range", {"job": "recommended_jobs"}),
    ("job_type_classification", "job_type", {"job": "recommended_jobs"}),
    ("future_demand_prediction", "future_demand", {"job": "recommended_jobs"}),
    ("career_growth_analysis", "career_growth", {"job": "recommended_jobs"}),
]

def construct_job_chain(user_request, llm):
    requested_chains = set(user_request)  # Convert user request to a set for easy lookup
    multichain = RunnablePassthrough()  # Starts as a no-op that passes the input dict through

    for name, output_key, input_map in JOB_STEPS:
        if name not in requested_chains:
            continue
        # Pick the values this prompt needs out of the running dict.
        # Every step after the first reads "recommended_jobs", so it fails with
        # a KeyError at invoke time if "job_recommendation" was not requested.
        inputs = {variable: itemgetter(key) for variable, key in input_map.items()}
        step = inputs | available_chains[name] | llm | StrOutputParser()
        # Store the step's text output under output_key for later steps
        multichain = multichain | RunnablePassthrough.assign(**{output_key: step})

    return multichain

# 🔹 User Request Configuration

# User can request any combination of:

# ["job_recommendation", "resume_optimization", "cover_letter_generation", "salary_package_estimation",

# "job_type_classification", "future_demand_prediction", "career_growth_analysis"]

user_selected_chains = ["job_recommendation", "resume_optimization", "cover_letter_generation",

"salary_package_estimation", "job_type_classification", "future_demand_prediction",

"career_growth_analysis"]

# 🔹 Create the Chain Based on User Request

job_multichain = construct_job_chain(user_selected_chains, llm)

# 🔹 Execute MultiChain

user_profile = "Software engineer with 5 years of experience in Python, AI, and cloud computing."

resume = "Previous work experience at Google, skilled in Python and AI development."

result = job_multichain.invoke({"user_profile": user_profile, "resume": resume})

# 🔹 Output Results Dynamically

print("\n💼 Recommended Jobs:\n", result.get("recommended_jobs", "Not Available"))

print("\n📄 Optimized Resume:\n", result.get("optimized_resume", "Not Available"))

print("\n✉️ Cover Letter:\n", result.get("cover_letter", "Not Available"))

print("\n💰 Salary Range:\n", result.get("salary_range", "Not Available"))

print("\n🏢 Job Type (Remote/On-site/Hybrid):\n", result.get("job_type", "Not Available"))

print("\n📈 Future Demand Prediction:\n", result.get("future_demand", "Not Available"))

print("\n🚀 Career Growth Potential:\n", result.get("career_growth", "Not Available"))

Language Translation and Polishing

Goal: Translate text to another language and then polish the translation for fluency.

# Prompts for translation and polishing

translation_prompt = ChatPromptTemplate.from_messages([

("system", "Translate the text to French."),

("user", "{input}")

])

polishing_prompt = ChatPromptTemplate.from_messages([

("system", "Polish the translated text for fluency."),

("user", "{translated_text}")

])

from langchain_core.output_parsers import StrOutputParser
from langchain_core.runnables import RunnablePassthrough

# Create chains; StrOutputParser converts each chat message into plain text
translation_chain = translation_prompt | llm | StrOutputParser()
polishing_chain = polishing_prompt | llm | StrOutputParser()

# MultiChain setup: the raw translation is stored under "translated_text",
# the variable name that polishing_prompt expects
multichain = (
    RunnablePassthrough.assign(translated_text=translation_chain)
    | RunnablePassthrough.assign(final_translation=polishing_chain)
)

# Execute the MultiChain

text = "The future of artificial intelligence is exciting and full of potential."

result = multichain.invoke({"input": text})

# Output

print("Polished Translation:\n", result["final_translation"])

Output

Polished Translation:

L'avenir de l'intelligence artificielle est passionnant et plein de potentiel.

Research Workflow: Retrieval, Summarization, and FAQ Generation

Task:

Retrieve detailed information about a topic.

Summarize the retrieved content.

Generate a list of frequently asked questions (FAQs) from the summary.

Code:

from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.runnables import RunnablePassthrough
from langchain_openai import ChatOpenAI  # provided by the langchain-openai package

# Initialize the LLM (the API key is read from the OPENAI_API_KEY environment variable)
llm = ChatOpenAI(model="gpt-4")

# Step 1: Retrieve detailed information
retrieval_prompt = ChatPromptTemplate.from_messages([
    ("system", "You are a knowledgeable assistant. Provide detailed information on the topic."),
    ("user", "{input}")
])

# Step 2: Summarize the content
summary_prompt = ChatPromptTemplate.from_messages([
    ("system", "Summarize the given content in a concise manner."),
    ("user", "{retrieved_info}")
])

# Step 3: Generate FAQs
faq_prompt = ChatPromptTemplate.from_messages([
    ("system", "Generate 5 FAQs based on the summarized information."),
    ("user", "{summary}")
])

# Create individual chains; StrOutputParser converts each chat message into plain text
retrieval_chain = retrieval_prompt | llm | StrOutputParser()
summary_chain = summary_prompt | llm | StrOutputParser()
faq_chain = faq_prompt | llm | StrOutputParser()

# Combine chains: each assign() adds one key that the next step reads
multichain = (
    RunnablePassthrough.assign(retrieved_info=retrieval_chain)
    | RunnablePassthrough.assign(summary=summary_chain)
    | RunnablePassthrough.assign(faqs=faq_chain)
)

# Execute MultiChain

query = "Quantum computing"

result = multichain.invoke({"input": query})

# Output

print("FAQs:\n", result["faqs"])

Output:

FAQs:

1. What is quantum computing?

2. How does it differ from classical computing?

3. What are the main components of a quantum computer?

4. What industries are benefiting from quantum computing?

5. What challenges do we face in building quantum computers?
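The three steps above pass data by name: each step reads keys from a shared dictionary and writes one new key. The following is a minimal sketch of that pattern in plain Python, with stub functions standing in for the LLM calls. `run_pipeline` and the stub steps are hypothetical names used only for illustration; they are not LangChain APIs.

```python
from typing import Callable, Dict, List, Tuple

State = Dict[str, str]
Step = Tuple[str, Callable[[State], str]]  # (output key, function of the current state)


def run_pipeline(steps: List[Step], inputs: State) -> State:
    """Run the steps in order; each step adds one key to a copy of the inputs."""
    state = dict(inputs)
    for output_key, func in steps:
        state[output_key] = func(state)
    return state


# Stub steps standing in for the retrieval, summary, and FAQ chains (assumed behavior).
steps: List[Step] = [
    ("retrieved_info", lambda s: f"Details about {s['input']}"),
    ("summary", lambda s: s["retrieved_info"].upper()),
    ("faqs", lambda s: f"FAQs from: {s['summary']}"),
]

result = run_pipeline(steps, {"input": "Quantum computing"})
print(result["faqs"])
```

Because every intermediate value stays in the dictionary, a later step can read any earlier output, not only the one directly before it.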

Personal Assistant: Task Suggestion, Prioritization, and Scheduling

Task:

Suggest tasks based on a user's input.

Prioritize the suggested tasks.

Generate a daily schedule based on priorities.

Code:

# Prompts for task suggestion, prioritization, and scheduling

suggest_task_prompt = ChatPromptTemplate.from_messages([

("system", "You are a productivity assistant. Suggest tasks based on the user's input."),

("user", "{input}")

])

prioritize_task_prompt = ChatPromptTemplate.from_messages([

("system", "Prioritize the following tasks."),

("user", "{tasks}")

])

schedule_prompt = ChatPromptTemplate.from_messages([

("system", "Generate a daily schedule based on the prioritized tasks."),

("user", "{prioritized_tasks}")

])

# Create chains

task_chain = suggest_task_prompt | llm

priority_chain = prioritize_task_prompt | llm

schedule_chain = schedule_prompt | llm

# MultiChain setup with LCEL piping; SequentialChain has no map_inputs/map_outputs arguments

from langchain_core.output_parsers import StrOutputParser

from langchain_core.runnables import RunnablePassthrough

to_text = StrOutputParser()  # converts each model reply to a plain string

multichain = (

    RunnablePassthrough.assign(tasks=task_chain | to_text)  # reads {input}

    | RunnablePassthrough.assign(prioritized_tasks=priority_chain | to_text)  # reads {tasks}

    | RunnablePassthrough.assign(daily_schedule=schedule_chain | to_text)  # reads {prioritized_tasks}

)

# Execute MultiChain

query = "I need to prepare a presentation, finish a report, and exercise."

result = multichain.invoke({"input": query})

# Output

print("Daily Schedule:\n", result["daily_schedule"])

Output:

Daily Schedule:

9:00 AM - Prepare presentation slides

11:00 AM - Draft the report

2:00 PM - Finalize the presentation

4:00 PM - Review and edit the report

6:00 PM - Exercise

Language Learning: Translation, Grammar Explanation, and Sentence Practice

Task:

Translate text into a foreign language.

Explain the grammar structure of the translated sentence.

Generate practice sentences for the learner.

Code:

# Prompts for translation, grammar explanation, and sentence practice

translation_prompt = ChatPromptTemplate.from_messages([

("system", "Translate the following text into Spanish."),

("user", "{input}")

])

grammar_prompt = ChatPromptTemplate.from_messages([

("system", "Explain the grammar structure of the translated text."),

("user", "{translated_text}")

])

practice_prompt = ChatPromptTemplate.from_messages([

("system", "Generate 3 practice sentences based on the grammar explained."),

("user", "{grammar}")

])

# Create chains

translation_chain = translation_prompt | llm

grammar_chain = grammar_prompt | llm

practice_chain = practice_prompt | llm

# MultiChain setup with LCEL piping; SequentialChain has no map_inputs/map_outputs arguments

from langchain_core.output_parsers import StrOutputParser

from langchain_core.runnables import RunnablePassthrough

to_text = StrOutputParser()  # converts each model reply to a plain string

multichain = (

    RunnablePassthrough.assign(translated_text=translation_chain | to_text)  # reads {input}

    | RunnablePassthrough.assign(grammar=grammar_chain | to_text)  # reads {translated_text}

    | RunnablePassthrough.assign(practice_sentences=practice_chain | to_text)  # reads {grammar}

)

# Execute MultiChain

text = "The cat is sitting on the mat."

result = multichain.invoke({"input": text})

# Output

print("Practice Sentences:\n", result["practice_sentences"])

Output:

Practice Sentences:

1. El perro está sentado en la alfombra.

2. El niño está jugando en el patio.

3. La mujer está leyendo en la silla.

Movie Recommendation: Suggestion, Sentiment Analysis, and Review Summary

Task:

Recommend a movie based on user preferences.

Perform sentiment analysis on recent reviews for the movie.

Provide a brief summary of the reviews.

Code:

# Prompts for movie suggestion, sentiment analysis, and review summary

movie_prompt = ChatPromptTemplate.from_messages([

("system", "Suggest a movie based on the user's preferences."),

("user", "{input}")

])

sentiment_prompt = ChatPromptTemplate.from_messages([

("system", "Analyze the sentiment of the following reviews."),

("user", "{reviews}")

])

review_summary_prompt = ChatPromptTemplate.from_messages([

("system", "Summarize the reviews based on their sentiment."),

("user", "Sentiment: {sentiment}\nReviews: {reviews}")

])

# Create chains

movie_chain = movie_prompt | llm

sentiment_chain = sentiment_prompt | llm

review_summary_chain = review_summary_prompt | llm

# MultiChain setup with LCEL piping; SequentialChain has no map_inputs/map_outputs arguments

from langchain_core.output_parsers import StrOutputParser

from langchain_core.runnables import RunnablePassthrough

to_text = StrOutputParser()  # converts each model reply to a plain string

multichain = (

    RunnablePassthrough.assign(recommended_movie=movie_chain | to_text)  # reads {input}

    | RunnablePassthrough.assign(sentiment=sentiment_chain | to_text)  # reads {reviews}

    | RunnablePassthrough.assign(review_summary=review_summary_chain | to_text)  # reads {sentiment} and {reviews}

)

# Execute MultiChain

user_input = "I enjoy sci-fi and action movies."

reviews = "The movie was visually stunning but lacked a strong storyline."

result = multichain.invoke({"input": user_input, "reviews": reviews})

# Output

print("Review Summary:\n", result["review_summary"])

Output:

Review Summary:

The movie received mixed reviews. While the visuals were praised, the storyline was criticized for being weak.

Business Pitch: Idea Generation, Problem-Solution Match, and Presentation Draft

Task:

Generate a business idea based on user input.

Match the idea to a specific problem and solution.

Draft a presentation outline for the idea.

Another Example: Building the Movie MultiChain Dynamically

from langchain.chains import SequentialChain

from langchain.prompts import ChatPromptTemplate

# 🔹 Define Available Chains

available_chains = {

"movie_recommendation": ChatPromptTemplate.from_messages([

("system", "Suggest a movie based on the user's preferences."),

("user", "{input}")

]),

"latest_movie": ChatPromptTemplate.from_messages([

("system", "Suggest a recently released movie similar to the user's preferences."),

("user", "{input}")

]),

"movie_genre": ChatPromptTemplate.from_messages([

("system", "Classify the given movie into genres such as action, drama, sci-fi, comedy, horror, etc."),

("user", "Movie: {movie}")

]),

"movie_cast": ChatPromptTemplate.from_messages([

("system", "Provide details about the cast of the given movie."),

("user", "Movie: {movie}")

]),

"movie_ratings": ChatPromptTemplate.from_messages([

("system", "Fetch the audience and critic ratings for the given movie."),

("user", "Movie: {movie}")

]),

"box_office": ChatPromptTemplate.from_messages([

("system", "Provide box office collection details for the given movie."),

("user", "Movie: {movie}")

]),

"sentiment_analysis": ChatPromptTemplate.from_messages([

("system", "Analyze the sentiment of the following reviews."),

("user", "{reviews}")

]),

"review_summary": ChatPromptTemplate.from_messages([

("system", "Summarize the reviews based on their sentiment."),

("user", "Sentiment: {sentiment}\nReviews: {reviews}")

]),

}

# 🔹 Function to Construct the Chain Dynamically

from operator import itemgetter

from langchain_core.output_parsers import StrOutputParser

from langchain_core.runnables import RunnableParallel, RunnablePassthrough

# (request name, output key, {prompt variable: state key}), listed in dependency order

MOVIE_STEPS = [

    ("movie_recommendation", "recommended_movie", {"input": "input"}),

    ("latest_movie", "latest_movie", {"input": "input"}),

    ("movie_genre", "movie_genre", {"movie": "recommended_movie"}),

    ("movie_cast", "movie_cast", {"movie": "recommended_movie"}),

    ("movie_ratings", "movie_ratings", {"movie": "recommended_movie"}),

    ("box_office", "box_office", {"movie": "recommended_movie"}),

    ("sentiment_analysis", "sentiment", {"reviews": "reviews"}),

    ("review_summary", "review_summary", {"sentiment": "sentiment", "reviews": "reviews"}),

]

def construct_movie_chain(user_request, llm):

    # LCEL piping is used here; SequentialChain has no map_inputs/map_outputs arguments

    requested_chains = set(user_request)  # Convert user request to set for easy lookup

    multichain = RunnablePassthrough()  # starts with the raw inputs: "input" and "reviews"

    for name, output_key, mapping in MOVIE_STEPS:

        if name not in requested_chains:

            continue

        # Pull the mapped state keys into the variables this prompt expects

        select = RunnableParallel({var: itemgetter(key) for var, key in mapping.items()})

        step = select | available_chains[name] | llm | StrOutputParser()

        # Each selected step adds its result to the running state under output_key

        multichain = multichain | RunnablePassthrough.assign(**{output_key: step})

    return multichain

# 🔹 User Request Configuration

# User can request any combination of the following: ["movie_recommendation", "latest_movie", "movie_genre", "movie_cast", "movie_ratings", "box_office", "sentiment_analysis", "review_summary"]

user_selected_chains = ["movie_recommendation", "latest_movie", "movie_genre", "movie_cast", "movie_ratings", "box_office", "sentiment_analysis", "review_summary"]

# 🔹 Create the Chain Based on User Request

movie_multichain = construct_movie_chain(user_selected_chains, llm)

# 🔹 Execute MultiChain

user_input = "I enjoy sci-fi and action movies."

reviews = "The movie was visually stunning but lacked a strong storyline."

result = movie_multichain.invoke({"input": user_input, "reviews": reviews})

# 🔹 Output Results Dynamically

print("\n🎬 Recommended Movie:\n", result.get("recommended_movie", "Not Available"))

print("\n🎥 Latest Similar Movie:\n", result.get("latest_movie", "Not Available"))

print("\n🎭 Movie Genre:\n", result.get("movie_genre", "Not Available"))

print("\n🎭 Cast Details:\n", result.get("movie_cast", "Not Available"))

print("\n⭐ Movie Ratings:\n", result.get("movie_ratings", "Not Available"))

print("\n💰 Box Office Collection:\n", result.get("box_office", "Not Available"))

print("\n📝 Review Sentiment:\n", result.get("sentiment", "Not Available"))

print("\n📝 Review Summary:\n", result.get("review_summary", "Not Available"))
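In the dynamic example, several steps read the output of an earlier step: the genre, cast, ratings, and box office prompts need the recommended movie, and the review summary needs the sentiment result. Selecting one of these without its prerequisite would fail at run time. The sketch below checks a selection before the chain is built; `REQUIRES` and `missing_dependencies` are hypothetical names introduced for illustration.

```python
from typing import Dict, List, Set

# Which requested step needs which earlier step (derived from the input mappings above).
REQUIRES: Dict[str, Set[str]] = {
    "movie_genre": {"movie_recommendation"},
    "movie_cast": {"movie_recommendation"},
    "movie_ratings": {"movie_recommendation"},
    "box_office": {"movie_recommendation"},
    "review_summary": {"sentiment_analysis"},
}


def missing_dependencies(selected: List[str]) -> Dict[str, Set[str]]:
    """Map each selected step to the prerequisites that were not selected."""
    chosen = set(selected)
    return {
        name: REQUIRES[name] - chosen
        for name in selected
        if name in REQUIRES and REQUIRES[name] - chosen
    }


problems = missing_dependencies(["movie_genre", "sentiment_analysis", "review_summary"])
print(problems)
```

An empty dictionary means the selection is safe to pass to `construct_movie_chain`; otherwise the caller can report the missing steps or add them automatically.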

Code (Business Pitch):

# Prompts for idea generation, problem-solution, and presentation draft

idea_prompt = ChatPromptTemplate.from_messages([

("system", "Generate a business idea based on the user's input."),

("user", "{input}")

])

problem_solution_prompt = ChatPromptTemplate.from_messages([

("system", "Match the business idea to a specific problem and its solution."),

("user", "{idea}")

])

presentation_prompt = ChatPromptTemplate.from_messages([

("system", "Draft a presentation outline for the business idea."),

("user", "{problem_solution}")

])

# Create chains

idea_chain = idea_prompt | llm

problem_solution_chain = problem_solution_prompt | llm

presentation_chain = presentation_prompt | llm

# MultiChain setup with LCEL piping; SequentialChain has no map_inputs/map_outputs arguments

from langchain_core.output_parsers import StrOutputParser

from langchain_core.runnables import RunnablePassthrough

to_text = StrOutputParser()  # converts each model reply to a plain string

multichain = (

    RunnablePassthrough.assign(idea=idea_chain | to_text)  # reads {input}

    | RunnablePassthrough.assign(problem_solution=problem_solution_chain | to_text)  # reads {idea}

    | RunnablePassthrough.assign(presentation=presentation_chain | to_text)  # reads {problem_solution}

)

# Execute MultiChain

query = "Eco-friendly products for urban living."

result = multichain.invoke({"input": query})

# Output

print("Presentation Outline:\n", result["presentation"])

Output:

Presentation Outline:

1. Introduction to eco-friendly urban products.

2. The problem: Unsustainable living in cities.

3. The solution: Affordable, eco-friendly daily-use products.

4. Target market and potential growth.

5. Implementation plan and marketing strategy.

Track the vehicle location and suggest the top 10 places to visit

available_chains.update({

# 1️⃣ Find Bike Rental Shops

"bike_rental_suggestions": ChatPromptTemplate.from_messages([

("system", "Find the best bike rental shops in the given location."),

("user", "Location: {location}")

]),

# 2️⃣ Place Suggestions

"place_recommendation": ChatPromptTemplate.from_messages([

("system", "Suggest top places to visit based on the rental location."),

("user", "Rental Location: {rental_location}")

]),

# 3️⃣ Route Suggestions

"route_suggestion": ChatPromptTemplate.from_messages([

("system", "Suggest the best route to visit selected places."),

("user", "Starting Point: {rental_location}, Destinations: {places}")

]),

# 4️⃣ Weather & Climate Conditions

"weather_suggestion": ChatPromptTemplate.from_messages([

("system", "Provide real-time weather and climate details for the given route."),

("user", "Route: {route}, Locations: {places}")

])

})

from operator import itemgetter

from langchain_core.output_parsers import StrOutputParser

from langchain_core.runnables import RunnableParallel, RunnablePassthrough

# (request name, output key, {prompt variable: state key}), listed in dependency order

TRAVEL_STEPS = [

    # 1️⃣ Find Bike Rental Shops

    ("bike_rental_suggestions", "rental_location", {"location": "user_location"}),

    # 2️⃣ Suggest Places to Visit

    ("place_recommendation", "recommended_places", {"rental_location": "rental_location"}),

    # 3️⃣ Suggest Routes

    ("route_suggestion", "suggested_route", {"rental_location": "rental_location", "places": "recommended_places"}),

    # 4️⃣ Weather & Climate Conditions

    ("weather_suggestion", "weather_info", {"route": "suggested_route", "places": "recommended_places"}),

]

def construct_travel_chain(user_selected_chains, llm):

    # LCEL piping is used here; SequentialChain has no map_inputs/map_outputs arguments

    travel_chain = RunnablePassthrough()  # starts with {"user_location": ...}

    for name, output_key, mapping in TRAVEL_STEPS:

        if name not in user_selected_chains:

            continue

        # Pull the mapped state keys into the variables this prompt expects

        select = RunnableParallel({var: itemgetter(key) for var, key in mapping.items()})

        step = select | available_chains[name] | llm | StrOutputParser()

        # Each selected step adds its result to the running state under output_key

        travel_chain = travel_chain | RunnablePassthrough.assign(**{output_key: step})

    return travel_chain

from flask import Flask, jsonify, request

app = Flask(__name__)

@app.route("/travel_recommendation", methods=["POST"])

def travel_recommendation():

    try:

        # silent=True returns None instead of raising on a missing or invalid JSON body

        data = request.get_json(silent=True) or {}

        user_location = data.get("location", "")

        selected_chains = data.get("selected_chains", [])

        if not user_location or not selected_chains:

            return jsonify({"error": "Missing user location or selected chains"}), 400

        llm = None  # Replace with your LLM instance

        travel_multichain = construct_travel_chain(selected_chains, llm)

        result = travel_multichain.invoke({"user_location": user_location})

        # Steps that were not selected are reported as "Not Available"

        response = {

            "rental_location": result.get("rental_location", "Not Available"),

            "recommended_places": result.get("recommended_places", "Not Available"),

            "suggested_route": result.get("suggested_route", "Not Available"),

            "weather_info": result.get("weather_info", "Not Available")

        }

        return jsonify(response)

    except Exception as e:

        return jsonify({"error": str(e)}), 500
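The endpoint returns a 400 response when the location or the selected chains are missing, but an unknown chain name would pass through unnoticed. A possible way to tighten this, sketched as a pure function so it can be tested without Flask, is shown below. `ALLOWED_CHAINS` and `validate_payload` are hypothetical names, and the extra error messages are assumptions rather than part of the original endpoint.

```python
from typing import Any, Dict, List, Optional, Tuple

# Chain names accepted by the travel endpoint (the four prompts registered above).
ALLOWED_CHAINS = {
    "bike_rental_suggestions",
    "place_recommendation",
    "route_suggestion",
    "weather_suggestion",
}


def validate_payload(
    data: Optional[Dict[str, Any]],
) -> Tuple[Optional[str], List[str], Optional[str]]:
    """Return (location, selected_chains, error); error is None for a usable payload."""
    if not isinstance(data, dict):
        return None, [], "Request body must be a JSON object"
    location = data.get("location", "")
    selected = data.get("selected_chains", [])
    if not location or not selected:
        return None, [], "Missing user location or selected chains"
    unknown = [name for name in selected if name not in ALLOWED_CHAINS]
    if unknown:
        return None, [], f"Unknown chains: {', '.join(unknown)}"
    return location, list(selected), None


location, chains, error = validate_payload(
    {"location": "Goa", "selected_chains": ["route_suggestion"]}
)
print(location, chains, error)
```

Inside the route, a non-`None` error would be returned with status 400 before any chain is constructed.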
