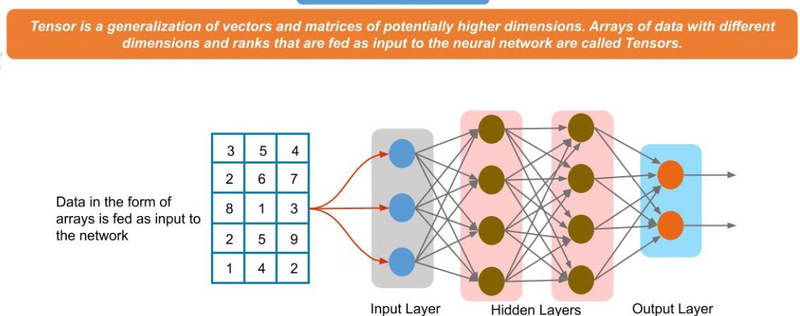

Tensors are used in deep learning and neural networks for the following key reasons:

Data Storage

Deep learning models process large and complex data such as images, text, and audio. All of this input data is stored and represented as tensors. The multi-dimensional array format handles scalars (single values), vectors, matrices, and higher-dimensional data in a uniform way.

Weights and Biases Representation

The learnable parameters of neural networks—weights and biases—are stored as tensors. This uniform structure makes mathematical operations and gradient calculations seamless during training.
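As a rough illustration of parameters stored as tensors, the sketch below builds the weights and biases of a single fully connected layer in NumPy. The layer sizes, the variable names, and the random initialisation are assumptions made for this example only.

```python
import numpy as np

rng = np.random.default_rng(0)  # seeded so the sketch is reproducible

# Hypothetical layer sizes, chosen only for illustration.
n_inputs, n_outputs = 4, 3

weights = rng.normal(size=(n_inputs, n_outputs))  # 2D tensor of learnable weights
biases = np.zeros(n_outputs)                      # 1D tensor of learnable biases

x = rng.normal(size=(2, n_inputs))  # a batch of 2 input vectors

# Forward computation of the layer: one matrix multiply plus a broadcast add.
y = x @ weights + biases

print(weights.shape, biases.shape, y.shape)  # (4, 3) (3,) (2, 3)
```

Because the inputs, weights, and biases are all arrays, the whole layer reduces to a single expression.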

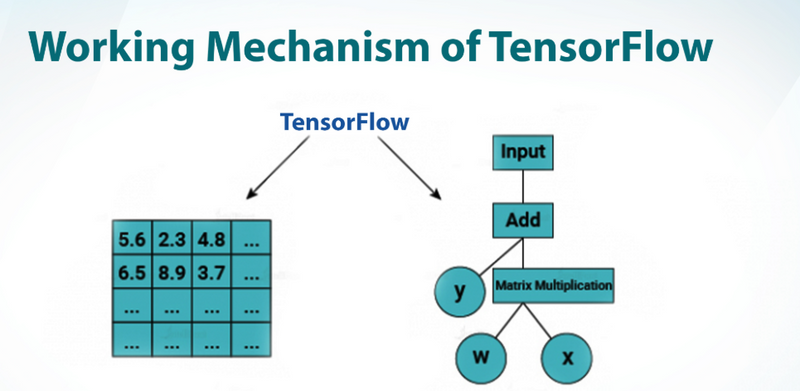

Matrix Operations

Neural networks rely heavily on operations such as matrix multiplications, dot products, and broadcasting. All these operations are efficiently implemented on tensors, enabling fast and parallel computation.
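The three operations named above can be tried on small arrays. This is a minimal NumPy sketch with made-up values, not a performance benchmark.

```python
import numpy as np

a = np.array([[1.0, 2.0],
              [3.0, 4.0]])
b = np.array([[5.0, 6.0],
              [7.0, 8.0]])
v = np.array([1.0, -1.0])

matmul = a @ b       # matrix multiplication: rows [19, 22] and [43, 50]
dot = np.dot(v, v)   # dot product of two vectors: 2.0
shifted = a + v      # broadcasting: v is added to every row of a

print(matmul)
print(dot)
print(shifted)       # rows [2, 1] and [4, 3]
```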

Training Process

During the forward pass, tensors flow through the network's layers, carrying the input data and its transformed features. During the backward pass, tensors hold the gradients of the loss with respect to each parameter, which gradient descent then uses to update the weights.
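The forward and backward passes can be sketched for the simplest possible model, a linear map trained with mean squared error. The toy data, the learning rate, and the number of steps are assumptions for this example, and the gradient is written out by hand rather than computed by a deep learning framework.

```python
import numpy as np

# Toy data: 4 samples with 2 features each; targets follow y = 2*x0 - 1*x1.
x = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, 1.0],
              [2.0, 1.0]])
y_true = x @ np.array([2.0, -1.0])

w = np.zeros(2)       # parameter tensor, initialised to zero
learning_rate = 0.1   # hypothetical value for this sketch

for step in range(500):
    y_pred = x @ w                        # forward pass
    error = y_pred - y_true
    loss = np.mean(error ** 2)            # scalar (0D) loss
    grad_w = 2.0 * x.T @ error / len(x)   # backward pass: gradient of the loss w.r.t. w
    w -= learning_rate * grad_w           # gradient descent update

print(grad_w.shape == w.shape)  # True: the gradient has the same shape as the parameter
print(np.round(w, 3))           # approximately 2 and -1
```

The gradient tensor always has the same shape as the parameter it belongs to, which is what makes the update a single element-wise operation.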

In summary, tensors provide the multi-dimensional data structure essential for representing and manipulating all types of data and parameters in neural networks, enabling the complex computations behind deep learning to be both mathematically rigorous and computationally efficient.

Tensors are classified by their number of dimensions (axes). The common cases shown in the screenshots, with an example of each, are:

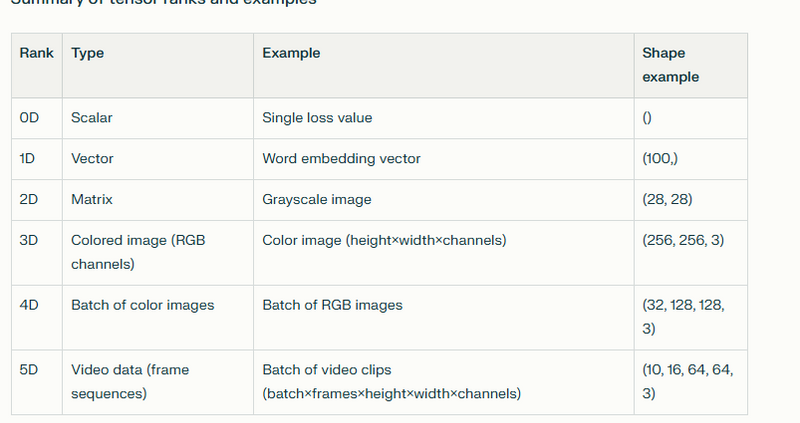

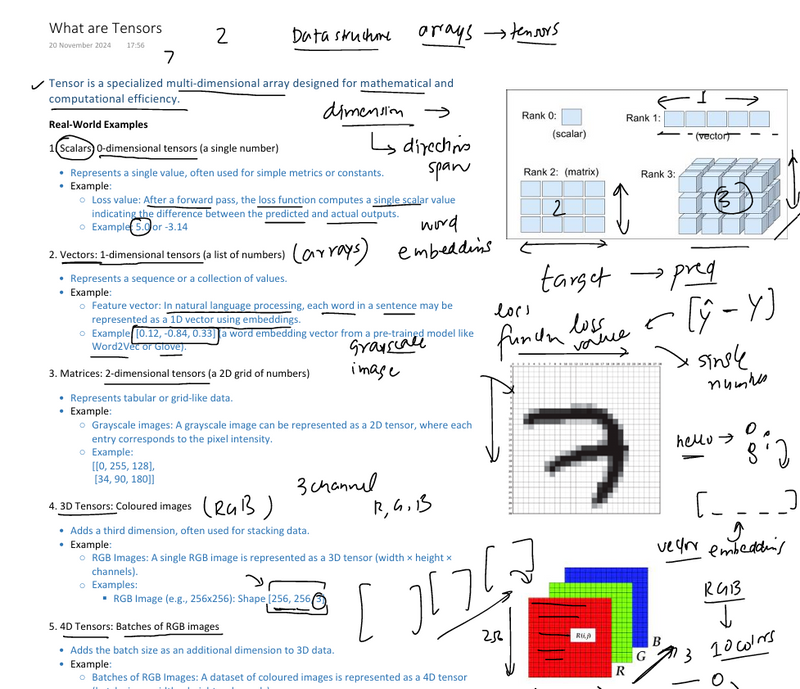

0-dimensional tensor (Scalar)

A single number, representing a single value.

Example: A loss value in ML such as 5.0 or -3.14.

It has no axes or directions.

Used for simple metrics or constants.

```python
import numpy as np

scalar = np.array(7)  # 0-dimensional array wrapping a single value
print(scalar.shape)   # Output: ()
```

Shape: () (no dimensions).


1-dimensional tensor (Vector)

A list or sequence of numbers.

Example: Word embeddings like [0.12, -0.84, 0.33] representing a word in natural language processing.

Has one axis indicating length or count of elements.

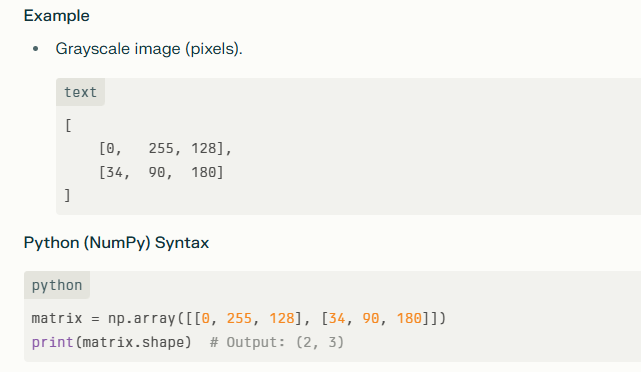

2-dimensional tensor (Matrix)

A grid or table of numbers with rows and columns.

Example: A grayscale image where each entry is a pixel intensity, e.g., [, ].

Two axes: height and width (rows and columns).

Example

Word embedding: [0.12, -0.84, 0.33]

Feature vector in NLP, sensor readings, etc.

vector = np.array([0.12, -0.84, 0.33])

print(vector.shape) # Output: (3,)

Explanation

Shape: (N,) (where N is the number of elements).

Used to represent a sequence, such as each word in a sentence (word embeddings).

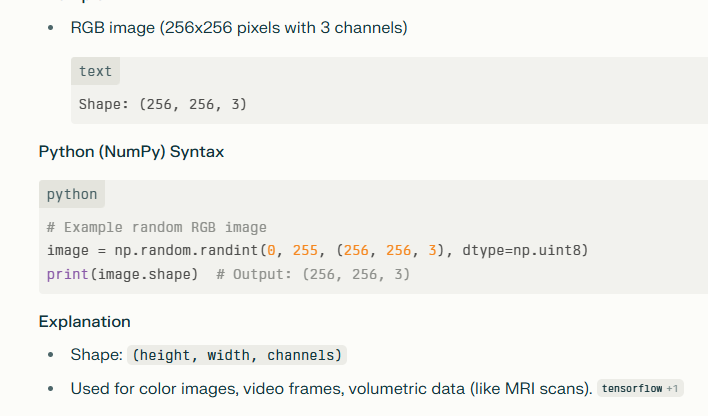
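For the 2-dimensional case, a tiny grayscale image can be sketched in NumPy. The pixel values below are invented for illustration and do not come from the original post.

```python
import numpy as np

# Hypothetical 2x3 grayscale image; values are made-up pixel intensities (0-255).
image = np.array([[0, 128, 255],
                  [64, 192, 32]], dtype=np.uint8)

print(image.ndim)   # 2
print(image.shape)  # (2, 3): 2 rows (height), 3 columns (width)
print(image[1, 2])  # 32: the pixel in row 1, column 2
```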

3-dimensional tensor

Adds a third axis to a 2D matrix, often for depth or channels.

Example: An RGB color image represented as height × width × 3 channels (red, green, blue).

For example, a 256×256-pixel color image has shape (256, 256, 3).
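The 256×256 color image mentioned above could be created as follows. This is a minimal sketch that fills the image with a single color instead of loading a real file.

```python
import numpy as np

# An all-black 256x256 RGB image: height x width x channels.
rgb_image = np.zeros((256, 256, 3), dtype=np.uint8)

rgb_image[..., 0] = 255  # set the red channel everywhere, making the image pure red

print(rgb_image.ndim)    # 3
print(rgb_image.shape)   # (256, 256, 3)
print(rgb_image[0, 0])   # red, green, blue values of the top-left pixel: 255, 0, 0
```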

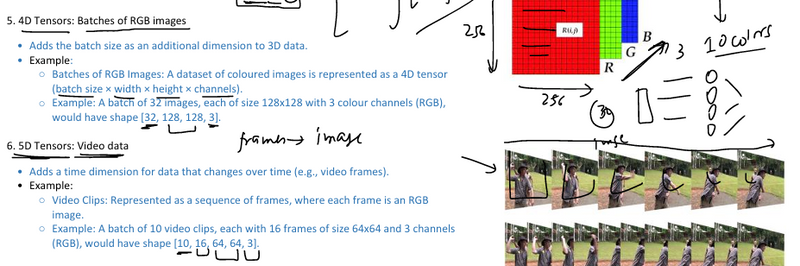

4-dimensional tensor

Adds a batch dimension on top of 3D image tensors.

Example: A batch of RGB images; shape: batch_size × height × width × channels.

Enables handling multiple images simultaneously in training or inference.
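One way to build such a batch is to stack individual image arrays along a new leading axis. The batch size and image size here are assumptions picked for the example.

```python
import numpy as np

# Hypothetical batch of 32 RGB images, each 64x64 pixels.
images = [np.zeros((64, 64, 3), dtype=np.uint8) for _ in range(32)]

batch = np.stack(images, axis=0)  # the new leading axis is the batch dimension

print(batch.ndim)      # 4
print(batch.shape)     # (32, 64, 64, 3)
print(batch[0].shape)  # (64, 64, 3): the first image in the batch
```

This follows the batch_size × height × width × channels order used above. Some frameworks expect the channel axis before height and width, so the order is worth checking for the library in use.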

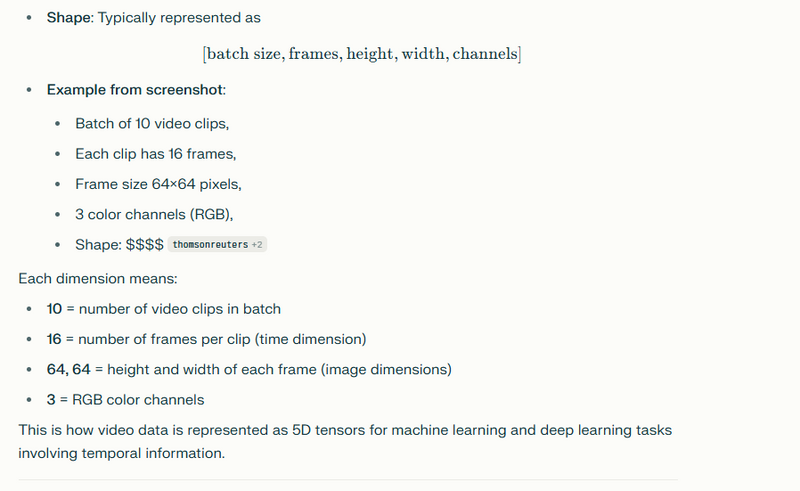

5-dimensional tensor

A 5-dimensional (5D) tensor typically holds video data, or other image data that changes over time. It adds a time (frame) dimension to a 4D batch of RGB images.

Example: A batch of video clips, where each clip is a sequence of frames and each frame is an RGB image; for example, shape: batch_size × frames × height × width × channels.
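A batch of video clips can be sketched the same way as a batch of images, with one extra axis for the frames. The sizes and the axis order (batch, frames, height, width, channels) are assumptions for this example.

```python
import numpy as np

# Hypothetical sizes: 8 clips, 16 frames per clip, 64x64 RGB frames.
batch_size, n_frames, height, width, channels = 8, 16, 64, 64, 3

videos = np.zeros((batch_size, n_frames, height, width, channels), dtype=np.uint8)

print(videos.ndim)         # 5
print(videos.shape)        # (8, 16, 64, 64, 3)
print(videos[0].shape)     # (16, 64, 64, 3): one clip
print(videos[0, 0].shape)  # (64, 64, 3): one RGB frame
```

Indexing away the leading axes one at a time recovers the lower-dimensional tensors described earlier: a clip is 4D and a single frame is 3D.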
