What is Flattening

When is Flattening Needed?

Why is Flattening Important in Deep Learning

How Does Flattening Work?

How to Perform Flattening in Code (TensorFlow/Keras Example):

Why Flattening is Necessary in CNN Architectures:

What is Flattening

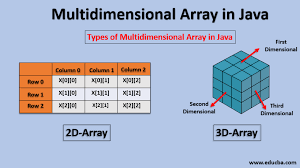

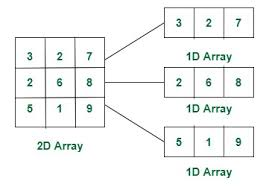

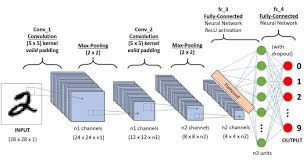

Flattening is the process of converting a multi-dimensional array (e.g., a 3D feature map) into a 1D vector. For a batch of samples, the result is a 2D array with one flat vector per sample. In the context of deep learning, this usually means converting the multi-dimensional feature maps (or images) from a convolutional layer into a single long vector. Flattening is often used in convolutional neural networks (CNNs) before passing data to fully connected (dense) layers.
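As a small illustration of the definition, here is a minimal NumPy sketch. The array values and the (2, 2, 3) shape are made up for the example; the point is only that the elements keep their row-major order when the axes are collapsed.

```python
import numpy as np

# A made-up "feature map" for one sample: height=2, width=2, channels=3
sample = np.arange(12).reshape(2, 2, 3)

# Collapse all axes into one; NumPy reads the elements in row-major (C) order
flat = sample.reshape(-1)

print(sample.shape)   # (2, 2, 3)
print(flat.shape)     # (12,)
print(flat.tolist())  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11]
```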

When is Flattening Needed?

Flattening is necessary when transitioning between layers that work with multi-dimensional data (such as convolutional layers) to layers that work with 1D data, such as fully connected (dense) layers.

For example:

- Convolutional Neural Networks (CNNs) work with 3D data per image (height, width, and channels).

- After convolutional and pooling layers, the data for each image is still in 3D form.

- Before feeding this data into a fully connected layer, which expects a 2D array of shape (batch_size, features), we need to flatten each 3D output into a 1D vector.

Why is Flattening Important in Deep Learning

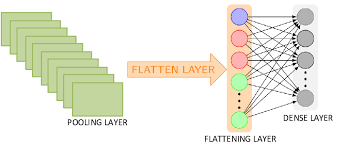

In deep learning models, especially CNNs, the convolutional and pooling layers extract spatial features from images. However, after feature extraction, you need to classify or perform a prediction based on those features.

Convolutional Layers: These layers maintain spatial structure (height × width × depth), which is great for feature extraction.

Fully Connected Layers (Dense Layers): These layers are often used to perform classification or regression, and they expect 2D inputs, where each sample is represented as a flat vector of features.

Therefore, flattening is crucial because:

Transition from feature extraction to classification: The convolutional layers output feature maps, which need to be flattened before being fed into the fully connected layers for classification.

Linear Layers Require 1D Inputs: Fully connected layers treat each sample as a flat 1D vector of features, with one weight per input feature for every neuron, so a 2D or 3D feature map must be flattened before this transition.
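To make the second point concrete, a dense layer can be sketched as a matrix multiplication in NumPy. This is an illustration under assumed sizes (a batch of 4 samples, 5 × 5 × 8 feature maps, 10 output units), not how Keras implements the layer internally.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 4 samples, each a 5x5 feature map with 8 channels
features = rng.random((4, 5, 5, 8))

# A dense layer with 10 units holds one weight per (input feature, unit) pair
num_inputs = 5 * 5 * 8                      # 200 features per sample
weights = rng.random((num_inputs, 10))
bias = np.zeros(10)

# Flatten each sample while keeping the batch axis, then apply the layer
flat = features.reshape(features.shape[0], -1)   # shape (4, 200)
outputs = flat @ weights + bias                  # shape (4, 10)

print(flat.shape, outputs.shape)
```

The weight matrix has exactly one row per flattened feature, which is why the spatial axes have to be collapsed before the multiplication.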

How Does Flattening Work?

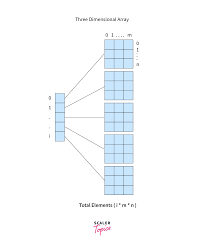

Let’s say you have an output from a CNN layer that has the shape (batch_size, height, width, channels):

Batch size: Number of samples.

Height and Width: The dimensions of each feature map.

Channels: The number of feature maps (filters).

Example:

Suppose after some convolutional and pooling layers, you have an output of shape (32, 8, 8, 16).

32: Number of images in a batch (batch size).

8, 8: Dimensions of each feature map.

16: Number of channels (feature maps) generated by the last convolutional layer.

To flatten this output, you lay out all the values from every feature map of a sample in one long vector. Each sample contains 8 * 8 * 16 = 1024 values, so the feature maps of each image become a single vector with 1024 elements.

After flattening, the shape becomes (32, 1024), where each sample in the batch is represented by a 1024-dimensional feature vector.
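The same example can be checked with NumPy. This is a sketch using placeholder data (consecutive integers rather than real CNN activations); it also shows where one value from a feature map ends up in the flat vector, assuming NumPy's default row-major layout.

```python
import numpy as np

# Placeholder data with the shape from the example: (batch, height, width, channels)
batch = np.arange(32 * 8 * 8 * 16).reshape(32, 8, 8, 16)

# Keep the batch axis and collapse the remaining three axes
flattened = batch.reshape(batch.shape[0], -1)
print(flattened.shape)  # (32, 1024)

# The value at (row h, column w, channel c) lands at index h*8*16 + w*16 + c
h, w, c = 3, 5, 7
flat_index = h * 8 * 16 + w * 16 + c
print(flattened[0, flat_index] == batch[0, h, w, c])  # True
```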

Note

Remember that in

model.fit(X_train_flattened, y_train, epochs=5)

X_train_flattened must already be flattened to 2 dimensions, (num_samples, num_features), while y_train holds the labels and needs no flattening.

If the first layer of your network is a fully connected (dense) layer, you must flatten each input image (e.g., (28, 28) into 784) before passing it to the network.

Flattening using TensorFlow/Keras

When working with deep learning frameworks like Keras, you can easily flatten an array using the Flatten() layer. This is typically used between convolutional layers and fully connected (dense) layers.

Example: Flattening in a Convolutional Neural Network (CNN)

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, Dense

import numpy as np

# Create a sample model with convolutional and fully connected layers

model = Sequential([

Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)),

MaxPooling2D(pool_size=(2, 2)),

Conv2D(64, (3, 3), activation='relu'),

MaxPooling2D(pool_size=(2, 2)),

# Flatten layer to convert 3D output to 2D

Flatten(),

# Fully connected layer

Dense(128, activation='relu'),

Dense(10, activation='softmax') # Output layer for classification

])

# Check model summary to see how the flattening layer transforms the output shape

model.summary()

# Dummy input: 60,000 grayscale images of 28x28 pixels

X_train = np.random.rand(60000, 28, 28, 1)

# Predict using the model (Flatten() runs inside the model, so no manual reshaping is needed)

output = model.predict(X_train)

print("Model output shape:", output.shape)  # (60000, 10): one score per class

In the code above, Flatten() automatically flattens the 3D output from the convolutional layers into a 2D array, so it can be fed into the dense layers.

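Note that the Flatten() layer in this model does not receive the raw images: after the two convolution and pooling stages, each image is a 5 × 5 × 64 feature map, so Flatten() outputs 5 * 5 * 64 = 1600 features per image. If Flatten() were instead applied directly to X_train with shape (60000, 28, 28, 1), each image would be reduced to 28 * 28 * 1 = 784 features, resulting in a shape of (60000, 784).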
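The number of features that Flatten() produces in the model above can be traced by hand. The sketch below assumes the Keras defaults used in that model (no padding and stride 1 for Conv2D, stride equal to the pool size for MaxPooling2D); the two helper functions are hypothetical names introduced only for this illustration.

```python
def conv_output_size(size, kernel):
    """Output size of an unpadded ('valid') convolution with stride 1."""
    return size - kernel + 1


def pool_output_size(size, pool):
    """Output size of unpadded pooling whose stride equals the pool size."""
    return size // pool


size = 28                          # input images are 28x28
size = conv_output_size(size, 3)   # Conv2D(32, (3, 3))  -> 26
size = pool_output_size(size, 2)   # MaxPooling2D(2, 2)  -> 13
size = conv_output_size(size, 3)   # Conv2D(64, (3, 3))  -> 11
size = pool_output_size(size, 2)   # MaxPooling2D(2, 2)  -> 5

channels = 64                      # filters in the last Conv2D layer
flattened_features = size * size * channels

print(size, flattened_features)    # 5 1600
```

The same numbers should appear in the output of model.summary(), where the Flatten layer is listed with an output shape of (None, 1600).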

Flatten the Input (Keep the Dense Layer):

from tensorflow import keras

model = keras.Sequential([

keras.layers.Flatten(input_shape=(28, 28)), # Flatten 28x28 into 784

keras.layers.Dense(10, activation='softmax') # softmax suits the categorical cross-entropy loss below

])

model.compile(optimizer='adam',

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])

model.fit(X_train, y_train, epochs=5) # No need to flatten manually

Use Convolutional Layers: If you want to feed the images to the model without flattening them first, you can use convolutional layers, which are better suited for image data. Flatten() then sits between the convolutional layers and the dense layer. Here's how you can modify your model:

model = keras.Sequential([

keras.layers.Conv2D(32, (3, 3), input_shape=(28, 28, 1), activation='relu'),

keras.layers.MaxPooling2D(pool_size=(2, 2)),

keras.layers.Flatten(),

keras.layers.Dense(10, activation='softmax')

])

model.compile(optimizer='adam',

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])

model.fit(X_train, y_train, epochs=5) # Ensure X_train has shape (num_samples, 28, 28, 1)

Flattening using NumPy

You can manually flatten a 3D array using NumPy's reshape() method.

Example: Flattening a 3D Array using NumPy

import numpy as np

# Dummy data: a 3D array of shape (60000, 28, 28), representing a batch of 60,000 images

X_train = np.random.rand(60000, 28, 28)

# Flattening the 3D array into a 2D array

# Each 28x28 image is flattened into 784 pixels

X_train_flattened = X_train.reshape(60000, 28 * 28)

print("Original shape:", X_train.shape)

print("Flattened shape:", X_train_flattened.shape)

Explanation:

The reshape() function converts the 3D array of shape (60000, 28, 28) into a 2D array with shape (60000, 784). Each image is now represented as a vector of 784 elements.

This manually achieves the flattening process without deep learning frameworks.
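A variation on the example above avoids hard-coding the sizes by passing -1 to reshape(), which lets NumPy infer that dimension. This is a sketch with a smaller dummy batch (100 images, an arbitrary choice to keep memory use low); it also shows that flattening only rearranges the data and can be undone.

```python
import numpy as np

# Smaller dummy batch than the example above
images = np.random.rand(100, 28, 28)

# Keep the sample axis; -1 tells NumPy to infer the remaining size (784)
flattened = images.reshape(len(images), -1)

# Reshaping back restores the original images exactly
restored = flattened.reshape(-1, 28, 28)

print(flattened.shape)                   # (100, 784)
print(np.array_equal(images, restored))  # True
```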
