Tokenize Text into Sentences and Words

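nltk provides sent_tokenize() for splitting text into sentences and word_tokenize() for splitting it into words and punctuation marks. Both rely on the Punkt sentence model, which you need to download once with nltk.download("punkt") (recent nltk releases use "punkt_tab" instead).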

Code:

from nltk.tokenize import sent_tokenize, word_tokenize

sentences = sent_tokenize("Dr. Strange loves pav bhaji of mumbai. Hulk loves chat of delhi.")
words = word_tokenize("Dr. Strange loves pav bhaji of mumbai.")

print(sentences)
print(words)

Output:

['Dr. Strange loves pav bhaji of mumbai.', 'Hulk loves chat of delhi.']

['Dr.', 'Strange', 'loves', 'pav', 'bhaji', 'of', 'mumbai', '.']
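Notice that "Dr." is not treated as the end of a sentence. To see why that takes some care, here is a minimal plain-Python sketch (not how Punkt works internally): it ends a sentence after any token ending in ".", "!" or "?" unless the token is in a small hand-written abbreviation set, then detaches the final punctuation mark as its own word. ABBREVIATIONS is a hypothetical stand-in for the abbreviations Punkt learns from data.

```python
# Hypothetical hand-written abbreviation list; Punkt learns abbreviations from data instead.
ABBREVIATIONS = {"dr.", "mr.", "mrs.", "st."}


def naive_sent_split(text):
    """Split after tokens ending in . ! or ? unless the token is a known abbreviation."""
    sentences, current = [], []
    for token in text.split():
        current.append(token)
        if token[-1] in ".!?" and token.lower() not in ABBREVIATIONS:
            sentences.append(" ".join(current))
            current = []
    if current:  # keep trailing text that has no final punctuation
        sentences.append(" ".join(current))
    return sentences


def naive_word_split(sentence):
    """Split on whitespace and detach a sentence-final punctuation mark from the last word."""
    words = sentence.split()
    if words and len(words[-1]) > 1 and words[-1][-1] in ".!?":
        last = words.pop()
        words.extend([last[:-1], last[-1]])
    return words


text = "Dr. Strange loves pav bhaji of mumbai. Hulk loves chat of delhi."
print(naive_sent_split(text))
print(naive_word_split("Dr. Strange loves pav bhaji of mumbai."))
```

Removing "dr." from ABBREVIATIONS makes the sketch wrongly end a sentence after "Dr.", which is exactly the mistake a trained tokenizer avoids. Real text also contains quotes, brackets and contractions, which word_tokenize() handles and this sketch does not.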

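- Remove Stop Words

nltk ships a list of common stop words (such as "of", "the" and "is") that you can use to filter out words that carry little meaning on their own. Download the list once with nltk.download("stopwords").

Code: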

from nltk.corpus import stopwords
from nltk.tokenize import word_tokenize

stop_words = set(stopwords.words("english"))
words = word_tokenize("Dr. Strange loves pav bhaji of mumbai.")

# Compare in lowercase so that "Of" and "of" are both filtered out
filtered_words = [word for word in words if word.lower() not in stop_words]

print(filtered_words)

Output:

['Dr.', 'Strange', 'loves', 'pav', 'bhaji', 'mumbai', '.']
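The output above still contains the "." token, because punctuation is not in the stop-word list. A minimal sketch of a filter that also drops tokens made only of punctuation is shown below. It uses a tiny hypothetical STOP_WORDS set so that it runs without downloading nltk data; in practice you would pass in set(stopwords.words("english")).

```python
import string

# Tiny hypothetical stop-word list; nltk's English list is much longer.
STOP_WORDS = {"a", "an", "the", "of", "in", "on", "and", "is", "it"}


def content_words(tokens, stop_words=STOP_WORDS):
    """Keep tokens that are neither stop words (case-insensitive) nor pure punctuation."""
    return [
        tok for tok in tokens
        if tok.lower() not in stop_words
        and not all(ch in string.punctuation for ch in tok)
    ]


tokens = ['Dr.', 'Strange', 'loves', 'pav', 'bhaji', 'of', 'mumbai', '.']
print(content_words(tokens))  # ['Dr.', 'Strange', 'loves', 'pav', 'bhaji', 'mumbai']
```

"Dr." survives because it contains letters; only tokens consisting entirely of punctuation characters are dropped.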

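- Stemming Words

Stemming strips suffixes to reduce words to a common stem (e.g., "running" to "run"). The stem comes from rules, not a dictionary, so irregular forms such as "ran" are left unchanged and some words, such as "runner", keep their suffix.

Code: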

from nltk.stem import PorterStemmer

stemmer = PorterStemmer()
words = ["running", "runner", "ran"]
stems = [stemmer.stem(word) for word in words]

print(stems)

Output:

['run', 'runner', 'ran']
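The core idea of rule-based stemming fits in a few lines. This toy stemmer is not the Porter algorithm (Porter applies several ordered steps with conditions on the shape of the stem); it only strips a few hypothetical suffixes and undoes a doubled final consonant, but it reproduces the behavior above: "ran" has no suffix to strip, so it stays as it is.

```python
# Toy suffix rules, checked in order; NOT the full Porter algorithm.
SUFFIXES = ("ing", "ed", "s")


def toy_stem(word):
    """Strip the first matching suffix if at least three characters would remain."""
    for suffix in SUFFIXES:
        # Words ending in "ss" (e.g. "class") are not plurals, so leave them alone
        if suffix == "s" and word.endswith("ss"):
            continue
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            stem = word[: -len(suffix)]
            # Undo a doubled final consonant ("runn" -> "run"), but keep "ll", "ss", "zz" and vowels
            if len(stem) >= 2 and stem[-1] == stem[-2] and stem[-1] not in "aeioulsz":
                stem = stem[:-1]
            return stem
    return word


print([toy_stem(w) for w in ["running", "runner", "ran", "loves"]])  # ['run', 'runner', 'ran', 'love']
```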

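- Lemmatization

Lemmatization is similar to stemming, but instead of chopping off suffixes it looks words up in a dictionary (WordNet) and returns their dictionary forms (lemmas). WordNetLemmatizer does not infer the part of speech from context: you pass it with the pos argument ("v" for verb, "a" for adjective), and it defaults to noun. That is why "better" stays "better" below, where every word is treated as a verb. Download the data once with nltk.download("wordnet").

Code: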

from nltk.stem import WordNetLemmatizer

lemmatizer = WordNetLemmatizer()
words = ["running", "ran", "better"]

# pos="v" tells the lemmatizer to treat every word as a verb
lemmas = [lemmatizer.lemmatize(word, pos="v") for word in words]

print(lemmas)

Output:

['run', 'run', 'better']
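Dictionary-based lemmatization boils down to a lookup keyed by both the word and its part of speech, which is why the pos argument matters. Here is a minimal sketch with a small hypothetical LEMMA_TABLE standing in for WordNet, using the same noun default as WordNetLemmatizer.lemmatize():

```python
# Hypothetical lookup table keyed by (word, part of speech); WordNet plays this role in nltk.
LEMMA_TABLE = {
    ("running", "v"): "run",
    ("ran", "v"): "run",
    ("better", "a"): "good",
    ("geese", "n"): "goose",
}


def toy_lemmatize(word, pos="n"):
    """Return the lemma for (word, pos), or the word unchanged if the pair is unknown."""
    return LEMMA_TABLE.get((word.lower(), pos), word)


print(toy_lemmatize("better", pos="v"))  # better: no verb entry, so it is returned unchanged
print(toy_lemmatize("better", pos="a"))  # good: the adjective entry maps it to its base form
print(toy_lemmatize("geese"))            # goose: found with the default noun tag
```

With nltk itself, lemmatizer.lemmatize("better", pos="a") likewise returns "good".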

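- Part-of-Speech Tagging

nltk can tag each word with its part of speech using Penn Treebank tags such as NN (noun), NNP (proper noun), VBZ (verb, third-person singular present) and IN (preposition).

Code: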

from nltk import pos_tag
from nltk.tokenize import word_tokenize

words = word_tokenize("Dr. Strange loves pav bhaji of mumbai.")
pos_tags = pos_tag(words)

print(pos_tags)

Output:

[('Dr.', 'NNP'), ('Strange', 'NNP'), ('loves', 'VBZ'), ('pav', 'NN'), ('bhaji', 'NN'), ('of', 'IN'), ('mumbai', 'NN'), ('.', '.')]
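pos_tag() uses a pre-trained averaged perceptron tagger that also looks at surrounding words. A much simpler statistical approach, shown here only to illustrate how a tagger can be learned from tagged examples, is a unigram tagger: give each word the tag it carried most often in training and fall back to a default tag for unseen words. The training sentences are a tiny made-up sample using Penn Treebank tags.

```python
from collections import Counter, defaultdict


def train_unigram_tagger(tagged_sentences):
    """Map each (lowercased) word to the tag it carries most often in the training data."""
    counts = defaultdict(Counter)
    for sentence in tagged_sentences:
        for word, pos in sentence:
            counts[word.lower()][pos] += 1
    return {word: tag_counts.most_common(1)[0][0] for word, tag_counts in counts.items()}


def tag(tokens, model, default="NN"):
    """Tag known words from the model and fall back to a default tag for unseen words."""
    return [(tok, model.get(tok.lower(), default)) for tok in tokens]


# Tiny made-up training sample with Penn Treebank tags
training = [
    [("Hulk", "NNP"), ("loves", "VBZ"), ("chat", "NN"), ("of", "IN"), ("delhi", "NN"), (".", ".")],
    [("Strange", "NNP"), ("loves", "VBZ"), ("pav", "NN"), (".", ".")],
]
model = train_unigram_tagger(training)
print(tag(["Strange", "loves", "bhaji", "."], model))
```

"bhaji" never appears in the training data, so it receives the default NN tag. nltk provides a ready-made version of this idea as nltk.tag.UnigramTagger.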

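- Named Entity Recognition (NER)

NER identifies named entities such as people, organizations and places. nltk's ne_chunk() takes POS-tagged tokens and returns a tree in which each recognized entity is a subtree labeled with its type (PERSON, ORGANIZATION, GPE for geo-political entities, and so on).

Code: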

from nltk import ne_chunk, pos_tag
from nltk.tokenize import word_tokenize

pos_tags = pos_tag(word_tokenize("Dr. Strange loves pav bhaji of mumbai."))
named_entities = ne_chunk(pos_tags)

print(named_entities)

Output:

(S (PERSON Dr./NNP) (PERSON Strange/NNP) loves/VBZ pav/NN bhaji/NN of/IN mumbai/NN ./.)
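Entity chunks are often represented token by token with IOB labels: B- marks the first token of an entity, I- a token inside it, and O a token outside any entity (nltk's nltk.chunk.tree2conlltags() converts a chunk tree into this form). The sketch below goes the other way and groups IOB-labeled tokens back into entities. The labels in the example are hand-written for illustration, not produced by ne_chunk().

```python
def iob_to_chunks(tagged):
    """Group (token, IOB label) pairs into (entity_type, [tokens]) chunks.

    Labels look like 'B-PERSON' (begin), 'I-PERSON' (inside) or 'O' (outside).
    """
    chunks, current_type, current_tokens = [], None, []
    for token, label in tagged:
        # A new chunk starts at O, at any B- label, or at an I- label of a different type
        if label == "O" or label.startswith("B-") or label[2:] != current_type:
            if current_tokens:
                chunks.append((current_type, current_tokens))
            current_type, current_tokens = None, []
        if label != "O":
            current_type = label[2:]
            current_tokens.append(token)
    if current_tokens:  # flush an entity that runs to the end of the sentence
        chunks.append((current_type, current_tokens))
    return chunks


tagged = [("Dr.", "B-PERSON"), ("Strange", "I-PERSON"), ("loves", "O"), ("pav", "O"),
          ("bhaji", "O"), ("of", "O"), ("Mumbai", "B-GPE"), (".", "O")]
print(iob_to_chunks(tagged))  # [('PERSON', ['Dr.', 'Strange']), ('GPE', ['Mumbai'])]
```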

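- Synonyms and Antonyms using WordNet

WordNet groups words into sets of synonyms (synsets). Collecting the lemma names of every synset of a word gives its synonyms, and each lemma's antonyms() gives its opposites.

Code: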

from nltk.corpus import wordnet

synonyms = []
antonyms = []

for syn in wordnet.synsets("good"):
    for lemma in syn.lemmas():
        synonyms.append(lemma.name())
        # Only some lemmas have antonyms; take the first one if present
        if lemma.antonyms():
            antonyms.append(lemma.antonyms()[0].name())

print(set(synonyms))
print(set(antonyms))

Output:

{'good', 'dear', 'beneficial', 'right', ...}

{'evil', 'bad', 'ill', 'evilness', 'badness'}

- Frequency Distribution of Words

FreqDist counts how often each token occurs in a text; most_common(n) returns the n most frequent tokens with their counts.

Code:

from nltk.probability import FreqDist
from nltk.tokenize import word_tokenize

words = word_tokenize("Dr. Strange loves pav bhaji of mumbai. Hulk loves chat of delhi.")
freq_dist = FreqDist(words)

print(freq_dist.most_common(3))

Output:

[('loves', 2), ('of', 2), ('.', 2)]
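FreqDist is a subclass of Python's collections.Counter, so the same counts can be reproduced with the standard library alone. When several tokens share a count, most_common() lists them in the order they were first encountered, which is why "loves" comes before "of" and ".". The token list below is typed out by hand to match how word_tokenize() splits the two sentences.

```python
from collections import Counter

# Tokens typed out to match word_tokenize() on the two-sentence example
tokens = ["Dr.", "Strange", "loves", "pav", "bhaji", "of", "mumbai", ".",
          "Hulk", "loves", "chat", "of", "delhi", "."]

counts = Counter(tokens)
print(counts.most_common(3))  # [('loves', 2), ('of', 2), ('.', 2)]

# Relative frequency, the same idea as FreqDist.freq(): count / total number of tokens
print(counts["loves"] / sum(counts.values()))  # 2 / 14
```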

- Generating Bigrams and Trigrams

Bigrams are pairs of adjacent words and trigrams are triples of adjacent words.

Code:

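from nltk.tokenize import word_tokenize
from nltk.util import bigrams, trigrams

words = word_tokenize("Dr. Strange loves pav bhaji of mumbai.")

# bigrams() and trigrams() return generators, so wrap them in list()
bigrams_list = list(bigrams(words))
trigrams_list = list(trigrams(words))

print(bigrams_list)
print(trigrams_list)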

Output:

[('Dr.', 'Strange'), ('Strange', 'loves'), ...]

[('Dr.', 'Strange', 'loves'), ('Strange', 'loves', 'pav'), ...]
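bigrams() and trigrams() are special cases of sliding an n-token window over the sequence (nltk.util.ngrams(words, n) handles any n). A plain-Python sketch of the same idea zips together shifted copies of the list; a sequence of k tokens yields k - n + 1 n-grams.

```python
def ngrams(tokens, n):
    """Return every run of n consecutive tokens as a tuple."""
    # zip(tokens[0:], tokens[1:], ..., tokens[n-1:]) stops at the shortest slice
    return list(zip(*(tokens[i:] for i in range(n))))


words = ["Dr.", "Strange", "loves", "pav", "bhaji", "of", "mumbai", "."]
print(ngrams(words, 2)[:2])  # [('Dr.', 'Strange'), ('Strange', 'loves')]
print(ngrams(words, 3)[:2])  # [('Dr.', 'Strange', 'loves'), ('Strange', 'loves', 'pav')]
print(len(ngrams(words, 2)), len(ngrams(words, 3)))  # 7 6
```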

- Text Classification with Naive Bayes

Perform basic text classification with nltk's Naive Bayes classifier. Each training example is a pair of a feature dictionary and a label.

Code (basic example):

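from nltk.classify import NaiveBayesClassifier
from nltk.classify.util import accuracy

# Each example is (feature dictionary, label)
train_data = [({'word': 'love'}, 'positive'), ({'word': 'hate'}, 'negative')]

classifier = NaiveBayesClassifier.train(train_data)

print(classifier.classify({'word': 'love'}))  # Output: positive
print(accuracy(classifier, train_data))  # fraction classified correctly; use held-out data in practice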
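Under the hood, Naive Bayes picks the label that maximizes the prior probability of the label times the probability of each feature given that label, treating the features as independent. The sketch below implements a bag-of-words (multinomial) variant from scratch with add-one smoothing, working in log space to avoid numeric underflow. It is a different formulation from nltk's NaiveBayesClassifier, which works on feature dictionaries and uses its own smoothing estimator by default; the training sentences are made up for illustration.

```python
import math
from collections import Counter, defaultdict


def train_nb(docs):
    """docs: list of (words, label). Returns label priors, per-label word counts and the vocabulary."""
    label_counts = Counter(label for _, label in docs)
    word_counts = defaultdict(Counter)
    vocab = set()
    for words, label in docs:
        word_counts[label].update(words)
        vocab.update(words)
    priors = {label: count / len(docs) for label, count in label_counts.items()}
    return priors, word_counts, vocab


def classify_nb(words, priors, word_counts, vocab):
    """Return the label maximizing log P(label) + sum of log P(word | label)."""
    best_label, best_score = None, -math.inf
    for label, prior in priors.items():
        total = sum(word_counts[label].values())
        score = math.log(prior)
        for word in words:
            # Add-one (Laplace) smoothing keeps unseen words from zeroing the probability
            score += math.log((word_counts[label][word] + 1) / (total + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Made-up training data: (tokens, label)
train = [
    (["i", "love", "pav", "bhaji"], "positive"),
    (["love", "this", "chat"], "positive"),
    (["i", "hate", "cold", "food"], "negative"),
    (["hate", "this", "place"], "negative"),
]
model = train_nb(train)
print(classify_nb(["love", "chat"], *model))  # positive
print(classify_nb(["hate", "food"], *model))  # negative
```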

- Parse and Visualize Syntax Trees

Define a context-free grammar (CFG) and use a chart parser to find every parse tree the grammar allows for a sentence.

Code:

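from nltk import CFG, ChartParser

# NP covers the two-word noun phrases, so the rules can span the whole sentence
grammar = CFG.fromstring("""
S -> NP VP
VP -> V NP
NP -> 'Dr.' 'Strange' | 'pav' 'bhaji'
V -> 'loves'
""")

parser = ChartParser(grammar)

for tree in parser.parse("Dr. Strange loves pav bhaji".split()):
    print(tree)
    tree.draw()  # opens a Tkinter window; remove this line in a headless environment

Output:

(S (NP Dr. Strange) (VP (V loves) (NP pav bhaji)))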

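- Collocation Extraction

Collocations are word pairs that occur together more often than chance would predict (e.g., "pav bhaji"). BigramCollocationFinder scores every adjacent pair with an association measure, and nbest() returns the top-scoring pairs.

Code: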

from nltk.collocations import BigramCollocationFinder
from nltk.metrics import BigramAssocMeasures
from nltk.tokenize import word_tokenize

words = word_tokenize("Dr. Strange loves pav bhaji of mumbai. Hulk loves chat of delhi.")
bigram_finder = BigramCollocationFinder.from_words(words)

# Rank pairs with the likelihood-ratio test and keep the top 3
top_bigrams = bigram_finder.nbest(BigramAssocMeasures.likelihood_ratio, 3)

print(top_bigrams)

Output:

[('Dr.', 'Strange'), ('pav', 'bhaji'), ('loves', 'chat')]
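Association measures compare how often a pair occurs with how often its parts occur separately. The sketch below computes pointwise mutual information, PMI(x, y) = log2(P(x, y) / (P(x) P(y))), by hand. PMI is a different measure from the likelihood ratio used above (nltk exposes it as BigramAssocMeasures.pmi), and it strongly favors pairs of rare words, which is why nltk's finder offers apply_freq_filter() to drop infrequent pairs first.

```python
import math
from collections import Counter


def pmi_bigrams(tokens, top_n=3):
    """Rank adjacent word pairs by PMI(x, y) = log2(P(x, y) / (P(x) * P(y)))."""
    word_counts = Counter(tokens)
    pair_counts = Counter(zip(tokens, tokens[1:]))
    n_words = len(tokens)
    n_pairs = len(tokens) - 1
    scores = {}
    for (x, y), count in pair_counts.items():
        p_xy = count / n_pairs
        p_x = word_counts[x] / n_words
        p_y = word_counts[y] / n_words
        scores[(x, y)] = math.log2(p_xy / (p_x * p_y))
    # sorted() is stable, so pairs with equal scores stay in first-seen order
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


tokens = ["Dr.", "Strange", "loves", "pav", "bhaji", "of", "mumbai", ".",
          "Hulk", "loves", "chat", "of", "delhi", "."]
print(pmi_bigrams(tokens))
```

In this tiny text every pair occurs once, so the highest scores go to pairs whose words also occur only once ("Dr. Strange", "pav bhaji"), illustrating the rare-word bias.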

How to extract information using the spaCy library

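import spacy

# Load a trained English pipeline (install it first: python -m spacy download en_core_web_sm).
# spacy.blank("en") only tokenizes, so POS tags, lemmas, dependencies and entities would be empty.
nlp = spacy.load("en_core_web_sm")

# Sample text
doc = nlp("Dr. Strange loves pav bhaji of Mumbai as it costs only 2$ per plate.")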

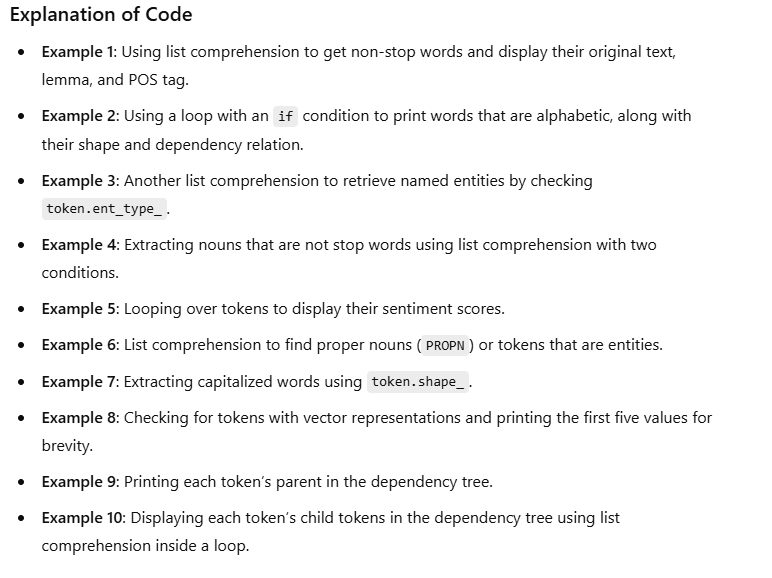

# Example 1: Extract non-stop words with their lemmas and part-of-speech tags
non_stop_words = [(token.text, token.lemma_, token.pos_) for token in doc if not token.is_stop]
print("Non-stop words with their lemmas and POS tags:")
print(non_stop_words)

# Example 2: For each purely alphabetic token, print its shape and dependency label
print("\nAlphabetic words with their shape and dependency:")
for token in doc:
    if token.is_alpha:
        print(f"Word: {token.text}, Shape: {token.shape_}, Dependency: {token.dep_}")

# Example 3: List the tokens that belong to a named entity, with the entity type
entities = [(token.text, token.ent_type_) for token in doc if token.ent_type_]
print("\nNamed entities with their entity type:")
print(entities)

# Example 4: Get tokens that are nouns and not stop words
nouns = [token.text for token in doc if token.pos_ == "NOUN" and not token.is_stop]
print("\nNouns that are not stop words:")
print(nouns)

# Example 5: Display tokens with their sentiment scores
# (typically 0.0: the standard pipelines do not set token.sentiment)
print("\nTokens with their sentiment scores:")
for token in doc:
    print(f"Token: {token.text}, Sentiment: {token.sentiment}")

# Example 6: Identify tokens that are either proper nouns or part of a named entity
proper_nouns_or_entities = [token.text for token in doc if token.pos_ == "PROPN" or token.ent_type_]
print("\nProper nouns or named entities:")
print(proper_nouns_or_entities)

# Example 7: Retrieve tokens that start with a capital letter
# (checking the first character also catches "Dr.", whose shape is "Xx.", not "Xxxxx")
capitalized_words = [token.text for token in doc if token.text[:1].isupper()]
print("\nTokens that start with a capital letter:")
print(capitalized_words)

# Example 8: Print tokens and their vectors (word embeddings) if they have one
# (the small "sm" pipelines ship no static word vectors; en_core_web_md and en_core_web_lg do)
print("\nTokens with their vectors:")
for token in doc:
    if token.has_vector:
        print(f"Token: {token.text}, Vector: {token.vector[:5]}...")  # first 5 values for brevity

# Example 9: List the head (parent) token of each token in the dependency tree
print("\nParent tokens for each token:")
for token in doc:
    print(f"Token: {token.text}, Parent: {token.head.text}")

# Example 10: Identify tokens with their child tokens in the dependency tree
print("\nTokens with their child tokens:")
for token in doc:
    child_tokens = [child.text for child in token.children]
    print(f"Token: {token.text}, Children: {child_tokens}")

Output:

Non-stop words with their lemmas and POS tags:

[('Dr.', 'doctor', 'PROPN'), ('Strange', 'strange', 'PROPN'), ('loves', 'love', 'VERB'), ('pav', 'pav', 'NOUN'), ('bhaji', 'bhaji', 'NOUN'), ('Mumbai', 'mumbai', 'PROPN'), ('costs', 'cost', 'VERB'), ('2$', '2$', 'NUM'), ('plate', 'plate', 'NOUN')]

Alphabetic words with their shape and dependency:

Word: Strange, Shape: Xxxxx, Dependency: nsubj

...

Named entities with their entity type:

[('Dr.', 'PERSON'), ('Mumbai', 'GPE')]

Nouns that are not stop words:

['pav', 'bhaji', 'plate']

Tokens with their sentiment scores:

Token: Dr., Sentiment: 0.0

Token: Strange, Sentiment: 0.0

...

Proper nouns or named entities:

['Dr.', 'Strange', 'Mumbai']

Tokens that start with a capital letter:

['Dr.', 'Strange', 'Mumbai']

Tokens with their vectors:

Token: Dr., Vector: [0.1, -0.2, ..., 0.3]...

Parent tokens for each token:

Token: Dr., Parent: loves

Token: Strange, Parent: loves

...

Tokens with their child tokens:

Token: Dr., Children: []

Token: Strange, Children: ['Dr.']
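spaCy's shape_ attribute replaces uppercase letters with X, lowercase letters with x and digits with d, keeps other characters, and truncates runs of the same symbol after four. The sketch below approximates that rule in plain Python (word_shape is a hypothetical helper, not spaCy's implementation). It shows why "Dr." has the shape "Xx." and why comparing shape_ with the single pattern "Xxxxx" only matches capitalized alphabetic words of five or more letters.

```python
def word_shape(text, max_run=4):
    """Approximate a spaCy-style word shape: X/x for upper/lowercase letters, d for digits,
    other characters kept as-is, and runs of the same symbol capped at max_run."""
    shape = []
    last, run = None, 0
    for ch in text:
        if ch.isalpha():
            sym = "X" if ch.isupper() else "x"
        elif ch.isdigit():
            sym = "d"
        else:
            sym = ch
        run = run + 1 if sym == last else 1
        last = sym
        if run <= max_run:  # drop symbols beyond the run limit
            shape.append(sym)
    return "".join(shape)


for word in ["Dr.", "Strange", "Mumbai", "Hulk", "2024"]:
    print(word, word_shape(word))
```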
