About Keras mnist datasets

How to perform Scaling

Meaning of X_train: (60000, 28, 28)

3 advantage of sacaling

How Scaling provides faster convergence and uniformity while traing model

Keras mnist.load_data

This loads the MNIST dataset, which contains 70,000 grayscale images of handwritten digits (0-9), where each image is a 28x28 pixel image.

This is one of the most popular datasets for image classification, especially for benchmarking machine learning models on digit recognition tasks

Data format:

Images (X_train, X_test):

Each image is represented as a 2D array (28x28 pixels) with integer values (0 to 255) representing pixel intensity (grayscale).

X_train: Contains 60,000 training images.

X_test: Contains 10,000 test images

.

Labels (y_train, y_test):

These represent the digit corresponding to each image (0-9).

y_train: Contains 60,000 labels for training.

y_test: Contains 10,000 labels for testing.

Shape:

X_train: (60000, 28, 28) → 60,000 images of size 28x28 pixels.

y_train: (60000,) → 60,000 labels (digits 0-9).

X_test: (10000, 28, 28) → 10,000 images of size 28x28 pixels.

y_test: (10000,) → 10,000 labels.

Key characteristics:

The data is returned as NumPy arrays, and the images are structured in a 3D array (samples x height x width).

This is a raw image dataset, suitable for image classification tasks.

You usually need to preprocess the images (e.g., flatten them into vectors if used with traditional machine learning models or normalize pixel values between 0 and 1).

How to perform Scaling

X_train = X_train / 255

X_test = X_test / 255

This is a common normalization step when dealing with image data, particularly in neural networks.

Explanation:

The MNIST dataset consists of grayscale images where pixel values range from 0 to 255. Each pixel value represents the intensity of the color:

0 represents black

255 represents white

Any value in between represents different shades of gray.

Dividing by 255 scales the pixel values to the range [0, 1]

, which is beneficial for several reasons:

Normalization: Neural networks generally perform better when the input data is within a smaller range (e.g., [0, 1]). This ensures that the magnitude of the data is kept small, which can help prevent issues like vanishing/exploding gradients.

Faster convergence: Scaling the data can help the model train faster and achieve better performance. It reduces the burden on the optimizer to adjust weights for large value differences.

Uniformity: This also ensures that all features (in this case, pixel values) are on a comparable scale, which helps the model learn effectively.

In short, this step is used to standardize the input image data for better and more efficient training of machine learning models.

import tensorflow as tf

from tensorflow import keras

import matplotlib.pyplot as plt

%matplotlib inline

import numpy as np

(X_train, y_train) , (X_test, y_test) = keras.datasets.mnist.load_data()

len(X_train)=>60000

len(X_test)=>10000

#X_train.shape =>(60000, 28, 28)

X_train[0].shape=>(28, 28)

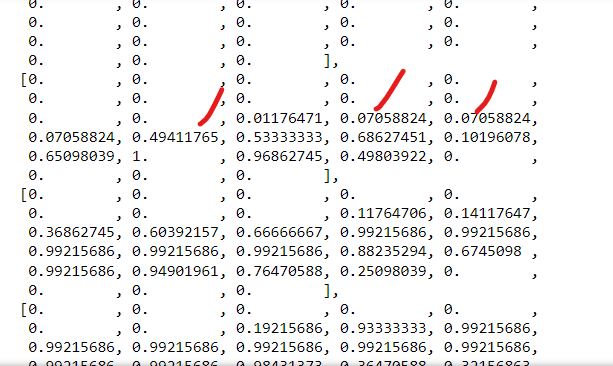

X_train[0]

Before scaling lots of value above 1 in below output

After scaling all value range between 0 to 1 in below output

X_train = X_train / 255

X_test = X_test / 255

Top comments (0)