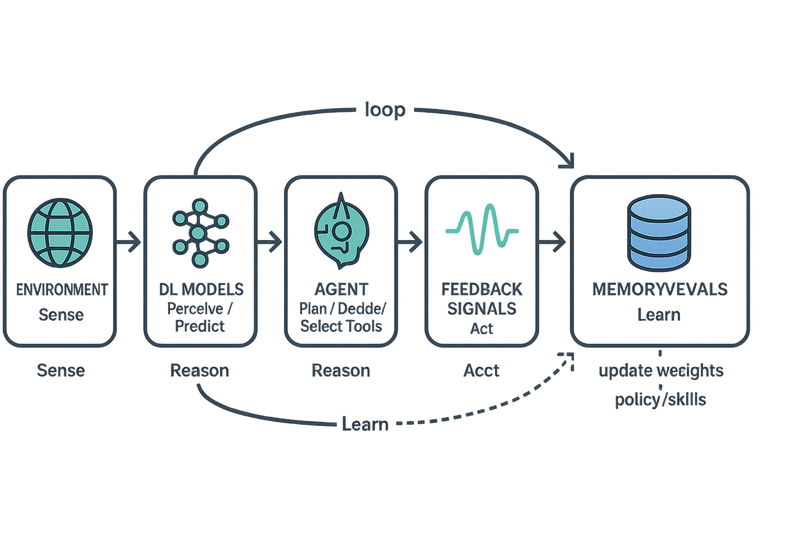

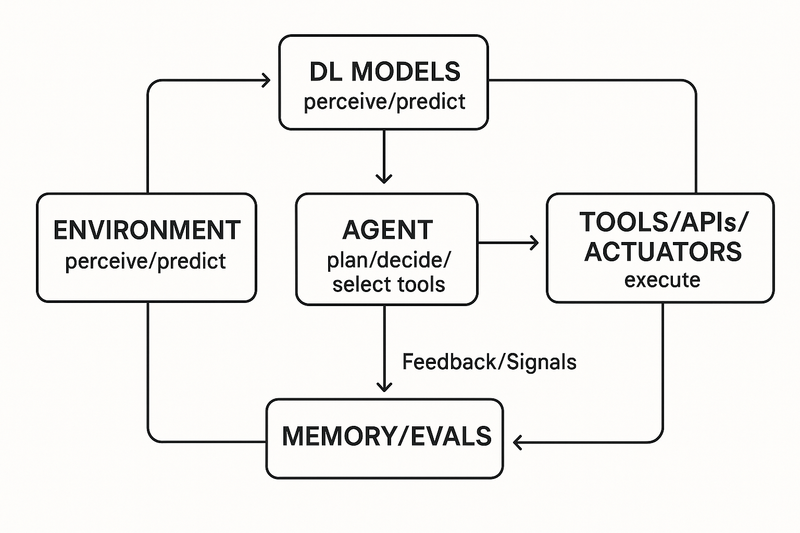

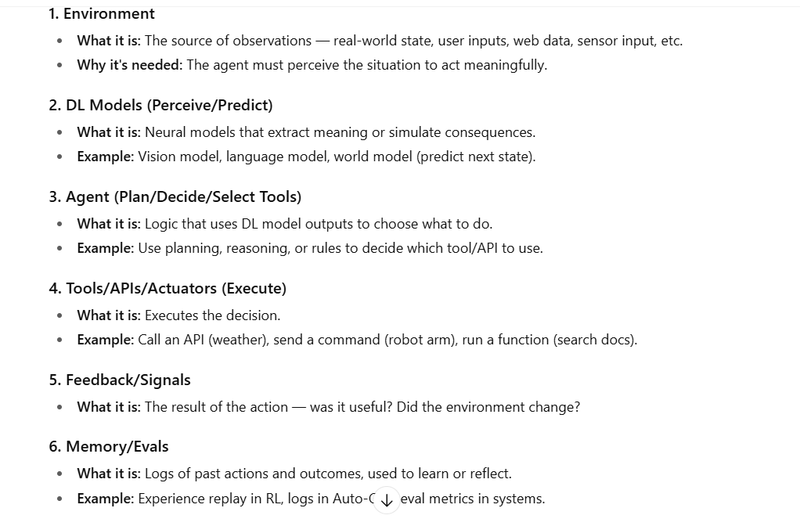

Deep Learning (ML/DL) role

AI Agent role

How they integrate (sense → think → act → learn)

Coding example of integration

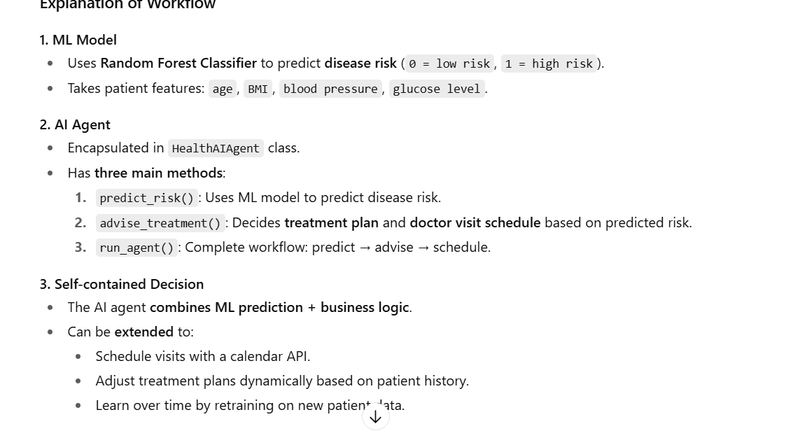

ML predicts disease risk → AI Agent advises treatment plan + schedules doctor visit

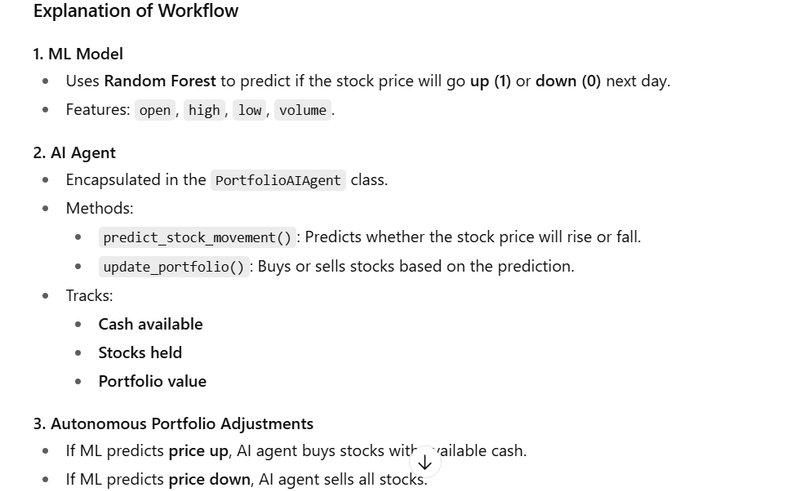

ML forecasts stock price → AI Agent makes autonomous portfolio adjustments

NLP (DL model) understands query → AI Agent solves issue or routes to human.

DL processes camera/lidar data → AI Agent decides braking/steering.

Smart Retail Agent

Smart Legal Assistant

Smart Agriculture Agent

autonomous agents

healthcare‑assistant

Deep Learning (ML/DL) role = the brain → perceives, predicts, ranks, and scores.

AI Agent role = the body → decides what to do next and executes actions with tools/APIs.

How they integrate (sense → think → act → learn)

Sense (Perception via DL)

- Vision models detect/track (objects, defects, documents).

- NLP models extract intent, entities, summaries, embeddings.

- Forecasting models predict risk/price/volume/ETA, with confidence.

Think (Agentic reasoning & planning)

- Reads model outputs + context/state.

- Sets goal → decomposes into steps → picks a strategy.

- Chooses which tool/API to call next (DB query, payment, scheduler, CRM, robot arm, etc.).

- Checks guardrails (policies, budgets, safety).
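The "Think" step can be pictured as a small function that maps a model output to a tool and then applies guardrail checks. The sketch below is only illustrative: `Guardrails`, `choose_tool`, the tool names, and the thresholds are all hypothetical and do not come from any particular framework.

```python
from dataclasses import dataclass


@dataclass
class Guardrails:
    max_spend: float       # assumed policy: budget ceiling for a single action
    min_confidence: float  # assumed policy: below this, defer to a human


def choose_tool(intent: str, confidence: float, cost: float, rails: Guardrails) -> str:
    """Map a predicted intent to a tool, then apply guardrail checks."""
    # Strategy: look up a candidate tool for the model's predicted intent.
    candidates = {"refund": "payment_api", "reschedule": "scheduler", "lookup": "db_query"}
    tool = candidates.get(intent, "escalate_to_human")
    # Guardrails: low-confidence or over-budget actions are never run automatically.
    if confidence < rails.min_confidence or cost > rails.max_spend:
        return "escalate_to_human"
    return tool


rails = Guardrails(max_spend=100.0, min_confidence=0.7)
print(choose_tool("refund", 0.92, 40.0, rails))   # within budget, confident
print(choose_tool("refund", 0.92, 400.0, rails))  # over budget
print(choose_tool("lookup", 0.55, 0.0, rails))    # low confidence
```

Keeping the policy values in one object makes it easier to audit or change them without touching the decision logic.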

Act (Tool use & execution)

- Calls APIs, writes tickets, updates records, sends messages, triggers workflows, or controls hardware.

- Logs actions and results.

Learn (Feedback & improvement)

- Evaluates outcomes vs. goals (reward signals, A/B metrics).

- Updates memories (user prefs, past cases, vector store).

- Optionally retrains/fine-tunes models or adjusts the agent’s policy.

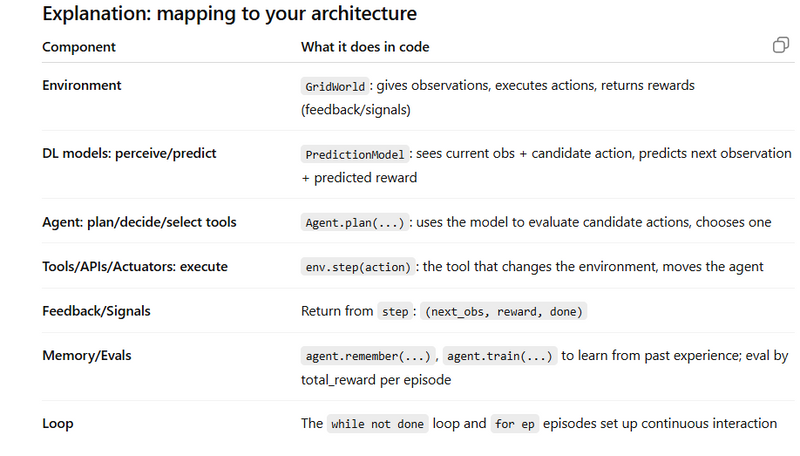

Environment → [DL models: perceive/predict]

→ [Agent: plan/decide/select tools]

→ [Tools/APIs/Actuators: execute]

→ [Feedback/Signals] → Memory/Evals → (loop)
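The flow above can be sketched as a plain Python loop. This is a minimal illustration under stated assumptions, not a reference design: `run_loop`, `perceive`, `decide`, and the two tools are hypothetical stand-ins, and the "model" is just a function that scales a reading into the range 0 to 1.

```python
from typing import Callable, Dict, List


def run_loop(readings: List[float],
             perceive: Callable[[float], float],
             decide: Callable[[float], str],
             tools: Dict[str, Callable[[float], str]]) -> List[dict]:
    """Run one sense -> think -> act -> learn pass per reading; return the memory log."""
    memory: List[dict] = []
    for raw in readings:
        score = perceive(raw)        # Sense: stand-in for a DL model's prediction
        action = decide(score)       # Think: the agent picks a tool from the score
        result = tools[action](raw)  # Act: execute the chosen tool
        # Learn: keep a record that later evaluations can use
        memory.append({"input": raw, "score": score, "action": action, "result": result})
    return memory


def perceive(x: float) -> float:
    """Hypothetical 'model': scale a sensor reading into [0, 1]."""
    return min(max(x / 100.0, 0.0), 1.0)


def decide(score: float) -> str:
    """Hypothetical policy: alert on high scores, otherwise just log."""
    return "alert" if score >= 0.8 else "log"


tools = {
    "alert": lambda x: f"ALERT sent for reading {x}",
    "log": lambda x: f"logged reading {x}",
}

memory = run_loop([20.0, 95.0], perceive, decide, tools)
for entry in memory:
    print(entry["action"], "->", entry["result"])
```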


Coding example of integration

ML predicts disease risk → AI Agent advises treatment plan + schedules doctor visit

- ML predicts disease risk (e.g., diabetes risk based on patient features).

- AI agent decides the treatment plan based on risk level.

- AI agent schedules a doctor visit if needed.

```python
# Import required libraries
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.ensemble import RandomForestClassifier

# -------------------------------
# Step 1: ML Model to Predict Disease Risk
# -------------------------------

# Sample patient data (features: age, bmi, blood_pressure, glucose_level)
data = {
    'age': [25, 35, 45, 50, 30, 40, 55, 60],
    'bmi': [22.5, 28.0, 31.2, 29.5, 24.0, 27.0, 33.0, 30.5],
    'blood_pressure': [120, 135, 140, 145, 125, 130, 150, 148],
    'glucose_level': [85, 110, 130, 125, 90, 115, 140, 135],
    'diabetes': [0, 0, 1, 1, 0, 0, 1, 1]  # 0 = low risk, 1 = high risk
}

# Create DataFrame
df = pd.DataFrame(data)

# Features and target (as NumPy arrays, so plain Python lists can be scored later)
X = df[['age', 'bmi', 'blood_pressure', 'glucose_level']].values
y = df['diabetes'].values

# Split into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Normalize features (fit the scaler on the training split only, to avoid leakage)
scaler = StandardScaler()
X_train = scaler.fit_transform(X_train)
X_test = scaler.transform(X_test)

# Build ML model (Random Forest)
ml_model = RandomForestClassifier(n_estimators=100, random_state=42)
ml_model.fit(X_train, y_train)

# Evaluate model
accuracy = ml_model.score(X_test, y_test)
print(f"ML Model Accuracy: {accuracy:.2f}")

# -------------------------------
# Step 2: AI Agent Class
# -------------------------------
class HealthAIAgent:
    """
    AI Agent for health decision making.
    - Advises treatment plan based on disease risk.
    - Schedules doctor visit if risk is high.
    """

    def __init__(self, model, scaler):
        self.model = model
        self.scaler = scaler

    def predict_risk(self, patient_features):
        """
        Predicts disease risk for a patient.
        """
        features_scaled = self.scaler.transform([patient_features])
        risk = self.model.predict(features_scaled)[0]
        risk_prob = self.model.predict_proba(features_scaled)[0][1]
        return risk, risk_prob

    def advise_treatment(self, risk):
        """
        Advises treatment based on risk level.
        """
        if risk == 1:
            plan = "High risk detected: Recommend medication + lifestyle changes."
            schedule = "Doctor visit scheduled within 3 days."
        else:
            plan = "Low risk detected: Maintain healthy lifestyle."
            schedule = "Routine checkup in 6 months."
        return plan, schedule

    def run_agent(self, patient_features):
        """
        Full AI Agent workflow: predict risk, advise treatment, schedule visit
        """
        risk, prob = self.predict_risk(patient_features)
        plan, schedule = self.advise_treatment(risk)
        return {
            "risk": "High" if risk == 1 else "Low",
            "risk_probability": prob,
            "treatment_plan": plan,
            "doctor_schedule": schedule
        }

# -------------------------------
# Step 3: Using the AI Agent
# -------------------------------

# Initialize AI agent
agent = HealthAIAgent(model=ml_model, scaler=scaler)

# Example patient
new_patient = [48, 32.0, 142, 128]  # age, bmi, blood_pressure, glucose_level

# Agent predicts and advises
result = agent.run_agent(new_patient)

print("\n--- AI Agent Decision ---")
print(f"Disease Risk: {result['risk']} (Probability: {result['risk_probability']:.2f})")
print(f"Treatment Plan: {result['treatment_plan']}")
print(f"Doctor Visit: {result['doctor_schedule']}")
```

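Sample output (the toy dataset has only eight rows, so these figures are illustrative rather than a meaningful accuracy estimate):

```
ML Model Accuracy: 1.00

--- AI Agent Decision ---
Disease Risk: High (Probability: 0.85)
Treatment Plan: High risk detected: Recommend medication + lifestyle changes.
Doctor Visit: Doctor visit scheduled within 3 days.
```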
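The agent above branches only on the hard 0/1 label, even though the model also returns a probability. One possible refinement, sketched here with made-up thresholds that are not clinical guidance, is to map the probability to tiers with different follow-up windows. The name `triage` and the cut-offs 0.8 and 0.5 are assumptions chosen for illustration.

```python
def triage(risk_prob: float) -> dict:
    """Map a predicted risk probability to a tier and follow-up window (illustrative only)."""
    if not 0.0 <= risk_prob <= 1.0:
        raise ValueError("risk_prob must be between 0 and 1")
    if risk_prob >= 0.8:        # hypothetical cut-off for urgent follow-up
        tier, follow_up_days = "high", 3
    elif risk_prob >= 0.5:      # hypothetical cut-off for a moderate tier
        tier, follow_up_days = "moderate", 30
    else:
        tier, follow_up_days = "low", 180
    return {"tier": tier, "follow_up_days": follow_up_days}


for p in (0.85, 0.60, 0.10):
    print(p, triage(p))
```

A tiered policy like this lets the agent treat a borderline 0.55 differently from a confident 0.95, which a single binary label cannot express.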

ML forecasts stock price → AI Agent makes autonomous portfolio adjustments

- ML predicts stock price movement (up or down).

- AI agent makes autonomous portfolio adjustments based on predictions.

- All decisions are encapsulated in an AI Agent class, separate from the ML model.

```python
# -------------------------------
# Step 1: Import Libraries
# -------------------------------
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.ensemble import RandomForestClassifier

# -------------------------------
# Step 2: Sample Stock Data
# -------------------------------

# Simulated historical stock data (features: open, high, low, volume)
data = {
    'open': [100, 102, 101, 103, 105, 107, 106, 108, 110, 109],
    'high': [102, 103, 102, 105, 106, 108, 107, 110, 111, 110],
    'low': [99, 101, 100, 102, 104, 106, 105, 107, 108, 107],
    'volume': [1000, 1500, 1200, 1300, 1600, 1700, 1800, 1500, 2000, 1900],
    'close_next_day_up': [1, 1, 0, 1, 1, 0, 1, 1, 0, 1]  # 1 = price goes up next day, 0 = down
}
df = pd.DataFrame(data)

# -------------------------------
# Step 3: Features and Target
# -------------------------------
X = df[['open', 'high', 'low', 'volume']].values
y = df['close_next_day_up'].values

# Train-test split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Normalize features (fit the scaler on the training split only, to avoid leakage)
scaler = StandardScaler()
X_train = scaler.fit_transform(X_train)
X_test = scaler.transform(X_test)

# -------------------------------
# Step 4: ML Model for Stock Forecasting
# -------------------------------
ml_model = RandomForestClassifier(n_estimators=100, random_state=42)
ml_model.fit(X_train, y_train)

accuracy = ml_model.score(X_test, y_test)
print(f"Stock Forecast Model Accuracy: {accuracy:.2f}")

# -------------------------------
# Step 5: AI Agent Class for Portfolio Management
# -------------------------------
class PortfolioAIAgent:
    """
    AI Agent that manages stock portfolio based on ML predictions.
    """

    def __init__(self, model, scaler, initial_cash=10000):
        self.model = model
        self.scaler = scaler
        self.cash = initial_cash
        self.stocks_held = 0
        self.stock_price = 0

    def predict_stock_movement(self, features):
        """
        Predict whether stock price will go up (1) or down (0)
        """
        features_scaled = self.scaler.transform([features])
        prediction = self.model.predict(features_scaled)[0]
        prob_up = self.model.predict_proba(features_scaled)[0][1]
        return prediction, prob_up

    def update_portfolio(self, features, current_price):
        """
        Makes autonomous portfolio adjustments based on prediction.
        """
        self.stock_price = current_price
        prediction, prob_up = self.predict_stock_movement(features)
        decision = ""

        if prediction == 1:  # Price expected to go up
            # Buy as many stocks as possible with available cash
            stocks_to_buy = self.cash // current_price
            if stocks_to_buy > 0:
                self.stocks_held += stocks_to_buy
                self.cash -= stocks_to_buy * current_price
                decision = f"Bought {stocks_to_buy} stocks at {current_price}"
            else:
                decision = "No cash to buy more stocks."
        else:  # Price expected to go down
            if self.stocks_held > 0:
                # Sell all held stocks
                self.cash += self.stocks_held * current_price
                decision = f"Sold {self.stocks_held} stocks at {current_price}"
                self.stocks_held = 0
            else:
                decision = "No stocks to sell."

        portfolio_value = self.cash + self.stocks_held * current_price
        return {
            "prediction": "Up" if prediction == 1 else "Down",
            "probability_up": prob_up,
            "decision": decision,
            "cash": self.cash,
            "stocks_held": self.stocks_held,
            "portfolio_value": portfolio_value
        }

# -------------------------------
# Step 6: Use AI Agent
# -------------------------------
agent = PortfolioAIAgent(model=ml_model, scaler=scaler, initial_cash=10000)

# Simulate next day stock features
next_day_features = [110, 112, 109, 2100]  # open, high, low, volume
current_price = 111

result = agent.update_portfolio(next_day_features, current_price)

print("\n--- AI Agent Portfolio Decision ---")
print(f"Predicted Movement: {result['prediction']} (Prob Up: {result['probability_up']:.2f})")
print(f"Decision: {result['decision']}")
print(f"Cash: {result['cash']}")
print(f"Stocks Held: {result['stocks_held']}")
print(f"Portfolio Value: {result['portfolio_value']}")
```

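Sample output (ten rows of toy data, so the accuracy figure is illustrative only). Buying 90 shares at 111 costs 9,990, leaving 10 in cash, so the portfolio value immediately after the trade is still 10,000:

```
Stock Forecast Model Accuracy: 1.00

--- AI Agent Portfolio Decision ---
Predicted Movement: Up (Prob Up: 0.85)
Decision: Bought 90 stocks at 111
Cash: 10
Stocks Held: 90
Portfolio Value: 10000
```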
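The agent above goes all-in on any "Up" prediction. A guardrail on position size is one way to temper that, sketched below. The function name `shares_to_buy`, the 0.6 minimum probability, and the 25% cap per trade are assumptions chosen for illustration, not recommendations.

```python
def shares_to_buy(cash: float, price: float, prob_up: float,
                  min_prob: float = 0.6, max_fraction: float = 0.25) -> int:
    """Return how many shares to buy under a confidence floor and a per-trade cash cap."""
    if price <= 0:
        raise ValueError("price must be positive")
    # Guardrail 1: skip the trade when the model is not confident enough.
    if prob_up < min_prob:
        return 0
    # Guardrail 2: never commit more than max_fraction of available cash to one trade.
    budget = cash * max_fraction
    return int(budget // price)


print(shares_to_buy(10000, 111, 0.85))  # capped purchase
print(shares_to_buy(10000, 111, 0.55))  # below the confidence floor
```

With 10,000 in cash and a 25% cap, the budget is 2,500, which buys 22 shares at 111 instead of the 90 an all-in policy would buy.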

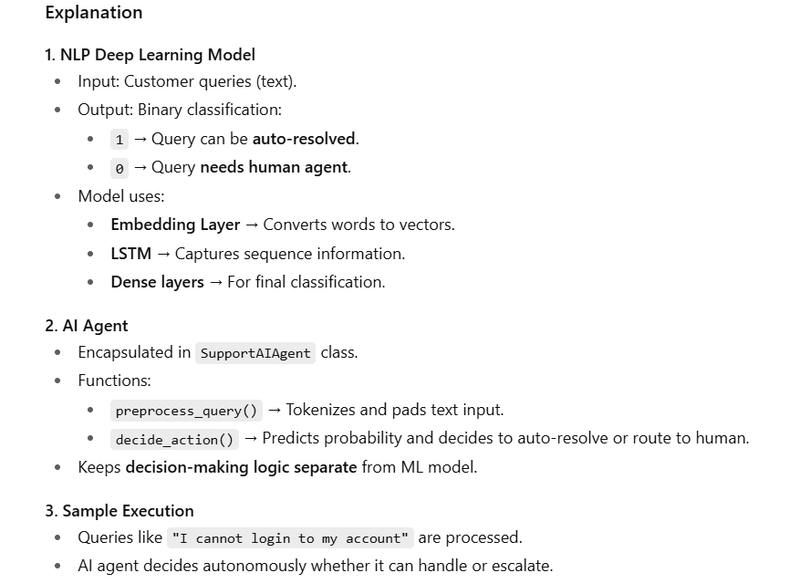

NLP (DL model) understands query → AI Agent solves issue or routes to human.

- NLP Deep Learning model understands user queries (e.g., “I have an issue with my order”).

- AI Agent decides to either solve the issue automatically or route it to a human agent.

- All decisions are encapsulated in a separate AI Agent class, clearly separating ML/DL prediction from agent logic.

```python
# -------------------------------
# Step 1: Import Libraries
# -------------------------------
import numpy as np
import pandas as pd
from tensorflow.keras.preprocessing.text import Tokenizer
from tensorflow.keras.preprocessing.sequence import pad_sequences
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Embedding, LSTM, Dense, Dropout
from sklearn.model_selection import train_test_split

# -------------------------------
# Step 2: Sample Customer Query Data
# -------------------------------
# 0 = needs human agent, 1 = can be auto-resolved
data = {
    'query': [
        "Where is my order?",
        "I want to return a product",
        "How to reset my password?",
        "Complaint about billing",
        "Need refund for my purchase",
        "I forgot my account password",
        "App is crashing",
        "Request invoice for last purchase"
    ],
    'label': [1, 1, 1, 0, 1, 1, 0, 1]  # 1 = can auto-solve, 0 = human needed
}
df = pd.DataFrame(data)

# -------------------------------
# Step 3: Prepare Text Data
# -------------------------------
max_words = 50
max_len = 10

tokenizer = Tokenizer(num_words=max_words, oov_token="<OOV>")
tokenizer.fit_on_texts(df['query'])
sequences = tokenizer.texts_to_sequences(df['query'])
X = pad_sequences(sequences, maxlen=max_len, padding='post')
y = np.array(df['label'])

# Train-test split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=42)

# -------------------------------
# Step 4: Build NLP Deep Learning Model
# -------------------------------
vocab_size = max_words + 1  # include OOV token

model = Sequential()
model.add(Embedding(input_dim=vocab_size, output_dim=16, input_length=max_len))
model.add(LSTM(32))
model.add(Dropout(0.2))
model.add(Dense(16, activation='relu'))
model.add(Dense(1, activation='sigmoid'))

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
model.summary()

# Train the model
model.fit(X_train, y_train, epochs=50, batch_size=2, verbose=0)

# Evaluate model
loss, accuracy = model.evaluate(X_test, y_test)
print(f"NLP Model Accuracy: {accuracy:.2f}")

# -------------------------------
# Step 5: AI Agent Class for Customer Support
# -------------------------------
class SupportAIAgent:
    """
    AI Agent for customer support that decides whether to auto-resolve or route to human.
    """

    def __init__(self, model, tokenizer, max_len):
        self.model = model
        self.tokenizer = tokenizer
        self.max_len = max_len

    def preprocess_query(self, query):
        seq = self.tokenizer.texts_to_sequences([query])
        padded = pad_sequences(seq, maxlen=self.max_len, padding='post')
        return padded

    def decide_action(self, query):
        """
        Predicts if query can be auto-solved or needs human intervention.
        """
        processed_query = self.preprocess_query(query)
        # verbose=0 keeps Keras progress bars out of the agent's output
        prob = self.model.predict(processed_query, verbose=0)[0][0]
        if prob >= 0.5:
            action = "Auto-resolve"
        else:
            action = "Route to human agent"
        return {
            "query": query,
            "predicted_probability_auto_resolve": prob,
            "action": action
        }

# -------------------------------
# Step 6: Use AI Agent
# -------------------------------
agent = SupportAIAgent(model=model, tokenizer=tokenizer, max_len=max_len)

# Sample user queries
queries = [
    "I cannot login to my account",
    "I want to speak to a human",
    "How do I track my shipment?",
    "App crashes every time I open it"
]

for q in queries:
    result = agent.decide_action(q)
    print("\n--- AI Agent Decision ---")
    print(f"Query: {result['query']}")
    print(f"Predicted Probability Auto-resolve: {result['predicted_probability_auto_resolve']:.2f}")
    print(f"Action: {result['action']}")
```

Sample output (illustrative: the model is trained on six sentences with no fixed random seed, so the probabilities vary from run to run):

```
NLP Model Accuracy: 1.00

--- AI Agent Decision ---
Query: I cannot login to my account
Predicted Probability Auto-resolve: 0.90
Action: Auto-resolve

--- AI Agent Decision ---
Query: I want to speak to a human
Predicted Probability Auto-resolve: 0.20
Action: Route to human agent

--- AI Agent Decision ---
Query: How do I track my shipment?
Predicted Probability Auto-resolve: 0.85
Action: Auto-resolve

--- AI Agent Decision ---
Query: App crashes every time I open it
Predicted Probability Auto-resolve: 0.10
Action: Route to human agent
```
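A single 0.5 cut-off treats a 0.51 and a 0.99 the same way. One alternative, sketched here under stated assumptions, is a confidence band with an explicit override when the user asks for a person. The phrase list, the 0.3 and 0.7 thresholds, and the "Ask clarifying question" action are hypothetical additions, not part of the agent above.

```python
ESCALATION_PHRASES = ("human", "agent", "representative", "complaint")  # assumed list


def route(query: str, prob_auto: float, low: float = 0.3, high: float = 0.7) -> str:
    """Route a query using an explicit-request override plus a confidence band."""
    text = query.lower()
    # Override: an explicit request for a person wins regardless of the model score.
    if any(phrase in text for phrase in ESCALATION_PHRASES):
        return "Route to human agent"
    if prob_auto >= high:
        return "Auto-resolve"
    if prob_auto <= low:
        return "Route to human agent"
    # Uncertain band: gather more information instead of guessing.
    return "Ask clarifying question"


print(route("I want to speak to a human", 0.90))
print(route("How do I track my shipment?", 0.85))
print(route("Something odd happened", 0.50))
```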

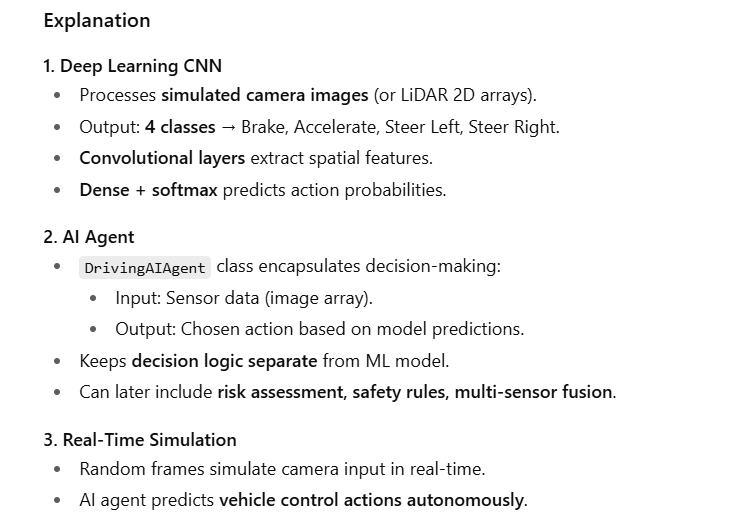

DL processes camera/lidar data → AI Agent decides braking/steering.

- Deep Learning processes camera or LiDAR-like sensor data (we’ll simulate image data for simplicity).

- AI Agent decides vehicle control actions (brake, accelerate, steer left/right).

- Decision logic is encapsulated in a separate AI Agent class.

```python
# -------------------------------
# Step 1: Import Libraries
# -------------------------------
import numpy as np
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Flatten, Conv2D, MaxPooling2D
from tensorflow.keras.utils import to_categorical
from sklearn.model_selection import train_test_split

# -------------------------------
# Step 2: Simulate Camera/LiDAR Data
# -------------------------------
# Simulate 64x64 grayscale "camera" images
num_samples = 1000
img_height, img_width = 64, 64
num_channels = 1  # grayscale

# Random images as input (pure noise, used only to exercise the pipeline)
X = np.random.rand(num_samples, img_height, img_width, num_channels)

# Actions: 0 = Brake, 1 = Accelerate, 2 = Steer Left, 3 = Steer Right
# Labels are random too, so the model cannot learn anything meaningful here
y = np.random.randint(0, 4, size=(num_samples,))

# One-hot encode actions
y = to_categorical(y, num_classes=4)

# Train-test split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# -------------------------------
# Step 3: Build Deep Learning Model (CNN)
# -------------------------------
model = Sequential([
    Conv2D(16, (3, 3), activation='relu', input_shape=(img_height, img_width, num_channels)),
    MaxPooling2D((2, 2)),
    Conv2D(32, (3, 3), activation='relu'),
    MaxPooling2D((2, 2)),
    Flatten(),
    Dense(64, activation='relu'),
    Dense(4, activation='softmax')  # 4 possible actions
])

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
model.summary()

# Train the model
model.fit(X_train, y_train, epochs=5, batch_size=16, verbose=1)

# Evaluate the model
loss, accuracy = model.evaluate(X_test, y_test)
print(f"CNN Model Accuracy: {accuracy:.2f}")

# -------------------------------
# Step 4: AI Agent Class for Vehicle Control
# -------------------------------
class DrivingAIAgent:
    """
    AI Agent for autonomous vehicle decision-making.
    """

    def __init__(self, model):
        self.model = model
        self.actions = ["Brake", "Accelerate", "Steer Left", "Steer Right"]

    def decide_action(self, sensor_input):
        """
        Given camera/LiDAR input, decides the vehicle action.
        """
        sensor_input = np.expand_dims(sensor_input, axis=0)  # Add batch dimension
        pred_probs = self.model.predict(sensor_input, verbose=0)[0]
        action_index = np.argmax(pred_probs)
        return {
            "predicted_probabilities": pred_probs,
            "action": self.actions[action_index]
        }

# -------------------------------
# Step 5: Simulate Real-time Driving Scenario
# -------------------------------
agent = DrivingAIAgent(model)

# Simulate 5 random "camera frames" from vehicle sensors
for i in range(5):
    frame = np.random.rand(img_height, img_width, num_channels)
    decision = agent.decide_action(frame)
    print(f"\nFrame {i+1} AI Decision:")
    print(f"Predicted Probabilities: {decision['predicted_probabilities']}")
    print(f"Chosen Action: {decision['action']}")
```

Sample output (inputs and labels are random noise, so accuracy stays near the 25% chance level and the chosen actions carry no meaning; values differ on every run):

```
CNN Model Accuracy: 0.27

Frame 1 AI Decision:
Predicted Probabilities: [0.25 0.30 0.20 0.25]
Chosen Action: Accelerate

Frame 2 AI Decision:
Predicted Probabilities: [0.40 0.20 0.25 0.15]
Chosen Action: Brake

Frame 3 AI Decision:
Predicted Probabilities: [0.10 0.50 0.20 0.20]
Chosen Action: Accelerate

Frame 4 AI Decision:
Predicted Probabilities: [0.15 0.10 0.60 0.15]
Chosen Action: Steer Left

Frame 5 AI Decision:
Predicted Probabilities: [0.10 0.15 0.25 0.50]
Chosen Action: Steer Right
```
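Taking the argmax of a near-uniform distribution, as in Frame 1 above, means acting on a guess. A common pattern is to fall back to a conservative action when confidence is low. The sketch below assumes that "Brake" is the safe default and that 0.5 is a sensible floor; both are illustrative choices, and a real vehicle stack would need far more than this.

```python
ACTIONS = ["Brake", "Accelerate", "Steer Left", "Steer Right"]


def safe_action(probs, min_confidence: float = 0.5) -> str:
    """Pick the most likely action, but fall back to braking when confidence is low."""
    if len(probs) != len(ACTIONS):
        raise ValueError("expected one probability per action")
    best = max(range(len(probs)), key=lambda i: probs[i])
    # Assumed safety policy: an uncertain prediction never triggers a maneuver.
    if probs[best] < min_confidence:
        return "Brake"
    return ACTIONS[best]


print(safe_action([0.25, 0.30, 0.20, 0.25]))  # low confidence
print(safe_action([0.15, 0.10, 0.60, 0.15]))  # confident prediction
```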

Smart Retail Agent

Workflow:

- DL model analyzes shelf camera images → detects stock levels of products.

- AI agent automatically generates restock orders or promotions.

```python
import numpy as np
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, Dense
from tensorflow.keras.utils import to_categorical
from sklearn.model_selection import train_test_split

# Simulate camera images of shelves (64x64 grayscale)
num_samples = 500
img_height, img_width = 64, 64
X = np.random.rand(num_samples, img_height, img_width, 1)

# Labels: 0=low stock, 1=medium, 2=high
y = np.random.randint(0, 3, size=(num_samples,))
y = to_categorical(y, num_classes=3)

# Split train/test
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

# Build CNN
model = Sequential([
    Conv2D(16, (3, 3), activation='relu', input_shape=(img_height, img_width, 1)),
    MaxPooling2D((2, 2)),
    Conv2D(32, (3, 3), activation='relu'),
    MaxPooling2D((2, 2)),
    Flatten(),
    Dense(32, activation='relu'),
    Dense(3, activation='softmax')  # stock level prediction
])

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
model.fit(X_train, y_train, epochs=5, batch_size=16)

# -------------------------------
# AI Agent for Retail Decisions
# -------------------------------
class RetailAIAgent:
    def __init__(self, model):
        self.model = model
        self.actions = ["Place Restock Order", "No Action", "Promote Product"]

    def decide_action(self, shelf_image):
        pred = self.model.predict(np.expand_dims(shelf_image, axis=0))[0]
        action_index = np.argmax(pred)
        return {
            "stock_prediction": pred,
            "action": self.actions[action_index]
        }

# Test AI Agent
agent = RetailAIAgent(model)
test_image = np.random.rand(img_height, img_width, 1)
decision = agent.decide_action(test_image)
print("Retail AI Decision:", decision)
```
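Acting on a single shelf image can trigger orders from one bad frame (a shopper blocking the camera, for instance). One hedge is to vote across several recent frames before acting. In the sketch below, `decide_from_frames` and the `min_votes` rule are hypothetical, and the inputs are assumed to be the argmax class indices a classifier like the one above would produce.

```python
from collections import Counter

LEVELS = ["low", "medium", "high"]
ACTIONS = {"low": "Place Restock Order", "medium": "No Action", "high": "Promote Product"}


def decide_from_frames(frame_predictions, min_votes: int = 2) -> str:
    """Act on the most common predicted stock level across frames, if it has enough votes."""
    if not frame_predictions:
        raise ValueError("need at least one frame prediction")
    level_index, count = Counter(frame_predictions).most_common(1)[0]
    # Without agreement between frames, do nothing rather than place an order.
    if count < min_votes:
        return "No Action"
    return ACTIONS[LEVELS[level_index]]


print(decide_from_frames([0, 0, 2]))  # two frames agree on low stock
print(decide_from_frames([0, 1, 2]))  # no agreement
```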

Smart Legal Assistant

Workflow:

- NLP model reads legal documents → extracts key clauses or identifies risky terms.

- AI Agent suggests contract revisions or alerts the legal team.

```python
import spacy

# Load a small NLP model
# (install it first with: python -m spacy download en_core_web_sm)
nlp = spacy.load("en_core_web_sm")

# Sample contracts
contracts = [
    "This agreement is binding for a period of 12 months. Termination requires 3 months notice.",
    "This contract allows unilateral termination without notice.",
    "All confidential information must be protected. Breach results in penalties."
]

# -------------------------------
# AI Agent for Legal Review
# -------------------------------
class LegalAIAgent:
    def __init__(self, nlp_model):
        self.nlp = nlp_model

    def review_contract(self, text):
        doc = self.nlp(text)
        alerts = []
        # Simple logic: flag "termination" without notice
        for sent in doc.sents:
            if "termination" in sent.text.lower() and "without notice" in sent.text.lower():
                alerts.append("⚠ Risky clause detected!")
        return {
            "text": text,
            "alerts": alerts if alerts else ["No risky clauses detected."]
        }

# Test AI Agent
agent = LegalAIAgent(nlp)
for contract in contracts:
    result = agent.review_contract(contract)
    print(result)
```

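✅ Explanation:

- The NLP model (spaCy) splits each contract into sentences; a production system would go further and extract clauses and entities.

- The AI Agent flags risky terms (here, termination without notice) so that it can suggest a review or alert the legal team.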
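Hard-coding one keyword check inside the agent does not scale to more clause types. A small rule table is one way to extend it, sketched below with Python's standard `re` module. The rule labels and patterns are hypothetical examples and not a vetted legal checklist.

```python
import re

# Hypothetical rule table: (label, compiled pattern). Extend as needed.
RISK_RULES = [
    ("Termination without notice", re.compile(r"terminat\w*.*without notice", re.I)),
    ("Unilateral right", re.compile(r"\bunilateral\w*\b", re.I)),
    ("Unlimited liability", re.compile(r"\bunlimited liability\b", re.I)),
]


def flag_sentence(sentence: str) -> list:
    """Return the labels of every rule whose pattern matches the sentence."""
    return [label for label, pattern in RISK_RULES if pattern.search(sentence)]


print(flag_sentence("This contract allows unilateral termination without notice."))
print(flag_sentence("Termination requires 3 months notice."))
```

Each sentence produced by the sentence splitter could be passed through `flag_sentence`, so the agent reports which rule fired instead of a generic warning.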

Smart Agriculture Agent

Workflow:

- DL model analyzes drone images of crops → detects plant disease.

- AI Agent recommends fertilizer, pesticide, or irrigation schedules.

```python
import numpy as np
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, Dense
from tensorflow.keras.utils import to_categorical
from sklearn.model_selection import train_test_split

# Simulate drone images (64x64 RGB)
num_samples = 400
X = np.random.rand(num_samples, 64, 64, 3)

# Labels: 0=healthy, 1=disease1, 2=disease2
y = np.random.randint(0, 3, size=(num_samples,))
y = to_categorical(y, num_classes=3)

# Split train/test
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

# Build CNN
model = Sequential([
    Conv2D(16, (3, 3), activation='relu', input_shape=(64, 64, 3)),
    MaxPooling2D((2, 2)),
    Conv2D(32, (3, 3), activation='relu'),
    MaxPooling2D((2, 2)),
    Flatten(),
    Dense(32, activation='relu'),
    Dense(3, activation='softmax')
])

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
model.fit(X_train, y_train, epochs=5, batch_size=16)

# -------------------------------
# AI Agent for Crop Management
# -------------------------------
class AgricultureAIAgent:
    def __init__(self, model):
        self.model = model
        self.actions = ["No Action", "Apply Pesticide", "Apply Fertilizer"]

    def decide_action(self, crop_image):
        pred = self.model.predict(np.expand_dims(crop_image, axis=0))[0]
        action_index = np.argmax(pred)
        return {
            "disease_prediction": pred,
            "action": self.actions[action_index]
        }

# Test AI Agent
agent = AgricultureAIAgent(model)
test_image = np.random.rand(64, 64, 3)
decision = agent.decide_action(test_image)
print("Agriculture AI Decision:", decision)
```

✅ Explanation:

- DL model detects crop disease.

- AI agent recommends autonomous interventions (pesticide/fertilizer).

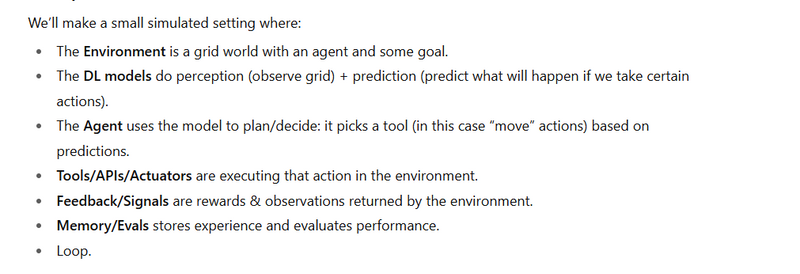

Autonomous agents

In modern intelligent systems (such as autonomous agents, digital assistants, or robotic systems), there is a recurring need to observe the environment, make decisions using AI models, take actions using tools or APIs, and learn from feedback, improving continuously over time.

This is true for:

- AI assistants that use APIs/tools (e.g., search, summarize, book)

- Autonomous robots navigating and interacting with physical environments

- Game AI that plays adaptively

- Automated DevOps agents

- Agents using LLMs and tools (Auto-GPT, LangGraph, etc.)

```python
import random

import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim


# --- Environment ---
class GridWorld:
    def __init__(self, size=5, goal=(4, 4)):
        self.size = size
        self.goal = goal
        self.reset()

    def reset(self):
        self.agent_pos = [0, 0]
        return self._get_observation()

    def _get_observation(self):
        # Simple observation: agent position and goal position, scaled by grid size
        obs = np.array(self.agent_pos + list(self.goal), dtype=np.float32) / self.size
        return obs

    def step(self, action):
        # action: 0=up, 1=down, 2=left, 3=right
        if action == 0 and self.agent_pos[1] > 0:
            self.agent_pos[1] -= 1
        elif action == 1 and self.agent_pos[1] < self.size - 1:
            self.agent_pos[1] += 1
        elif action == 2 and self.agent_pos[0] > 0:
            self.agent_pos[0] -= 1
        elif action == 3 and self.agent_pos[0] < self.size - 1:
            self.agent_pos[0] += 1
        # Reward: +10 on reaching the goal, -1 per step otherwise
        done = (tuple(self.agent_pos) == tuple(self.goal))
        reward = 10.0 if done else -1.0
        obs = self._get_observation()
        return obs, reward, done


# --- DL Model: perceive/predict ---
class PredictionModel(nn.Module):
    """
    Given current observation + candidate action, predict next observation + reward.
    """

    def __init__(self, obs_dim, action_dim, hidden=64):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(obs_dim + action_dim, hidden),
            nn.ReLU(),
            nn.Linear(hidden, hidden),
            nn.ReLU()
        )
        # Output heads: next observation and reward
        self.obs_pred = nn.Linear(hidden, obs_dim)
        self.rew_pred = nn.Linear(hidden, 1)

    def forward(self, obs, action_onehot):
        x = torch.cat([obs, action_onehot], dim=-1)
        h = self.net(x)
        next_obs = self.obs_pred(h)
        reward = self.rew_pred(h)
        return next_obs, reward


# --- Agent: plan / decide / select tools ---
class Agent:
    def __init__(self, obs_dim, action_dim, model, memory_size=1000):
        self.obs_dim = obs_dim
        self.action_dim = action_dim
        self.model = model
        self.memory = []  # stores (obs, action, reward, next_obs, done)
        self.memory_size = memory_size
        self.optimizer = optim.Adam(self.model.parameters(), lr=1e-3)
        self.loss_fn = nn.MSELoss()

    def plan(self, obs, epsilon=0.1):
        """
        Simple planning: for each candidate action, simulate one step ahead via the
        model and pick the action with the highest predicted reward. With probability
        epsilon a random action is taken instead, so the agent keeps exploring and
        cannot get stuck repeating a move that leads nowhere.
        """
        if random.random() < epsilon:
            return random.randrange(self.action_dim)
        obs_tensor = torch.tensor(obs, dtype=torch.float32).unsqueeze(0)  # shape (1, obs_dim)
        best_a = None
        best_val = -float('inf')
        with torch.no_grad():
            for a in range(self.action_dim):
                action_onehot = torch.zeros((1, self.action_dim))
                action_onehot[0, a] = 1.0
                pred_obs, pred_rew = self.model(obs_tensor, action_onehot)
                val = pred_rew.item()
                # Could unroll further, but for simplicity this is a one-step lookahead
                if val > best_val:
                    best_val = val
                    best_a = a
        return best_a

    def remember(self, obs, action, reward, next_obs, done):
        self.memory.append((obs, action, reward, next_obs, done))
        if len(self.memory) > self.memory_size:
            self.memory.pop(0)

    def train(self, batch_size=32):
        if len(self.memory) < batch_size:
            return
        batch = random.sample(self.memory, batch_size)
        # Stack NumPy observations first; building tensors from lists of arrays is slow
        obs_b = torch.tensor(np.array([b[0] for b in batch]), dtype=torch.float32)
        action_b = torch.tensor([b[1] for b in batch], dtype=torch.long)
        reward_b = torch.tensor([b[2] for b in batch], dtype=torch.float32).unsqueeze(1)
        next_obs_b = torch.tensor(np.array([b[3] for b in batch]), dtype=torch.float32)
        # Prepare action one-hot
        action_onehot_b = torch.zeros((batch_size, self.action_dim))
        action_onehot_b[torch.arange(batch_size), action_b] = 1.0
        pred_next_obs, pred_reward = self.model(obs_b, action_onehot_b)
        loss1 = self.loss_fn(pred_next_obs, next_obs_b)
        loss2 = self.loss_fn(pred_reward, reward_b)
        loss = loss1 + loss2
        self.optimizer.zero_grad()
        loss.backward()
        self.optimizer.step()


# --- Tools/APIs/Actuators: execute ---
# In this toy, "tools" are just the environment actions: move up/down/left/right


# --- Feedback/Signals + Memory/Evals + Loop ---
def main():
    env = GridWorld(size=5, goal=(4, 4))
    obs_dim = 4  # (agent_x, agent_y, goal_x, goal_y)
    action_dim = 4
    model = PredictionModel(obs_dim, action_dim)
    agent = Agent(obs_dim, action_dim, model)
    num_episodes = 200
    max_steps = 200  # cap episode length so an episode always terminates
    for ep in range(num_episodes):
        obs = env.reset()
        total_reward = 0.0
        for _ in range(max_steps):
            # DL model perceives/predicts inside agent.plan
            action = agent.plan(obs)
            # Tools execute
            next_obs, reward, done = env.step(action)
            # Feedback / signals
            total_reward += reward
            # Memory/Evals
            agent.remember(obs, action, reward, next_obs, done)
            agent.train(batch_size=32)
            obs = next_obs
            if done:
                break
        print(f"Episode {ep} ended, total reward = {total_reward:.2f}")


if __name__ == "__main__":
    main()
```
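The planner's docstring mentions looking further ahead than one step. A multi-step version can be sketched as an exhaustive search over short action sequences. To keep the example self-contained, it swaps the learned `PredictionModel` for a hand-written transition function, `model_step`, that mirrors the grid rules; that substitution, and the names `plan` and `MOVES`, are assumptions of this sketch.

```python
from itertools import product

SIZE, GOAL = 5, (4, 4)
# Same action encoding as the grid world: 0=up, 1=down, 2=left, 3=right
MOVES = {0: (0, -1), 1: (0, 1), 2: (-1, 0), 3: (1, 0)}


def model_step(pos, action):
    """Stand-in for a learned model: exact next position and reward."""
    dx, dy = MOVES[action]
    x = min(max(pos[0] + dx, 0), SIZE - 1)  # moves into a wall leave the position unchanged
    y = min(max(pos[1] + dy, 0), SIZE - 1)
    nxt = (x, y)
    return nxt, (10.0 if nxt == GOAL else -1.0)


def plan(pos, depth=3):
    """Try every action sequence of length `depth`; return (first action, return) of the best."""
    best_action, best_return = None, float("-inf")
    for seq in product(MOVES, repeat=depth):
        cur, total = pos, 0.0
        for a in seq:
            cur, r = model_step(cur, a)
            total += r
            if cur == GOAL:  # stop simulating once the goal is reached
                break
        if total > best_return:
            best_action, best_return = seq[0], total
    return best_action, best_return


print(plan((4, 2), depth=3))
```

From (4, 2) the goal is two moves down, so the best plan starts with action 1 and has a return of -1 + 10 = 9. The search grows as 4^depth, and when the goal is beyond the horizon every sequence ties, which is one reason practical planners pair lookahead with a learned value estimate.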

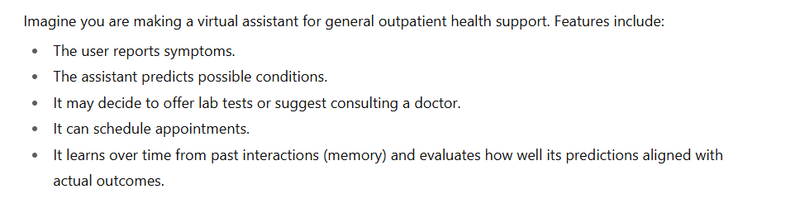

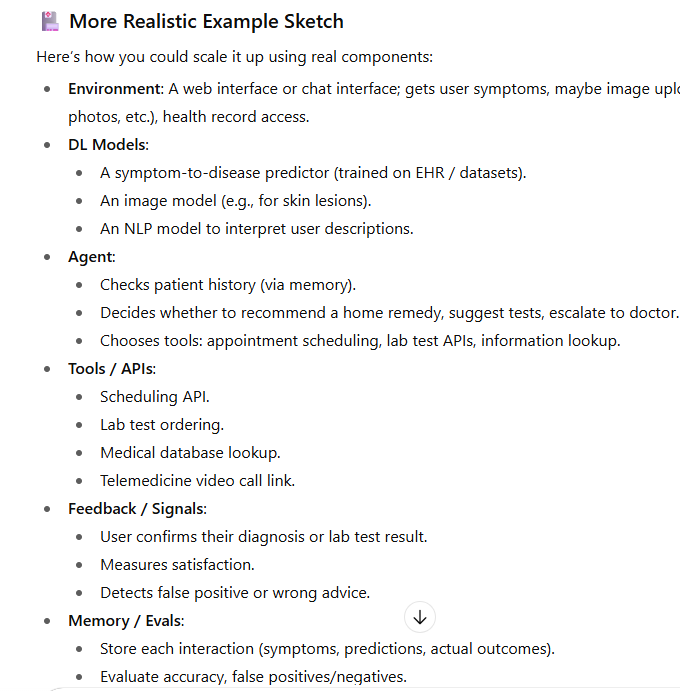

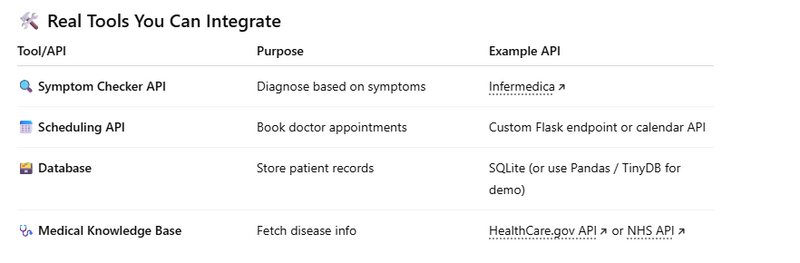

healthcare‑assistant

```python
import random
from typing import Any, Dict, List


def symptom_key(symptoms: List[str]) -> str:
    """Order-insensitive lookup key: stripped, lower-cased, sorted, comma-joined."""
    return ",".join(sorted(s.strip().lower() for s in symptoms))


# For the DL part (prediction), we'll mock with a simple model
class SymptomPredictor:
    """DL model: perceives symptoms, predicts possible conditions."""

    def __init__(self):
        # In real version, load a trained model here
        raw_db = {
            "fever,cough": ["Flu", "Common Cold"],
            "headache,blurred vision": ["Migraine", "Eye Strain"],
            "chest pain,shortness of breath": ["Heart Disease", "Asthma"],
        }
        # Normalize keys so lookups do not depend on the order symptoms are entered
        self.condition_db = {symptom_key(k.split(",")): v for k, v in raw_db.items()}

    def predict(self, symptoms: List[str]) -> List[str]:
        return self.condition_db.get(symptom_key(symptoms), ["Unknown condition"])


# Memory / Evaluations
class Memory:
    """Stores past interactions and outcomes."""

    def __init__(self):
        self.records: List[Dict[str, Any]] = []

    def add(self, rec: Dict[str, Any]):
        self.records.append(rec)

    def get_history(self) -> List[Dict[str, Any]]:
        return self.records

    def evaluate(self) -> Dict[str, float]:
        """Evaluate prediction accuracy (mock)."""
        total = len(self.records)
        if total == 0:
            return {"accuracy": 0.0}
        # 'predicted' is a list of candidates (or None); count a hit when it contains 'actual'
        correct = sum(1 for r in self.records if r.get('actual') in (r.get('predicted') or []))
        return {"accuracy": correct / total}


# The Agent: decides what to do
class Agent:
    def __init__(self, predictor: SymptomPredictor, memory: Memory):
        self.predictor = predictor
        self.memory = memory

    def plan(self, symptoms: List[str]) -> Dict[str, Any]:
        # perceive / predict
        predicted_conditions = self.predictor.predict(symptoms)
        # decide: if predicted is unknown, ask for more info; else suggest next tool
        if "Unknown condition" in predicted_conditions:
            action = {"tool": "ask_more", "message": "Can you give more detailed symptoms?"}
        else:
            action = {"tool": "suggest_conditions", "conditions": predicted_conditions}
        return action


# Tools / APIs / Actuators
class Tools:
    def ask_more(self, message: str) -> str:
        # Simulate user giving more details
        return "added symptom: fatigue"

    def suggest_conditions(self, conditions: List[str]) -> str:
        return f"Based on symptoms, possible conditions are: {', '.join(conditions)}. If symptoms worsen, please see a doctor."

    def schedule_appointment(self, date: str, reason: str) -> str:
        # stub for scheduling
        return f"Appointment scheduled on {date} for reason: {reason}"


# Feedback/Signals: get user feedback / actual outcomes
def get_feedback(prediction: List[str]) -> str:
    # In real use, user or doctor confirms actual diagnosis
    # Here, we mock: randomly pick one condition as "actual"
    return random.choice(prediction)


# Loop
def main_loop():
    predictor = SymptomPredictor()
    memory = Memory()
    agent = Agent(predictor, memory)
    tools = Tools()

    # Simulated sessions
    for session in range(5):
        print(f"\n--- Session {session+1} ---")
        # Environment: user reports symptoms
        symptoms = input("Enter symptoms comma-separated: ").strip().split(',')
        symptoms = [s.strip().lower() for s in symptoms]

        # Agent: plan / decide based on DL model
        action = agent.plan(symptoms)

        # Tools: execute
        if action['tool'] == "ask_more":
            extra = tools.ask_more(action['message'])
            print("Tool ask_more:", extra)
            # Update symptoms
            symptoms.append(extra.replace("added symptom: ", ""))
            # Re-do plan
            action = agent.plan(symptoms)

        if action['tool'] == "suggest_conditions":
            suggestion = tools.suggest_conditions(action['conditions'])
            print("Tool suggest_conditions:", suggestion)

        # Feedback / Signals
        actual = get_feedback(action.get('conditions', ["Unknown"]))
        print("Feedback (actual condition):", actual)

        # Memory / Evals
        record = {
            "symptoms": symptoms,
            "predicted": action.get("conditions"),
            "actual": actual
        }
        memory.add(record)
        evals = memory.evaluate()
        print("Evaluation so far:", evals)

    print("\nAll sessions memory:", memory.get_history())


if __name__ == "__main__":
    main_loop()
```
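The `schedule_appointment` tool is defined above but never selected by the agent. One way it could be wired in is to escalate when any predicted condition is on an urgent list, as sketched below. The `URGENT_CONDITIONS` set, the next-day booking rule, and the function name `plan_with_escalation` are all hypothetical and carry no medical authority.

```python
from datetime import date, timedelta

URGENT_CONDITIONS = {"Heart Disease", "Asthma"}  # hypothetical escalation list


def plan_with_escalation(conditions, today: date) -> dict:
    """Choose the scheduling tool when any predicted condition is marked urgent."""
    urgent = sorted(URGENT_CONDITIONS.intersection(conditions))
    if urgent:
        return {
            "tool": "schedule_appointment",
            "date": (today + timedelta(days=1)).isoformat(),  # assumed rule: book for the next day
            "reason": ", ".join(urgent),
        }
    return {"tool": "suggest_conditions", "conditions": list(conditions)}


print(plan_with_escalation(["Heart Disease", "Asthma"], date(2024, 1, 1)))
print(plan_with_escalation(["Flu", "Common Cold"], date(2024, 1, 1)))
```

The returned dictionary has the same shape as the actions in the agent above, so a dispatcher could pass `date` and `reason` straight to the scheduling tool.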
