Model architecture of RNN

What is a Recurrent Neural Network (RNN)?

A Recurrent Neural Network (RNN) is a type of neural network in which the output from the previous step is fed as input to the current step. In traditional neural networks, all inputs and outputs are independent of each other. However, when the task is to predict the next word of a sentence, the previous words matter, so the network needs a way to remember them. RNNs address this with a hidden layer. The most important feature of an RNN is its hidden state, which retains some information about the sequence seen so far. This state is also called the memory state, since it carries information from previous inputs. An RNN uses the same parameters for every input, because it performs the same task at each step of the sequence to produce the output. This keeps the number of parameters small compared with a network that learns separate weights for each position.
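As a rough illustration of the hidden state and the shared parameters, here is a minimal sketch of a scalar RNN in plain Python. The function names (`rnn_step`, `run_rnn`) and the parameter values are made up for this example; a real network would use weight matrices and learn them from data.

```python
import math

def rnn_step(h_prev, x, w_h, w_x, b):
    """One recurrent step: combine the previous hidden state with the current input."""
    return math.tanh(w_h * h_prev + w_x * x + b)

def run_rnn(inputs, w_h=0.5, w_x=1.0, b=0.0):
    """Apply the same three parameters at every step and return all hidden states."""
    h = 0.0  # initial hidden state: nothing has been seen yet
    states = []
    for x in inputs:
        h = rnn_step(h, x, w_h, w_x, b)
        states.append(h)
    return states

# Same values, different order: the final hidden state differs,
# because each state depends on everything that came before it.
forward = run_rnn([1.0, 0.0, -1.0])
backward = run_rnn([-1.0, 0.0, 1.0])
print(forward[-1], backward[-1])
```

Note that `w_h`, `w_x` and `b` are the only parameters, no matter how long the input list is.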

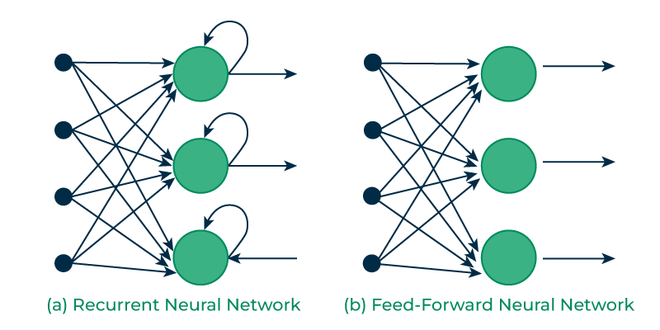

How RNN differs from Feedforward Neural Network?

Artificial neural networks that have no looping (recurrent) connections are called feedforward neural networks. The name comes from the fact that information is only passed forward, from one layer to the next; these networks are also commonly referred to as multi-layer neural networks.

In a feedforward neural network, information moves in one direction, from the input layer through any hidden layers to the output layer. These networks are well suited to tasks such as image classification, where each input is treated independently of the others. However, because they cannot retain previous inputs, they are less useful for sequential data.
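The difference can be sketched with two toy functions. Both are hypothetical one-unit examples with hand-picked weights: the feedforward one has no state, while the recurrent one carries a hidden state from step to step.

```python
import math

def feedforward(x, w=1.0, b=0.0):
    """Stateless unit: the output depends only on the current input."""
    return math.tanh(w * x + b)

def recurrent(h_prev, x, w_h=0.5, w_x=1.0, b=0.0):
    """Stateful unit: the output also depends on the previous hidden state."""
    return math.tanh(w_h * h_prev + w_x * x + b)

sequence = [2.0, 1.0, 1.0]  # the last two inputs are identical

ff_outputs = [feedforward(x) for x in sequence]

rec_outputs = []
h = 0.0
for x in sequence:
    h = recurrent(h, x)
    rec_outputs.append(h)

# The feedforward unit gives the same output for the repeated input;
# the recurrent unit does not, because its history differs at each step.
print(ff_outputs[1] == ff_outputs[2])
print(rec_outputs[1] == rec_outputs[2])
```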

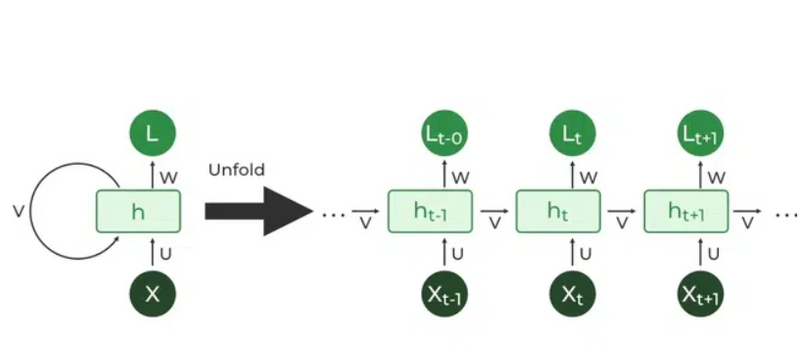

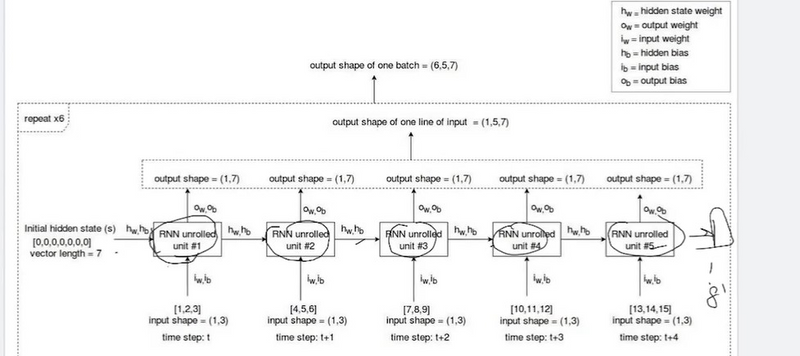

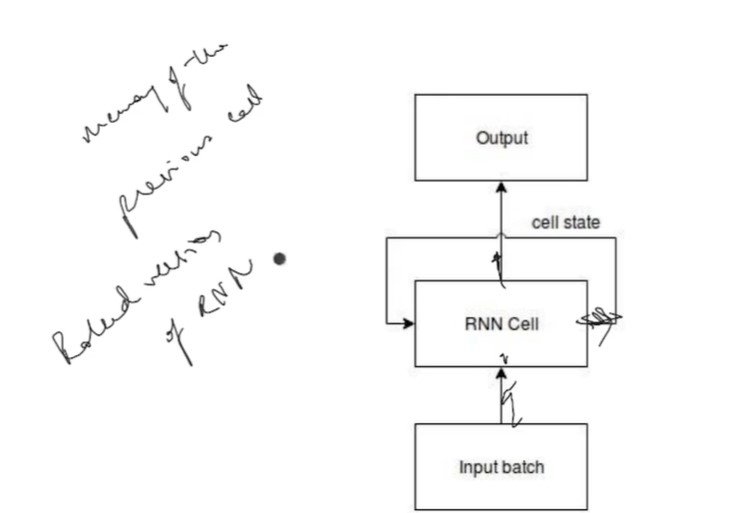

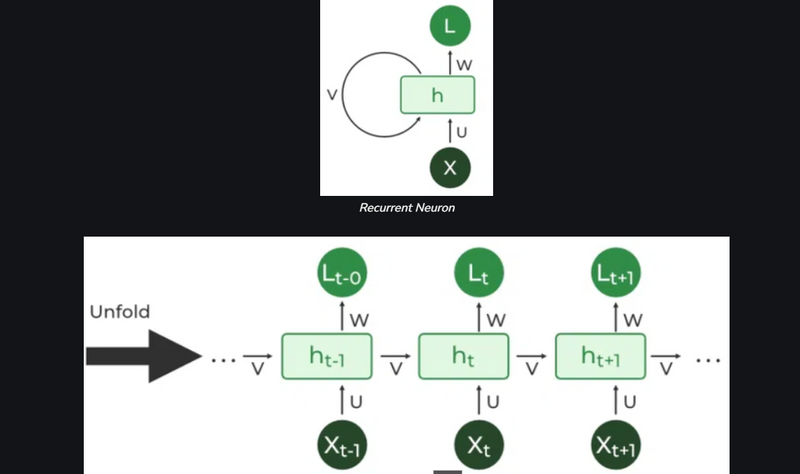

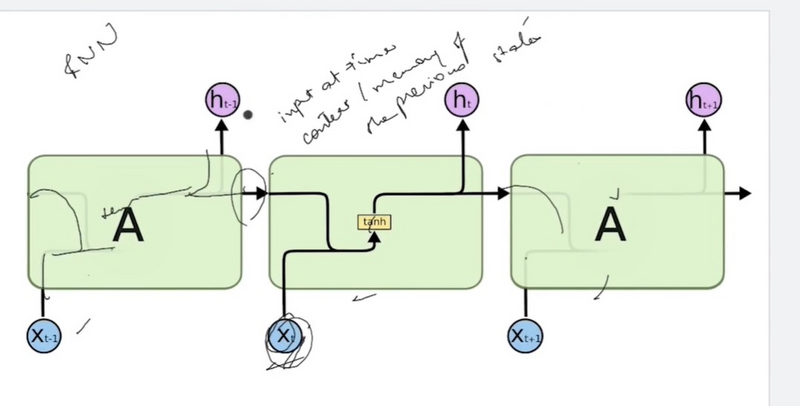

Recurrent Neuron and RNN Unfolding

The fundamental processing unit in a Recurrent Neural Network (RNN) is usually called a Recurrent Unit rather than a “Recurrent Neuron.” This unit maintains a hidden state, which lets the network capture sequential dependencies by remembering previous inputs as it processes new ones. Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) variants improve the RNN’s ability to handle long-term dependencies.

How does RNN work

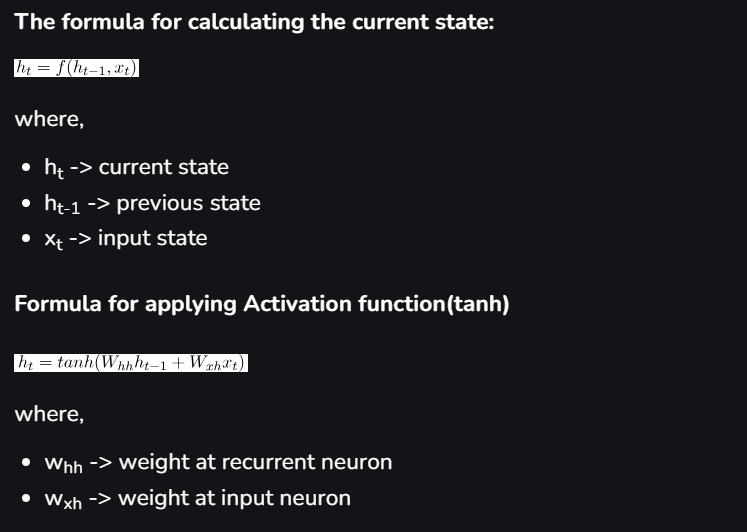

When unrolled over time, the Recurrent Neural Network consists of multiple units with a fixed activation function, one for each time step. Each unit has an internal state called the hidden state, which represents the knowledge about the past that the network holds at that time step. The hidden state is updated at every time step, by a recurrence relation that combines the previous hidden state with the current input, to reflect the change in what the network knows about the past.
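The recurrence itself is not reproduced in this text. A commonly used form, given here as an assumption rather than as this article's own notation, is:

$$
h_t = f(h_{t-1}, x_t)
$$

With a tanh activation this is typically written as:

$$
h_t = \tanh(W_{hh} h_{t-1} + W_{xh} x_t + b_h), \qquad y_t = W_{hy} h_t + b_y
$$

Here $h_t$ is the hidden state at time step $t$, $x_t$ is the input, $y_t$ is the output, $W_{hh}$, $W_{xh}$ and $W_{hy}$ are weight matrices, and $b_h$, $b_y$ are bias vectors. The same weights are used at every time step.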

Types Of RNN

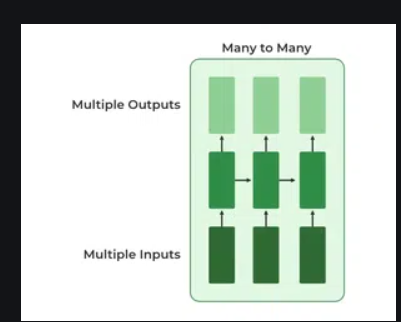

There are four types of RNNs based on the number of inputs and outputs in the network.

- One to One

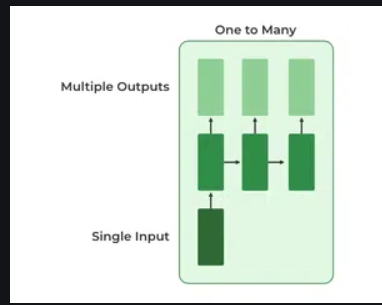

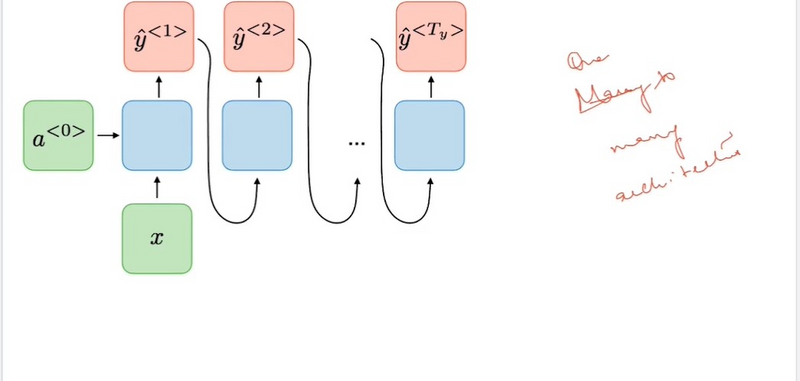

- One to Many

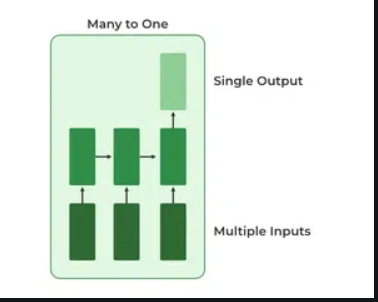

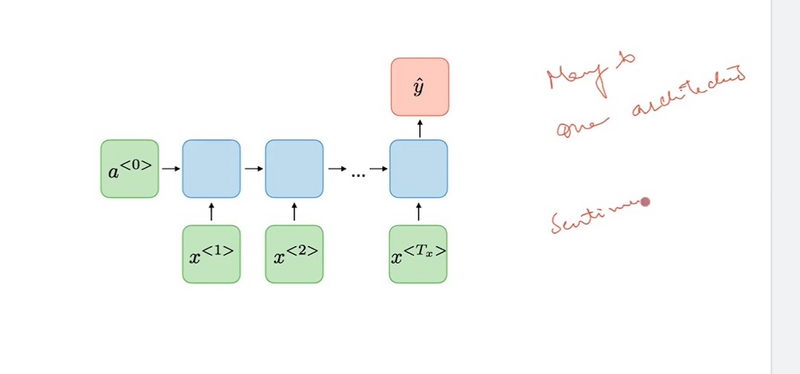

- Many to One

- Many to Many

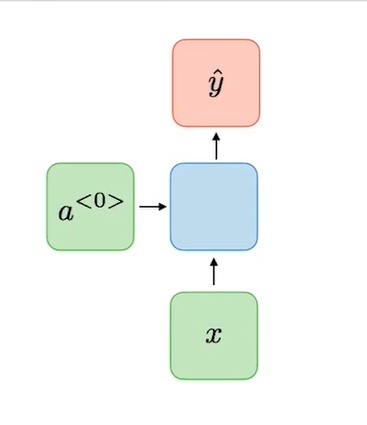

One to One

This type of RNN behaves like a simple neural network and is also known as a Vanilla Neural Network. It has only one input and one output.

One To Many

In this type of RNN, one input produces many outputs. A widely used example is image captioning, where, given an image, the network predicts a sentence made up of multiple words.

Many to One

In this type of network, many inputs are fed to the network over several time steps, and only one output is generated. It is used in problems such as sentiment analysis, where we give multiple words as input and predict only the sentiment of the sentence as output.

Many to Many

In this type of neural network, there are multiple inputs and multiple outputs. One example is language translation, where we provide multiple words from one language as input and predict multiple words in the second language as output.
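The input/output patterns above can be sketched with one toy recurrent step used in different ways. This is a simplified illustration with hypothetical function names and fixed weights. The many-to-many version shown emits one output per input, whereas translation systems usually allow input and output lengths to differ. The one-to-many version simply feeds zeros after the first input, which is an assumption made to keep the example short.

```python
import math

def step(h_prev, x, w_h=0.5, w_x=1.0):
    """Toy recurrent step shared by all the patterns below."""
    return math.tanh(w_h * h_prev + w_x * x)

def many_to_many(inputs):
    """Emit one output per input (here the output is just the hidden state)."""
    h, outputs = 0.0, []
    for x in inputs:
        h = step(h, x)
        outputs.append(h)
    return outputs

def many_to_one(inputs):
    """Read the whole sequence, then emit a single output from the final state."""
    return many_to_many(inputs)[-1]

def one_to_many(x, n_outputs):
    """Consume one input, then keep stepping with zero input to emit several outputs."""
    h = step(0.0, x)
    outputs = [h]
    for _ in range(n_outputs - 1):
        h = step(h, 0.0)
        outputs.append(h)
    return outputs

print(len(many_to_many([0.2, -0.4, 0.9])))  # 3 outputs for 3 inputs
print(many_to_one([0.2, -0.4, 0.9]))        # a single number
print(len(one_to_many(1.0, 4)))             # 4 outputs from 1 input
```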

Pros and cons of RNN

Recurrent Neural Networks (RNNs) have several advantages and disadvantages. Let's explore them:

Pros:

Ability to Capture Sequential Information: RNNs are designed to process sequential data by maintaining an internal state or memory. This makes them suitable for tasks such as time series prediction, natural language processing, and speech recognition, where understanding the order of data is crucial.

Flexibility in Input and Output Length: RNNs can handle input sequences of variable lengths and produce output sequences of variable lengths. This flexibility makes them suitable for tasks where the length of the input or output varies, such as machine translation or text generation.

Parameter Sharing: RNNs share parameters across time steps, so the number of parameters does not grow with the length of the sequence, unlike a feedforward network that needs separate weights for every input position. This parameter sharing can lead to more efficient training and better generalization, especially when dealing with limited training data.
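To make the parameter-sharing point concrete, the sketch below counts parameters for a single recurrent layer and for a dense layer that takes the whole sequence flattened into one vector. The layer sizes are arbitrary example values, and the dense layer is only one possible point of comparison.

```python
def rnn_param_count(input_size, hidden_size):
    """Recurrent weights + input weights + bias; reused at every time step."""
    return hidden_size * hidden_size + hidden_size * input_size + hidden_size

def flattened_dense_param_count(input_size, hidden_size, seq_len):
    """Dense layer that sees the whole sequence concatenated into one vector."""
    return hidden_size * (input_size * seq_len) + hidden_size

for seq_len in (10, 100):
    print(seq_len,
          rnn_param_count(50, 128),
          flattened_dense_param_count(50, 128, seq_len))
# 10 22912 64128
# 100 22912 640128
```

The recurrent layer's count stays the same when the sequence gets ten times longer, while the flattened dense layer grows roughly tenfold.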

Feature Extraction: RNNs can automatically learn useful features from sequential data without requiring handcrafted features. This makes them effective for tasks where feature engineering is challenging or time-consuming.

Cons:

Difficulty in Capturing Long-Term Dependencies: Traditional RNNs suffer from the vanishing gradient problem, which makes it difficult for them to capture long-term dependencies in sequences. As a result, they may struggle with tasks that require understanding of context over long distances, such as long-range temporal dependencies in time series data or long sentences in natural language processing.

Training Instability: RNNs can be challenging to train, especially when dealing with long sequences or noisy data. They are prone to issues such as exploding or vanishing gradients, which can hinder convergence during training.
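The vanishing and exploding gradient issues can be seen even in a one-unit RNN. The sketch below is a toy illustration under simplifying assumptions (scalar weights, hand-picked values, a hypothetical function name). It tracks how strongly the hidden state at step t depends on the initial state, which by the chain rule is a product of one factor per time step.

```python
import math

def gradient_wrt_initial_state(w_h, inputs, w_x=1.0, linear=False):
    """Return |d h_t / d h_0| for each step t of a scalar RNN."""
    h, grad, magnitudes = 0.0, 1.0, []
    for x in inputs:
        pre = w_h * h + w_x * x
        h = pre if linear else math.tanh(pre)
        # Derivative of the activation: 1 for linear, 1 - tanh^2 for tanh.
        local = 1.0 if linear else 1.0 - h * h
        # Chain rule: d h_t / d h_{t-1} = w_h * activation derivative.
        grad *= w_h * local
        magnitudes.append(abs(grad))
    return magnitudes

inputs = [0.1] * 50

# Small recurrent weight with tanh: every factor is below 0.5, so the product vanishes.
vanishing = gradient_wrt_initial_state(0.5, inputs)

# Recurrent weight above 1 with no squashing: the product grows at every step.
exploding = gradient_wrt_initial_state(1.5, inputs, linear=True)

print(vanishing[0], vanishing[-1])
print(exploding[0], exploding[-1])
```

After 50 steps the first gradient is far too small to carry a useful training signal, and the second is very large, which is why long sequences are hard for plain RNNs.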

Limited Memory: RNNs have a fixed-size memory that stores information from previous time steps. This limited memory can restrict their ability to retain important information over long sequences, especially in tasks with lengthy dependencies.

Sequential Processing Limitation: RNNs process sequential data one time step at a time, which can lead to slower inference speeds compared to parallelizable architectures like convolutional neural networks (CNNs). This sequential processing can also make RNNs less suitable for real-time applications that require fast responses.

Example

Sometimes we only need recent information to perform the present task. For example, consider a language model trying to predict the last word in “the clouds are in the sky”. Here it is easy to predict the next word, sky, from the few words just before it. But consider the text “I grew up in France. I speak fluent French.” Here it is not easy to predict that the language is French from the most recent words alone; the prediction also depends on the earlier mention of France. In such sentences it is entirely possible for the gap between the relevant information and the point where it is needed to become very large. In theory, RNNs are capable of handling such “long-term dependencies”; in practice, as noted under Cons, plain RNNs struggle to learn them as the gap grows.
