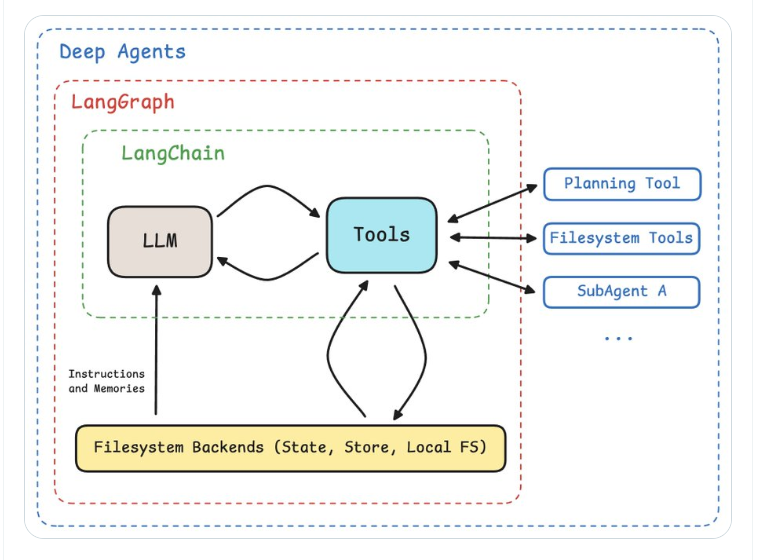

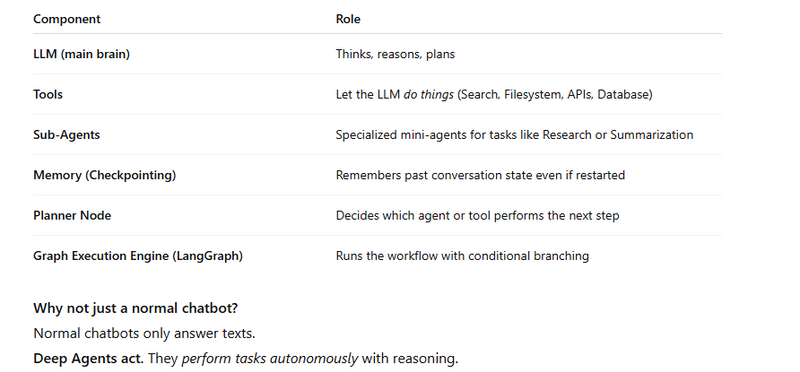

A Deep Agent is not just a chatbot: it is an AI system that can:

- Understand user goals
- Break goals into steps (planning)
- Choose which sub-agent or tool should perform each step
- Store and recall long-term memory
- Read, write, and modify files (workspace)
- Summarize conversations when they get long
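To make planning and routing concrete, here is a framework-free sketch in plain Python. It is not how LangChain or LangGraph implement these features. The keyword lists mirror the `plan_node` router used later in `agent_graph.py`, while `researcher`, `summarizer`, `main_agent` and `run` are hypothetical stand-ins. One deliberate difference: this sketch hands each planned step to the chosen agent, whereas the graph in this article only reports the plan and passes the whole input to one agent.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical stand-ins for sub-agents; a real system would call an LLM here.
def researcher(step: str) -> str:
    return f"[researcher] {step}"

def summarizer(step: str) -> str:
    return f"[summarizer] {step}"

def main_agent(step: str) -> str:
    return f"[main] {step}"

SUB_AGENTS: Dict[str, Callable[[str], str]] = {
    "researcher": researcher,
    "summarizer": summarizer,
    "main": main_agent,
}

def plan(goal: str) -> Tuple[str, Optional[List[str]]]:
    """Pick a route by keyword and, for known task types, a fixed list of steps."""
    text = goal.lower()
    if any(k in text for k in ["research", "investigate", "find sources", "compare"]):
        return "researcher", ["Collect facts", "Organize notes", "Save a /data/*.md file"]
    if any(k in text for k in ["summarize", "tl;dr", "make summary"]):
        return "summarizer", ["Read target text or files", "Write short structured summary"]
    return "main", None

def run(goal: str) -> List[str]:
    """Route the goal, then hand each planned step (or the whole goal) to the chosen agent."""
    route, steps = plan(goal)
    agent = SUB_AGENTS[route]
    return [agent(step) for step in (steps or [goal])]

print(run("Research vector databases"))
# → ['[researcher] Collect facts', '[researcher] Organize notes', '[researcher] Save a /data/*.md file']
```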

Deep Agents build on LangChain + LangGraph:

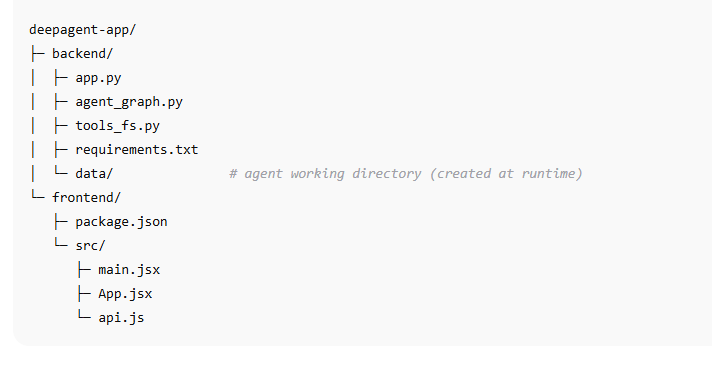

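1) Folder layout

- backend/: requirements.txt, tools_fs.py, agent_graph.py, app.py (the data/ sandbox and deepagent.sqlite are created at runtime)
- frontend/: package.json, index.html, src/api.js, src/App.jsx, src/main.jsx

2) Backend (Flask + LangChain + LangGraph)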

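backend/requirements.txt

```
flask
flask-cors
python-dotenv
# The legacy agent/memory APIs used here (AgentExecutor, ConversationBufferMemory)
# moved to langchain-classic in LangChain 1.0, so stay on the 0.x line.
langchain<1.0
langchain-openai
langchain-community
langgraph
# Provides langgraph.checkpoint.sqlite (sqlite3 itself ships with Python)
langgraph-checkpoint-sqlite
```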

Set the key for your LLM provider as an environment variable (this example uses OpenAI):

```
OPENAI_API_KEY=sk-...
```
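Failing fast when the key is missing saves a confusing error on the first request. A minimal sketch, assuming a hypothetical `require_env` helper that is not part of this article's code; `app.py` could call it right after `load_dotenv()`:

```python
import os

def require_env(name: str) -> str:
    """Return an environment variable's value, or raise a clear error if it is unset or empty."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"{name} is not set; add it to your environment or .env file")
    return value

# Usage (hypothetical, after load_dotenv() in app.py):
# api_key = require_env("OPENAI_API_KEY")
```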

backend/tools_fs.py

A small wrapper that exposes filesystem tools confined to a sandbox folder.

```python
# tools_fs.py
from pathlib import Path

from langchain_community.tools.file_management import (
    CopyFileTool,
    FileSearchTool,
    ListDirectoryTool,
    MoveFileTool,
    ReadFileTool,
    WriteFileTool,
)


def make_filesystem_tools(root_dir: Path):
    root_dir.mkdir(parents=True, exist_ok=True)
    # Pin every tool to the sandbox so paths outside root_dir are rejected.
    # root_dir is a str field on these tools, so pass str(...) rather than a Path.
    root = str(root_dir)
    read = ReadFileTool(root_dir=root)
    write = WriteFileTool(root_dir=root)
    ls = ListDirectoryTool(root_dir=root)
    cp = CopyFileTool(root_dir=root)
    mv = MoveFileTool(root_dir=root)
    search = FileSearchTool(root_dir=root)
    return [read, write, ls, cp, mv, search]
```
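Pinning `root_dir` is what keeps the agent inside `data/`. LangChain's file tools do their own path validation; the stdlib sketch below only illustrates the kind of check involved, using a hypothetical `resolve_in_sandbox` helper (not LangChain's code, and it needs Python 3.9+ for `Path.is_relative_to`):

```python
from pathlib import Path

def resolve_in_sandbox(root: Path, user_path: str) -> Path:
    """Resolve user_path against root and refuse anything that ends up outside root."""
    root = root.resolve()
    # An absolute user_path replaces root entirely, and resolve() collapses ".."
    # and follows existing symlinks, so escapes show up in the final path.
    candidate = (root / user_path).resolve()
    if not candidate.is_relative_to(root):
        raise PermissionError(f"{user_path!r} is outside the sandbox {root}")
    return candidate
```

Resolving first and comparing afterwards is the important design choice: checking the raw string for `..` misses absolute paths and symlinks.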

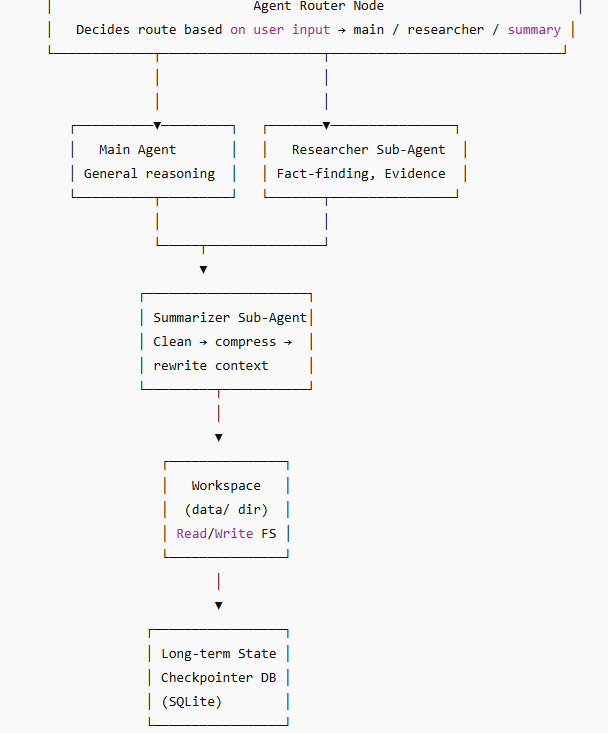

backend/agent_graph.py

This builds a LangGraph with:

- a main ReAct-style agent
- a keyword-based planner node that picks a route and attaches a simple plan
- two sub-agents (Researcher and Summarizer, as examples)
- filesystem tools
- summarization when the history gets long
- a SQLite checkpointer that persists graph state per thread_id
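At its core, a per-thread checkpointer saves the latest state under a key and loads it on the next request. As a rough stdlib illustration only: the `ThreadStateStore` class, table name and JSON encoding are assumptions, and LangGraph's `SqliteSaver` uses its own schema and also keeps checkpoint history.

```python
import json
import sqlite3
from typing import Optional

class ThreadStateStore:
    """Hypothetical minimal store: keeps only the latest JSON state per thread_id."""

    def __init__(self, path: str = ":memory:") -> None:
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS thread_state "
            "(thread_id TEXT PRIMARY KEY, state TEXT NOT NULL)"
        )

    def save(self, thread_id: str, state: dict) -> None:
        # INSERT OR REPLACE overwrites the previous state for this thread;
        # using the connection as a context manager commits the transaction.
        with self.conn:
            self.conn.execute(
                "INSERT OR REPLACE INTO thread_state (thread_id, state) VALUES (?, ?)",
                (thread_id, json.dumps(state)),
            )

    def load(self, thread_id: str) -> Optional[dict]:
        row = self.conn.execute(
            "SELECT state FROM thread_state WHERE thread_id = ?", (thread_id,)
        ).fetchone()
        return json.loads(row[0]) if row else None

store = ThreadStateStore()
store.save("demo-thread-1", {"input": "hi", "route": "main", "output": "hello"})
print(store.load("demo-thread-1")["route"])  # → main
print(store.load("other-thread"))            # → None
```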

```python
# agent_graph.py
from __future__ import annotations

import os
import sqlite3
from dataclasses import dataclass
from pathlib import Path
from typing import List, Literal, Optional, TypedDict

from langchain.agents import AgentExecutor, create_tool_calling_agent
from langchain.memory import ConversationBufferMemory
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_core.tools import BaseTool
from langchain_openai import ChatOpenAI
from langgraph.checkpoint.sqlite import SqliteSaver
from langgraph.graph import END, StateGraph

from tools_fs import make_filesystem_tools

# ---------- Config ----------
WORKDIR = Path(__file__).parent / "data"
DBPATH = Path(__file__).parent / "deepagent.sqlite"
MODEL = os.environ.get("DEEPAGENT_MODEL", "gpt-4o-mini")  # choose any OpenAI chat model
TEMPERATURE = float(os.environ.get("DEEPAGENT_TEMP", "0.2"))


# ---------- Shared LLM + agent builder ----------
def llm_factory() -> ChatOpenAI:
    return ChatOpenAI(model=MODEL, temperature=TEMPERATURE)


def build_executor(system: str, tools: List[BaseTool]) -> AgentExecutor:
    """System prompt + chat history + tools, with a per-executor buffer memory.

    create_tool_calling_agent is used instead of create_react_agent: the ReAct
    prompt would also need {tools}/{tool_names}, and its text parser passes a
    single string to tools, which breaks multi-argument tools such as write_file.
    The system text lives in the prompt; ChatOpenAI.bind(messages=...) does not
    add a system message.
    """
    prompt = ChatPromptTemplate.from_messages([
        ("system", system),
        MessagesPlaceholder("chat_history"),
        ("human", "{input}"),
        MessagesPlaceholder("agent_scratchpad"),
    ])
    agent = create_tool_calling_agent(llm_factory(), tools, prompt)
    memory = ConversationBufferMemory(return_messages=True, memory_key="chat_history")
    return AgentExecutor(agent=agent, tools=tools, memory=memory, verbose=False)


# ---------- Sub-agents ----------
def build_research_agent(tools: List[BaseTool]) -> AgentExecutor:
    # You can add your web/search tools here if you have keys.
    return build_executor(
        "You are a Researcher. Find facts, structure notes, and write findings to files "
        "when helpful. Use tools when needed. Think step-by-step, then produce concise "
        "bullet findings.",
        tools,
    )


def build_summarizer_agent(tools: List[BaseTool]) -> AgentExecutor:
    return build_executor(
        "You are a Summarizer. Summarize content precisely. Prefer writing summaries to "
        "files if requested. Summarize clearly with headings and bullets.",
        tools,
    )


# ---------- Main agent ----------
def build_main_agent(tools: List[BaseTool]) -> AgentExecutor:
    return build_executor(
        "You are a Helpful General Agent. You can plan, call sub-agents, and use filesystem "
        "tools. Prefer decomposing tasks into steps. Keep responses concise unless asked. "
        "If the task is large, suggest a plan. Use tools to read/write files in the "
        "project 'data/' folder.",
        tools,
    )


# ---------- Graph State ----------
class GraphState(TypedDict):
    thread_id: str
    input: str
    plan: Optional[List[str]]
    route: Literal["main", "researcher", "summarizer"]
    output: Optional[str]
    history_tokens: int  # reserved; not used yet


# ---------- Router / Planner ----------
def plan_node(state: GraphState) -> GraphState:
    """Light planner: if the user asks to 'research' or 'summarize', choose a sub-agent; else main."""
    text = state["input"].lower()
    route: Literal["main", "researcher", "summarizer"] = "main"
    plan: Optional[List[str]] = None
    if any(k in text for k in ["research", "investigate", "find sources", "compare"]):
        route = "researcher"
        plan = ["Collect facts", "Organize notes", "Save a /data/*.md file"]
    elif any(k in text for k in ["summarize", "tl;dr", "make summary"]):
        route = "summarizer"
        plan = ["Read target text or files", "Write short structured summary"]
    return {**state, "route": route, "plan": plan}


# ---------- Agent runners ----------
@dataclass
class AgentsBundle:
    main: AgentExecutor
    researcher: AgentExecutor
    summarizer: AgentExecutor


def build_bundle() -> AgentsBundle:
    fs_tools = make_filesystem_tools(WORKDIR)
    # (You can append more tools here, e.g., math, web, etc.)
    return AgentsBundle(
        main=build_main_agent(fs_tools),
        researcher=build_research_agent(fs_tools),
        summarizer=build_summarizer_agent(fs_tools),
    )


def run_agent(executor: AgentExecutor, user_input: str) -> str:
    result = executor.invoke({"input": user_input})
    return result["output"] if isinstance(result, dict) else str(result)


# ---------- Summarization gate (naive) ----------
def maybe_summarize_memory(agent: AgentExecutor, max_messages: int = 20) -> None:
    """When memory gets long, compress the whole history into one summary message."""
    msgs = agent.memory.chat_memory.messages
    if len(msgs) > max_messages:  # very simple threshold
        text = "\n".join(f"{m.type}: {m.content}" for m in msgs)
        # Call the LLM directly; a tool-less agent is not needed for a plain summary.
        summary = llm_factory().invoke(f"Summarize the following conversation:\n{text}").content
        agent.memory.clear()
        agent.memory.chat_memory.add_ai_message(f"[Summary of previous context]\n{summary}")


# ---------- Build graph ----------
def build_graph():
    bundle = build_bundle()
    # SqliteSaver expects a sqlite3 connection; check_same_thread=False because
    # Flask's dev server handles requests on worker threads.
    conn = sqlite3.connect(str(DBPATH), check_same_thread=False)
    cp = SqliteSaver(conn)  # persists GraphState per thread_id
    # Note: each executor's ConversationBufferMemory lives in this process and is
    # shared by all thread_ids; only GraphState is checkpointed per thread.
    graph = StateGraph(GraphState)

    def node_plan(state: GraphState):
        return plan_node(state)

    def node_main(state: GraphState):
        out = run_agent(bundle.main, state["input"])
        maybe_summarize_memory(bundle.main)
        return {**state, "output": out}

    def node_researcher(state: GraphState):
        out = run_agent(bundle.researcher, state["input"])
        maybe_summarize_memory(bundle.researcher)
        return {**state, "output": out}

    def node_summarizer(state: GraphState):
        out = run_agent(bundle.summarizer, state["input"])
        maybe_summarize_memory(bundle.summarizer)
        return {**state, "output": out}

    graph.add_node("plan", node_plan)
    graph.add_node("main", node_main)
    graph.add_node("researcher", node_researcher)
    graph.add_node("summarizer", node_summarizer)

    graph.set_entry_point("plan")
    graph.add_conditional_edges(
        "plan",
        lambda s: s["route"],
        {
            "main": "main",
            "researcher": "researcher",
            "summarizer": "summarizer",
        },
    )
    graph.add_edge("main", END)
    graph.add_edge("researcher", END)
    graph.add_edge("summarizer", END)

    app = graph.compile(checkpointer=cp)
    return app
```
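The summarization gate in `maybe_summarize_memory` is easy to reason about in isolation. Below is a framework-free sketch of the same idea, assuming messages are plain `(role, text)` tuples and a hypothetical `summarize` callback stands in for the LLM call; `compress_history` and `fake_summarize` are illustrative names, not part of the article's code.

```python
from typing import Callable, List, Tuple

Message = Tuple[str, str]  # (role, text)

def compress_history(
    messages: List[Message],
    summarize: Callable[[str], str],
    max_messages: int = 20,
) -> List[Message]:
    """If the history exceeds max_messages, replace all of it with one summary message."""
    if len(messages) <= max_messages:
        return messages
    transcript = "\n".join(f"{role}: {text}" for role, text in messages)
    return [("ai", "[Summary of previous context]\n" + summarize(transcript))]

def fake_summarize(text: str) -> str:
    # Stand-in for an LLM call: just reports how many lines it was given.
    return f"{len(text.splitlines())} lines"

history = [("human", f"q{i}") for i in range(21)]
print(compress_history(history, fake_summarize))
# → [('ai', '[Summary of previous context]\n21 lines')]
```

Summarizing the whole history before clearing it, rather than only the most recent messages, avoids silently dropping older turns.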

backend/app.py

REST API with two endpoints:

- `POST /api/agent/run` → run one step for a thread_id
- `GET /api/fs/list` → list files created by the tools (optional)

```python
# app.py
import os
from pathlib import Path

from dotenv import load_dotenv
from flask import Flask, jsonify, request
from flask_cors import CORS

# Load .env before importing agent_graph: it reads DEEPAGENT_MODEL / DEEPAGENT_TEMP at import time.
load_dotenv()

from agent_graph import WORKDIR, build_graph  # noqa: E402

app = Flask(__name__)
CORS(app, supports_credentials=True)
graph = build_graph()


@app.route("/api/agent/run", methods=["POST"])
def run():
    # get_json(silent=True) returns None instead of raising on a missing or non-JSON body.
    data = request.get_json(silent=True) or {}
    thread_id = str(data.get("thread_id") or "default")
    user_input = str(data.get("input") or "").strip()
    if not user_input:
        return jsonify({"error": "input required"}), 400

    # Run graph per-thread (checkpointed):
    result = graph.invoke(
        {"thread_id": thread_id, "input": user_input, "route": "main",
         "plan": None, "output": None, "history_tokens": 0},
        config={"configurable": {"thread_id": thread_id}},
    )
    return jsonify({
        "thread_id": thread_id,
        "plan": result.get("plan"),
        "route": result.get("route"),
        "output": result.get("output"),
    })


@app.route("/api/fs/list", methods=["GET"])
def list_files():
    root = WORKDIR
    # as_posix() keeps forward slashes on Windows as well
    entries = sorted(p.relative_to(root).as_posix() for p in root.glob("**/*") if p.is_file())
    return jsonify({"root": str(root), "files": entries})


if __name__ == "__main__":
    # Ensure sandbox exists
    Path(WORKDIR).mkdir(parents=True, exist_ok=True)
    app.run(host="0.0.0.0", port=int(os.environ.get("PORT", 5001)))
```

3) Frontend (React)

frontend/package.json

```json
{
  "name": "deepagent-react",
  "private": true,
  "version": "0.0.1",
  "type": "module",
  "scripts": {
    "dev": "vite",
    "build": "vite build",
    "preview": "vite preview"
  },
  "dependencies": {
    "react": "^18.3.1",
    "react-dom": "^18.3.1"
  },
  "devDependencies": {
    "@vitejs/plugin-react": "^4.3.0",
    "vite": "^5.4.0"
  }
}
```

Create a basic Vite app if you like; this package.json matches that.

frontend/src/api.js

```js
const BASE = import.meta.env.VITE_BACKEND || "http://localhost:5001";

export async function runAgent({ threadId, input }) {
  const res = await fetch(`${BASE}/api/agent/run`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ thread_id: threadId, input })
  });
  if (!res.ok) throw new Error(await res.text());
  return res.json();
}

export async function listFiles() {
  const res = await fetch(`${BASE}/api/fs/list`);
  if (!res.ok) throw new Error(await res.text());
  return res.json();
}
```

frontend/src/App.jsx

A simple chat UI that shows the plan and route, lets you pick a thread ID, and lists workspace files.

```jsx
import { useEffect, useRef, useState } from "react";
import { runAgent, listFiles } from "./api";

export default function App() {
  const [threadId, setThreadId] = useState("demo-thread-1");
  const [input, setInput] = useState("");
  const [msgs, setMsgs] = useState([]);
  const [files, setFiles] = useState([]);
  const boxRef = useRef(null);

  async function send() {
    if (!input.trim()) return;
    const you = { role: "user", text: input };
    setMsgs((m) => [...m, you]);
    setInput("");
    try {
      const res = await runAgent({ threadId, input: you.text });
      const bot = {
        role: "agent",
        text: res.output || "",
        meta: { route: res.route, plan: res.plan }
      };
      setMsgs((m) => [...m, bot]);
      refreshFiles();
      setTimeout(() => boxRef.current?.scrollTo(0, boxRef.current.scrollHeight), 50);
    } catch (e) {
      setMsgs((m) => [...m, { role: "error", text: String(e) }]);
    }
  }

  async function refreshFiles() {
    try {
      const r = await listFiles();
      setFiles(r.files || []);
    } catch {}
  }

  useEffect(() => { refreshFiles(); }, []);

  return (
    <div style={{ maxWidth: 920, margin: "24px auto", fontFamily: "Inter, system-ui, Arial" }}>
      <h2 style={{ marginBottom: 8 }}>Deep Agent (React + Flask)</h2>

      <div style={{ display: "flex", gap: 8, alignItems: "center", marginBottom: 12 }}>
        <label>Thread:</label>
        <input
          value={threadId}
          onChange={(e) => setThreadId(e.target.value)}
          style={{ padding: 8, borderRadius: 8, border: "1px solid #ddd", width: 260 }}
        />
        <button onClick={() => { setMsgs([]); }} style={btn}>Reset Chat</button>
        <button onClick={refreshFiles} style={btn}>Refresh Files</button>
      </div>

      <div ref={boxRef} style={chatBox}>
        {msgs.map((m, i) => (
          <div key={i} style={{ marginBottom: 12 }}>
            <div style={{ fontSize: 12, color: "#667", marginBottom: 4 }}>
              {m.role.toUpperCase()}
              {m.meta?.route ? ` · route: ${m.meta.route}` : ""}
            </div>
            {m.meta?.plan?.length ? (
              <div style={planCard}>
                <div style={{ fontWeight: 600, marginBottom: 6 }}>Plan</div>
                <ul style={{ margin: 0, paddingLeft: 18 }}>
                  {m.meta.plan.map((p, idx) => <li key={idx}>{p}</li>)}
                </ul>
              </div>
            ) : null}
            <div style={bubble(m.role)}>{m.text}</div>
          </div>
        ))}
      </div>

      <div style={{ display: "flex", gap: 8, marginTop: 10 }}>
        <input
          placeholder="Ask: research X, summarize Y, or work with files…"
          value={input}
          onChange={(e) => setInput(e.target.value)}
          onKeyDown={(e) => e.key === "Enter" && send()}
          style={{ flex: 1, padding: 12, borderRadius: 10, border: "1px solid #ddd" }}
        />
        <button onClick={send} style={btnPrimary}>Send</button>
      </div>

      <div style={{ marginTop: 20 }}>
        <div style={{ fontWeight: 700, marginBottom: 6 }}>Workspace files (data/):</div>
        {files.length === 0 ? <div style={{ color: "#999" }}>No files yet.</div> :
          <ul>{files.map((f) => <li key={f}>{f}</li>)}</ul>}
      </div>
    </div>
  );
}

const chatBox = {
  height: 380,
  overflow: "auto",
  border: "1px solid #eee",
  borderRadius: 12,
  padding: 14,
  background: "#fafbff"
};

const btn = {
  padding: "8px 12px",
  borderRadius: 10,
  border: "1px solid #ddd",
  background: "#fff",
  cursor: "pointer"
};

const btnPrimary = { ...btn, background: "#4f6df6", color: "#fff", border: "1px solid #4f6df6" };

const bubble = (role) => ({
  background: role === "user" ? "#fff" : role === "error" ? "#ffecec" : "#eef2ff",
  border: role === "user" ? "1px solid #e5e7eb" : "1px solid #dfe3ff",
  padding: 12,
  borderRadius: 12,
  whiteSpace: "pre-wrap"
});

const planCard = {
  border: "1px dashed #c9d0ff",
  background: "#f6f8ff",
  padding: 10,
  borderRadius: 10,
  marginBottom: 8
};
```

frontend/src/main.jsx

```jsx
import React from "react";
import { createRoot } from "react-dom/client";
import App from "./App.jsx";

createRoot(document.getElementById("root")).render(<App />);
```

Your index.html just needs a `<div id="root"></div>`, like a normal Vite app.

4) Run it

Backend

```bash
cd backend
python -m venv .venv && source .venv/bin/activate   # (Windows: .venv\Scripts\activate)
pip install -r requirements.txt
export OPENAI_API_KEY=sk-...   # or put in .env
python app.py
# -> serves on http://localhost:5001
```
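With the backend running, you can smoke-test the run endpoint without the frontend. This is a stdlib-only sketch: `build_run_request` and `run_once` are hypothetical helpers, and the base URL assumes the default port 5001. The request body and the reply keys match what `app.py` expects and returns.

```python
import json
import urllib.request

BASE = "http://localhost:5001"

def build_run_request(thread_id: str, text: str, base: str = BASE) -> urllib.request.Request:
    """Build the same POST /api/agent/run request that the React client sends."""
    body = json.dumps({"thread_id": thread_id, "input": text}).encode("utf-8")
    return urllib.request.Request(
        f"{base}/api/agent/run",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def run_once(thread_id: str, text: str) -> dict:
    """Send one message and return the JSON reply (thread_id, plan, route, output)."""
    with urllib.request.urlopen(build_run_request(thread_id, text), timeout=120) as resp:
        return json.load(resp)

# Usage, with the backend running:
# reply = run_once("demo-thread-1", "Summarize what a Deep Agent is")
# print(reply["route"], reply["plan"])
# print(reply["output"])
```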

Frontend

```bash
cd ../frontend
npm i
npm run dev
# Set VITE_BACKEND=http://localhost:5001 if needed
```

Open the React app, choose a thread ID, and chat.
