How to Search Input Element By ID

How to send keys to search input elements

How to submit button on icon using id By XPATH after send keys

How to select checkbox using id By XPATH

How to scrape the data using CLASS_NAME

How to scrape the data using span class BY XPATH

How to select checkbox by selecting icon tag using xpath and full xpath

How to select checkbox by selecting span tag using xpath

How to select span element of li class

How to take or select span aria label value using get-attribute

How to take or select first three character

Learning Point

How to apply list slicing in for loop to get range of records like 10,100

:Go to webpage https://www.amazon.in/ Enter “Laptop” in the search field and then click the search icon. Then

set CPU Type filter to “Intel Core i7” as shown in the below image:

Step 1: install selenium using python

pip install --upgrade selenium

Step 2: Import Required Libraries

import pandas as pd

from selenium import webdriver

import warnings

warnings.filterwarnings('ignore')

from selenium.webdriver.common.by import By

import time

Step 3: Start a WebDriver Instance

driver=webdriver.Chrome()

Step 4: Navigate to a Webpage

Navigate to the webpage where you want to interact with the search input bar:

driver.get(' https://www.amazon.in/')

Step 5: Find the Search Input Element

input_field = driver.find_element(By.ID,"twotabsearchtextbox")

Step 6: Find the Search Input Element for sunglasses by sending S Keys to the Input Element and then submit button

input_field.send_keys("Laptop")

input_submit =driver.find_element(By.XPATH, "//*[@id='nav-search-submit-button']").click()

Step 7: Select checkbox By ID then click

input_submit = driver.find_element(By.XPATH, "//*[@id=\"p_n_feature_thirteen_browse-bin/12598163031\"]/span/a/span").click()

===OR===

input_submit = driver.find_element(By.XPATH, "//*[@id=\"p_n_feature_thirteen_browse-bin/12598163031\"]/span/a/span").click()

Step 8: Scrape the Results

title=[]

price=[]

rating=[]

Step 9: Scrape the all title

all_title=driver.find_elements(By.CLASS_NAME,"a-size-medium.a-color-base.a-text-normal")

all_title

for alltitles in all_title[0:10]:

title.append(alltitles.text)

title

output

Step 10: Scrape the all price

all_price=driver.find_elements(By.CLASS_NAME,"a-price-whole")

all_price

for allprice in all_price[0:10]:

price.append(allprice.text)

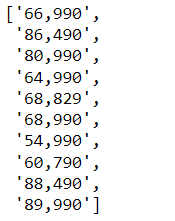

price

output

Step 10: Scrape the all ratings

all_rating= driver.find_elements(By.XPATH, '//span[@class="a-size-base puis-bold-weight-text"]')

all_rating

ratings=[]

for rating_element in all_rating[0:10]:

ratings.append(rating_element.text)

ratings

output

=====================================

: In this question you have to scrape data using the filters available on the webpage as shown below: You have to use the location and salary filter. You have to scrape data for “Data Scientist” designation for first 10 job results. You have to scrape the job-title, job-location, company_name, experience_required. The location filter to be used is “Delhi/NCR” The salary filter to be used is “3-6” lakhs The task will be done as shown in the below steps:

first get the webpage https://www.naukri.com/

Enter “Data Scientist” in “Skill,Designations,Companies” field .

Then click the search button.

Then apply the location filter and salary filter by checking the respective boxes

Then scrape the data for the first 10 jobs results you get.

Finally create a dataframe of the scraped data.

# Activating the chrome browser

driver=webdriver.Chrome(r"C:\Users\HP\Desktop\Fliprobo notes\chromedriver.exe")

# Opening the homepage-

driver.get("https://www.naukri.com/")

How to select checkbox by selecting icon tag using xpath and full xpath

How to select checkbox by selecting span tag using xpath

# Entering Designation in search box-

designation=driver.find_element(By.CLASS_NAME,"suggestor-input")

designation.send_keys('Data Scientist')

#Clicking on Search Button-

search=driver.find_element(By.CLASS_NAME,"qsbSubmit")

search.click()

# Setting the location filter-

location_delhi=driver.find_element(By.XPATH,"/html/body/div[1]/div[3]/div[2]/section[1]/div[2]/div[5]/div[2]/div[3]/label/i")

location_delhi.click()

===or==========

//*[@id="search-result-container"]/div[1]/div[1]/div/div/div[2]/div[5]/div[2]/div[3]/label/i

# Setting the Salary filter-

salary_filter=driver.find_element(By.XPATH,"/html/body/div[1]/div[3]/div[2]/section[1]/div[2]/div[6]/div[2]/div[2]/label/p/span[1]")

salary_filter.click()

#Creating Empty List for different attributes-

job_title=[]

job_location=[]

company_name=[]

exp_Reqd=[]

# Scraping Data for different Attributes from different pages-

start=0

end=1

for page in range(start,end):

title=driver.find_elements(By.XPATH,"//a[@class='title fw500 ellipsis']")

for i in title[0:10]:

job_title.append(i.text)

location=driver.find_elements(By.XPATH,"//li[@class='fleft grey-text br2 placeHolderLi location']//span")

for i in location[0:10]:

job_location.append(i.text)

company=driver.find_elements(By.XPATH,"//a[@class='subTitle ellipsis fleft']")

for i in company[0:10]:

company_name.append(i.text)

experience=driver.find_elements(By.XPATH,"//li[@class='fleft grey-text br2 placeHolderLi experience']")

for i in experience[0:10]:

exp_Reqd.append(i.text)

next_button=driver.find_elements(By.XPATH,"//a[@class='_1LKTO3']")

#checking length for all attributes-

print(len(job_title),len(job_location),len(company_name),len(exp_Reqd))

# Creating Dataframe-

df=pd.DataFrame({'Job_title':job_title,'Job_location':job_location,'Company_name':company_name,'Experience':exp_Reqd})

df

========================================================

Q7: Go to webpage https://www.amazon.in/Enter “Laptop” in the search field and then click the search icon.Then set CPU Type filter to “Intel Core i7”

After setting the filters scrape first 10 laptops data.You have to scrape 3 attributes for each laptop:

- title

- Ratings

- Price# 8)Amazon Laptop

# Activating the chrome browser

driver=webdriver.Chrome()

# Opening the homepage-

driver.get("https://www.amazon.in/")

# Entering laptop in search box-

laptop=driver.find_element(By.XPATH,"//div[@class='nav-search-field ']//input")

laptop.send_keys('Laptop')

#Clicking on Search Button-

search=driver.find_element(By.XPATH,"/html/body/div[1]/header/div/div[1]/div[2]/div/form/div[3]/div/span/input")

search.click()

# code to filter i7 CPu-

cpu_filter1=driver.find_element(By.XPATH,"/html/body/div[1]/div[2]/div[1]/div[2]/div/div[3]/span/div[1]/div/div/div[5]/ul[6]/li[12]/span/a/div/label/i")

cpu_filter1.click()

#Creating Empty List for different attributes-

Title=[]

price=[]

Ratings=[]

Rating=[]

start=0

end=1

for page in range(start,end):

title=driver.find_elements(By.XPATH,"//h2[@class='a-size-mini a-spacing-none a-color-base s-line-clamp-2']")

for i in title[0:10]:

Title.append(i.text)

prices=driver.find_elements(By.XPATH,'//span[@class="a-price-whole"]')

for i in prices[0:10]:

price.append(i.text)

How to take or select span aria label value using get-attribute

How to take or select first three character

titles_rat=driver.find_elements(By.XPATH,"//div[@class='a-row a-size-small']/span")

for i in titles_rat:

Rating.append(i.get_attribute("aria-label"))

rating=[]

for i in range(0,len(Rating))[0:20]:

if i == 0 or i/2 == i//2:

rating.append(Rating[i][0:3])

Top comments (0)